
Atguigu Big Data Project "Online Education Real-Time Data Warehouse": Notes 002


Chapter 06: Data Warehouse Environment Preparation

P006

P007

P008

http://node001:16010/master-status

[atguigu@node001 ~]$ start-hbase.sh

- [atguigu@node001 ~]$ start-hbase.sh

- SLF4J: Class path contains multiple SLF4J bindings.

- SLF4J: Found binding in [jar:file:/opt/module/hbase/hbase-2.0.5/lib/slf4j-log4j12-1.7.25.jar!/org/slf4j/impl/StaticLoggerBinder.class]

- SLF4J: Found binding in [jar:file:/opt/module/hadoop/hadoop-3.1.3/share/hadoop/common/lib/slf4j-log4j12-1.7.25.jar!/org/slf4j/impl/StaticLoggerBinder.class]

- SLF4J: See http://www.slf4j.org/codes.html#multiple_bindings for an explanation.

- SLF4J: Actual binding is of type [org.slf4j.impl.Log4jLoggerFactory]

- running master, logging to /opt/module/hbase/hbase-2.0.5/logs/hbase-atguigu-master-node001.out

- node002: running regionserver, logging to /opt/module/hbase/hbase-2.0.5/bin/../logs/hbase-atguigu-regionserver-node002.out

- node003: running regionserver, logging to /opt/module/hbase/hbase-2.0.5/bin/../logs/hbase-atguigu-regionserver-node003.out

- node001: running regionserver, logging to /opt/module/hbase/hbase-2.0.5/logs/hbase-atguigu-regionserver-node001.out

- [atguigu@node001 ~]$ jpsall

- ================ node001 ================

- 3041 DataNode

- 6579 HMaster

- 2869 NameNode

- 3447 NodeManager

- 6778 HRegionServer

- 6940 Jps

- 3646 JobHistoryServer

- 3806 QuorumPeerMain

- ================ node002 ================

- 1746 DataNode

- 3289 HRegionServer

- 2074 NodeManager

- 2444 QuorumPeerMain

- 1949 ResourceManager

- 3471 Jps

- ================ node003 ================

- 2240 QuorumPeerMain

- 1938 SecondaryNameNode

- 3138 HRegionServer

- 1842 DataNode

- 2070 NodeManager

- 3338 Jps

- [atguigu@node001 ~]$
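The jpsall listing above can be checked mechanically instead of by eye. The following is a minimal sketch and not part of the original notes: missing_daemons is a hypothetical helper name, and it assumes jps-style input ("PID name" per line) on standard input.

```shell
#!/usr/bin/env bash
# missing_daemons: read jps-style lines ("<pid> <name>") from stdin and
# print every expected daemon name (given as arguments) that is absent.
# Returns 0 when nothing is missing, 1 otherwise.
missing_daemons() {
  local listing name status=0
  listing="$(cat)"
  for name in "$@"; do
    # The name must match the whole second field of some line.
    if ! printf '%s\n' "$listing" |
         awk -v n="$name" '$2 == n { found = 1 } END { exit !found }'; then
      printf '%s\n' "$name"
      status=1
    fi
  done
  return "$status"
}

# Example (on node001, once HBase is up):
#   jps | missing_daemons HMaster HRegionServer QuorumPeerMain
```

An empty result with exit status 0 means every listed daemon was found on that node.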

P009

[atguigu@node001 conf]$ cd /opt/module/hbase/apache-phoenix-5.0.0-HBase-2.0-bin

[atguigu@node001 apache-phoenix-5.0.0-HBase-2.0-bin]$ bin/sqlline.py node001,node002,node003:2181

[atguigu@node001 ~]$ start-hbase.sh

[atguigu@node001 ~]$ /opt/module/hbase/apache-phoenix-5.0.0-HBase-2.0-bin/bin/sqlline.py node001,node002,node003:2181

- [atguigu@node001 apache-phoenix-5.0.0-HBase-2.0-bin]$ start-hbase.sh

- SLF4J: Class path contains multiple SLF4J bindings.

- SLF4J: Found binding in [jar:file:/opt/module/hbase/hbase-2.0.5/lib/slf4j-log4j12-1.7.25.jar!/org/slf4j/impl/StaticLoggerBinder.class]

- SLF4J: Found binding in [jar:file:/opt/module/hadoop/hadoop-3.1.3/share/hadoop/common/lib/slf4j-log4j12-1.7.25.jar!/org/slf4j/impl/StaticLoggerBinder.class]

- SLF4J: See http://www.slf4j.org/codes.html#multiple_bindings for an explanation.

- SLF4J: Actual binding is of type [org.slf4j.impl.Log4jLoggerFactory]

- running master, logging to /opt/module/hbase/hbase-2.0.5/logs/hbase-atguigu-master-node001.out

- node002: running regionserver, logging to /opt/module/hbase/hbase-2.0.5/logs/hbase-atguigu-regionserver-node002.out

- node003: running regionserver, logging to /opt/module/hbase/hbase-2.0.5/logs/hbase-atguigu-regionserver-node003.out

- node001: running regionserver, logging to /opt/module/hbase/hbase-2.0.5/logs/hbase-atguigu-regionserver-node001.out

- node002: running master, logging to /opt/module/hbase/hbase-2.0.5/logs/hbase-atguigu-master-node002.out

- [atguigu@node001 apache-phoenix-5.0.0-HBase-2.0-bin]$ bin/sqlline.py node001,node002,node003:2181

- Setting property: [incremental, false]

- Setting property: [isolation, TRANSACTION_READ_COMMITTED]

- issuing: !connect jdbc:phoenix:node001,node002,node003:2181 none none org.apache.phoenix.jdbc.PhoenixDriver

- Connecting to jdbc:phoenix:node001,node002,node003:2181

- SLF4J: Class path contains multiple SLF4J bindings.

- SLF4J: Found binding in [jar:file:/opt/module/hbase/apache-phoenix-5.0.0-HBase-2.0-bin/phoenix-5.0.0-HBase-2.0-client.jar!/org/slf4j/impl/StaticLoggerBinder.class]

- SLF4J: Found binding in [jar:file:/opt/module/hadoop/hadoop-3.1.3/share/hadoop/common/lib/slf4j-log4j12-1.7.25.jar!/org/slf4j/impl/StaticLoggerBinder.class]

- SLF4J: See http://www.slf4j.org/codes.html#multiple_bindings for an explanation.

- 23/09/12 10:52:47 WARN util.NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable

- Connected to: Phoenix (version 5.0)

- Driver: PhoenixEmbeddedDriver (version 5.0)

- Autocommit status: true

- Transaction isolation: TRANSACTION_READ_COMMITTED

- Building list of tables and columns for tab-completion (set fastconnect to true to skip)...

- 133/133 (100%) Done

- Done

- sqlline version 1.2.0

- 0: jdbc:phoenix:node001,node002,node003:2181> !table

- +------------+--------------+-------------+---------------+----------+------------+----------------------------+-----------------+--------------+--------------+

- | TABLE_CAT | TABLE_SCHEM | TABLE_NAME | TABLE_TYPE | REMARKS | TYPE_NAME | SELF_REFERENCING_COL_NAME | REF_GENERATION | INDEX_STATE | IMMUTABLE_RO |

- +------------+--------------+-------------+---------------+----------+------------+----------------------------+-----------------+--------------+--------------+

- | | SYSTEM | CATALOG | SYSTEM TABLE | | | | | | false |

- | | SYSTEM | FUNCTION | SYSTEM TABLE | | | | | | false |

- | | SYSTEM | LOG | SYSTEM TABLE | | | | | | true |

- | | SYSTEM | SEQUENCE | SYSTEM TABLE | | | | | | false |

- | | SYSTEM | STATS | SYSTEM TABLE | | | | | | false |

- +------------+--------------+-------------+---------------+----------+------------+----------------------------+-----------------+--------------+--------------+

- 0: jdbc:phoenix:node001,node002,node003:2181>

P010

6.2.2 HBase Environment Setup

P011

6.2.3 Redis Environment Setup

- [atguigu@node001 redis-6.0.8]$ /usr/local/bin/redis-server

- 3651:C 14 Sep 2023 10:23:44.668 # oO0OoO0OoO0Oo Redis is starting oO0OoO0OoO0Oo

- 3651:C 14 Sep 2023 10:23:44.668 # Redis version=6.0.8, bits=64, commit=00000000, modified=0, pid=3651, just started

- 3651:C 14 Sep 2023 10:23:44.668 # Warning: no config file specified, using the default config. In order to specify a config file use /usr/local/bin/redis-server /path/to/redis.conf

- [Redis ASCII-art startup banner] Redis 6.0.8 (00000000/0) 64 bit, running in standalone mode, Port: 6379, PID: 3651, http://redis.io

- 3651:M 14 Sep 2023 10:23:44.671 # WARNING: The TCP backlog setting of 511 cannot be enforced because /proc/sys/net/core/somaxconn is set to the lower value of 128.

- 3651:M 14 Sep 2023 10:23:44.671 # Server initialized

- 3651:M 14 Sep 2023 10:23:44.671 # WARNING overcommit_memory is set to 0! Background save may fail under low memory condition. To fix this issue add 'vm.overcommit_memory = 1' to /etc/sysctl.conf and then reboot or run the command 'sysctl vm.overcommit_memory=1' for this to take effect.

- 3651:M 14 Sep 2023 10:23:44.671 # WARNING you have Transparent Huge Pages (THP) support enabled in your kernel. This will create latency and memory usage issues with Redis. To fix this issue run the command 'echo madvise > /sys/kernel/mm/transparent_hugepage/enabled' as root, and add it to your /etc/rc.local in order to retain the setting after a reboot. Redis must be restarted after THP is disabled (set to 'madvise' or 'never').

- 3651:M 14 Sep 2023 10:23:44.671 * Ready to accept connections

[atguigu@node001 redis-6.0.8]$ /usr/local/bin/redis-server # start in the foreground

[atguigu@node001 ~]$ redis-server ./my_redis.conf # start in the background

[atguigu@node001 ~]$ jps

4563 Jps

[atguigu@node001 ~]$ ps -ef | grep redis

atguigu 4558 1 0 10:29 ? 00:00:00 redis-server 127.0.0.1:6379

atguigu 4579 3578 0 10:29 pts/0 00:00:00 grep --color=auto redis

[atguigu@node001 ~]$ redis-cli

127.0.0.1:6379> quit

[atguigu@node001 ~]$

P012

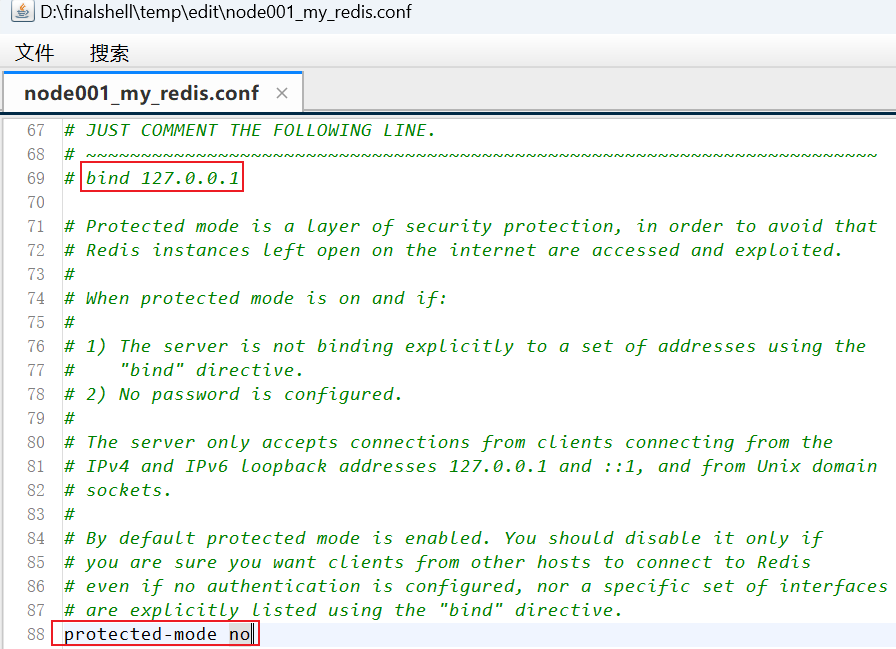

Modify the Redis configuration to allow external access.
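The notes do not show which settings were changed. The ps output above shows the server bound to 127.0.0.1:6379, so a plausible change is to the bind and protected-mode directives. The sketch below is an assumption along those lines, not the author's exact edit: allow_remote_redis is a hypothetical helper, and the config path is passed in rather than guessed.

```shell
#!/usr/bin/env bash
# allow_remote_redis: rewrite a Redis config file so the server listens on
# all interfaces and no longer rejects non-loopback clients.
# Only active (uncommented) "bind" and "protected-mode" lines are changed.
allow_remote_redis() {
  local conf="$1" tmp rc
  tmp="$(mktemp)" || return 1
  sed -e 's/^bind[[:space:]].*/bind 0.0.0.0/' \
      -e 's/^protected-mode[[:space:]].*/protected-mode no/' \
      "$conf" > "$tmp" && cat "$tmp" > "$conf"
  rc=$?
  rm -f "$tmp"
  return "$rc"
}

# Example (the path is an assumption; use the file passed to redis-server):
#   allow_remote_redis ~/my_redis.conf
# Restart Redis afterwards, then check from another node:
#   redis-cli -h node001 ping
```

Turning protected mode off without a password is only reasonable on an isolated practice cluster like this one.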

P013

6.2.4 ClickHouse Environment Setup

Reference (CSDN blog post by 爱喝咖啡的程序猿): "Fixes for 'abrt-cli status' timed out (2021 roundup)"

cd /

-bash: cannot create temp file for here-document: No space left on device

[root@node001 mapper]# docker stop $(docker ps -aq) # stop all Docker containers
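The "No space left on device" error above means a filesystem is full. A quick way to confirm which one is to look at df. The sketch below is an addition to the notes: disk_use_percent is a hypothetical helper, and the 90% threshold is an arbitrary assumption.

```shell
#!/usr/bin/env bash
# disk_use_percent: print the used-space percentage (digits only) of the
# filesystem that holds the given path, parsed from POSIX `df -P` output.
disk_use_percent() {
  df -P "${1:-/}" | awk 'NR == 2 { sub(/%/, "", $5); print $5 }'
}

# Warn when the root filesystem is nearly full.
if [ "$(disk_use_percent /)" -ge 90 ]; then
  echo "root filesystem is almost full" >&2
fi

# Follow-up idea: `du -xh --max-depth=1 / | sort -h` (GNU tools) lists which
# top-level directories hold the space.
```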

- [atguigu@node001 clickhouse]$ sudo systemctl start clickhouse-server

- [atguigu@node001 clickhouse]$ clickhouse-client -m

- ClickHouse client version 20.4.5.36 (official build).

- Connecting to localhost:9000 as user default.

- Code: 209. DB::NetException: Timeout exceeded while reading from socket ([::1]:9000)

- [atguigu@node001 clickhouse]$

- [atguigu@node001 ~]$ sudo systemctl status clickhouse-server

- ● clickhouse-server.service - ClickHouse Server (analytic DBMS for big data)

- Loaded: loaded (/etc/systemd/system/clickhouse-server.service; enabled; vendor preset: disabled)

- Active: active (running) since Tue 2023-09-19 10:18:17 CST; 1min 42s ago

- Main PID: 1033 (clickhouse-serv)

- Tasks: 56

- Memory: 230.4M

- CGroup: /system.slice/clickhouse-server.service

- └─1033 /usr/bin/clickhouse-server --config=/etc/clickhouse-server/config.xml --pid-file=/run/clickhouse-server/clickhouse-server.pid

- Sep 19 10:18:19 node001 clickhouse-server[1033]: Include not found: clickhouse_compression

- Sep 19 10:18:19 node001 clickhouse-server[1033]: Logging trace to /var/log/clickhouse-server/clickhouse-server.log

- Sep 19 10:18:19 node001 clickhouse-server[1033]: Logging errors to /var/log/clickhouse-server/clickhouse-server.err.log

- Sep 19 10:18:20 node001 clickhouse-server[1033]: Processing configuration file '/etc/clickhouse-server/users.xml'.

- Sep 19 10:18:20 node001 clickhouse-server[1033]: Include not found: networks

- Sep 19 10:18:20 node001 clickhouse-server[1033]: Saved preprocessed configuration to '/var/lib/clickhouse//preprocessed_configs/users.xml'.

- Sep 19 10:18:24 node001 clickhouse-server[1033]: Processing configuration file '/etc/clickhouse-server/config.xml'.

- Sep 19 10:18:24 node001 clickhouse-server[1033]: Include not found: clickhouse_remote_servers

- Sep 19 10:18:24 node001 clickhouse-server[1033]: Include not found: clickhouse_compression

- Sep 19 10:18:24 node001 clickhouse-server[1033]: Saved preprocessed configuration to '/var/lib/clickhouse//preprocessed_configs/config.xml'.

- [atguigu@node001 ~]$ clickhouse-client -m

- ClickHouse client version 20.4.5.36 (official build).

- Connecting to localhost:9000 as user default.

- Connected to ClickHouse server version 20.4.5 revision 54434.

- node001 :)

- node001 :) Bye.

- [atguigu@node001 ~]$
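In the log above, the first clickhouse-client attempt timed out (Code: 209) and a later attempt connected. The notes do not say why. One plausible reading is that the server was still initializing. Under that assumption, a small retry wrapper avoids reconnecting by hand. retry is a hypothetical helper name, and the attempt count and delay in the example are arbitrary.

```shell
#!/usr/bin/env bash
# retry: run a command up to <attempts> times, sleeping <delay> seconds
# between failed attempts. Returns 0 on the first success, 1 if all fail.
retry() {
  local attempts="$1" delay="$2" i
  shift 2
  for ((i = 1; i <= attempts; i++)); do
    "$@" && return 0
    if ((i < attempts)); then
      sleep "$delay"
    fi
  done
  return 1
}

# Example: wait until the server answers a trivial query.
#   retry 10 3 clickhouse-client --query "SELECT 1"
```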

P014

6.3 Mock Data Preparation

- zookeeper,

- kafka,

- f1.sh (f1 collects the logs and uploads them to Kafka; uploading to HDFS is not needed),

- data_mocker (generates mock log data): run java -jar edu2021-mock-2022-06-18.jar in /opt/module/data_mocker/01-onlineEducation/

1. User behavior logs (topic_log)

com.github.shyiko.mysql.binlog.network.ServerException: Could not find first log file name in binary log index file

at com.github.shyiko.mysql.binlog.BinaryLogClient.listenForEventPackets(BinaryLogClient.java:926) ~[mysql-binlog-connector-java-0.23.3.jar:0.23.3]

at com.github.shyiko.mysql.binlog.BinaryLogClient.connect(BinaryLogClient.java:595) ~[mysql-binlog-connector-java-0.23.3.jar:0.23.3]

at com.github.shyiko.mysql.binlog.BinaryLogClient$7.run(BinaryLogClient.java:839) ~[mysql-binlog-connector-java-0.23.3.jar:0.23.3]

at java.lang.Thread.run(Thread.java:748) [?:1.8.0_212]

Reference (CSDN blog post by 新一aaaaa): "maxwell: Could not find first log file name in binary log index file"

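Fix: drop the maxwell database in MySQL. Maxwell keeps its saved binlog position there, so after the drop it starts again from the current binlog position.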
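The drop can be done from the shell instead of an interactive MySQL session. This is a sketch under assumptions: drop_maxwell_db is a hypothetical helper, maxwell is Maxwell's default metadata database name, and the MySQL user in the example is a guess. It should be run while Maxwell is stopped.

```shell
#!/usr/bin/env bash
# drop_maxwell_db: send the DROP statement to a MySQL client on stdin.
# The client command line is passed as arguments, so no credentials are
# assumed by the helper itself.
drop_maxwell_db() {
  printf 'DROP DATABASE IF EXISTS maxwell;\n' | "$@"
}

# Example (user name and password prompt are assumptions):
#   drop_maxwell_db mysql -uroot -p
# Then start Maxwell again so it recreates its metadata:
#   maxwell.sh start
```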

[atguigu@node001 ~]$ kafka-console-consumer.sh --bootstrap-server node001:9092 --topic topic_log # start a Kafka consumer on node001 and leave it running

- Connection successful

- Last login: Thu Oct 26 14:12:50 2023 from 192.168.10.1

- [atguigu@node001 ~]$ cd /opt/module/data_mocker/

- [atguigu@node001 data_mocker]$ cd 01-onlineEducation/

- [atguigu@node001 01-onlineEducation]$ java -jar edu2021-mock-2022-06-18.jar

- SLF4J: Class path contains multiple SLF4J bindings.

- SLF4J: Found binding in [jar:file:/opt/module/data_mocker/01-onlineEducation/edu2021-mock-2022-06-18.jar!/BOOT-INF/lib/logback-classic-1.2.3.jar!/org/slf4j/impl/StaticLoggerBinder.class]

- SLF4J: Found binding in [jar:file:/opt/module/data_mocker/01-onlineEducation/edu2021-mock-2022-06-18.jar!/BOOT-INF/lib/slf4j-log4j12-1.7.7.jar!/org/slf4j/impl/StaticLoggerBinder.class]

- SLF4J: See http://www.slf4j.org/codes.html#multiple_bindings for an explanation.

- SLF4J: Actual binding is of type [ch.qos.logback.classic.util.ContextSelectorStaticBinder]

- . ____ _ __ _ _

- /\\ / ___'_ __ _ _(_)_ __ __ _ \ \ \ \

- ( ( )\___ | '_ | '_| | '_ \/ _` | \ \ \ \

- \\/ ___)| |_)| | | | | || (_| | ) ) ) )

- ' |____| .__|_| |_|_| |_\__, | / / / /

- =========|_|==============|___/=/_/_/_/

- :: Spring Boot :: (v2.0.7.RELEASE)

2. Business data (topic_db)

[atguigu@node001 ~]$ kafka-console-consumer.sh --bootstrap-server node001:9092 --topic topic_db

- [atguigu@node001 ~]$ maxwell.sh start # start Maxwell

- [atguigu@node001 ~]$ cd ~/bin

- [atguigu@node001 bin]$ mysql_to_kafka_inc_init.sh all


- Original article: https://blog.csdn.net/weixin_44949135/article/details/132811616