-

postgresql|数据库|centos7下基于postgresql-12的主从复制的pgpool-4.4的部署和使用

前言:

postgresql数据库只用自身的一些配置是无法做到最优的优化的,需要通过一些外置插件(中间件)来提高服务器的整体性能,通俗的说就是数据库仅仅依靠自身是无法达到性能最优的,很多时候需要更改数据库的整体架构,使用一些目前来说成熟的技术,比如,读写分离技术,负载均衡技术,高速缓存技术等等集群方面的技术。

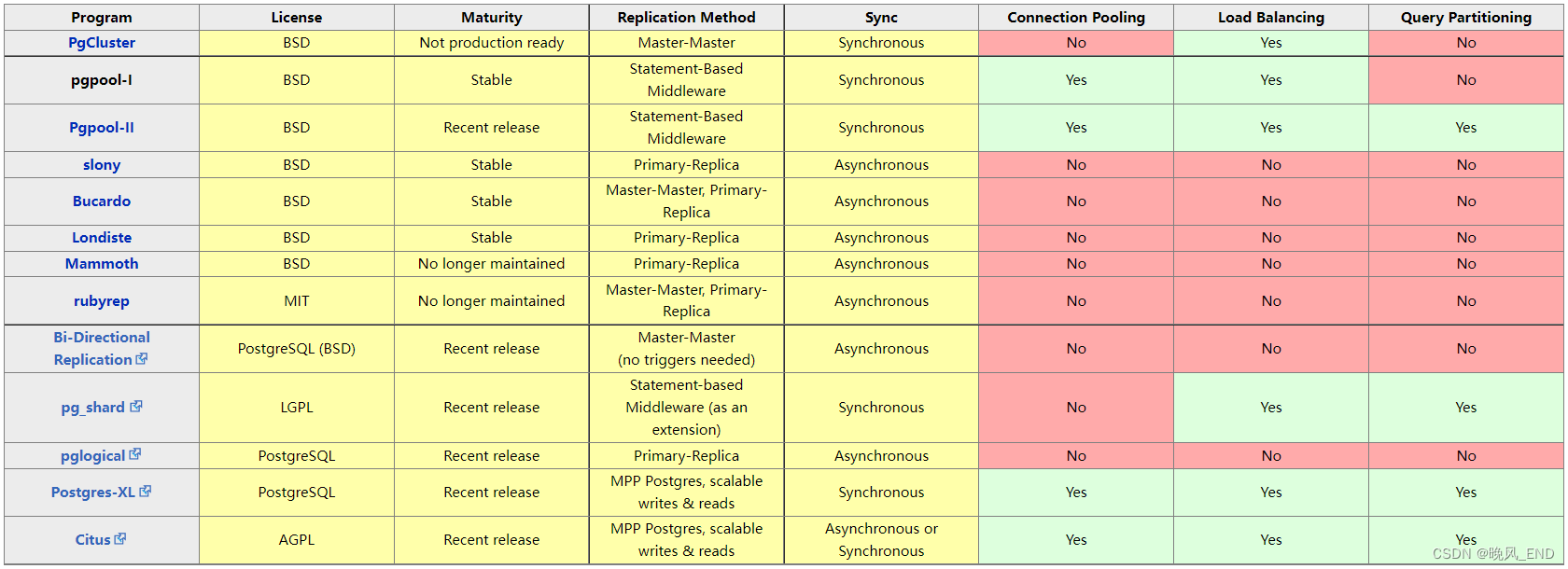

下图是一些较为成熟的集群方案:

从上表可以看到,pgpool是比较全面的一个中间件,什么连接池,负载均衡都有,还有没有写出来的缓存功能,其实使用此中间件的不能拒绝的诱惑就是负载均衡和缓存了,其它的功能倒是没有什么。

本文将就pgpool的负载均衡和高速缓存功能做一个详细的介绍。

一,

pgpool的简单介绍

Pgpool-II是一个在PostgreSQL服务器和PostgreSQL数据库客户端之间工作的中间件。它是根据BSD许可证授权的。它提供以下功能。

连接池

Pgpool-II保存与PostgreSQL服务器的连接,并在具有相同属性(即用户名,数据库,协议版本)的新连接进入时重用它们。它减少了连接开销,并提高了系统的整体吞吐量。

复制

Pgpool-II可以管理多个PostgreSQL服务器。使用复制功能可以在2个或更多物理磁盘上创建实时备份,以便在磁盘发生故障时服务可以继续运行而不会停止服务器。

负载均衡

如果复制了数据库,则在任何服务器上执行SELECT查询都将返回相同的结果。Pgpool-II利用复制功能,通过在多个服务器之间分配SELECT查询来减少每个PostgreSQL服务器的负载,从而提高系统的整体吞吐量。充其量,性能与PostgreSQL服务器的数量成比例地提高。在许多用户同时执行许多查询的情况下,负载平衡最有效。

限制超出连接

PostgreSQL的最大并发连接数有限制,连接在这么多连接后被拒绝。但是,设置最大连接数会增加资源消耗并影响系统性能。pgpool-II对最大连接数也有限制,但额外连接将排队,而不是立即返回错误。

看门狗

Watchdog可以协调多个Pgpool-II,创建一个强大的集群系统,避免单点故障或脑裂。看门狗可以对其他pgpool-II节点执行生命检查,以检测Pgpoll-II的故障。如果活动Pgpool-II发生故障,则可以将备用Pgpool-II提升为活动状态,并接管虚拟IP。

查询缓存

在内存中查询缓存允许保存一对SELECT语句及其结果。如果有相同的SELECT,Pgpool-II将从缓存中返回值。由于不涉及SQL解析或访问PostgreSQL,因此在内存缓存中使用速度非常快。另一方面,在某些情况下,它可能比正常路径慢,因为它增加了存储缓存数据的一些开销。

Pgpool-II讲PostgreSQL的后端和前端协议,并在后端和前端之间传递消息。因此,数据库应用程序(前端)认为Pgpool-II是实际的PostgreSQL服务器,服务器(后端)将Pgpool-II视为其客户端之一。因为Pgpool-II对服务器和客户端都是透明的,所以现有的数据库应用程序可以与Pgpool-II一起使用。Pgpool-II讲述PostgreSQL的后端和前端协议,并在它们之间传递连接。因此,数据库应用程序(前端)认为Pgpool-II是实际的PostgreSQL服务器,服务器(后端)将Pgpool-II视为其客户端之一。因为Pgpool-II对服务器和客户端都是透明的,所以现有的数据库应用程序可以与Pgpool-II一起使用,不需要对现有的业务系统进行更改。

那么,架构方面应该是通过看门狗,利用一个虚拟IP(也就是VIP)代理一个自身就是主从流复制的集群,VIP可以看做是前端,数据库可以看做后端,而主从流复制的数据库集群是具有这么一个特点:主服务器可读可写,从服务只读不可写。VIP通过pgpool的负载均衡功能就可以即可读也可以写了,负载均衡的策略是主从根据特定算法分配读任务,写任务仍然是交给主服务器完成。

这样,集群的使用率就自然的增高了,一些常用的查询语句通过pgpool的缓存功能,缓存起来,自然的整个集群的查询效率就提高了。

OK,下面开始讲述如何搭建pgpool。

二,

pgpool的官方网址:pgpool Wiki

下载和安装教程都有,里面也提供了一些比较新的rpm安装包,rpm仓库地址是:Index of /yum/rpms/4.4/redhat/rhel-7-x86_64

直接将该地址配置成yum仓库就可以了

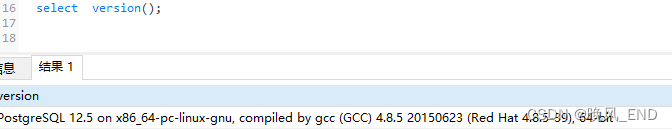

postgresql的版本和大体情况如下:

11服务器是主服务器,12是从服务器

主从复制的搭建见我的博客:postgresql|数据库|【postgresql-12的基于pg_basebackup的主从复制部署】_postgresql12 主备_晚风_END的博客-CSDN博客

三,

pgpool的部署

该部署工作是比较繁琐的,难度是比较高的,主要是权限问题需要小心处理,其次是参数众多,很多地方需要根据实际的情况调整,最后是pgpool的功能比较多,如何配置好是需要比较多的耐心。

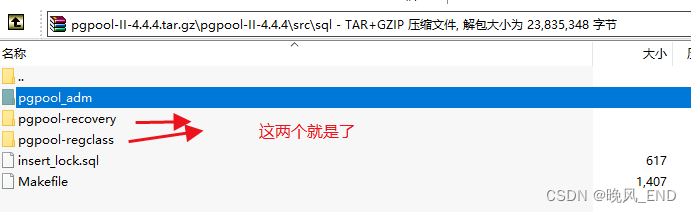

首先,大概介绍一下pgpool的组件,该中间件的管理组件有三个,一个是数据库侧使用的pool工具,该工具是以插件的形式安装在postgresql数据库内的,第二个是操作系统侧的pcp工具,这个工具需要在pgpool的主配置文件内配置,第三个是pgpoolAdm,此工具是PHP编写的web端管理工具,可以在web端方便的查看pgpool并且管理配置pgpool,目前的版本应该是需要高版本的PHP支持,暂时没有使用。

1,

管理工具的安装

本案例中,只安装数据库侧的管理工具pool和pcp,pool工具在源码包内。

pgpool-II-4.4.4.tar.gz这个文件上传到服务器解压后,和普通的postgresql插件没什么区别,一样的make && make install 就可以了,前提是环境变量里有定义PGHOME和PGDATA这两个变量。

2,

yum安装

配置好本地仓库和上面提到的官网仓库就可以运行以下命令安装了,这里安装了一个memcached服务,该服务后面作为缓存服务使用的。

yum install pgpool-II-pg12-debuginfo-4.4.2 pgpool-II-pg12-4.4.2 pgpool-II-pg12-devel-4.4.2 pgpool-II-pg12-extensions-4.4.2 -yyum install memcached -y &&systemctl enable memcached && systemctl enable pgpool && systemctl start pgpool memcached安装完毕后将会在/etc目录下看到pgpool-II,此目录里是pgpool的配置文件和一些高可用故障转移脚本,本案例中这些脚本不打算使用,只配置pgpool服务,另外需要注意,两个服务器都需要安装,memcached只在一个服务器安装就可以了

3,

配置文件

可以看到,这些文件都是postgres的属组,这些一定要注意哦

- [root@node1 pgpool-II]# ls -al

- total 144

- drwxr-xr-x. 3 root root 202 Sep 18 06:18 .

- drwxr-xr-x. 83 root root 8192 Sep 17 19:16 ..

- -rw------- 1 postgres postgres 900 Sep 17 11:15 pcp.conf

- -rw-------. 1 postgres postgres 858 Jan 22 2023 pcp.conf.sample

- -rw------- 1 postgres postgres 52960 Sep 18 02:01 pgpool.conf

- -rw-------. 1 postgres postgres 52964 Jan 22 2023 pgpool.conf.sample

- -rw------- 1 postgres postgres 2 Sep 17 10:21 pgpool_node_id

- -rw------- 1 postgres postgres 3537 Sep 17 11:54 pool_hba.conf

- -rw-------. 1 postgres postgres 3476 Jan 22 2023 pool_hba.conf.sample

- -rw-------. 1 postgres postgres 45 Sep 17 11:05 pool_passwd

- drwxr-xr-x. 2 root root 4096 Sep 17 10:02 sample_scripts

pcp.conf 的配置

该文件是存放pgpool的管理密码,此密码可以和postgresql数据库的密码不一样,也就是说随便定,定义方式非常简单,用户名:密码的形式添加在该文件末尾即可,只是需要注意一点,密码是md5加密的,不能明文(两种方式都可以,嫌麻烦的话就第三行那个命令,用户是postgres,密码是123456)

- [root@node1 pgpool-II]# pg_md5 123456

- e10adc3949ba59abbe56e057f20f883e

- [root@node1 pgpool-II]# echo "postgres:e10adc3949ba59abbe56e057f20f883e">>./pcp.conf

- [root@node1 pgpool-II]# echo "postgres:`pg_md5 123456`">>./pcp.conf

- [root@node1 pgpool-II]# cat pcp.conf

- # PCP Client Authentication Configuration File

- # ============================================

- #

- # This file contains user ID and his password for pgpool

- # communication manager authentication.

- #

- # Note that users defined here do not need to be PostgreSQL

- # users. These users are authorized ONLY for pgpool

- # communication manager.

- #

- # File Format

- # ===========

- #

- # List one UserID and password on a single line. They must

- # be concatenated together using ':' (colon) between them.

- # No spaces or tabs are allowed anywhere in the line.

- #

- # Example:

- # postgres:e8a48653851e28c69d0506508fb27fc5

- #

- # Be aware that there will be no spaces or tabs at the

- # beginning of the line! although the above example looks

- # like so.

- #

- # Lines beginning with '#' (pound) are comments and will

- # be ignored. Again, no spaces or tabs allowed before '#'.

- # USERID:MD5PASSWD

- postgres:e10adc3949ba59abbe56e057f20f883e

pgpool.conf文件的配置:

该文件是pgpool的主配置文件,其中注释的行已经全部去掉了,只保留了放开的内容

说明:该配置文件内定义的文件路径需要手动建立,/var/run/postgresql 属组是postgres

sr_check_user = 'nobody' 这个nobody用户需要在主数据库创建,创建命令为create role nobody login replication encrypted password 'replica';

为什么是主数据库呢?因为是流复制,主数据库创建了 ,从数据库自然就有了嘛,上面提到的插件也是如此的哦。

- [root@node1 pgpool-II]# sed -e '/^$/d' pgpool.conf |grep -v "\#"

- backend_clustering_mode = 'streaming_replication'

- listen_addresses = '*'

- port = 15433

- unix_socket_directories = '/var/run/postgresql'

- pcp_listen_addresses = '*'

- pcp_port = 19999

- pcp_socket_dir = '/var/run/postgresql'

- backend_hostname0 = '192.168.123.11'

- backend_port0 = 5432

- backend_weight0 = 1

- backend_data_directory0 = '/usr/local/pgsql/data'

- backend_flag0 = 'ALLOW_TO_FAILOVER'

- backend_application_name0 = 'node1'

- backend_hostname1 = '192.168.123.12'

- backend_port1 = 5432

- backend_weight1 = 1

- backend_data_directory1 = '/usr/local/pgsql/data'

- backend_flag1 = 'ALLOW_TO_FAILOVER'

- backend_application_name1 = 'node2'

- enable_pool_hba = on

- pool_passwd = 'pool_passwd'

- process_management_mode = dynamic

- num_init_children = 32

- min_spare_children = 5

- max_spare_children = 10

- max_pool = 4

- child_life_time = 5min

- log_destination = 'stderr'

- log_connections = on

- log_disconnections = on

- log_hostname = on

- log_statement = on

- log_per_node_statement = on

- log_client_messages = on

- logging_collector = on

- log_directory = '/var/log/pgpool_log'

- log_filename = 'pgpool-%a.log'

- log_file_mode = 0600

- log_truncate_on_rotation = on

- log_rotation_age = 1d

- log_rotation_size = 0

- pid_file_name = '/var/run/postgresql/pgpool.pid'

- logdir = '/tmp'

- connection_cache = on

- reset_query_list = 'ABORT; DISCARD ALL'

- load_balance_mode = on

- database_redirect_preference_list = 'postgres:1'

- sr_check_period = 10

- sr_check_user = 'nobody'

- sr_check_password = 'replica'

- sr_check_database = 'postgres'

- delay_threshold = 1

- delay_threshold_by_time = 1

- prefer_lower_delay_standby = on

- use_watchdog = on

- hostname0 = '192.168.123.11'

- wd_port0 = 9000

- pgpool_port0 = 15433

- hostname1 = '192.168.123.12'

- wd_port1 = 9000

- pgpool_port1 = 15433

- wd_ipc_socket_dir = '/var/run/postgresql'

- delegate_ip = '192.168.123.222'

- if_cmd_path = '/sbin'

- if_up_cmd = 'ip addr add $_IP_$/24 dev ens33 label ens33:0'

- if_down_cmd = 'ip addr del $_IP_$/24 dev ens33'

- arping_path = '/usr/sbin'

- arping_cmd = 'arping -U $_IP_$ -w 1 -I ens33'

- wd_monitoring_interfaces_list = ''

- wd_lifecheck_method = 'heartbeat'

- wd_interval = 10

- heartbeat_hostname0 = '192.168.123.11'

- heartbeat_port0 = 19694

- heartbeat_device0 = 'ens33'

- heartbeat_hostname1 = '192.168.123.12'

- heartbeat_port1 = 19694

- heartbeat_device1 = 'ens33'

- wd_life_point = 3

- wd_lifecheck_query = 'SELECT 1'

- memory_cache_enabled = off

- memqcache_method = 'memcached'

- memqcache_memcached_host = '192.168.123.11'

- memqcache_memcached_port = 11211

- memqcache_total_size = 64MB

- memqcache_max_num_cache = 1000000

- memqcache_expire = 0

- memqcache_cache_block_size = 1MB

pool_passwd文件的配置:

- 重要:

- su - postgres

- pg_md5 -m -p -u postgres pool_passwd

- #此时会提示输入密码,此密码是postgresql服务器的postgres用户的密码,一会会用此命令登录postgresql数据库的哦

- [root@node1 pgpool-II]# su - postgres

- Last login: Mon Sep 18 06:34:54 CST 2023 on pts/1

- [postgres@node1 ~]$ pg_md5 -m -p -u postgres pool_passwd

- password:

- [postgres@node1 ~]$ logout

- [root@node1 pgpool-II]# cat pool_passwd

- postgres:md5a3556571e93b0d20722ba62be61e8c2d

pool_hab.conf文件的配置

该文件的作用是定义pgpool哪些用户可以访问哪些后端的postgresql数据库,功能和postgresql数据库的pg_hba.conf文件类似

如果不想太麻烦(也就是不太安全),那么,如下配置即可:

- # IPv4 local connections:

- host all all 127.0.0.1/32 trust

- host all all ::1/128 trust

- host all all 0.0.0.0/0 md5

pgpool_node_id文件的配置

此文件是标识文件,表明该pgpool 对应于哪个后端,因此,11服务器上此文件内容就一个0,12服务器上次文件内容就一个1即可,当然了,如果有其它的节点,就依次增加数字即可,最多好像是127个节点。

创建用户

Pcp.conf 文件内的用户和密码可以自己随意指定,pool_pass文件内的用户必须是数据库的真实用户和密码,目前只使用了postgres用户

- 配置pcp.conf文件

- [root@node1 pgpool-II]# pg_md5 123456

- e10adc3949ba59abbe56e057f20f883e

- [root@node1 pgpool-II]# echo "postgres:e10adc3949ba59abbe56e057f20f883e">>./pcp.conf

- [root@node1 pgpool-II]# echo "postgres:`pg_md5 123456`">>./pcp.conf

- [root@node1 pgpool-II]# cat pcp.conf

- # PCP Client Authentication Configuration File

- # ============================================

- #

- # This file contains user ID and his password for pgpool

- # communication manager authentication.

- #

- # Note that users defined here do not need to be PostgreSQL

- # users. These users are authorized ONLY for pgpool

- # communication manager.

- #

- # File Format

- # ===========

- #

- # List one UserID and password on a single line. They must

- # be concatenated together using ':' (colon) between them.

- # No spaces or tabs are allowed anywhere in the line.

- #

- # Example:

- # postgres:e8a48653851e28c69d0506508fb27fc5

- #

- # Be aware that there will be no spaces or tabs at the

- # beginning of the line! although the above example looks

- # like so.

- #

- # Lines beginning with '#' (pound) are comments and will

- # be ignored. Again, no spaces or tabs allowed before '#'.

- # USERID:MD5PASSWD

- postgres:e10adc3949ba59abbe56e057f20f883e

- 重要—配置pool_passwd:

- su - postgres

- pg_md5 -m -p -u postgres pool_passwd

- #此时会提示输入密码,此密码是postgresql服务器的postgres用户的密码,一会会用此命令登录postgresql数据库的哦

- [root@node1 pgpool-II]# su - postgres

- Last login: Mon Sep 18 06:34:54 CST 2023 on pts/1

- [postgres@node1 ~]$ pg_md5 -m -p -u postgres pool_passwd

- password:

- [postgres@node1 ~]$ logout

- [root@node1 pgpool-II]# cat pool_passwd

- postgres:md5a3556571e93b0d20722ba62be61e8c2d

- pool_hab.conf文件的配置

- 该文件的作用是定义pgpool哪些用户可以访问哪些后端的postgresql数据库,功能和postgresql数据库的pg_hba.conf文件类似

- 如果不想太麻烦(也就是不太安全),那么,建议是直接把数据库的pg_hba.conf文件复制过来即可

- 配置文件内的命令需要有粘滞特殊权限,ip和arping命令,因此,命令如下:

- chmod u+s /sbin/ip

- chmod u+s /usr/sbin/arping

启动服务和停止服务

- 根据以上的配置文件,我们需要把配置文件里用到的文件夹手动创建出来,并赋予postgres属组:

-

- mkdir /var/run/postgresql

- chown -Rf postgres. /var/run/postgresql

-

- pgpool的启动和停止

- 该中间件的启停是比较特殊的,既可以使用systemctl管理器管理也可以直接二进制启停,为了规范操作,就使用systemctl管理进行吧:

- systemctl enable pgpool && systemctl start pgpool

- 服务正常启动的样子:

- [root@node1 pgpool-II]# systemctl status pgpool

- ● pgpool.service - Pgpool-II

- Loaded: loaded (/usr/lib/systemd/system/pgpool.service; enabled; vendor preset: disabled)

- Active: active (running) since Mon 2023-09-18 01:04:32 CST; 19h ago

- Process: 58354 ExecStop=/usr/bin/pgpool -f /etc/pgpool-II/pgpool.conf $STOP_OPTS stop (code=exited, status=0/SUCCESS)

- Process: 45217 ExecReload=/usr/bin/pgpool -f /etc/pgpool-II/pgpool.conf reload (code=exited, status=0/SUCCESS)

- Main PID: 58360 (pgpool)

- Tasks: 20

- Memory: 14.1M

- CGroup: /system.slice/pgpool.service

- ├─58360 /usr/bin/pgpool -f /etc/pgpool-II/pgpool.conf -n

- ├─58361 pgpool: PgpoolLogger

- ├─58362 pgpool: watchdog

- ├─58371 pgpool: lifecheck

- ├─58372 pgpool: postgres postgres 192.168.123.1(50284) idle

- ├─58373 pgpool: heartbeat receiver

- ├─58374 pgpool: wait for connection request

- ├─58375 pgpool: heartbeat sender

- ├─58376 pgpool: wait for connection request

- ├─58377 pgpool: wait for connection request

- ├─58378 pgpool: postgres postgres 192.168.123.1(50279) idle

- ├─58379 pgpool: wait for connection request

- ├─58380 pgpool: wait for connection request

- ├─58381 pgpool: wait for connection request

- ├─58382 pgpool: wait for connection request

- ├─58383 pgpool: wait for connection request

- ├─58385 pgpool: PCP: wait for connection request

- ├─58386 pgpool: worker process

- ├─58387 pgpool: health check process(0)

- └─58388 pgpool: health check process(1)

- Sep 18 01:20:32 node1 systemd[1]: Reloading Pgpool-II.

- Sep 18 01:20:32 node1 systemd[1]: Reloaded Pgpool-II.

- Sep 18 01:25:42 node1 systemd[1]: Reloading Pgpool-II.

- Sep 18 01:25:42 node1 systemd[1]: Reloaded Pgpool-II.

- Sep 18 01:37:32 node1 systemd[1]: Reloading Pgpool-II.

- Sep 18 01:37:32 node1 systemd[1]: Reloaded Pgpool-II.

- Sep 18 01:47:36 node1 systemd[1]: Reloading Pgpool-II.

- Sep 18 01:47:36 node1 systemd[1]: Reloaded Pgpool-II.

- Sep 18 02:01:32 node1 systemd[1]: Reloading Pgpool-II.

- Sep 18 02:01:32 node1 systemd[1]: Reloaded Pgpool-II.

- 直接二进制形式启停的命令:

- [root@node2 ~]# pgpool ###启动

- [root@node2 ~]# pgpool -m fast stop

- 2023-09-18 21:01:30.055: main pid 44987: LOG: stop request sent to pgpool (pid: 39228). waiting for termination...

- 2023-09-18 21:01:30.055: main pid 44987: LOCATION: main.c:546

- .done.

- (这里需要注意一点,systemctl和二进制不能混用,否则另一个是不会生效的,下面是混用后的演示)

- [root@node2 ~]# pgpool

- [root@node2 ~]# systemctl status pgpool

- ● pgpool.service - Pgpool-II

- Loaded: loaded (/usr/lib/systemd/system/pgpool.service; disabled; vendor preset: disabled)

- Active: failed (Result: exit-code) since Mon 2023-09-18 21:01:30 CST; 22s ago

- Process: 44988 ExecStop=/usr/bin/pgpool -f /etc/pgpool-II/pgpool.conf $STOP_OPTS stop (code=exited, status=3)

- Process: 44599 ExecReload=/usr/bin/pgpool -f /etc/pgpool-II/pgpool.conf reload (code=exited, status=0/SUCCESS)

- Process: 39228 ExecStart=/usr/bin/pgpool -f /etc/pgpool-II/pgpool.conf $OPTS (code=exited, status=0/SUCCESS)

- Main PID: 39228 (code=exited, status=0/SUCCESS)

- Sep 18 01:25:38 node2 systemd[1]: Reloaded Pgpool-II.

- Sep 18 01:37:24 node2 systemd[1]: Reloading Pgpool-II.

- Sep 18 01:37:24 node2 systemd[1]: Reloaded Pgpool-II.

- Sep 18 01:47:31 node2 systemd[1]: Reloading Pgpool-II.

- Sep 18 01:47:31 node2 systemd[1]: Reloaded Pgpool-II.

- Sep 18 02:01:29 node2 systemd[1]: Reloading Pgpool-II.

- Sep 18 02:01:29 node2 systemd[1]: Reloaded Pgpool-II.

- Sep 18 21:01:30 node2 systemd[1]: pgpool.service: control process exited, code=exited status=3

- Sep 18 21:01:30 node2 systemd[1]: Unit pgpool.service entered failed state.

- Sep 18 21:01:30 node2 systemd[1]: pgpool.service failed.

- [root@node2 ~]# systemctl start pgpool

- [root@node2 ~]# systemctl status pgpool

- ● pgpool.service - Pgpool-II

- Loaded: loaded (/usr/lib/systemd/system/pgpool.service; disabled; vendor preset: disabled)

- Active: failed (Result: exit-code) since Mon 2023-09-18 21:02:01 CST; 1s ago

- Process: 45637 ExecStop=/usr/bin/pgpool -f /etc/pgpool-II/pgpool.conf $STOP_OPTS stop (code=exited, status=3)

- Process: 44599 ExecReload=/usr/bin/pgpool -f /etc/pgpool-II/pgpool.conf reload (code=exited, status=0/SUCCESS)

- Process: 45635 ExecStart=/usr/bin/pgpool -f /etc/pgpool-II/pgpool.conf $OPTS (code=exited, status=3)

- Main PID: 45635 (code=exited, status=3)

- Sep 18 21:02:01 node2 systemd[1]: Started Pgpool-II.

- Sep 18 21:02:01 node2 pgpool[45635]: 2023-09-18 21:02:01.310: main pid 45635: FATAL: could not open pid file "/var/run/postgresql/pgpool.pid"

- Sep 18 21:02:01 node2 systemd[1]: pgpool.service: main process exited, code=exited, status=3/NOTIMPLEMENTED

- Sep 18 21:02:01 node2 pgpool[45637]: 2023-09-18 21:02:01.316: main pid 45637: FATAL: could not read pid file

- Sep 18 21:02:01 node2 pgpool[45637]: 2023-09-18 21:02:01.316: main pid 45637: LOCATION: main.c:532

- Sep 18 21:02:01 node2 systemd[1]: pgpool.service: control process exited, code=exited status=3

- Sep 18 21:02:01 node2 systemd[1]: Unit pgpool.service entered failed state.

- Sep 18 21:02:01 node2 systemd[1]: pgpool.service failed.

- [root@node2 ~]# pgpool -m fast stop

- 2023-09-18 21:02:13.716: main pid 45805: LOG: stop request sent to pgpool (pid: 45161). waiting for termination...

- 2023-09-18 21:02:13.716: main pid 45805: LOCATION: main.c:546

- .done.

- [root@node2 ~]# systemctl start pgpool

- [root@node2 ~]# systemctl status pgpool

- ● pgpool.service - Pgpool-II

- Loaded: loaded (/usr/lib/systemd/system/pgpool.service; disabled; vendor preset: disabled)

- Active: active (running) since Mon 2023-09-18 21:02:21 CST; 2s ago

- Process: 45637 ExecStop=/usr/bin/pgpool -f /etc/pgpool-II/pgpool.conf $STOP_OPTS stop (code=exited, status=3)

- Process: 44599 ExecReload=/usr/bin/pgpool -f /etc/pgpool-II/pgpool.conf reload (code=exited, status=0/SUCCESS)

- Main PID: 46021 (pgpool)

- Tasks: 20

- Memory: 6.3M

- CGroup: /system.slice/pgpool.service

- ├─46021 /usr/bin/pgpool -f /etc/pgpool-II/pgpool.conf -n

- ├─46022 pgpool: PgpoolLogger

- ├─46023 pgpool: watchdog

- ├─46025 pgpool: lifecheck

- ├─46026 pgpool: heartbeat receiver

- ├─46027 pgpool: heartbeat sender

- ├─46028 pgpool: wait for connection request

- ├─46029 pgpool: wait for connection request

- ├─46030 pgpool: wait for connection request

- ├─46031 pgpool: wait for connection request

- ├─46032 pgpool: wait for connection request

测试读写分离,负载均衡

-

- pgpool的管理工具简单的使用

- pcp是一套管理工具,也就是说有很多pcp名称开始的一族命令

- [root@node1 pgpool-II]# pcp_

- pcp_attach_node pcp_health_check_stats pcp_node_info pcp_proc_count pcp_promote_node pcp_reload_config pcp_watchdog_info

- pcp_detach_node pcp_node_count pcp_pool_status pcp_proc_info pcp_recovery_node pcp_stop_pgpool

- 查看有几个pgpool节点:

- 注意,pcp定义的端口需要写哦,如果你更改过了的话,本文使用的是19999,这个命令可以随意定义,定义在pcp.conf 文件内,只是记得要md5加密哦。本例是 用户是postgres,密码是123456

- [root@node2 pgpool-II]# echo "postgres:`pg_md5 123456`" >>pcp.conf

- [root@node2 pgpool-II]# pcp_node_count -U postgres -p 19999

- Password:

- 2

- 查询pgpool集群的信息:

- [root@node2 pgpool-II]# pcp_node_info -Upostgres -p19999

- Password:

- 192.168.123.11 5432 1 0.500000 waiting unknown primary unknown 0 none none 2023-09-18 21:06:40

- 192.168.123.12 5432 1 0.500000 waiting unknown standby unknown 0.000000 none none 2023-09-18 21:06:40

- 重新加载所有配置:

- [root@node2 pgpool-II]# pcp_reload_config -Upostgres -p19999

- Password:

- pcp_reload_config -- Command Successful

- 查看看门狗的状态:

- [root@node2 pgpool-II]# pcp_watchdog_info -U postgres -p19999

- Password:

- 2 2 NO 192.168.123.11:15433 Linux node1 192.168.123.11

- 192.168.123.12:15433 Linux node2 192.168.123.12 15433 9000 7 STANDBY 0 MEMBER

- 192.168.123.11:15433 Linux node1 192.168.123.11 15433 9000 4 LEADER 0 MEMBER

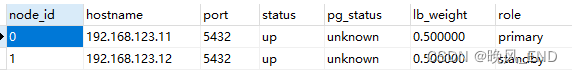

- 数据库内使用插件管理pgpool:

- 查看pool的状态:

- [root@node2 pgpool-II]# su - postgres -c "psql -Upostgres -p 15433 -h 192.168.123.222"

- Password for user postgres:

- psql (12.5)

- Type "help" for help.

- postgres=# show pool_nodes;

- node_id | hostname | port | status | pg_status | lb_weight | role | pg_role | select_cnt | load_balance_node | replication_delay | replication_state | replication_sync_state | last_status_change

- ---------+----------------+------+--------+-----------+-----------+---------+---------+------------+-------------------+-------------------+-------------------+------------------------+---------------------

- 0 | 192.168.123.11 | 5432 | up | unknown | 0.500000 | primary | unknown | 0 | false | 0 | | | 2023-09-18 21:53:08

- 1 | 192.168.123.12 | 5432 | up | unknown | 0.500000 | standby | unknown | 0 | true | 0.000000 second | | | 2023-09-18 21:53:08

- (2 rows)

- 查看缓存命中率:

- 目前暂时是关闭的

- postgres=# show pool_cache;

- num_cache_hits | num_selects | cache_hit_ratio | num_hash_entries | used_hash_entries | num_cache_entries | used_cache_entries_size | free_cache_entries_size | fragment_cache_entries_size

- ----------------+-------------+-----------------+------------------+-------------------+-------------------+-------------------------+-------------------------+-----------------------------

- 0 | 0 | 0.00 | 0 | 0 | 0 | 0 | 0 | 0

- (1 row)

-

- 测试以及一些需要注意的地方

- OK,开始测试啦,这里需要着重说明,VIP是非常非常重要的,测试的时候是使用VIP登录的哦:

- VIP登录数据库:

- [root@node1 pgpool-II]# su - postgres -c "psql -Upostgres -p 15433 -h 192.168.123.222"

- Password for user postgres:

- psql (12.5)

- Type "help" for help.

- 随便建立一个测试用的表,并向表内些一个测试数据,这个就不班门弄斧了,非常的简单,然后查询pool的状态:

- postgres=# show pool_nodes;

- node_id | hostname | port | status | pg_status | lb_weight | role | pg_role | select_cnt | load_balance_node | replication_delay | replication_state | replication_sync_state | last_status_change

- ---------+----------------+------+--------+-----------+-----------+---------+---------+------------+-------------------+-------------------+-------------------+------------------------+---------------------

- 0 | 192.168.123.11 | 5432 | up | unknown | 0.500000 | primary | unknown | 11 | false | 0 | | | 2023-09-18 21:53:08

- 1 | 192.168.123.12 | 5432 | up | unknown | 0.500000 | standby | unknown | 12 | true | 0.000000 second | | | 2023-09-18 21:53:08

- (2 rows)

- 可以看到,负载均衡是生效的,select_cnt 是查询次数,主节点查询了11次,从节点查询了12次

- 利用navicat再次查询,或者使用pgbench压测工具:

- 编辑

- postgres=# show pool_nodes;

- node_id | hostname | port | status | pg_status | lb_weight | role | pg_role | select_cnt | load_balance_node | replication_delay | replication_state | replication_sync_state | last_status_change

- ---------+----------------+------+--------+-----------+-----------+---------+---------+------------+-------------------+-------------------+-------------------+------------------------+---------------------

- 0 | 192.168.123.11 | 5432 | up | unknown | 0.500000 | primary | unknown | 32 | false | 0 | | | 2023-09-18 21:53:08

- 1 | 192.168.123.12 | 5432 | up | unknown | 0.500000 | standby | unknown | 33 | true | 0.000000 second | | | 2023-09-18 21:53:08

- (2 rows)

- 查看pgpool的日志(截取相关部分日志,如下):

- 2023-09-18 22:41:50.701: Navicat pid 39838: LOCATION: pool_proto_modules.c:2712

- 2023-09-18 22:41:50.701: Navicat pid 39838: LOG: statement: select * from test1131

- 2023-09-18 22:41:50.701: Navicat pid 39838: LOCATION: pool_proto_modules.c:211

- 2023-09-18 22:41:50.701: Navicat pid 39838: LOG: DB node id: 1 backend pid: 8540 statement: select * from test1131

- 2023-09-18 22:41:50.701: Navicat pid 39838: LOCATION: pool_proto_modules.c:3569

- 2023-09-18 22:41:50.702: Navicat pid 39845: LOG: Query message from frontend.

- 2023-09-18 22:41:50.702: Navicat pid 39845: DETAIL: query: "SELECT c.conkey FROM pg_constraint c WHERE c.contype = 'p' and c.conrelid = 16398"

- 2023-09-18 22:41:50.702: Navicat pid 39845: LOCATION: pool_proto_modules.c:2712

- 2023-09-18 22:41:50.702: Navicat pid 39845: LOG: statement: SELECT c.conkey FROM pg_constraint c WHERE c.contype = 'p' and c.conrelid = 16398

- 2023-09-18 22:41:50.702: Navicat pid 39845: LOCATION: pool_proto_modules.c:211

- 2023-09-18 22:41:50.702: Navicat pid 39845: LOG: DB node id: 0 backend pid: 61668 statement: SELECT c.conkey FROM pg_constraint c WHERE c.contype = 'p' and c.conrelid = 16398

- 2023-09-18 22:41:50.702: Navicat pid 39845: LOCATION: pool_proto_modules.c:3569

- 2023-09-18 22:41:52.695: Navicat pid 39838: LOG: Query message from frontend.

- 2023-09-18 22:41:52.695: Navicat pid 39838: DETAIL: query: "show pool_nodes"

- 2023-09-18 22:41:52.695: Navicat pid 39838: LOCATION: pool_proto_modules.c:2712

- 2023-09-18 22:41:52.695: Navicat pid 39838: LOG: statement: show pool_nodes

- 2023-09-18 22:41:52.695: Navicat pid 39838: LOCATION: pool_proto_modules.c:211

- 2023-09-18 22:42:28.368: psql pid 39844: LOG: Query message from frontend.

- 2023-09-18 22:42:28.368: psql pid 39844: DETAIL: query: "show pool_nodes;"

- 2023-09-18 22:42:28.368: psql pid 39844: LOCATION: pool_proto_modules.c:2712

- 2023-09-18 22:42:28.368: psql pid 39844: LOG: statement: show pool_nodes;

- 2023-09-18 22:42:28.368: psql pid 39844: LOCATION: pool_proto_modules.c:211

- 可以看到,负载均衡功能完美运行

- 缓存服务的测试:

- 首先,查看memcache服务是否正常,确认正常后,修改主配置文件,打开缓存功能:

- [root@node1 pgpool-II]# systemctl status memcached

- ● memcached.service - Memcached

- Loaded: loaded (/usr/lib/systemd/system/memcached.service; enabled; vendor preset: disabled)

- Active: active (running) since Sun 2023-09-17 19:16:26 CST; 1 day 3h ago

- Main PID: 1443 (memcached)

- Tasks: 6

- Memory: 4.4M

- CGroup: /system.slice/memcached.service

- └─1443 /usr/bin/memcached -u memcached -p 11211 -m 64 -c 1024

- Sep 17 19:16:26 node1 systemd[1]: Started Memcached.

- 编辑

- 重新加载pgpool服务,两个服务器都要修改,重新加载:

- 可以看到部分查询落在了主节点,但没有缓存,部分查询落在了从节点,走的是缓存

- 2023-09-18 22:50:54.481: Navicat pid 47139: LOG: fetch from memory cache

- 2023-09-18 22:50:54.342: Navicat pid 47139: DETAIL: query result fetched from cache. statement: select * from test1131

- 2023-09-18 22:50:54.185: Navicat pid 47138: LOG: DB node id: 0 backend pid: 25501 statement: SELECT c.conkey FROM pg_constraint c WHERE c.contype = 'p' and c.conrelid = 16398

- 2023-09-18 22:50:54.185: Navicat pid 47138: LOCATION: pool_proto_modules.c:3569

- 2023-09-18 22:50:54.342: Navicat pid 47139: LOG: Query message from frontend.

- 2023-09-18 22:50:54.342: Navicat pid 47139: DETAIL: query: "select * from test1131"

- 2023-09-18 22:50:54.342: Navicat pid 47139: LOCATION: pool_proto_modules.c:2712

- 2023-09-18 22:50:54.342: Navicat pid 47139: LOG: statement: select * from test1131

- 2023-09-18 22:50:54.342: Navicat pid 47139: LOCATION: pool_proto_modules.c:211

- 2023-09-18 22:50:54.342: Navicat pid 47139: LOG: fetch from memory cache

- 2023-09-18 22:50:54.342: Navicat pid 47139: DETAIL: query result fetched from cache. statement: select * from test1131

- 2023-09-18 22:50:54.342: Navicat pid 47139: LOCATION: pool_memqcache.c:821

- 2023-09-18 22:50:54.343: Navicat pid 47138: LOG: Query message from frontend.

- 2023-09-18 22:50:54.343: Navicat pid 47138: DETAIL: query: "SELECT c.conkey FROM pg_constraint c WHERE c.contype = 'p' and c.conrelid = 16398"

- 2023-09-18 22:50:54.343: Navicat pid 47138: LOCATION: pool_proto_modules.c:2712

- 2023-09-18 22:50:54.343: Navicat pid 47138: LOG: statement: SELECT c.conkey FROM pg_constraint c WHERE c.contype = 'p' and c.conrelid = 16398

- 2023-09-18 22:50:54.343: Navicat pid 47138: LOCATION: pool_proto_modules.c:211

- 2023-09-18 22:50:54.343: Navicat pid 47138: LOG: DB node id: 0 backend pid: 25501 statement: SELECT c.conkey FROM pg_constraint c WHERE c.contype = 'p' and c.conrelid = 16398

- 2023-09-18 22:50:54.343: Navicat pid 47138: LOCATION: pool_proto_modules.c:3569

- 2023-09-18 22:50:54.480: Navicat pid 47139: LOG: Query message from frontend.

- 2023-09-18 22:50:54.481: Navicat pid 47139: DETAIL: query: "select * from test1131"

- 2023-09-18 22:50:54.481: Navicat pid 47139: LOCATION: pool_proto_modules.c:2712

- 2023-09-18 22:50:54.481: Navicat pid 47139: LOG: statement: select * from test1131

- 2023-09-18 22:50:54.481: Navicat pid 47139: LOCATION: pool_proto_modules.c:211

- 2023-09-18 22:50:54.481: Navicat pid 47139: LOG: fetch from memory cache

- 2023-09-18 22:50:54.481: Navicat pid 47139: DETAIL: query result fetched from cache. statement: select * from test1131

- 2023-09-18 22:50:54.481: Navicat pid 47139: LOCATION: pool_memqcache.c:821

- 查看缓存命中率:

- 可以看到查询了53次,总命中率是0.5(cache_hit_ratio就是缓存命中率,可以知道,该数据库集群的性能会得到极大的提升)

- postgres=# show pool_cache;

- num_cache_hits | num_selects | cache_hit_ratio | num_hash_entries | used_hash_entries | num_cache_entries | used_cache_entries_size | free_cache_entries_size | fragment_cache_entries_size

- ----------------+-------------+-----------------+------------------+-------------------+-------------------+-------------------------+-------------------------+-----------------------------

- 53 | 52 | 0.50 | 0 | 0 | 0 | 0 | 0 | 0

- (1 row)

- postgres=# show pool_nodes;

- node_id | hostname | port | status | pg_status | lb_weight | role | pg_role | select_cnt | load_balance_node | replication_delay | replication_state | replication_sync_state | last_status_change

- ---------+----------------+------+--------+-----------+-----------+---------+---------+------------+-------------------+-------------------+-------------------+------------------------+---------------------

- 0 | 192.168.123.11 | 5432 | up | unknown | 0.500000 | primary | unknown | 52 | false | 0 | | | 2023-09-18 22:48:37

- 1 | 192.168.123.12 | 5432 | up | unknown | 0.500000 | standby | unknown | 0 | true | 0.000000 second | | | 2023-09-18 22:48:37

-

主备切换(这个功能用不上)

安装及使用总结

首先,需要明白一点,pgpool并不是十分容易配置的服务,可能在配置和调试的过程中会有非常多的错误,但是不需要害怕这些错误,善于利用百度等等搜索引擎,其次,主配置文件里的很多地方配置好后可能是需要重启服务的,而服务的重启顺序是先从节点重启在主节点重启,这么做的原因是可能会配置故障恢复脚本等情形下的高可用,防止主节点乱跑,从而给自己造成不必要的麻烦。

最后pool_hba.conf 里最好使用数据库内的pg_hab.conf的所有内容,一个是安全,一个是防止两者不一致造成的麻烦(毕竟报错后的问题分析还是比较麻烦的嘛)

-

相关阅读:

仿牛客网项目---帖子详情功能的实现

VS配置opencv

HTML5期末考核大作业 基于HTML+CSS+JavaScript沪上美食(9页)

django——公众号服务开发

Go/Golang语言学习实践[回顾]教程13--详解Go语言的词法解析单元

.NET使用CsvHelper快速读取和写入CSV文件

1. 算法简介

多目标优化问题的研究概述(Matlab代码实现)

【uniapp】实现对TXT文本文件的读取和保存下载

蓝鲸DevOps荣获平台类工具企业选择率第一

- 原文地址:https://blog.csdn.net/alwaysbefine/article/details/132942114