
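AI Project 6: Deploying YOLOv5 on CPU with OpenVINO

If this is an original article, please cite the original source when reposting.

I. Testing the CPU demo

1. Create a new virtual environment

conda create -n course_torch_openvino python=3.8

2. Activate the environment

conda activate course_torch_openvino

3. Install the CPU build of PyTorch

pip install torch torchvision torchaudio -i https://pypi.tuna.tsinghua.edu.cn/simple

4. Install the YOLOv5 dependencies

This uses YOLOv5 version 5 (yolov5-5), downloaded from GitHub.

pip install -r requirements.txt -i https://pypi.tuna.tsinghua.edu.cn/simple

5. Run the demo

python demo.py

Complete code: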

    import cv2
    import numpy as np
    import torch
    import time

    # model = torch.hub.load('./yolov5', 'custom', path='./weights/ppe_yolo_n.pt', source='local')  # local repo
    model = torch.hub.load('./yolov5', 'custom', 'weights/poker_n.pt', source='local')
    model.conf = 0.4  # confidence threshold

    cap = cv2.VideoCapture(0)
    fps_time = time.time()

    while True:
        ret, frame = cap.read()
        if not ret:  # stop when the camera returns no frame
            break
        frame = cv2.flip(frame, 1)  # mirror the image horizontally
        img_cvt = cv2.cvtColor(frame, cv2.COLOR_BGR2RGB)

        # Inference
        results = model(img_cvt)
        result_np = results.pandas().xyxy[0].to_numpy()

        # Draw each detection: box corners first, class name in the last column
        for box in result_np:
            l, t, r, b = box[:4].astype('int')
            cv2.rectangle(frame, (l, t), (r, b), (0, 255, 0), 5)
            cv2.putText(frame, str(box[-1]), (l, t - 20), cv2.FONT_ITALIC, 1, (0, 255, 0), 2)

        # Frame rate from the time between consecutive frames
        now = time.time()
        fps_text = 1 / (now - fps_time)
        fps_time = now
        cv2.putText(frame, str(round(fps_text, 2)), (50, 50), cv2.FONT_ITALIC, 1, (0, 255, 0), 2)

        cv2.imshow('demo', frame)
        if cv2.waitKey(10) & 0xFF == ord('q'):
            break

    cap.release()
    cv2.destroyAllWindows()

The demo runs normally.
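The demo prints an instantaneous frame rate, 1/(now - fps_time), which tends to jump around from frame to frame. A minimal sketch of a smoothed counter is shown here; the class name `FpsMeter` and the `alpha` parameter are hypothetical helpers for illustration and are not part of the original demo.

```python
import time


class FpsMeter:
    """Exponentially smoothed FPS counter (hypothetical helper, not in the original demo)."""

    def __init__(self, alpha=0.1):
        self.alpha = alpha   # weight given to the newest sample
        self.fps = None      # smoothed estimate; None until two ticks have been seen
        self._last = None    # timestamp of the previous tick

    def tick(self, now=None):
        """Record one frame and return the smoothed FPS (None on the first call)."""
        now = time.perf_counter() if now is None else now
        if self._last is not None:
            dt = now - self._last
            if dt > 0:  # guard against a zero-length interval
                inst = 1.0 / dt
                if self.fps is None:
                    self.fps = inst
                else:
                    self.fps = self.alpha * inst + (1 - self.alpha) * self.fps
        self._last = now
        return self.fps


# Synthetic timestamps (seconds) stand in for real frame arrival times
meter = FpsMeter(alpha=0.5)
for t in (0.0, 0.05, 0.10, 0.20):
    print(meter.tick(now=t))
```

In the capture loop, one would call `meter.tick()` once per frame and draw the returned value instead of `fps_text`.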

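II. Converting YOLOv5 to OpenVINO

1. Install onnx

pip install onnx==1.11.0

2. Modify the export script

Edit line 121 of export.py so that it reads:

opset_version=10

3. Export to ONNX

Take the trained best.pt file and convert it to an ONNX file.

The conversion command is:

python export.py --weights ../weights/best.pt --img 640 --batch 1

4. Convert to OpenVINO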

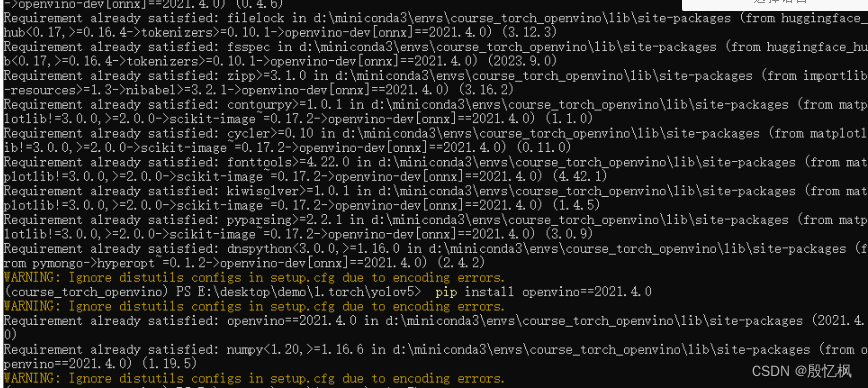

Install the conversion environment first:

pip install openvino-dev[onnx]==2021.4.0

pip install openvino==2021.4.0

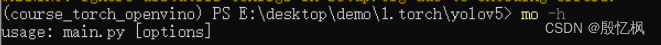

To verify the installation, run mo -h.
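The same check can be scripted. The sketch below only tests whether an executable named `mo` is visible on PATH, using the standard library; the helper name `tool_on_path` is an assumption made for this example, and the check says nothing about whether the installed version is the right one.

```python
import shutil


def tool_on_path(name):
    """Return True if an executable called `name` can be found on PATH."""
    return shutil.which(name) is not None


# "mo" is the command the article runs to verify the install
print("mo available:", tool_on_path("mo"))
```

If this prints False inside the activated conda environment, the openvino-dev install most likely did not complete.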

Next, convert the model with the following command:

mo --input_model weights/best.onnx --model_name weights/ir_model -s 255 --reverse_input_channels --output Conv_294,Conv_245,Conv_196

This generates three files; ir_model.xml is the one to use.
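As far as I understand the Model Optimizer options, `-s 255` divides every input value by 255 and `--reverse_input_channels` swaps the channel order between BGR and RGB, so both steps end up inside the IR. That would explain why the OpenVINO script can feed raw OpenCV frames without calling cv2.cvtColor or dividing by 255. A rough per-pixel sketch of the equivalent preprocessing follows; the function name `preprocess_pixel` is hypothetical.

```python
def preprocess_pixel(bgr, scale=255.0):
    """Mimic the preprocessing baked into the IR for one pixel:
    reverse the channel order (BGR -> RGB), then divide by `scale`."""
    b, g, r = bgr
    return (r / scale, g / scale, b / scale)


# A pure-red pixel in OpenCV's BGR order, values 0..255
print(preprocess_pixel((0, 0, 255)))
```

With the PyTorch demo these two steps happen outside the model: the script converts BGR to RGB itself, and the hub wrapper handles normalization.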

5. Run

python yolov5_demo.py -i cam -m weights/ir_model.xml -d CPU

Code:

    import logging
    import os
    import sys
    from argparse import ArgumentParser, SUPPRESS
    from math import exp as exp
    from time import time, sleep

    import numpy as np
    import cv2
    from openvino.inference_engine import IENetwork, IECore

    logging.basicConfig(format="[ %(levelname)s ] %(message)s", level=logging.INFO, stream=sys.stdout)
    log = logging.getLogger()


    def build_argparser():
        parser = ArgumentParser(add_help=False)
        args = parser.add_argument_group('Options')
        args.add_argument('-h', '--help', action='help', default=SUPPRESS, help='Show this help message and exit.')
        args.add_argument("-m", "--model", help="Required. Path to an .xml file with a trained model.",
                          required=True, type=str)
        args.add_argument("-i", "--input", help="Required. Path to an image/video file. (Specify 'cam' to work with "
                                                "camera)", required=True, type=str)
        args.add_argument("-l", "--cpu_extension",
                          help="Optional. Required for CPU custom layers. Absolute path to a shared library with "
                               "the kernels implementations.", type=str, default=None)
        args.add_argument("-d", "--device",
                          help="Optional. Specify the target device to infer on; CPU, GPU, FPGA, HDDL or MYRIAD is"
                               " acceptable. The sample will look for a suitable plugin for device specified. "
                               "Default value is CPU", default="CPU", type=str)
        args.add_argument("-t", "--prob_threshold", help="Optional. Probability threshold for detections filtering",
                          default=0.5, type=float)
        args.add_argument("-iout", "--iou_threshold", help="Optional. Intersection over union threshold for overlapping "
                                                           "detections filtering", default=0.4, type=float)
        return parser

    class YoloParams:
        # ------------------------------------------- Extracting layer parameters ------------------------------------------
        # Magic numbers are copied from yolo samples
        def __init__(self, side):
            self.num = 3  # if 'num' not in param else int(param['num'])
            self.coords = 4  # if 'coords' not in param else int(param['coords'])
            self.classes = 80  # if 'classes' not in param else int(param['classes'])
            self.side = side
            self.anchors = [10.0, 13.0, 16.0, 30.0, 33.0, 23.0, 30.0, 61.0, 62.0, 45.0, 59.0, 119.0, 116.0, 90.0, 156.0,
                            198.0, 373.0, 326.0]  # if 'anchors' not in param else [float(a) for a in param['anchors'].split(',')]

    def letterbox(img, size=(640, 640), color=(114, 114, 114), auto=True, scaleFill=False, scaleup=True):
        # Resize image to a 32-pixel-multiple rectangle https://github.com/ultralytics/yolov3/issues/232
        shape = img.shape[:2]  # current shape [height, width]
        w, h = size

        # Scale ratio (new / old)
        r = min(h / shape[0], w / shape[1])
        if not scaleup:  # only scale down, do not scale up (for better test mAP)
            r = min(r, 1.0)

        # Compute padding
        ratio = r, r  # width, height ratios
        new_unpad = int(round(shape[1] * r)), int(round(shape[0] * r))
        dw, dh = w - new_unpad[0], h - new_unpad[1]  # wh padding
        if auto:  # minimum rectangle
            dw, dh = np.mod(dw, 64), np.mod(dh, 64)  # wh padding
        elif scaleFill:  # stretch
            dw, dh = 0.0, 0.0
            new_unpad = (w, h)
            ratio = w / shape[1], h / shape[0]  # width, height ratios

        dw /= 2  # divide padding into 2 sides
        dh /= 2

        if shape[::-1] != new_unpad:  # resize
            img = cv2.resize(img, new_unpad, interpolation=cv2.INTER_LINEAR)
        top, bottom = int(round(dh - 0.1)), int(round(dh + 0.1))
        left, right = int(round(dw - 0.1)), int(round(dw + 0.1))
        img = cv2.copyMakeBorder(img, top, bottom, left, right, cv2.BORDER_CONSTANT, value=color)  # add border

        # Pad again if the image still does not match the requested size
        top2, bottom2, left2, right2 = 0, 0, 0, 0
        if img.shape[0] != h:
            top2 = (h - img.shape[0]) // 2
            bottom2 = top2
            img = cv2.copyMakeBorder(img, top2, bottom2, left2, right2, cv2.BORDER_CONSTANT, value=color)  # add border
        elif img.shape[1] != w:
            left2 = (w - img.shape[1]) // 2
            right2 = left2
            img = cv2.copyMakeBorder(img, top2, bottom2, left2, right2, cv2.BORDER_CONSTANT, value=color)  # add border
        return img

    def scale_bbox(x, y, height, width, class_id, confidence, im_h, im_w, resized_im_h=640, resized_im_w=640):
        gain = min(resized_im_w / im_w, resized_im_h / im_h)  # gain = old / new
        pad = (resized_im_w - im_w * gain) / 2, (resized_im_h - im_h * gain) / 2  # wh padding
        x = int((x - pad[0]) / gain)
        y = int((y - pad[1]) / gain)

        w = int(width / gain)
        h = int(height / gain)

        xmin = max(0, int(x - w / 2))
        ymin = max(0, int(y - h / 2))
        xmax = min(im_w, int(xmin + w))
        ymax = min(im_h, int(ymin + h))
        # Method item() used here to convert NumPy types to native types for compatibility with functions, which don't
        # support Numpy types (e.g., cv2.rectangle doesn't support int64 in color parameter)
        return dict(xmin=xmin, xmax=xmax, ymin=ymin, ymax=ymax, class_id=class_id.item(), confidence=confidence.item())


    def entry_index(side, coord, classes, location, entry):
        side_power_2 = side ** 2
        n = location // side_power_2
        loc = location % side_power_2
        return int(side_power_2 * (n * (coord + classes + 1) + entry) + loc)

    def parse_yolo_region(blob, resized_image_shape, original_im_shape, params, threshold):
        # ------------------------------------------ Validating output parameters ------------------------------------------
        out_blob_n, out_blob_c, out_blob_h, out_blob_w = blob.shape
        predictions = 1.0 / (1.0 + np.exp(-blob))  # sigmoid over the raw head output

        # ------------------------------------------ Extracting layer parameters -------------------------------------------
        orig_im_h, orig_im_w = original_im_shape
        resized_image_h, resized_image_w = resized_image_shape
        objects = list()
        side_square = params.side * params.side

        # ------------------------------------------- Parsing YOLO Region output -------------------------------------------
        bbox_size = int(out_blob_c / params.num)  # 4+1+num_classes
        index = 0
        for row, col, n in np.ndindex(params.side, params.side, params.num):
            bbox = predictions[0, n * bbox_size:(n + 1) * bbox_size, row, col]

            x, y, width, height, object_probability = bbox[:5]
            class_probabilities = bbox[5:]
            if object_probability < threshold:
                continue
            x = (2 * x - 0.5 + col) * (resized_image_w / out_blob_w)
            y = (2 * y - 0.5 + row) * (resized_image_h / out_blob_h)
            # Pick the anchor group from the stride; `and` replaces the original bitwise `&`,
            # which only worked by accident of operator precedence
            if int(resized_image_w / out_blob_w) == 8 and int(resized_image_h / out_blob_h) == 8:  # 80x80
                idx = 0
            elif int(resized_image_w / out_blob_w) == 16 and int(resized_image_h / out_blob_h) == 16:  # 40x40
                idx = 1
            elif int(resized_image_w / out_blob_w) == 32 and int(resized_image_h / out_blob_h) == 32:  # 20x20
                idx = 2

            width = (2 * width) ** 2 * params.anchors[idx * 6 + 2 * n]
            height = (2 * height) ** 2 * params.anchors[idx * 6 + 2 * n + 1]
            class_id = np.argmax(class_probabilities)
            confidence = object_probability
            objects.append(scale_bbox(x=x, y=y, height=height, width=width, class_id=class_id, confidence=confidence,
                                      im_h=orig_im_h, im_w=orig_im_w, resized_im_h=resized_image_h,
                                      resized_im_w=resized_image_w))
            if index > 30:  # cap the number of candidate boxes kept per output layer
                break
            index += 1
        return objects

    def intersection_over_union(box_1, box_2):
        width_of_overlap_area = min(box_1['xmax'], box_2['xmax']) - max(box_1['xmin'], box_2['xmin'])
        height_of_overlap_area = min(box_1['ymax'], box_2['ymax']) - max(box_1['ymin'], box_2['ymin'])
        if width_of_overlap_area < 0 or height_of_overlap_area < 0:
            area_of_overlap = 0
        else:
            area_of_overlap = width_of_overlap_area * height_of_overlap_area
        box_1_area = (box_1['ymax'] - box_1['ymin']) * (box_1['xmax'] - box_1['xmin'])
        box_2_area = (box_2['ymax'] - box_2['ymin']) * (box_2['xmax'] - box_2['xmin'])
        area_of_union = box_1_area + box_2_area - area_of_overlap
        if area_of_union == 0:
            return 0
        return area_of_overlap / area_of_union

    def main():
        args = build_argparser().parse_args()

        # ------------- 1. Plugin initialization for specified device and load extensions library if specified -------------
        ie = IECore()
        if args.cpu_extension and 'CPU' in args.device:
            ie.add_extension(args.cpu_extension, "CPU")

        # -------------------- 2. Reading the IR generated by the Model Optimizer (.xml and .bin files) --------------------
        model = args.model
        net = ie.read_network(model=model)

        # ---------------------------------------------- 4. Preparing inputs -----------------------------------------------
        input_blob = next(iter(net.input_info))

        # Default batch_size is 1
        net.batch_size = 1

        # Read and pre-process input images
        n, c, h, w = net.input_info[input_blob].input_data.shape

        # labels_map = [x.strip() for x in f]
        labels_map = ['person', 'bicycle', 'car', 'motorcycle', 'airplane', 'bus', 'train', 'truck', 'boat', 'traffic light',
                      'fire hydrant', 'stop sign', 'parking meter', 'bench', 'bird', 'cat', 'dog', 'horse', 'sheep', 'cow',
                      'elephant', 'bear', 'zebra', 'giraffe', 'backpack', 'umbrella', 'handbag', 'tie', 'suitcase', 'frisbee',
                      'skis', 'snowboard', 'sports ball', 'kite', 'baseball bat', 'baseball glove', 'skateboard', 'surfboard',
                      'tennis racket', 'bottle', 'wine glass', 'cup', 'fork', 'knife', 'spoon', 'bowl', 'banana', 'apple',
                      'sandwich', 'orange', 'broccoli', 'carrot', 'hot dog', 'pizza', 'donut', 'cake', 'chair', 'couch',
                      'potted plant', 'bed', 'dining table', 'toilet', 'tv', 'laptop', 'mouse', 'remote', 'keyboard', 'cell phone',
                      'microwave', 'oven', 'toaster', 'sink', 'refrigerator', 'book', 'clock', 'vase', 'scissors', 'teddy bear',
                      'hair drier', 'toothbrush']

        input_stream = 0 if args.input == "cam" else args.input

        is_async_mode = True
        cap = cv2.VideoCapture(input_stream)
        number_input_frames = int(cap.get(cv2.CAP_PROP_FRAME_COUNT))
        number_input_frames = 1 if number_input_frames != -1 and number_input_frames < 0 else number_input_frames

        wait_key_code = 1

        # Number of frames in picture is 1 and this will be read in cycle. Sync mode is default value for this case
        if number_input_frames != 1:
            ret, frame = cap.read()
        else:
            is_async_mode = False
            wait_key_code = 0

        # ----------------------------------------- 5. Loading model to the plugin -----------------------------------------
        exec_net = ie.load_network(network=net, num_requests=2, device_name=args.device)

        cur_request_id = 0
        next_request_id = 1
        render_time = 0
        parsing_time = 0

        # ----------------------------------------------- 6. Doing inference -----------------------------------------------
        initial_w = int(cap.get(cv2.CAP_PROP_FRAME_WIDTH))
        initial_h = int(cap.get(cv2.CAP_PROP_FRAME_HEIGHT))
        origin_im_size = (initial_h, initial_w)
        while cap.isOpened():
            # Here is the first asynchronous point: in the Async mode, we capture frame to populate the NEXT infer request
            # in the regular mode, we capture frame to the CURRENT infer request
            if is_async_mode:
                ret, next_frame = cap.read()
            else:
                ret, frame = cap.read()

            if not ret:
                break

            if is_async_mode:
                request_id = next_request_id
                in_frame = letterbox(frame, (w, h))
            else:
                request_id = cur_request_id
                in_frame = letterbox(frame, (w, h))

            in_frame0 = in_frame
            # resize input_frame to network size
            in_frame = in_frame.transpose((2, 0, 1))  # Change data layout from HWC to CHW
            in_frame = in_frame.reshape((n, c, h, w))

            # Start inference
            start_time = time()
            exec_net.start_async(request_id=request_id, inputs={input_blob: in_frame})

            # Collecting object detection results
            objects = list()
            if exec_net.requests[cur_request_id].wait(-1) == 0:
                output = exec_net.requests[cur_request_id].output_blobs
                start_time = time()
                for layer_name, out_blob in output.items():
                    layer_params = YoloParams(side=out_blob.buffer.shape[2])
                    objects += parse_yolo_region(out_blob.buffer, in_frame.shape[2:],
                                                 frame.shape[:-1], layer_params,
                                                 args.prob_threshold)
                parsing_time = time() - start_time

            # Filtering overlapping boxes with respect to the --iou_threshold CLI parameter
            objects = sorted(objects, key=lambda obj: obj['confidence'], reverse=True)
            for i in range(len(objects)):
                if objects[i]['confidence'] == 0:
                    continue
                for j in range(i + 1, len(objects)):
                    if intersection_over_union(objects[i], objects[j]) > args.iou_threshold:
                        objects[j]['confidence'] = 0

            # Drawing objects with respect to the --prob_threshold CLI parameter
            objects = [obj for obj in objects if obj['confidence'] >= args.prob_threshold]

            for obj in objects:
                # Validation bbox of detected object
                if obj['xmax'] > origin_im_size[1] or obj['ymax'] > origin_im_size[0] or obj['xmin'] < 0 or obj['ymin'] < 0:
                    continue
                color = (0, 255, 0)
                # Strict `>` so that class_id == len(labels_map) cannot index past the end of the list
                det_label = labels_map[obj['class_id']] if labels_map and len(labels_map) > obj['class_id'] else \
                    str(obj['class_id'])

                cv2.rectangle(frame, (obj['xmin'], obj['ymin']), (obj['xmax'], obj['ymax']), color, 2)
                cv2.putText(frame,
                            "#" + det_label + ' ' + str(round(obj['confidence'] * 100, 1)) + ' %',
                            (obj['xmin'], obj['ymin'] - 7), cv2.FONT_ITALIC, 1, color, 2)

            # Draw performance stats over frame
            async_mode_message = "Async mode: ON" if is_async_mode else "Async mode: OFF"
            cv2.putText(frame, async_mode_message, (10, int(origin_im_size[0] - 20)), cv2.FONT_ITALIC, 1,
                        (10, 10, 200), 2)

            fps_time = time() - start_time
            if fps_time != 0:
                fps = 1 / fps_time
                cv2.putText(frame, 'fps:' + str(round(fps, 2)), (50, 50), cv2.FONT_ITALIC, 1, (0, 255, 0), 2)

            cv2.imshow("DetectionResults", frame)

            if is_async_mode:
                cur_request_id, next_request_id = next_request_id, cur_request_id
                frame = next_frame

            key = cv2.waitKey(wait_key_code)

            # ESC key
            if key == 27:
                break
            # Tab key
            if key == 9:
                exec_net.requests[cur_request_id].wait()
                is_async_mode = not is_async_mode
                log.info("Switched to {} mode".format("async" if is_async_mode else "sync"))

        cap.release()  # free the camera or video file
        cv2.destroyAllWindows()


    if __name__ == '__main__':
        sys.exit(main() or 0)
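The box decoding inside parse_yolo_region is the part most specific to YOLOv5, so it may help to see it in isolation. The sketch below decodes a single grid cell in pure Python using the same formulas and anchor values as the script; the names `ANCHORS` and `decode_cell` are hypothetical, and looking anchors up by stride in a dict is a stylistic choice of this example, not the script's approach.

```python
import math

# Anchor (width, height) pairs per stride, same values as YoloParams.anchors
ANCHORS = {
    8: [(10, 13), (16, 30), (33, 23)],         # 80x80 grid for a 640x640 input
    16: [(30, 61), (62, 45), (59, 119)],       # 40x40 grid
    32: [(116, 90), (156, 198), (373, 326)],   # 20x20 grid
}


def sigmoid(v):
    return 1.0 / (1.0 + math.exp(-v))


def decode_cell(raw, row, col, n, stride):
    """Decode raw (tx, ty, tw, th) for anchor `n` at grid cell (row, col)
    into a (centre_x, centre_y, width, height) box in network-input pixels."""
    tx, ty, tw, th = (sigmoid(v) for v in raw)
    anchor_w, anchor_h = ANCHORS[stride][n]
    cx = (2 * tx - 0.5 + col) * stride
    cy = (2 * ty - 0.5 + row) * stride
    w = (2 * tw) ** 2 * anchor_w
    h = (2 * th) ** 2 * anchor_h
    return cx, cy, w, h


# Raw zeros give sigmoid 0.5: the box sits at the cell centre with the anchor's size
print(decode_cell((0.0, 0.0, 0.0, 0.0), row=2, col=3, n=1, stride=16))
# -> (56.0, 40.0, 62.0, 45.0)
```

The result is still in the letterboxed 640x640 coordinate system; scale_bbox in the script is what maps it back to the original frame.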

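III. Summary

With OpenVINO acceleration on a CPU-only machine (no GPU), the frame rate rose from roughly 20 FPS to more than 50 FPS, which is a decent result. The one weak point is that the model I trained myself does not detect very well.

OpenVINO also gives reasonable results on embedded boards such as the Raspberry Pi.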
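For context, going from about 20 FPS to a bit over 50 FPS means the time per frame drops from about 50 ms to under 20 ms. One way to compare two back ends on equal terms is to time a fixed number of inference calls after a short warm-up. The helper below is a sketch under that assumption; `measure_fps` and `fake_infer` are hypothetical names, and `fake_infer` is only a stand-in workload, not a real model call.

```python
import time


def measure_fps(infer, n_frames=50, warmup=5):
    """Call `infer()` repeatedly and return the average number of calls per second."""
    for _ in range(warmup):          # warm-up calls are not timed
        infer()
    start = time.perf_counter()
    for _ in range(n_frames):
        infer()
    elapsed = time.perf_counter() - start
    return n_frames / elapsed if elapsed > 0 else float("inf")


def fake_infer():
    # Stand-in workload (assumption): swap in the PyTorch or OpenVINO inference call
    sum(i * i for i in range(1000))


print(round(measure_fps(fake_infer), 1))
```

Timing only the inference call keeps camera capture and drawing out of the measurement, so the number will differ from the on-screen FPS in the demos.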

If this article infringes any rights, or if you need the complete code, please contact the author.


Original article: https://blog.csdn.net/weixin_38807927/article/details/132796457