-

Kafka3.0.0版本——消费者(Range分区分配策略以及再平衡)

一、Range分区分配策略原理

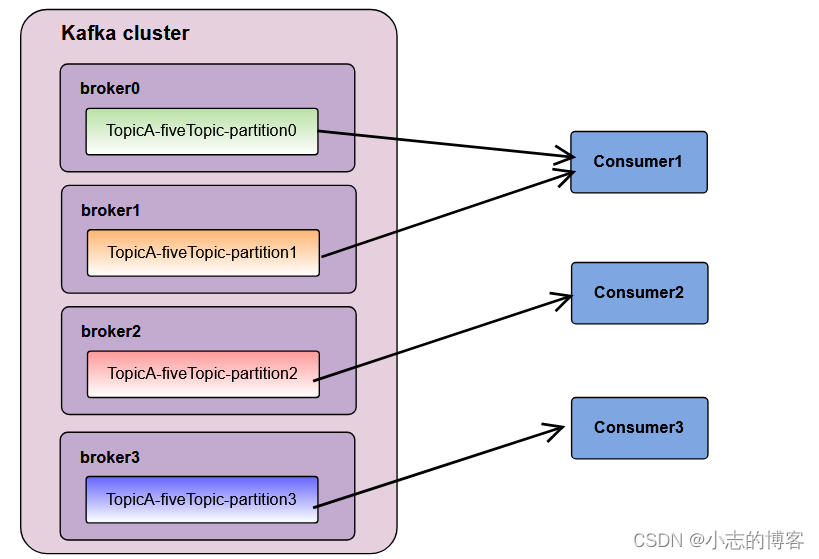

- Range 是对每个 topic 而言的。首先对同一个 topic 里面的分区按照序号进行排序,并对消费者按照字母顺序进行排序。

1.1、Range分区分配策略原理的示例一

假如现在有 4 个分区,3 个消费者,排序后的分区将会是0,1,2,3;消费者排序完之后将会是C1,C2,C3。

- 通过 partitions数/consumer数 来决定每个消费者应该消费几个分区。 如果除不尽,那么前面几个消费者将会多消费 1 个分区。

- 例如:4/3 = 1 余 1 ,除不尽,那么消费者C1便会多消费1个分区。

1.2、Range分区分配策略原理的示例二

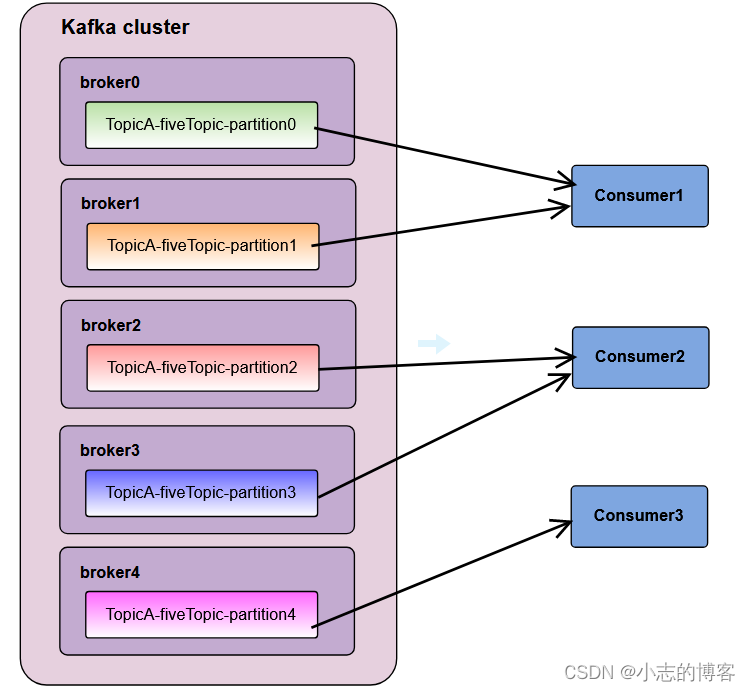

假如现在有 5 个分区,3 个消费者,排序后的分区将会是0,1,2,3,4;消费者排序完之后将会是C1,C2,C3。

- 通过 partitions数/consumer数 来决定每个消费者应该消费几个分区。 如果除不尽,那么前面几个消费者将会多消费 1 个分区。

- 例如:5/3 = 1 余 2 ,除不尽,那么消费者么C1和C2分别多消费一个分区。

1.3、Range分区分配策略原理的示例注意事项

- 如果只是针对 1 个 topic 而言,C1消费者多消费1个分区影响不是很大。但是如果有N多个topic,那么针对每个 topic,消费者 C1都将多消费 1 个分区,topic越多,C1消费的分区会比其他消费者明显多消费 N 个分区。 容易产生数据倾斜!

二、Range 分区分配策略代码案例

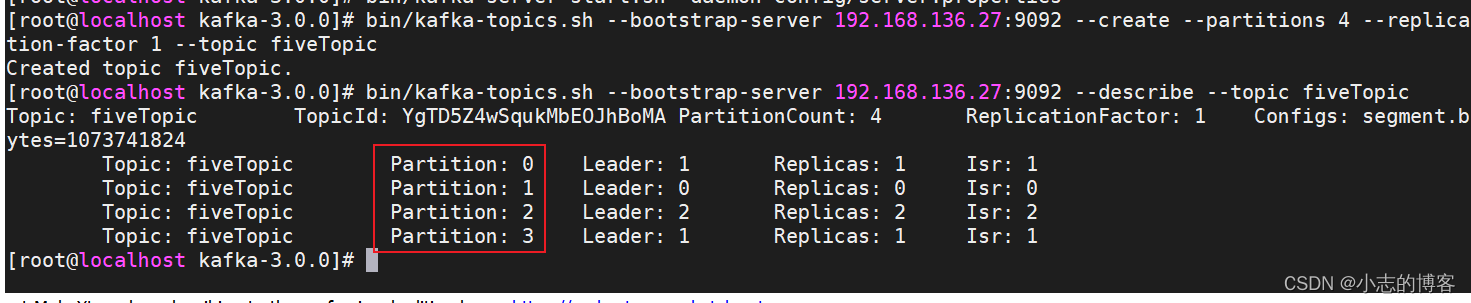

2.1、创建带有4个分区的fiveTopic主题

-

在 Kafka 集群控制台,创建带有4个分区的fiveTopic主题

bin/kafka-topics.sh --bootstrap-server 192.168.136.27:9092 --create --partitions 4 --replication-factor 1 --topic fiveTopic- 1

2.2、创建三个消费者 组成 消费者组

-

复制 CustomConsumer1类,创建 CustomConsumer2和CustomConsumer3。这样可以由三个消费者组成消费者组,组名都为“test”。

package com.xz.kafka.consumer; import org.apache.kafka.clients.consumer.ConsumerConfig; import org.apache.kafka.clients.consumer.ConsumerRecord; import org.apache.kafka.clients.consumer.ConsumerRecords; import org.apache.kafka.clients.consumer.KafkaConsumer; import org.apache.kafka.common.serialization.StringDeserializer; import java.time.Duration; import java.util.ArrayList; import java.util.Properties; public class CustomConsumer1 { public static void main(String[] args) { // 0 配置 Properties properties = new Properties(); // 连接 bootstrap.servers properties.put(ConsumerConfig.BOOTSTRAP_SERVERS_CONFIG,"192.168.136.27:9092,192.168.136.28:9092,192.168.136.29:9092"); // 反序列化 properties.put(ConsumerConfig.KEY_DESERIALIZER_CLASS_CONFIG, StringDeserializer.class.getName()); properties.put(ConsumerConfig.VALUE_DESERIALIZER_CLASS_CONFIG, StringDeserializer.class.getName()); // 配置消费者组id properties.put(ConsumerConfig.GROUP_ID_CONFIG,"test"); // 1 创建一个消费者 "", "hello" KafkaConsumer<String, String> kafkaConsumer = new KafkaConsumer<>(properties); // 2 订阅主题 first ArrayList<String> topics = new ArrayList<>(); topics.add("fiveTopic"); kafkaConsumer.subscribe(topics); // 3 消费数据 while (true){ ConsumerRecords<String, String> consumerRecords = kafkaConsumer.poll(Duration.ofSeconds(1)); for (ConsumerRecord<String, String> consumerRecord : consumerRecords) { System.out.println(consumerRecord); } } } }- 1

- 2

- 3

- 4

- 5

- 6

- 7

- 8

- 9

- 10

- 11

- 12

- 13

- 14

- 15

- 16

- 17

- 18

- 19

- 20

- 21

- 22

- 23

- 24

- 25

- 26

- 27

- 28

- 29

- 30

- 31

- 32

- 33

- 34

- 35

- 36

- 37

- 38

- 39

- 40

- 41

- 42

- 43

- 44

- 45

- 46

- 47

- 48

2.3、创建生产者

-

创建CustomProducer生产者。

package com.xz.kafka.producer; import org.apache.kafka.clients.producer.*; import org.apache.kafka.common.serialization.StringSerializer; import java.util.Properties; public class CustomProducerCallback { public static void main(String[] args) throws InterruptedException { //1、创建 kafka 生产者的配置对象 Properties properties = new Properties(); //2、给 kafka 配置对象添加配置信息:bootstrap.servers properties.put(ProducerConfig.BOOTSTRAP_SERVERS_CONFIG,"192.168.136.27:9092,192.168.136.28:9092,192.168.136.29:9092"); //3、指定对应的key和value的序列化类型 key.serializer value.serializer properties.put(ProducerConfig.KEY_SERIALIZER_CLASS_CONFIG, StringSerializer.class.getName()); properties.put(ProducerConfig.VALUE_SERIALIZER_CLASS_CONFIG,StringSerializer.class.getName()); //4、创建 kafka 生产者对象 KafkaProducer<String, String> kafkaProducer = new KafkaProducer<>(properties); //5、调用 send 方法,发送消息 for (int i = 0; i < 200; i++) { kafkaProducer.send(new ProducerRecord<>("fiveTopic", "hello kafka" + i), new Callback() { @Override public void onCompletion(RecordMetadata metadata, Exception exception) { if (exception == null){ System.out.println("主题: "+metadata.topic() + " 分区: "+ metadata.partition()); } } }); Thread.sleep(2); } // 3 关闭资源 kafkaProducer.close(); } }- 1

- 2

- 3

- 4

- 5

- 6

- 7

- 8

- 9

- 10

- 11

- 12

- 13

- 14

- 15

- 16

- 17

- 18

- 19

- 20

- 21

- 22

- 23

- 24

- 25

- 26

- 27

- 28

- 29

- 30

- 31

- 32

- 33

- 34

- 35

- 36

- 37

- 38

- 39

- 40

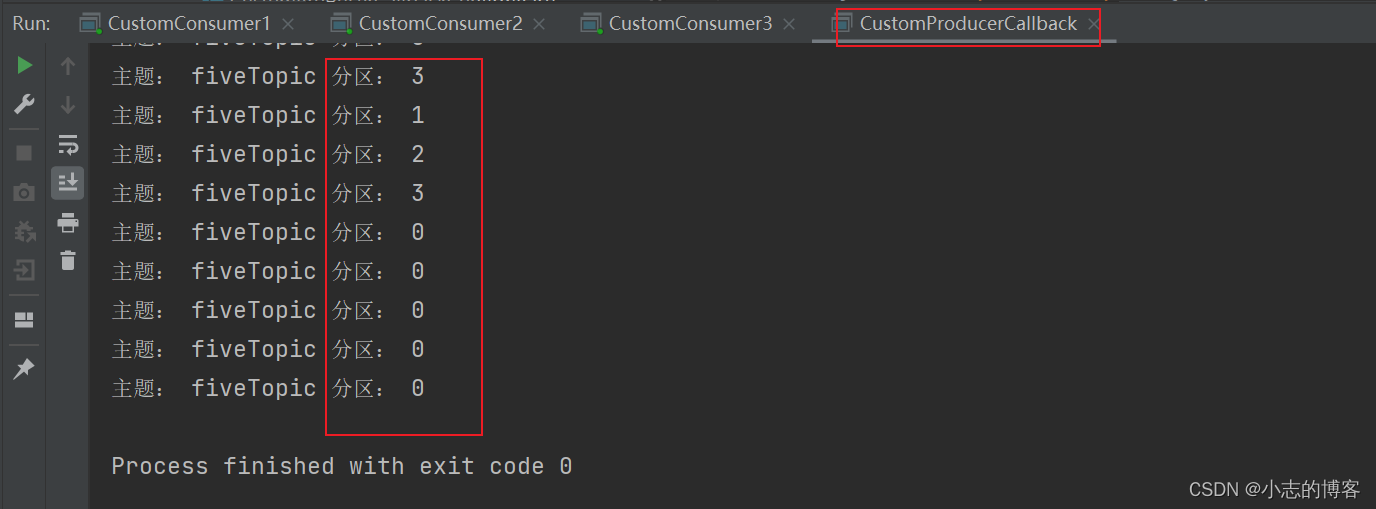

2.4、测试

-

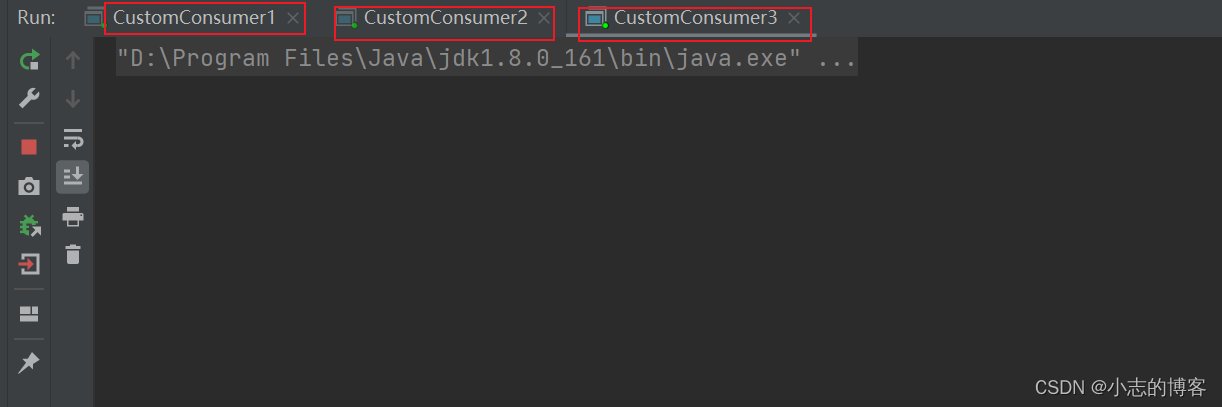

首先,在 IDEA中分别启动消费者1、消费者2和消费者3代码

-

然后,在 IDEA中分别启动生产者代码

-

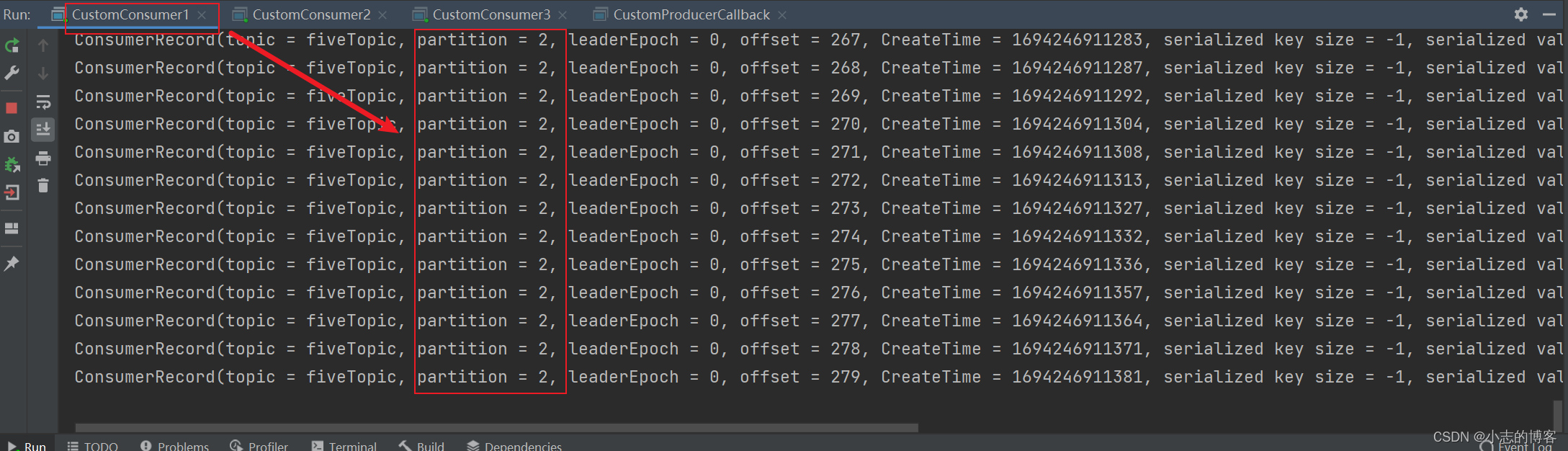

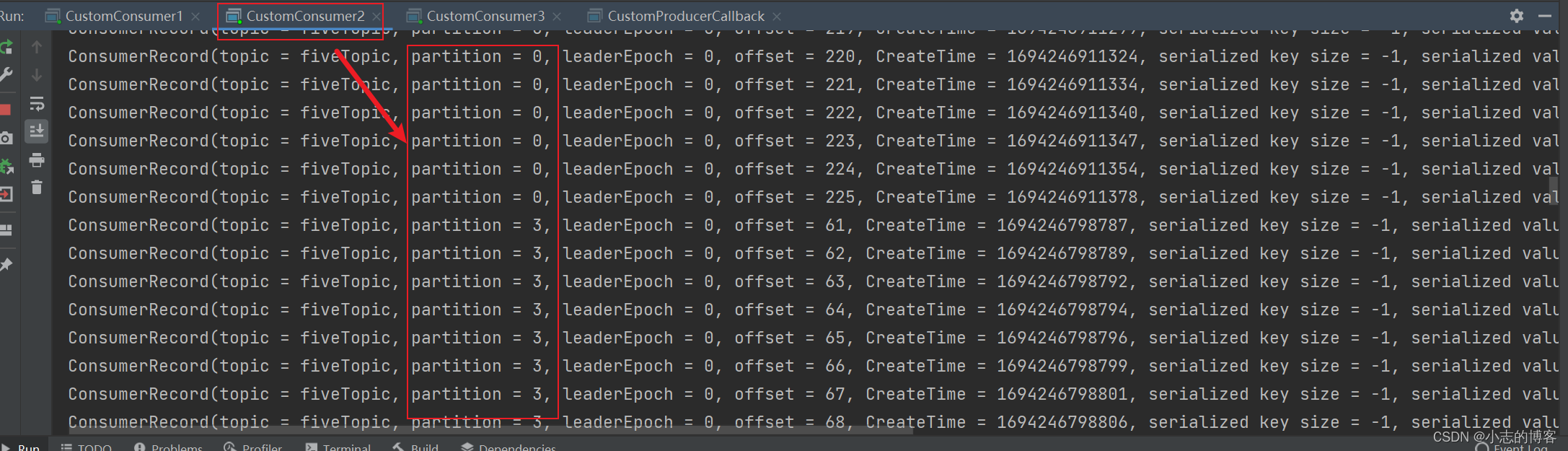

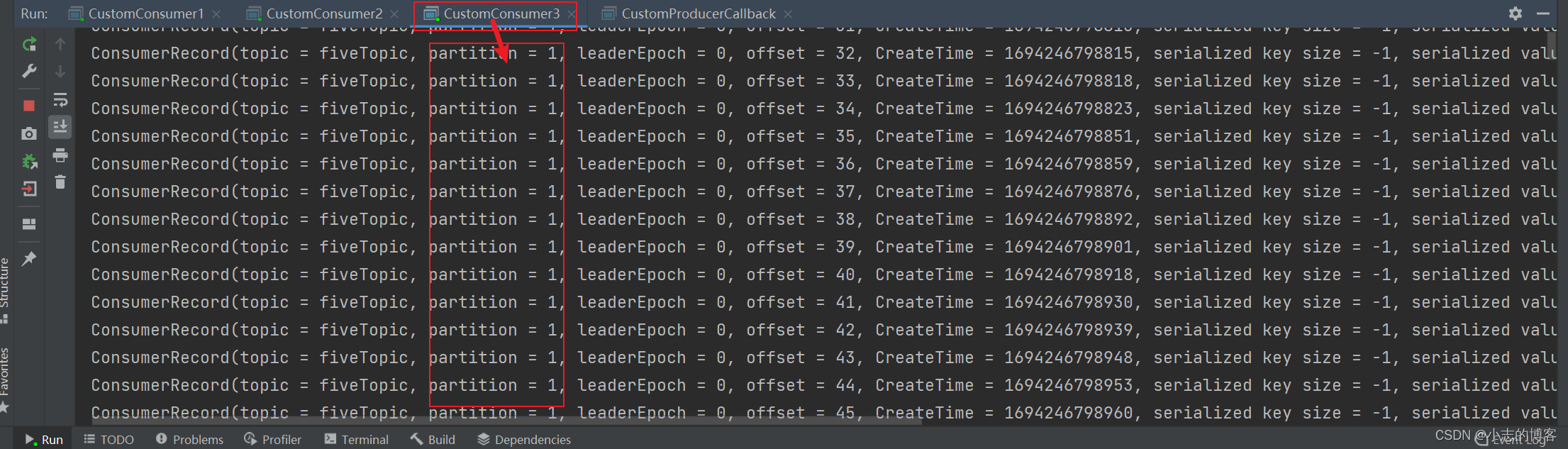

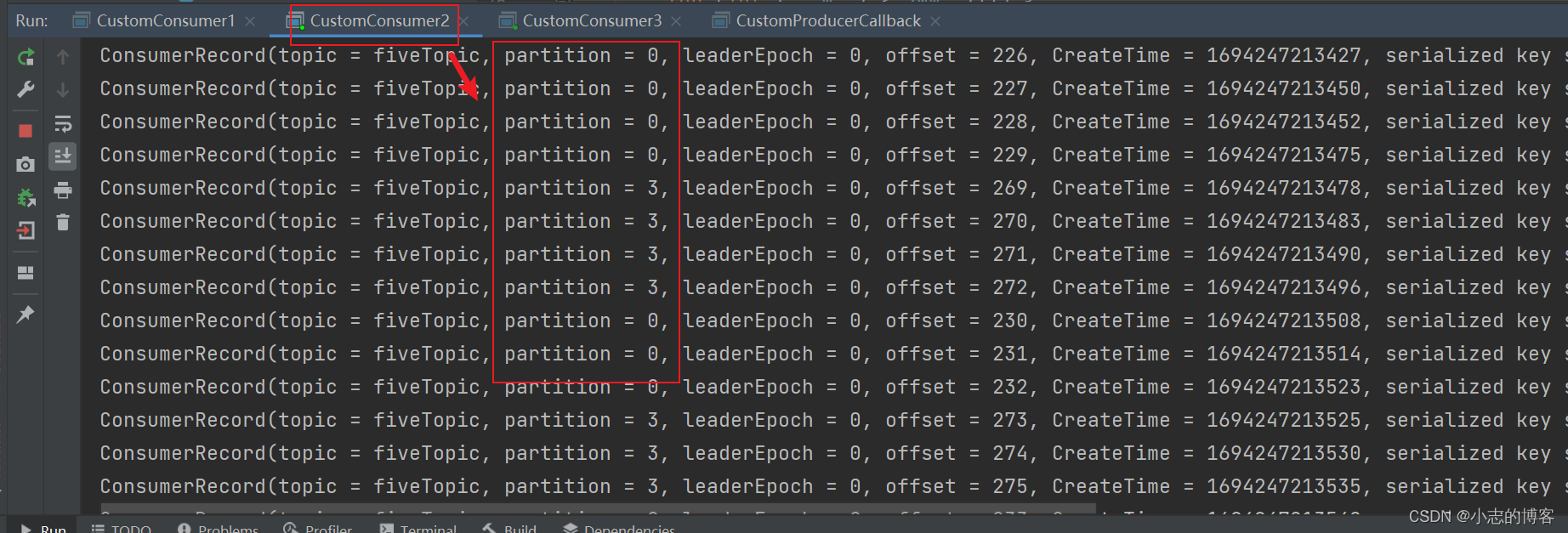

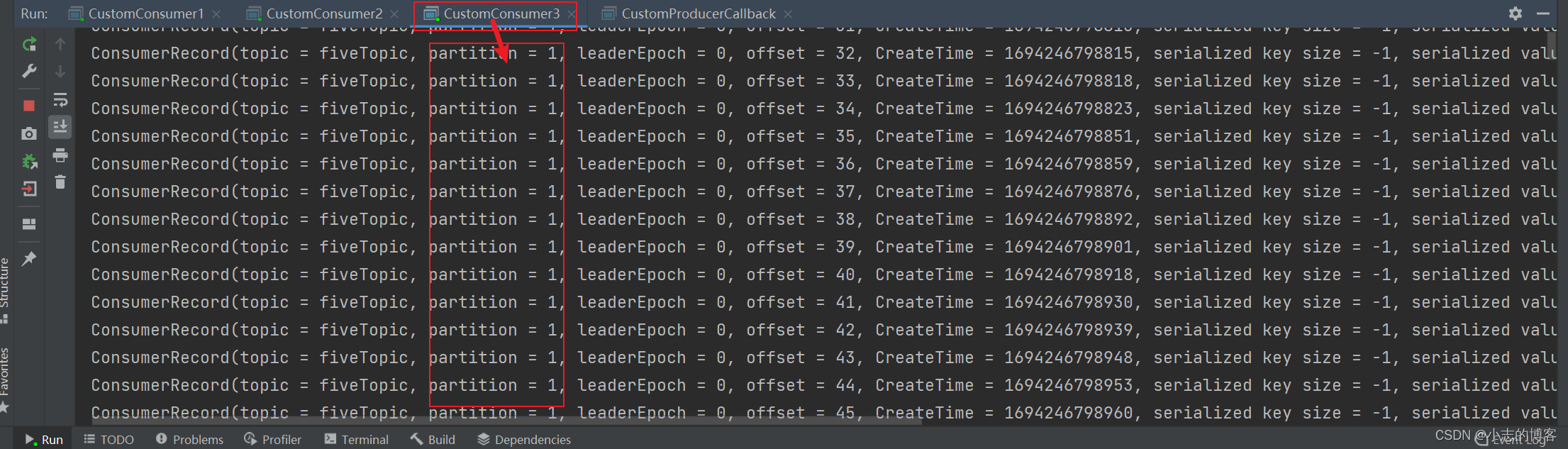

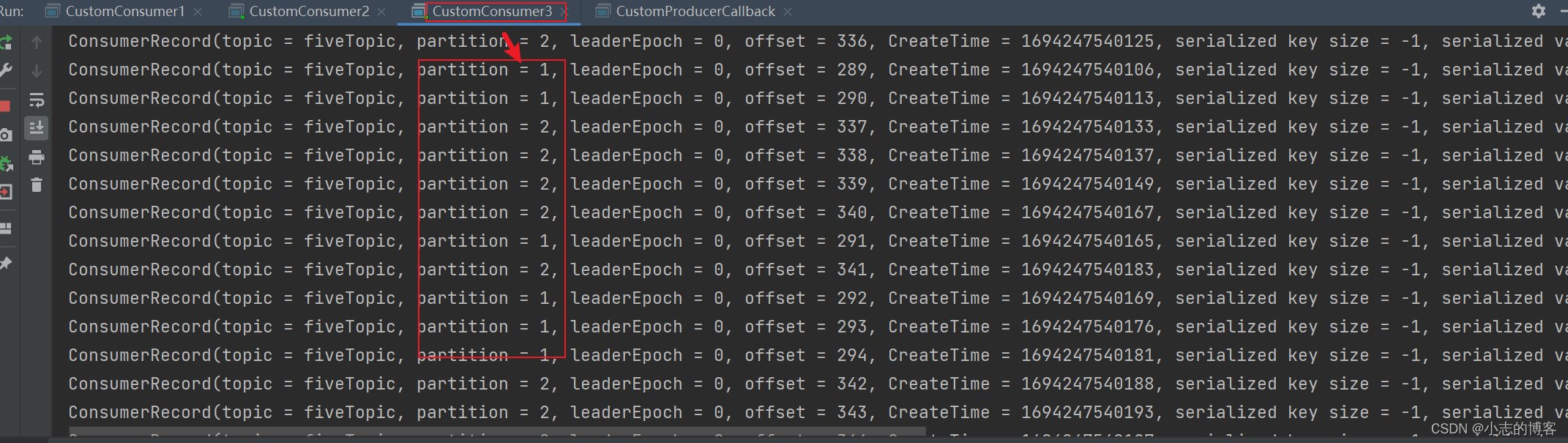

在 IDEA 控制台观察消费者1、消费者2和消费者3控制台接收到的数据,如下图所示:

2.5、Range 分区分配策略代码案例说明

- 由上述测试输出结果截图可知: 消费者1消费2分区的数据;消费者2消费0和3分区的数据;消费者3消费2分区的数据。

- 说明:Kafka 默认的分区分配策略就是 Range + CooperativeSticky,所以不需要修改策略。

三、Range 分区分配再平衡案例

3.1、停止某一个消费者后,(45s 以内)重新发送消息示例

- 由下图控制台输出可知:2号消费者 消费到 0、3号分区数据。

- 由下图控制台输出可知:3号消费者 消费到 1号分区数据。

3.2、停止某一个消费者后,(45s 以后)重新发送消息示例

- 由下图控制台输出可知:2号消费者 消费到 0、3号分区数据。

- 由下图控制台输出可知:3号消费者 消费到 1、2号分区数据。

3.3、Range 分区分配再平衡案例说明

- 1号消费者挂掉后,消费者组需要按照超时时间 45s 来判断它是否退出,所以需要等待,时间到了 45s 后,判断它真的退出就会把任务分配给其他 broker 执行。

- 消费者1 已经被踢出消费者组,所以重新按照 range 方式分配。

-

相关阅读:

华为机试 - 勾股数元组

【Python+Appium】自动化测试(十一)location与size获取元素坐标

【数据结构】基础:栈(C语言)

PostgreSQL 索引优化与性能调优(十一)

API 文档搜索引擎

LeetCode刷题系列 -- 32. 最长有效括号

scp -r ./dist root@你的IP:/root/www/website/解释

高斯线性模型

入门力扣自学笔记147 C++ (题目编号1598)

Python---数据序列类型之间的相互转换

- 原文地址:https://blog.csdn.net/li1325169021/article/details/132778350