
TensorFlow 2.x -------------------- DenseNet --------------------

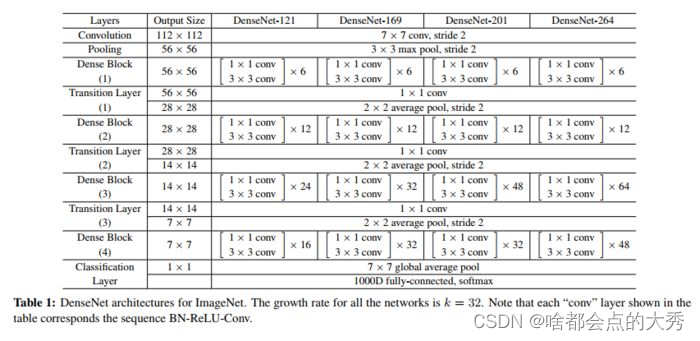

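This post implements DenseNet-121 in TensorFlow 2.4, trained on the ImageNet dataset with 224x224 input images. The network uses the DenseNet-BC structure with 4 DenseBlocks. Each DenseBlock is made of several Dense_layers of the form BN+ReLU+1x1 Conv+BN+ReLU+3x3 Conv. Every Dense_layer produces growth_rate (32 here) new channels, which are concatenated with all of its inputs along the channel axis. The feature maps inside the four DenseBlocks are 56x56, 28x28, 14x14 and 7x7, and the blocks contain [6,12,24,16] Dense_layers respectively. Each of the first three DenseBlocks is followed by a Transition module made of a 1x1 convolution and a 2x2 AveragePooling (BN+ReLU+1x1 Conv+2x2 AveragePooling); the last DenseBlock is followed by global average pooling and the fully connected classifier instead. The Transition module also compresses the model: if the DenseBlock before it outputs m channels, the Transition layer produces m*n channels, where n is the compression rate. With n=1 the number of channels is unchanged, i.e. there is no compression; the paper uses n=0.5. The detailed network structure is shown in the figure.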
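To make the channel arithmetic above concrete, here is a small pure-Python sketch (no TensorFlow needed) that traces the channel counts through DenseNet-121. It assumes the settings used in the code: 64 channels after the first convolution, growth rate 32, block sizes [6,12,24,16] and compression rate n = 0.5. The helper names `trace_channels` and `count_weighted_layers` are hypothetical, chosen only for illustration.

```python
def trace_channels(block_list=(6, 12, 24, 16), growth_rate=32,
                   init_channels=64, compression_rate=0.5):
    """Return (stage name, output channels) for every DenseBlock and Transition."""
    channels = init_channels
    trace = []
    for i, num_layers in enumerate(block_list, start=1):
        # every Dense_layer concatenates growth_rate new channels onto its input
        channels += num_layers * growth_rate
        trace.append((f"DenseBlock{i}", channels))
        if i < len(block_list):
            # Transition after all but the last block: m channels in -> int(m * n) out
            channels = int(channels * compression_rate)
            trace.append((f"Transition{i}", channels))
    return trace


def count_weighted_layers(block_list=(6, 12, 24, 16)):
    """First conv + 2 convs per Dense_layer + 1 conv per Transition + final Dense layer."""
    return 1 + 2 * sum(block_list) + (len(block_list) - 1) + 1


for stage, channels in trace_channels():
    print(stage, channels)
print("weighted layers:", count_weighted_layers())
```

The last DenseBlock ends with 1024 channels, which is what the global average pooling and the 1000-way classifier see, and the weighted-layer count comes out to 1 + 116 + 3 + 1 = 121, the number in the model's name. Calling `trace_channels(compression_rate=1.0)` shows the uncompressed case, where each Transition keeps its block's channel count.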

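One thing to note: the first convolution of DenseNet (7x7, stride 2) uses a padding of 3, i.e. 3 rows and 3 columns of zeros are added on each side of the input. The padding argument of Conv2D only accepts 'same' and 'valid', so the input has to be padded separately with ZeroPadding2D() from tensorflow.keras.layers and then passed to a 'valid' convolution. Specifically: `self.padding = ZeroPadding2D(((3, 3), (3, 3)))`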
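TensorFlow's own 'same' padding does not produce this border. As a minimal sketch of the rule documented for TensorFlow convolutions (output size ceil(in/s), total padding max((out - 1)*s + k - in, 0), with any odd pixel going to the bottom/right), the hypothetical helper `same_padding` below shows that 'same' would also give 112x112 for the first convolution, but with 2 pixels on the top/left and 3 on the bottom/right instead of 3 on every side.

```python
import math


def same_padding(in_size, kernel, stride):
    """Padding TensorFlow applies for padding='same' along one spatial axis.

    Returns (output size, pad before, pad after).
    """
    out_size = math.ceil(in_size / stride)
    pad_total = max((out_size - 1) * stride + kernel - in_size, 0)
    pad_before = pad_total // 2          # top / left
    pad_after = pad_total - pad_before   # bottom / right receives the extra pixel
    return out_size, pad_before, pad_after


# First DenseNet conv: 7x7 kernel, stride 2, 224x224 input
print(same_padding(224, kernel=7, stride=2))  # (112, 2, 3)
```

Padding explicitly with ZeroPadding2D(((3, 3), (3, 3))) and then using a 'valid' 7x7 convolution keeps the symmetric 3-pixel border of the original DenseNet.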

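This can be checked with the output-size formula out = floor((in + p_total - k) / s) + 1: with in = 224, k = 7, s = 2 and p_total = 3 + 3 = 6, the first convolution outputs floor(223 / 2) + 1 = 112, and the following 3x3, stride-2 max pooling reduces this to 56, the feature-map size of the first DenseBlock.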
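The same formula can be applied stage by stage. The sketch below (hypothetical helper names `valid_out` and `block_resolutions`) assumes 3 pixels of zero padding per side before the 7x7/stride-2 convolution, a 3x3/stride-2 max pooling with padding='same' (output ceil(in/2)), and a 2x2/stride-2 average pooling in each Transition.

```python
import math


def valid_out(in_size, kernel, stride, pad_total=0):
    """floor((in + p_total - k) / s) + 1: output size of a 'valid' conv/pooling window."""
    return (in_size + pad_total - kernel) // stride + 1


def block_resolutions(in_size=224, num_transitions=3):
    """Return the first-conv output size and the spatial size seen by each DenseBlock."""
    stem = valid_out(in_size, kernel=7, stride=2, pad_total=6)  # pad 3 per side, 7x7/2 conv
    size = math.ceil(stem / 2)  # 3x3/2 max pooling with padding='same'
    sizes = [size]
    for _ in range(num_transitions):
        # DenseBlocks keep H and W (1x1 conv, 3x3 'same' conv); only the
        # 2x2/2 average pooling inside each Transition halves them
        size = valid_out(size, kernel=2, stride=2)
        sizes.append(size)
    return stem, sizes


print(block_resolutions())  # (112, [56, 28, 14, 7])
```

For comparison, with only 3 pixels of padding in total (for example split 2 + 1), `valid_out(224, 7, 2, pad_total=3)` returns 111, which the 'same' max pooling still rounds up to 56, so the DenseBlock sizes alone do not reveal the difference.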

The full code is as follows:

```python
import tensorflow as tf
from tensorflow.keras import Model
from tensorflow.keras.layers import (Activation, AveragePooling2D, BatchNormalization,
                                     Conv2D, Dense, GlobalAveragePooling2D,
                                     MaxPooling2D, ZeroPadding2D)


class DenseLayer(Model):
    """BN-ReLU-1x1 Conv (bottleneck) + BN-ReLU-3x3 Conv; outputs growth_rate channels."""

    def __init__(self, bottleneck_size, growth_rate):
        super().__init__()
        self.filters = growth_rate
        self.bottleneck_size = bottleneck_size
        self.b1 = BatchNormalization()
        self.a1 = Activation('relu')
        self.c1 = Conv2D(filters=self.bottleneck_size, kernel_size=(1, 1), strides=1)
        self.b2 = BatchNormalization()
        self.a2 = Activation('relu')
        # the 3x3 conv emits growth_rate new channels
        self.c2 = Conv2D(filters=self.filters, kernel_size=(3, 3), strides=1, padding='same')

    def call(self, x):
        x = self.b1(x)
        x = self.a1(x)
        x = self.c1(x)
        x = self.b2(x)
        x = self.a2(x)
        y = self.c2(x)
        return y


class DenseBlock(Model):
    def __init__(self, dense_layers_num, growth_rate):
        # dense_layers_num: number of DenseLayers in this DenseBlock
        # growth_rate: number of channels each DenseLayer adds
        super().__init__()
        self.dense_layers_num = dense_layers_num
        self.dense_layers = []
        bottleneck_size = 4 * growth_rate  # the 1x1 conv outputs 4 * growth_rate channels
        for _ in range(dense_layers_num):
            self.dense_layers.append(DenseLayer(bottleneck_size, growth_rate))

    def call(self, inputs):
        features = [inputs]
        for layer in self.dense_layers:
            # each layer sees all preceding feature maps, concatenated on the
            # channel axis (axis=-1 in NHWC; axis=2 would be the width axis)
            output = layer(tf.concat(features, axis=-1))
            features.append(output)
        return tf.concat(features, axis=-1)


class Transition(Model):
    """BN-ReLU-1x1 Conv-2x2 AveragePooling: compresses channels, halves H and W."""

    def __init__(self, filters):
        super().__init__()
        self.b = BatchNormalization()
        self.a = Activation('relu')
        self.c = Conv2D(filters=filters, kernel_size=(1, 1), strides=1)
        self.p = AveragePooling2D(pool_size=(2, 2), strides=2)

    def call(self, x):
        x = self.b(x)
        x = self.a(x)
        x = self.c(x)
        y = self.p(x)
        return y


class DenseNet(Model):
    def __init__(self, block_list=(6, 12, 24, 16), compression_rate=0.5, filters=64):
        super().__init__()
        growth_rate = 32
        # pad 3 pixels on every side so the 'valid' 7x7/stride-2 conv maps 224 -> 112
        self.padding = ZeroPadding2D(((3, 3), (3, 3)))
        self.c1 = Conv2D(filters=filters, kernel_size=(7, 7), strides=2, padding='valid')
        self.b1 = BatchNormalization()
        self.a1 = Activation('relu')
        self.p1 = MaxPooling2D(pool_size=(3, 3), strides=2, padding='same')  # 112 -> 56

        self.blocks = tf.keras.models.Sequential()
        num_channels = filters  # channels entering the current DenseBlock
        for i, layers_in_block in enumerate(block_list):
            self.blocks.add(DenseBlock(layers_in_block, growth_rate))
            num_channels += layers_in_block * growth_rate
            if i < len(block_list) - 1:
                # Transition after every block except the last; Conv2D needs an int
                num_channels = int(num_channels * compression_rate)
                self.blocks.add(Transition(filters=num_channels))

        # final BN + ReLU after the last DenseBlock, as in the reference DenseNet-BC
        self.b2 = BatchNormalization()
        self.a2 = Activation('relu')
        self.p2 = GlobalAveragePooling2D()
        self.d2 = Dense(1000, activation='softmax')

    def call(self, x):
        x = self.padding(x)
        x = self.c1(x)
        x = self.b1(x)
        x = self.a1(x)
        x = self.p1(x)
        x = self.blocks(x)
        x = self.b2(x)
        x = self.a2(x)
        x = self.p2(x)
        y = self.d2(x)
        return y


model = DenseNet()
model.build(input_shape=(None, 224, 224, 3))
model.summary()
```

- 2

- 3

- 4

- 5

- 6

- 7

- 8

- 9

- 10

- 11

- 12

- 13

- 14

- 15

- 16

- 17

- 18

- 19

- 20

- 21

- 22

- 23

- 24

- 25

- 26

- 27

- 28

- 29

- 30

- 31

- 32

- 33

- 34

- 35

- 36

- 37

- 38

- 39

- 40

- 41

- 42

- 43

- 44

- 45

- 46

- 47

- 48

- 49

- 50

- 51

- 52

- 53

- 54

- 55

- 56

- 57

- 58

- 59

- 60

- 61

- 62

- 63

- 64

- 65

- 66

- 67

- 68

- 69

- 70

- 71

- 72

- 73

- 74

- 75

- 76

- 77

- 78

- 79

- 80

- 81

- 82

- 83

- 84

- 85

- 86

- 87

- 88

-

相关阅读:

智能感测型静电消除器通常具备哪些特点

思科C9300交换机Bundle模式转换为Install模式

第2-4-10章 规则引擎Drools实战(3)-保险产品准入规则

1500*C. Journey(dfs&树的遍历&数学期望)

shell脚本练习及小总结

maven基础

阿里自爆秋招面试笔记,福音来了

REGEXP函数正则表达式

【Nginx】实战应用(服务器端集群搭建、下载站点、用户认证模块)

全网最新版的超详细的xxl_job教程(2023年),以及解决“调度失败:执行器地址为空”的问题

- Original article: https://blog.csdn.net/Big_SHOe/article/details/127941722