
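UE MotionBlur Source Code Analysis

Abstract

The MotionBlur implementation in UE4.26 derives from the Call of Duty (COD) talk at SIGGRAPH 2014. Based on that talk and on how UE puts it into practice, this article walks through the C++ code and shaders of UE's MotionBlur to help clarify the principles behind it. Discussion and corrections are welcome.

2. Background

Background and related methods are omitted for now; see the slides from the talk mentioned in the abstract.

3. UE Implementation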

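In UE's deferred pipeline, MotionBlur breaks down into roughly two parts and four stages:

1. The BasePass draws the VelocityBuffer, which records per-pixel offsets.

2. The MotionBlur pass runs in three stages:

2.1 Split the pixels into tiles and pool the Velocity MinMax of each tile.

2.2 Working on the output of the previous stage, pool the Velocity MinMax over neighboring tiles.

2.3 Run a full-screen blur of variable width along the pooled Velocity direction (MotionBlur).

2.1 Velocity Buffer

Compute the difference in screen-space pixel position and depth between the current frame and the previous frame.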

```hlsl
// BasePassPixelShader.usf
// 2d velocity, includes camera and object motion
#if WRITES_VELOCITY_TO_GBUFFER_USE_POS_INTERPOLATOR
    float3 Velocity = Calculate3DVelocity(BasePassInterpolants.VelocityScreenPosition, BasePassInterpolants.VelocityPrevScreenPosition);
#else
    float3 Velocity = Calculate3DVelocity(MaterialParameters.ScreenPosition, BasePassInterpolants.VelocityPrevScreenPosition);
#endif

    float4 EncodedVelocity = EncodeVelocityToTexture(Velocity);
```
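The body of `Calculate3DVelocity` is not shown in this article. As a rough illustration of the idea (a per-pixel difference between the current and previous screen position and depth), here is a minimal C++ sketch. The types `Float3` and `Float4` and the function `ScreenSpaceVelocity` are hypothetical names introduced here, and details such as removing TAA jitter are deliberately left out.

```cpp
#include <array>
#include <cassert>

// Hypothetical stand-ins for HLSL float3 / float4.
using Float3 = std::array<float, 3>;
using Float4 = std::array<float, 4>;

// Perspective-divide both homogeneous positions, then subtract per component.
// x/y give the screen-space offset, z gives the depth difference.
Float3 ScreenSpaceVelocity(const Float4& Clip, const Float4& PrevClip)
{
    assert(Clip[3] != 0.0f && PrevClip[3] != 0.0f);
    Float3 Velocity{};
    for (int i = 0; i < 3; ++i)
    {
        Velocity[i] = Clip[i] / Clip[3] - PrevClip[i] / PrevClip[3];
    }
    return Velocity;
}
```

A point that moved from screen x = 0 to x = 0.5 with unchanged depth yields a velocity of (0.5, 0, 0).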


2.2 MotionBlur

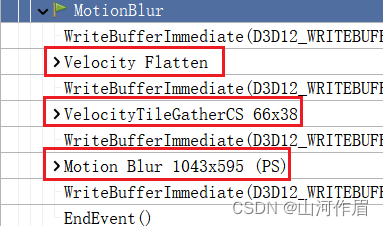

UE's MotionBlur runs in three stages:

- VelocityFlatten (CS)

- VelocityTileGather (CS) / VelocityTileScatter (VS/PS): whether the CS version is used depends on how far pixels move

- Motion Blur (PS/CS): either a CS or a PS can be used

2.2.1 Velocity Flatten

Purpose:

1. Convert the VelocityBuffer values to polar coordinates and write them, together with depth, to VelocityFlat.

2. Reduce each 16x16 pixel region to its Velocity MinMax (compared by polar length) and write it to VelocityTile (pooling). The result is converted back to Cartesian vectors on write.

```hlsl
[numthreads(THREADGROUP_SIZEX, THREADGROUP_SIZEY, 1)]
void VelocityFlattenMain(
    uint3 GroupId : SV_GroupID,
    uint3 DispatchThreadId : SV_DispatchThreadID,
    uint3 GroupThreadId : SV_GroupThreadID,
    uint GroupIndex : SV_GroupIndex)
{
    uint2 PixelPos = min(DispatchThreadId.xy + Velocity_ViewportMin, Velocity_ViewportMax - 1);

    float4 EncodedVelocity = VelocityTexture[PixelPos];
    float Depth = DepthTexture[PixelPos].x;

    float2 Velocity;
    if (EncodedVelocity.x > 0.0)
    {
        Velocity = DecodeVelocityFromTexture(EncodedVelocity).xy;
    }
    else // No velocity was written: derive it from the pixel's position in the two frames
    {
        // Compute velocity due to camera motion.
        float2 ViewportUV = ((float2)DispatchThreadId.xy + 0.5) / Velocity_ViewportSize;
        float2 ScreenPos = 2 * float2(ViewportUV.x, 1 - ViewportUV.y) - 1;
        float4 ThisClip = float4(ScreenPos, Depth, 1);
        float4 PrevClip = mul(ThisClip, View.ClipToPrevClip);
        float2 PrevScreen = PrevClip.xy / PrevClip.w;
        Velocity = ScreenPos - PrevScreen;
    }

    Velocity.y *= -MotionBlur_AspectRatio;

    float2 VelocityPolar = CartesianToPolar(Velocity);

    // If the velocity vector was zero length, VelocityPolar will contain NaNs.
    if (any(isnan(VelocityPolar)))
    {
        VelocityPolar = float2(0.0f, 0.0f);
    }

    bool bInsideViewport = all(PixelPos.xy < Velocity_ViewportMax);

    // 11:11:10 (VelocityLength, VelocityAngle, Depth)
    float2 EncodedPolarVelocity;
    EncodedPolarVelocity.x = VelocityPolar.x;
    // Remap the angle from [-PI, PI] to [0, 1]
    EncodedPolarVelocity.y = VelocityPolar.y * (0.5 / PI) + 0.5;

    // Write the polar velocity together with linear depth to OutVelocityFlatTexture
    BRANCH
    if (bInsideViewport)
    {
        OutVelocityFlatTexture[PixelPos] = float3(EncodedPolarVelocity, ConvertFromDeviceZ(Depth)).xyzz;
    }

    // Limit velocity
    VelocityPolar.x = min(VelocityPolar.x, MotionBlur_VelocityMax / MotionBlur_VelocityScale);

    // Pixels outside the viewport get min length 2 and max length 0,
    // so they can never win the min/max comparison during the reduction.
    float4 VelocityMinMax = VelocityPolar.xyxy;
    VelocityMinMax.x = bInsideViewport ? VelocityMinMax.x : 2;
    VelocityMinMax.z = bInsideViewport ? VelocityMinMax.z : 0;

    Shared[GroupIndex] = VelocityMinMax;

    // Barrier: wait until every thread in the group has written Shared[] before reading it
    GroupMemoryBarrierWithGroupSync();

    // Parallel reduction over the group, keeping the min and max.
    // In this case the total size is 16x16 = 256.
#if THREADGROUP_TOTALSIZE > 512
    if (GroupIndex < 512) Shared[GroupIndex] = MinMaxLengthPolar(Shared[GroupIndex], Shared[GroupIndex + 512]);
    GroupMemoryBarrierWithGroupSync();
#endif
#if THREADGROUP_TOTALSIZE > 256
    if (GroupIndex < 256) Shared[GroupIndex] = MinMaxLengthPolar(Shared[GroupIndex], Shared[GroupIndex + 256]);
    GroupMemoryBarrierWithGroupSync();
#endif
    // Compare each entry with the one 128 indices away (16x8, i.e. 8 rows down) and keep
    // the polar values with the smallest and the largest length.
    // This is a tree reduction that halves the active range at every step (not a search):
    // first fold the lower half of the tile onto the upper half, then sync.
#if THREADGROUP_TOTALSIZE > 128
    if (GroupIndex < 128) Shared[GroupIndex] = MinMaxLengthPolar(Shared[GroupIndex], Shared[GroupIndex + 128]);
    GroupMemoryBarrierWithGroupSync();
#endif
    // Keep halving within 0..127: same as above, with the entry 64 indices away (16x4, 4 rows down).
#if THREADGROUP_TOTALSIZE > 64
    if (GroupIndex < 64) Shared[GroupIndex] = MinMaxLengthPolar(Shared[GroupIndex], Shared[GroupIndex + 64]);
    GroupMemoryBarrierWithGroupSync();
#endif

    // The remaining steps have no barriers. This relies on the GPU executing at least
    // 32 threads in lockstep (the wave/warp width), as the original comment states:
    // Safe for vector sizes 32 or larger, AMD and NV
    // TODO Intel variable size vector
    if (GroupIndex < 32) Shared[GroupIndex] = MinMaxLengthPolar(Shared[GroupIndex], Shared[GroupIndex + 32]);
    if (GroupIndex < 16) Shared[GroupIndex] = MinMaxLengthPolar(Shared[GroupIndex], Shared[GroupIndex + 16]);
    if (GroupIndex < 8) Shared[GroupIndex] = MinMaxLengthPolar(Shared[GroupIndex], Shared[GroupIndex + 8]);
    if (GroupIndex < 4) Shared[GroupIndex] = MinMaxLengthPolar(Shared[GroupIndex], Shared[GroupIndex + 4]);
    if (GroupIndex < 2) Shared[GroupIndex] = MinMaxLengthPolar(Shared[GroupIndex], Shared[GroupIndex + 2]);
    if (GroupIndex < 1) Shared[GroupIndex] = MinMaxLengthPolar(Shared[GroupIndex], Shared[GroupIndex + 1]);

    // Write the group's final VelocityMinMax (Shared[0]) to this group's texel in OutVelocityTileTexture
    if (GroupIndex == 0)
    {
        OutVelocityTileTexture[GroupId.xy] = float4(PolarToCartesian(Shared[0].xy), PolarToCartesian(Shared[0].zw));
    }
}
```
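The reduction in the listing above can be easier to follow on the CPU. The sketch below is an assumption-laden stand-in: `MinMaxPolar` and `ReduceTile` are hypothetical names, and `MinMaxLengthPolar` is written here from its apparent intent (keep the shorter minimum and the longer maximum), since its body is not shown in this article. Each outer loop iteration corresponds to one parallel step on the GPU.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Hypothetical CPU stand-in for one float4 entry of Shared[]:
// (MinLength, MinAngle, MaxLength, MaxAngle).
struct MinMaxPolar
{
    float MinLength, MinAngle, MaxLength, MaxAngle;
};

// Keep the shorter of the two minima and the longer of the two maxima.
MinMaxPolar MinMaxLengthPolar(const MinMaxPolar& A, const MinMaxPolar& B)
{
    MinMaxPolar Out = A;
    if (B.MinLength < A.MinLength) { Out.MinLength = B.MinLength; Out.MinAngle = B.MinAngle; }
    if (B.MaxLength > A.MaxLength) { Out.MaxLength = B.MaxLength; Out.MaxAngle = B.MaxAngle; }
    return Out;
}

// Tree reduction: every pass folds the upper half of the active range
// onto the lower half, so 256 entries need 8 passes.
MinMaxPolar ReduceTile(std::vector<MinMaxPolar> Shared)
{
    // The entry count must be a power of two, as with a 16x16 thread group.
    assert(!Shared.empty() && (Shared.size() & (Shared.size() - 1)) == 0);
    for (std::size_t Half = Shared.size() / 2; Half > 0; Half /= 2)
    {
        for (std::size_t i = 0; i < Half; ++i)
        {
            Shared[i] = MinMaxLengthPolar(Shared[i], Shared[i + Half]);
        }
    }
    return Shared[0];
}
```

With this formulation, an out-of-viewport entry encoded as (min length 2, max length 0) loses every comparison against a real entry whose length is below 2, which matches the sentinel values used in the shader.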


2.2.2 VelocityTileGather / VelocityTileScatter

Purpose:

Continue pooling: spread each tile's 2D MinMax velocity (stored as Cartesian vectors by the previous pass) over a larger rectangle of tiles.

Input:

The VelocityTileTexture written by the previous pass.

PostProcessVelocityFlatten.usf

```hlsl
[numthreads(16, 16, 1)]
void VelocityGatherCS(
    uint3 GroupId : SV_GroupID,
    uint3 DispatchThreadId : SV_DispatchThreadID,
    uint3 GroupThreadId : SV_GroupThreadID,
    uint GroupIndex : SV_GroupIndex)
{
    uint2 PixelPos = DispatchThreadId.xy;
    // UV of this tile; +0.5 moves to the texel center
    float2 UV = ((float2)PixelPos.xy + 0.5) * VelocityTile_ExtentInverse;

    float4 MinMaxVelocity = VelocityTileTexture[PixelPos];

    // Pool over a 7x7 neighborhood
    // Scatter as gather
    for (int x = -3; x <= 3; x++)
    {
        for (int y = -3; y <= 3; y++)
        {
            // Skip the center tile
            if (x == 0 && y == 0)
                continue;

            int2 Offset = int2(x, y);
            int2 SampleIndex = PixelPos + Offset;

            bool2 bInsideViewport = 0 <= SampleIndex && SampleIndex < (int2)VelocityTile_ViewportMax;
            if (!all(bInsideViewport))
                continue;

            float4 ScatterMinMax = VelocityTileTexture[SampleIndex];
            float2 MaxVelocity = ScatterMinMax.zw;

            float2 VelocityPixels = MaxVelocity * MotionBlur_VelocityScaleForTiles;

            // Split the (Cartesian) velocity into length and unit direction:
            // length = |v|^2 * rsqrt(|v|^2), direction = v * rsqrt(|v|^2).
            // The 1e-8 avoids a division by zero for zero velocity.
            float VelocityLengthPixelsSqr = dot(VelocityPixels, VelocityPixels);
            float VelocityLengthPixelsInv = rsqrtFast(VelocityLengthPixelsSqr + 1e-8);
            float VelocityLengthPixels = VelocityLengthPixelsSqr * VelocityLengthPixelsInv;
            float2 VelocityDir = VelocityPixels * VelocityLengthPixelsInv;

            // The neighbor's max velocity sweeps a quad oriented along VelocityDir.
            // Offset is projected into the frame whose X axis is VelocityDir, and
            // QuadExtent is the half-size of that quad (velocity length along X plus a
            // one-tile margin on both axes). If the projected offset lies inside the
            // quad, the neighbor's max velocity reaches the center tile (0,0) and is pooled.

            // Project pixel corner on to dir. This is the oriented extent of a pixel.
            // 1/2 pixel because shape is swept tile
            // +1/2 pixel for conservative rasterization
            // 99% to give epsilon before neighbor is filled. Otherwise all neighbors lie on edges of quad when no velocity in their direction.
            float PixelExtent = abs(VelocityDir.x) + abs(VelocityDir.y);
            float2 QuadExtent = float2(VelocityLengthPixels, 0) + PixelExtent.xx * 0.99;

            // Orient quad along velocity direction
            // AxisX and AxisY are orthogonal
            float2 AxisX = VelocityDir;
            float2 AxisY = float2(-VelocityDir.y, VelocityDir.x);

            // Project this pixel center onto scatter quad
            float2 PixelCenterOnQuad;
            PixelCenterOnQuad.x = dot(AxisX, Offset);
            PixelCenterOnQuad.y = dot(AxisY, Offset);

            bool2 bInsideQuad = abs(PixelCenterOnQuad) < QuadExtent;
            if (all(bInsideQuad))
            {
                MinMaxVelocity = MinMaxLength(MinMaxVelocity, ScatterMinMax);
            }
        }
    }

    // Write the pooled result at the current position
    BRANCH
    if (all(PixelPos.xy < VelocityTile_ViewportMax))
    {
        OutVelocityTileTexture[PixelPos] = MinMaxVelocity;
    }
}
```
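The oriented-quad test inside the gather loop can be isolated into a small C++ function to make the geometry easier to check by hand. This is a sketch that mirrors the arithmetic of the listing above; the function name `NeighbourCoversCentre` is hypothetical, and the velocity is assumed to be already scaled into tile units (the role `MotionBlur_VelocityScaleForTiles` appears to play).

```cpp
#include <cassert>
#include <cmath>

// Returns true when a neighbour tile located at (OffsetX, OffsetY) relative to
// the centre tile has a max velocity whose swept quad covers the centre tile.
bool NeighbourCoversCentre(float VelX, float VelY, int OffsetX, int OffsetY)
{
    assert(std::isfinite(VelX) && std::isfinite(VelY));

    // Length and unit direction of the velocity; the epsilon guards zero velocity.
    const float LengthSqr = VelX * VelX + VelY * VelY;
    const float InvLength = 1.0f / std::sqrt(LengthSqr + 1e-8f);
    const float Length = LengthSqr * InvLength;
    const float DirX = VelX * InvLength;
    const float DirY = VelY * InvLength;

    // Half-size of the quad: velocity length along its X axis plus a margin.
    const float PixelExtent = std::fabs(DirX) + std::fabs(DirY);
    const float QuadExtentX = Length + PixelExtent * 0.99f;
    const float QuadExtentY = PixelExtent * 0.99f;

    // Project the offset onto the quad axes (AxisX = Dir, AxisY = Dir rotated 90 degrees).
    const float OnQuadX = DirX * OffsetX + DirY * OffsetY;
    const float OnQuadY = -DirY * OffsetX + DirX * OffsetY;

    return std::fabs(OnQuadX) < QuadExtentX && std::fabs(OnQuadY) < QuadExtentY;
}
```

For a velocity of (2, 0), a neighbour two tiles away along X is accepted, while a neighbour one tile away along Y is rejected because it lies just outside the 0.99 margin. A zero velocity never covers any neighbour.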


UE decides in C++ whether to switch to the VelocityTileScatter VS/PS version. The CS version is disabled when the maximum Velocity extent for the current frame exceeds the screen distance covered by 3 tiles. Scatter is also used when requested through the cvar or while a cinematic is paused.

```cpp
// PostProcessMotionBlur.cpp
bool IsMotionBlurScatterRequired(const FViewInfo& View, const FScreenPassTextureViewport& SceneViewport)
{
    const FSceneViewState* ViewState = View.ViewState;
    const float ViewportWidth = SceneViewport.Rect.Width();

    // Normalize percentage value.
    const float VelocityMax = View.FinalPostProcessSettings.MotionBlurMax / 100.0f;

    // The CS thread group (tile) is 16x16 and blur samples go both ways, hence * (0.5f / 16.0f)
    // Scale by 0.5 due to blur samples going both ways and convert to tiles.
    const float VelocityMaxInTiles = VelocityMax * ViewportWidth * (0.5f / 16.0f);

    // Compute path only supports the immediate neighborhood of tiles.
    const float TileDistanceMaxGathered = 3.0f;

    // Scatter is used when maximum velocity exceeds the distance supported by the gather approach.
    const bool bIsScatterRequiredByVelocityLength = VelocityMaxInTiles > TileDistanceMaxGathered;

    // Cinematic is paused.
    const bool bInPausedCinematic = (ViewState && ViewState->SequencerState == ESS_Paused);

    // Use the scatter approach if requested by cvar or we're in a paused cinematic (higher quality).
    const bool bIsScatterRequiredByUser = CVarMotionBlurScatter.GetValueOnRenderThread() == 1 || bInPausedCinematic;

    return bIsScatterRequiredByUser || bIsScatterRequiredByVelocityLength;
}
```
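To get a feel for the threshold, the arithmetic can be replayed with plain floats. The sketch below copies only the velocity-length part of the check; the function names are hypothetical and the cvar and paused-cinematic conditions are left out.

```cpp
#include <cassert>

// Same arithmetic as the excerpt: percentage -> fraction of the viewport width
// -> half of it (samples go both ways) -> number of 16-pixel tiles.
float VelocityMaxInTiles(float MotionBlurMaxPercent, float ViewportWidth)
{
    assert(ViewportWidth > 0.0f);
    const float VelocityMax = MotionBlurMaxPercent / 100.0f;
    return VelocityMax * ViewportWidth * (0.5f / 16.0f);
}

// True when the gather path's 3-tile reach is not enough.
bool NeedsScatterByVelocity(float MotionBlurMaxPercent, float ViewportWidth)
{
    const float TileDistanceMaxGathered = 3.0f;
    return VelocityMaxInTiles(MotionBlurMaxPercent, ViewportWidth) > TileDistanceMaxGathered;
}
```

For a 1920-pixel-wide viewport, a MotionBlurMax of 1 gives about 0.6 tiles (gather is enough) and a value of 20 gives about 12 tiles (scatter is required). The crossover sits at roughly 5 for this width, since 0.05 * 1920 / 32 = 3.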


VelocityTileScatter issues two draw calls that scatter VelocityMin and VelocityMax respectively, with depth testing enabled.

```cpp
// Min, Max
for (uint32 ScatterPassIndex = 0; ScatterPassIndex < static_cast<uint32>(EMotionBlurVelocityScatterPass::MAX); ScatterPassIndex++)
{
    const EMotionBlurVelocityScatterPass ScatterPass = static_cast<EMotionBlurVelocityScatterPass>(ScatterPassIndex);

    if (ScatterPass == EMotionBlurVelocityScatterPass::DrawMin)
    {
        GraphicsPSOInit.BlendState = TStaticBlendStateWriteMask<CW_RGBA>::GetRHI();
        GraphicsPSOInit.DepthStencilState = TStaticDepthStencilState<true, CF_Less>::GetRHI();
    }
    else
    {
        GraphicsPSOInit.BlendState = TStaticBlendStateWriteMask<CW_BA>::GetRHI();
        GraphicsPSOInit.DepthStencilState = TStaticDepthStencilState<true, CF_Greater>::GetRHI();
    }
    // ... (the excerpt ends here)
}
```
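The two depth states are what turn rasterization into a min/max selection: the vertex shader writes the velocity length as depth, so `CF_Less` keeps the shortest velocity and `CF_Greater` keeps the longest. The C++ sketch below emulates a single depth-tested pixel to show this. `Fragment`, `ResolvePixel`, and the clear depth values used in the example are assumptions made for illustration; the clears are not shown in the excerpt.

```cpp
#include <cassert>
#include <vector>

// One scattered fragment landing on a tile pixel. Depth carries the velocity
// length; Payload stands in for the velocity written to the render target.
struct Fragment
{
    float Depth;
    int Payload;
};

// Emulates one depth-tested pixel with depth writes enabled.
// bKeepLess = true behaves like CF_Less, false like CF_Greater.
// Returns the payload of the surviving fragment, or -1 if none passed.
int ResolvePixel(const std::vector<Fragment>& Fragments, bool bKeepLess, float ClearDepth)
{
    assert(ClearDepth >= 0.0f && ClearDepth <= 1.0f);
    float Depth = ClearDepth;
    int Payload = -1;
    for (const Fragment& F : Fragments)
    {
        const bool bPass = bKeepLess ? (F.Depth < Depth) : (F.Depth > Depth);
        if (bPass)
        {
            Depth = F.Depth;
            Payload = F.Payload;
        }
    }
    return Payload;
}
```

With fragments of depth 0.3, 0.1 and 0.7, the "less" pass keeps the second fragment and the "greater" pass keeps the third, regardless of draw order. Note that in the excerpt the max pass writes only the BA channels (`CW_BA`), so it does not overwrite the min velocity stored by the first pass.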


```hlsl
void VelocityScatterVS(
    uint VId : SV_VertexID,
    uint IId : SV_InstanceID,
    out nointerpolation float4 OutColor : TEXCOORD0,
    out float4 OutPosition : SV_POSITION)
{
    OutPosition = float4(0, 0, 0, 1);

    // needs to be the same on C++ side (faster on NVIDIA and AMD)
    uint QuadsPerInstance = 8;

    // remap the indices to get vertexid to VId and quadid into IId
    // IId becomes the index of this quad among all quads of all instances,
    // in the range 0 .. QuadsPerInstance * NumInstances
    IId = IId * QuadsPerInstance + (VId / 4);
    VId = VId % 4; // 0,1,2,3,0..

    // triangle A: 0:left top, 1:right top, 2: left bottom
    // triangle B: 3:right bottom, 4:left bottom, 5: right top
    float2 CornerOffset = float2(VId % 2, VId / 2) * 2 - 1;
    // All cases: 0 -> (-1,-1), 1 -> (1,-1), 2 -> (-1,1), 3 -> (1,1)

    uint2 PixelPos = uint2(IId % VelocityTile_ViewportMax.x, IId / VelocityTile_ViewportMax.x);

    // Cull quads that fall outside the viewport
    BRANCH
    if (PixelPos.y >= VelocityTile_ViewportMax.y)
    {
        OutColor = 0;
        return;
    }

    float2 SvPosition = PixelPos + 0.5; // move to the texel center

    // Read the MinMaxVelocity at this tile
    float4 MinMaxVelocity = VelocityTileTexture[PixelPos];
    OutColor = MinMaxVelocity;

    // MotionBlur_VelocityScaleForTiles is directly tied to the post-process
    // MotionBlur Amount setting and scales the offset length
    float4 MinMaxVelocityPixels = MinMaxVelocity * MotionBlur_VelocityScaleForTiles;
    float2 VelocityPixels = MinMaxVelocityPixels.zw; // take the max

    // Is the velocity small enough not to cover adjacent tiles?
    // Squared length * 256 <= 0.25 means length * 16 <= 0.5: the velocity cannot
    // reach a neighboring tile, so this tile just keeps its own MinMaxVelocity.
    BRANCH
    if (dot(VelocityPixels, VelocityPixels) * 16 * 16 <= 0.25)
    {
        // .... ScreenPosToViewportBias = (0.5f * ViewportSize) + ViewportMin;
        // Transform to screen-space coordinates, similar to mapping [0,1] to [-1,1],
        // mirrored about the X axis
        OutPosition.xy = (SvPosition + CornerOffset * 0.5 - VelocityTile_ScreenPosToViewportBias) / VelocityTile_ScreenPosToViewportScale.xy;
        OutPosition.z = 0.0002; // zero clips
        return;
    }

    // From here on the logic largely matches the CS version: build a quad along VelocityDir.
    // Split the (Cartesian) velocity into length and unit direction.
    float VelocityLengthPixelsSqr = dot(VelocityPixels, VelocityPixels);
    float VelocityLengthPixelsInv = rsqrtFast(VelocityLengthPixelsSqr);
    float VelocityLengthPixels = VelocityLengthPixelsSqr * VelocityLengthPixelsInv;
    float2 VelocityDir = VelocityPixels * VelocityLengthPixelsInv;

    // Project pixel corner on to dir. This is the oriented extent of a pixel.
    // 1/2 pixel because shape is swept tile
    // +1/2 pixel for conservative rasterization
    // 99% to give epsilon before neighbor is filled. Otherwise all neighbors lie on edges of quad when no velocity in their direction.
    // dot(abs(VelocityDir), float2(1, 1))
    float Extent = abs(VelocityDir.x) + abs(VelocityDir.y);
    CornerOffset *= float2(VelocityLengthPixels, 0) + Extent.xx * 0.99;

    // Orient along velocity direction (AxisX and AxisY are orthogonal)
    float2 AxisX = VelocityDir;
    float2 AxisY = float2(-VelocityDir.y, VelocityDir.x);
    CornerOffset = AxisX * CornerOffset.x + AxisY * CornerOffset.y;

    // Scatter: the quad carries this tile's VelocityMinMax onto every tile that its
    // VelocityMax covers. The quad is drawn as two triangles.
    OutPosition.xy = (SvPosition + CornerOffset - VelocityTile_ScreenPosToViewportBias) / VelocityTile_ScreenPosToViewportScale;

    // Use the velocity length as depth so that the depth test keeps the smallest
    // (min pass) or largest (max pass) velocity per tile.
    // Depth ordered by velocity length
    OutPosition.z = (ScatterPass == VELOCITY_SCATTER_PASS_MAX) ? VelocityLengthPixels : length(MinMaxVelocityPixels.xy);
    OutPosition.z = clamp(OutPosition.z / VelocityTile_ScreenPosToViewportScale.x * 0.5, 0.0002, 0.999);
}

void VelocityScatterPS(
    nointerpolation float4 InColor : TEXCOORD0,
    out float4 OutColor : SV_Target0)
{
    OutColor = InColor;
}
```
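The index remapping at the top of the vertex shader is easy to get wrong when enumerating the corner cases by hand, so here is a small C++ sketch of just that part. `QuadVertex` and `RemapVertex` are hypothetical names; the arithmetic follows the listing above.

```cpp
#include <cassert>
#include <cstdint>

struct QuadVertex
{
    std::uint32_t QuadIndex;   // index of the quad (tile) across all instances
    std::uint32_t CornerIndex; // 0..3 within the quad
    float CornerX, CornerY;    // unit corner offset, each component is -1 or +1
};

// Splits (vertex id, instance id) into a global quad index and a corner.
QuadVertex RemapVertex(std::uint32_t VId, std::uint32_t IId, std::uint32_t QuadsPerInstance)
{
    assert(QuadsPerInstance > 0);
    QuadVertex Out{};
    Out.QuadIndex = IId * QuadsPerInstance + VId / 4;
    Out.CornerIndex = VId % 4;
    Out.CornerX = static_cast<float>(Out.CornerIndex % 2) * 2.0f - 1.0f;
    Out.CornerY = static_cast<float>(Out.CornerIndex / 2) * 2.0f - 1.0f;
    return Out;
}
```

Corner indices 0 to 3 map to (-1,-1), (1,-1), (-1,1) and (1,1). With 8 quads per instance, vertex 13 of instance 2 belongs to quad 19 and is its corner 1.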


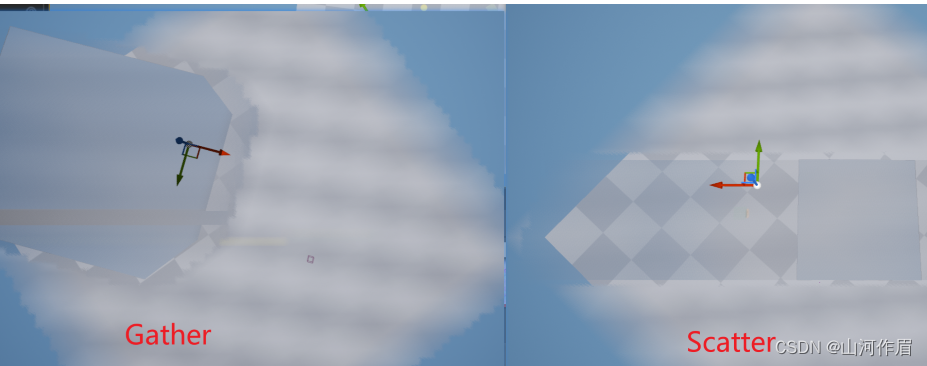

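To summarize: the CS version is a gather and the VS/PS version is a scatter. The gather treats each tile as the center of a 7x7 neighborhood and checks whether each neighbor's max velocity can reach the center; if it can, the center's value is updated. The scatter starts from each tile and pushes its own MinMax value to the surrounding tiles it can reach. Both approaches pool neighboring tiles.

Switching between the two with the console variable r.MotionBlurScatter shows no obvious difference when VelocityLength is moderate.

When VelocityLength is very large, the difference is clearly visible.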
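Why the two approaches agree for moderate velocities and diverge for large ones can be reproduced with a 1D toy model. This is only an illustration under simplifying assumptions (one dimension, integer reach, max only); `ScatterMax` and `GatherMax` are hypothetical names and not part of UE.

```cpp
#include <algorithm>
#include <cassert>
#include <cstdlib>
#include <vector>

// Reach[i] is tile i's max velocity length in whole tiles (must be >= 0).

// Scatter: every tile pushes its value to all tiles it can reach.
std::vector<int> ScatterMax(const std::vector<int>& Reach)
{
    const int Count = static_cast<int>(Reach.size());
    std::vector<int> Out = Reach;
    for (int Src = 0; Src < Count; ++Src)
    {
        assert(Reach[Src] >= 0);
        const int First = std::max(0, Src - Reach[Src]);
        const int Last = std::min(Count - 1, Src + Reach[Src]);
        for (int Dst = First; Dst <= Last; ++Dst)
        {
            Out[Dst] = std::max(Out[Dst], Reach[Src]);
        }
    }
    return Out;
}

// Gather: every tile inspects neighbours within Radius and accepts those whose
// reach covers it. Sources farther away than Radius are never seen.
std::vector<int> GatherMax(const std::vector<int>& Reach, int Radius)
{
    assert(Radius >= 0);
    const int Count = static_cast<int>(Reach.size());
    std::vector<int> Out = Reach;
    for (int Dst = 0; Dst < Count; ++Dst)
    {
        const int First = std::max(0, Dst - Radius);
        const int Last = std::min(Count - 1, Dst + Radius);
        for (int Src = First; Src <= Last; ++Src)
        {
            if (std::abs(Src - Dst) <= Reach[Src])
            {
                Out[Dst] = std::max(Out[Dst], Reach[Src]);
            }
        }
    }
    return Out;
}
```

With a gather radius of 3, a tile with reach 2 produces identical results in both functions, while a tile with reach 5 is missed by the gather for destinations 4 and 5 tiles away. That is the same limit that `TileDistanceMaxGathered = 3.0f` guards against in the C++ check.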

2.3 MotionBlur

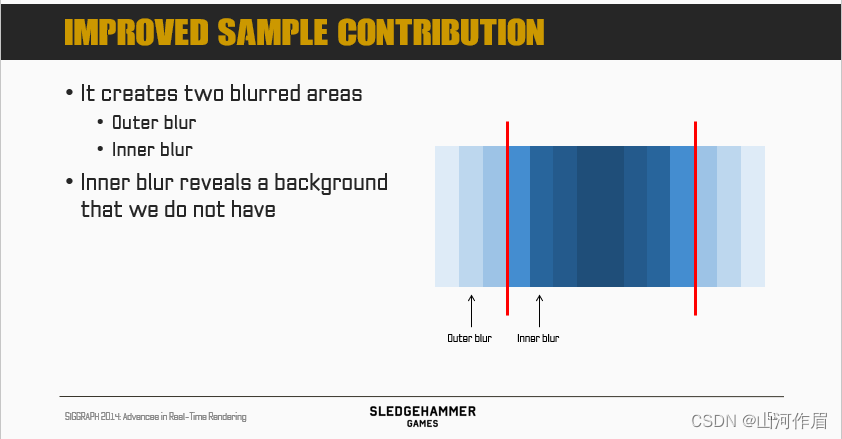

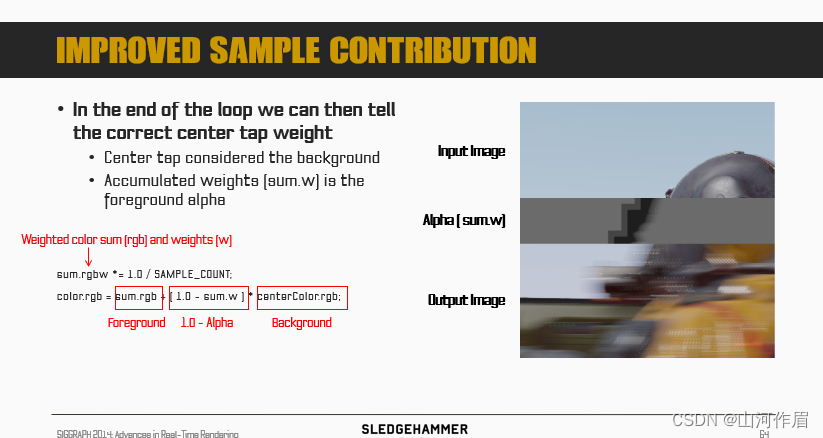

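Theory: the concepts to understand are outer blur, inner blur, weights, depth, mirroring, foreground, and background.

References:

1. Zhihu article on object motion blur (物体运动模糊)

2. SIGGRAPH 2014 COD talk

Inner and outer blur:

MotionBlur creates two blurred regions, the outer blur and the inner blur. The inner blur reveals background that is occluded by the foreground.

Outer blur: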

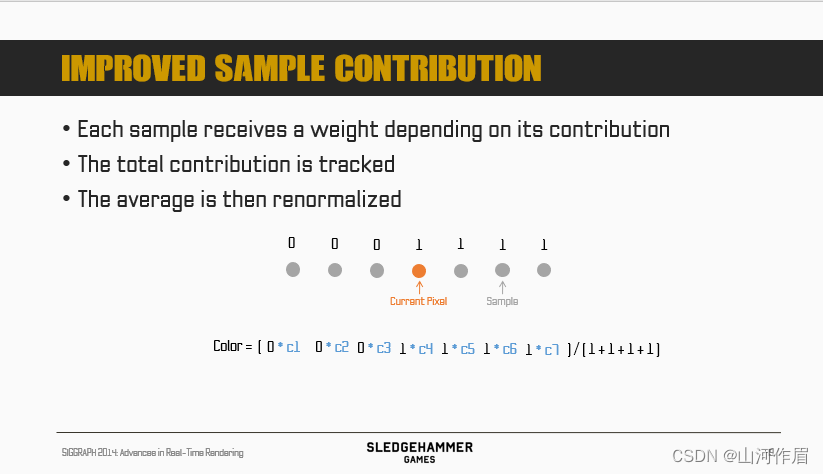

Weights:

(The remaining concepts will be added later.)
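Until the weight concept is written up in full, the following C++ sketch may help with intuition. It is modeled on the depth-and-spread weighting described in the COD slides as understood here. It is not copied from UE, whose `SampleWeight` helper is not shown in this article and may differ in detail. All names are illustrative, and depth is assumed to be linear and to grow with distance from the camera.

```cpp
#include <algorithm>
#include <cassert>

static float Saturate(float X) { return std::min(std::max(X, 0.0f), 1.0f); }

struct Float2 { float X, Y; };

// Soft depth classification of a sample against the centre pixel.
// X: how much the sample counts as background, Y: as foreground.
Float2 DepthCmp(float CenterDepth, float SampleDepth, float DepthScale)
{
    const float Delta = SampleDepth - CenterDepth;
    return { Saturate(0.5f + DepthScale * Delta), Saturate(0.5f - DepthScale * Delta) };
}

// Does a blur of SpreadLength pixels reach a sample OffsetLength steps away?
float SpreadCmp(float OffsetLength, float SpreadLength, float PixelToSampleScale)
{
    return Saturate(PixelToSampleScale * SpreadLength - OffsetLength + 1.0f);
}

float SampleWeight(float CenterDepth, float SampleDepth, float OffsetLength,
                   float CenterSpreadLength, float SampleSpreadLength,
                   float PixelToSampleScale, float DepthScale)
{
    assert(DepthScale >= 0.0f);
    const Float2 Depth = DepthCmp(CenterDepth, SampleDepth, DepthScale);
    // Background sample: contributes if the centre pixel's own blur reaches it.
    // Foreground sample: contributes if the sample's blur reaches the centre.
    return Depth.X * SpreadCmp(OffsetLength, CenterSpreadLength, PixelToSampleScale)
         + Depth.Y * SpreadCmp(OffsetLength, SampleSpreadLength, PixelToSampleScale);
}
```

Under these assumptions, a static foreground sample (spread 0) three steps away contributes nothing to a moving background centre, while a background sample within the centre's own blur length contributes fully. This is one way to read the outer/inner blur distinction above.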

Shader:

The CS and PS versions are essentially the same, so the PS is analyzed first.

```hlsl
void MainPS(
    in noperspective float4 UVAndScreenPos : TEXCOORD0,
    in float4 SvPosition : SV_Position,
    out float4 OutColor : SV_Target0)
{
    float2 ColorUV = UVAndScreenPos.xy;
    OutColor = MainMotionBlurCommon(ColorUV, floor(SvPosition.xy));
}

float4 MainMotionBlurCommon(float2 ColorUV, float2 ColorPixelPos)
{
    const uint StepCount = GetStepCountFromQuality() / 2;

    const float PixelToTileScale = (1.0 / 16.0);

    // Per-pixel random jitter
    float Random = InterleavedGradientNoise(ColorPixelPos, 0);
    float Random2 = InterleavedGradientNoise(ColorPixelPos, 1);

    // [-0.25, 0.25]
    float2 TileJitter = (float2(Random, Random2) - 0.5) * 0.5;

    // Map color UV to velocity UV space.
    float2 VelocityUV = ColorUV * ColorToVelocity_Scale + ColorToVelocity_Bias;

    // Compute the UV in tile space, used to sample the MinMax
    // Map velocity UV to velocity tile UV space with jitter.
    float2 NearestVelocitySvPosition = floor(VelocityUV * Velocity_Extent) + 0.5;
    // PixelToTileScale = 1/16: one tile per 16x16 thread group
    float2 VelocityTileUV = ((NearestVelocitySvPosition - Velocity_ViewportMin) * PixelToTileScale + TileJitter) * VelocityTile_ExtentInverse;

    // Velocity tile UV originates at [0,0]; only need to clamp max.
    VelocityTileUV = min(VelocityTileUV, VelocityTile_UVViewportBilinearMax);

    float4 MinMaxVelocity = VelocityTileTexture.SampleLevel(SharedVelocityTileSampler, VelocityTileUV, 0);

    // VelocityScale is tied to the post-process MotionBlurAmount setting
    float2 MinVelocityPixels = MinMaxVelocity.xy * MotionBlur_VelocityScale;
    float2 MaxVelocityPixels = MinMaxVelocity.zw * MotionBlur_VelocityScale;

    float MinVelocityLengthSqrPixels = dot(MinVelocityPixels, MinVelocityPixels);
    float MaxVelocityLengthSqrPixels = dot(MaxVelocityPixels, MaxVelocityPixels);

    // Input buffer 0 as same viewport as output buffer.
    float4 CenterColor = ColorTexture.SampleLevel(ColorSampler, ColorUV, 0);

    // Search along the positive and negative direction of the max velocity
    float4 SearchVectorPixels = float4(MaxVelocityPixels, -MaxVelocityPixels);
    float4 SearchVector = SearchVectorPixels * Color_ExtentInverse.xyxy;

    // converts pixel length to sample steps
    float PixelToSampleScale = StepCount * rsqrt(dot(MaxVelocityPixels, MaxVelocityPixels));

    // TODO expose cvars
    bool bSkipPath = MaxVelocityLengthSqrPixels < 0.25;
    bool bFastPath = MinVelocityLengthSqrPixels > 0.4 * MaxVelocityLengthSqrPixels;

    // Author's note: the wave-vote branch is currently off (not supported by UE)
    // Only use fast path if all threads of the compute shader would.
#if COMPILER_SUPPORTS_WAVE_VOTE
    {
        bFastPath = WaveAllTrue(bFastPath);
    }
#elif COMPUTESHADER
    {
        GroupSharedFastPath = 0;
        GroupMemoryBarrierWithGroupSync();

        uint IgnoredOut;
        InterlockedAdd(GroupSharedFastPath, bFastPath ? 1 : 0);
        GroupMemoryBarrierWithGroupSync();

        bFastPath = (GroupSharedFastPath == (THREADGROUP_SIZEX * THREADGROUP_SIZEY));
    }
#endif

    BRANCH
    if (bSkipPath) // Max velocity is below half a pixel: skip
    {
        // The macro defaults to 0 (cvar r.PostProcessing.PropagateAlpha):
        // whether post-processing supports the alpha channel
#if !POST_PROCESS_ALPHA
        CenterColor.a = 0;
#endif
        return CenterColor;
    }

    float4 OutColor = 0;

    BRANCH
    if (bFastPath) // Min and max velocity in the tile are close: no per-sample weighting needed
    {
        float4 ColorAccum = 0;

        // Sample SceneColor several times along the positive and negative
        // motion direction to stretch the blur
        UNROLL
        for (uint i = 0; i < StepCount; i++)
        {
            float2 OffsetLength = (float)i + 0.5 + float2(Random - 0.5, 0.5 - Random);
            float2 OffsetFraction = OffsetLength / StepCount;

            // Sample UVs along the search vector
            float2 SampleUV[2];
            SampleUV[0] = ColorUV + OffsetFraction.x * SearchVector.xy;
            SampleUV[1] = ColorUV + OffsetFraction.y * SearchVector.zw;

            SampleUV[0] = clamp(SampleUV[0], Color_UVViewportBilinearMin, Color_UVViewportBilinearMax);
            SampleUV[1] = clamp(SampleUV[1], Color_UVViewportBilinearMin, Color_UVViewportBilinearMax);

            ColorAccum += ColorTexture.SampleLevel(ColorSampler, SampleUV[0], 0);
            ColorAccum += ColorTexture.SampleLevel(ColorSampler, SampleUV[1], 0);
        }

        // Plain * 0.5: on the fast path, samples from both directions
        // carry the same weight (all treated as foreground)
        ColorAccum *= 0.5 / StepCount;

        OutColor = ColorAccum;
    }
    else // MaxVelocityLengthSqrPixels >= 0.25
    {
        float3 CenterVelocityDepth = VelocityFlatTexture.SampleLevel(SharedVelocityFlatSampler, VelocityUV, 0).xyz;
        float CenterDepth = CenterVelocityDepth.z;
        float CenterVelocityLength = GetVelocityLengthPixels(CenterVelocityDepth.xy);

        float4 ColorAccum = 0;
        float ColorAccumWeight = 0;

        UNROLL
        for (uint i = 0; i < StepCount; i++)
        {
            float2 SampleUV[2];
            float4 SampleColor[2];
            float SampleDepth[2];
            float SampleVelocityLength[2];
            float Weight[2];

            float2 OffsetLength = (float)i + 0.5 + float2(Random - 0.5, 0.5 - Random);
            float2 OffsetFraction = OffsetLength / StepCount;

            SampleUV[0] = ColorUV + OffsetFraction.x * SearchVector.xy;
            SampleUV[1] = ColorUV + OffsetFraction.y * SearchVector.zw;

            SampleUV[0] = clamp(SampleUV[0], Color_UVViewportBilinearMin, Color_UVViewportBilinearMax);
            SampleUV[1] = clamp(SampleUV[1], Color_UVViewportBilinearMin, Color_UVViewportBilinearMax);

            UNROLL
            for (uint j = 0; j < 2; j++)
            {
                float3 SampleVelocityDepth = VelocityFlatTexture.SampleLevel(
                    SharedVelocityFlatSampler, SampleUV[j] * ColorToVelocity_Scale + ColorToVelocity_Bias, 0).xyz;
                SampleColor[j] = ColorTexture.SampleLevel(ColorSampler, SampleUV[j], 0);
                SampleDepth[j] = SampleVelocityDepth.z;

                // in pixels
                SampleVelocityLength[j] = GetVelocityLengthPixels(SampleVelocityDepth.xy);

                // ----- From here the code matches the COD slides, which explain part of the reasoning -----
                // Compute the color weight from the depth difference, the sample offset
                // and the velocity lengths
                Weight[j] = SampleWeight(CenterDepth, SampleDepth[j], OffsetLength.x, CenterVelocityLength, SampleVelocityLength[j], PixelToSampleScale, SOFT_Z_EXTENT);
            }

            bool2 Mirror = bool2(SampleDepth[0] > SampleDepth[1], SampleVelocityLength[1] > SampleVelocityLength[0]);
            // The following reading is the author's own interpretation:
            // all(Mirror) means [0] is farther from the camera and moves less (pure background),
            // so its weight toward the center color is taken from [1]; both W0 and W1 become W1.
            // any(Mirror) means the foreground/background relation of [0] and [1] is ambiguous,
            // so each keeps its own weight.
            Weight[0] = all(Mirror) ? Weight[1] : Weight[0];
            Weight[1] = any(Mirror) ? Weight[1] : Weight[0];

            ColorAccum += Weight[0] * SampleColor[0] + Weight[1] * SampleColor[1]; // foreground
            ColorAccumWeight += Weight[0] + Weight[1];
        }

        ColorAccum *= 0.5 / StepCount;       // foreground color
        ColorAccumWeight *= 0.5 / StepCount; // foreground weight

        OutColor = ColorAccum + (1 - ColorAccumWeight) * CenterColor;
    }

#if !POST_PROCESS_ALPHA
    OutColor.a = 0;
#endif

    return OutColor;
}
```
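Two small pieces of the shader above are worth isolating: the three-way path selection and the mirrored, jittered sample offsets. The C++ sketch below replays both with the thresholds from the listing. `EBlurPath`, `ClassifyPath`, `OffsetFractions` and `SampleOffsets` are hypothetical names, and the wave/group vote that forces a whole thread group onto one path is left out.

```cpp
#include <cassert>

enum class EBlurPath { Skip, Fast, Full };

// Classifies a pixel from its tile's min/max velocity, given as squared
// lengths in pixels.
EBlurPath ClassifyPath(float MinVelocityLengthSqr, float MaxVelocityLengthSqr)
{
    assert(MinVelocityLengthSqr >= 0.0f && MinVelocityLengthSqr <= MaxVelocityLengthSqr);
    if (MaxVelocityLengthSqr < 0.25f)
    {
        return EBlurPath::Skip; // max velocity below half a pixel
    }
    if (MinVelocityLengthSqr > 0.4f * MaxVelocityLengthSqr)
    {
        return EBlurPath::Fast; // velocities in the tile are roughly uniform
    }
    return EBlurPath::Full;     // mixed velocities: per-sample weighting
}

// Fractions of the search vector used by one step in the + and - directions.
struct OffsetFractions { float Forward, Backward; };

OffsetFractions SampleOffsets(unsigned Step, unsigned StepCount, float Random)
{
    assert(StepCount > 0 && Step < StepCount);
    const float Count = static_cast<float>(StepCount);
    const float Base = static_cast<float>(Step) + 0.5f;
    // The jitter is mirrored: what is added on one side is subtracted on the other.
    return { (Base + (Random - 0.5f)) / Count, (Base + (0.5f - Random)) / Count };
}
```

The fast path is therefore selected by how similar the tile's velocities are rather than by how large they are: squared lengths of 90 and 100 take the fast path, while 1 and 100 take the full path.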


This completes the motion blur rendering for the whole frame.


- Original article: https://blog.csdn.net/qq_34813925/article/details/127810623