
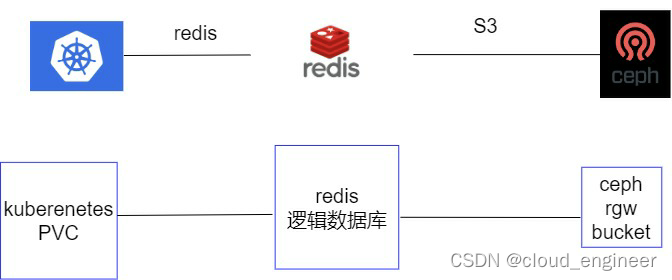

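Integrating JuiceFS with Kubernetes, Using Ceph Object Storage as the StorageClass Backend

1 Enable object storage on Ceph

1.1 Install the Ceph Object Gateway

From the working directory on the Ceph admin node, install the packages required by the Ceph Object Gateway on the gateway node:

    ceph-deploy install --rgw ceph1

Create the object gateway instance:

    ceph-deploy rgw create ceph1

Check that the object gateway is running: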

    # curl http://ceph1:7480
    <?xml version="1.0" encoding="UTF-8"?><ListAllMyBucketsResult xmlns="http://s3.amazonaws.com/doc/2006-03-01/"><Owner><ID>anonymous</ID><DisplayName></DisplayName></Owner><Buckets></Buckets></ListAllMyBucketsResult>
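The curl check above can also be scripted. The following is a minimal sketch, assuming a healthy gateway answers an anonymous request on its root URL with an empty `ListAllMyBucketsResult` document, as in the output above. The function names `is_rgw_listing` and `check_rgw` are invented for this example; the endpoint `http://ceph1:7480` is the one used in this post.

```shell
# Succeed when the response body looks like an S3 bucket listing,
# which is what the gateway returned in the check above.
is_rgw_listing() {
  case "$1" in
    *"<ListAllMyBucketsResult"*) return 0 ;;
    *) return 1 ;;
  esac
}

# Hypothetical wrapper: fetch the endpoint given as $1 and test the body.
check_rgw() {
  body=$(curl -s --max-time 5 "$1") || return 1
  is_rgw_listing "$body"
}

# Example, not executed here:
#   check_rgw http://ceph1:7480 && echo "RGW is up"
```

Keeping the string test separate from the network call makes the check easy to try against saved output.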

The RGW pools are created automatically:

    # ceph df
    RAW STORAGE:
        CLASS     SIZE        AVAIL       USED        RAW USED     %RAW USED
        hdd       2.6 TiB     2.6 TiB     3.5 GiB     3.5 GiB      0.13
        TOTAL     2.6 TiB     2.6 TiB     3.5 GiB     3.5 GiB      0.13

    POOLS:
        POOL                          ID    PGS    STORED     OBJECTS    USED       %USED    MAX AVAIL
        volumes                       1     128    594 MiB    408        594 MiB    0.02     853 GiB
        images                        2     128    44 MiB     9          44 MiB     0        853 GiB
        test                          3     8      121 B      4          121 B      0        853 GiB
        vms                           4     128    198 MiB    136        198 MiB    0        853 GiB
        kube                          5     128    190 MiB    178        190 MiB    0        853 GiB
        mypool                        6     128    137 MiB    76         137 MiB    0        853 GiB
        testpool                      7     128    0 B        0          0 B        0        853 GiB
        cephfs_data                   8     32     474 B      3          474 B      0        853 GiB
        cephfs_metadata               9     16     125 KiB    26         125 KiB    0        853 GiB
        kubernetes                    10    128    320 B      8          320 B      0        853 GiB
        .rgw.root                     11    32     1.2 KiB    4          1.2 KiB    0        853 GiB
        default.rgw.control           12    32     0 B        8          0 B        0        853 GiB
        default.rgw.meta              13    32     1.3 KiB    7          1.3 KiB    0        853 GiB
        default.rgw.log               14    32     0 B        207        0 B        0        853 GiB
        default.rgw.buckets.index     15    32     0 B        2          0 B        0        853 GiB
        default.rgw.buckets.data      16    32     0B         99         0B         0        0 GiB
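To see only the pools that the gateway created, the `ceph df` listing can be filtered by pool name. This is a small sketch, assuming the pool name is the first column and that RGW pools contain `rgw` in their name, as they do in the output above. `list_rgw_pools` is a hypothetical helper name.

```shell
# Read `ceph df` output on stdin and print the names of the RGW pools.
# awk ignores the leading indentation, so $1 is the POOL column.
list_rgw_pools() {
  awk '$1 ~ /rgw/ { print $1 }'
}

# Example, not executed here:
#   ceph df | list_rgw_pools
```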

1.2 Create an S3 account

    radosgw-admin user create --uid="kubernetesuser" --display-name="kubernetes User"

The command prints the following:

    # radosgw-admin user create --uid="kubernetesuser" --display-name="kubernetes User"
    {
        "user_id": "kubernetesuser",
        "display_name": "kubernetes User",
        "email": "",
        "suspended": 0,
        "max_buckets": 1000,
        "subusers": [],
        "keys": [
            {
                "user": "kubernetesuser",
                "access_key": "6SYO4EYLZYSJZ0BEHEJK",
                "secret_key": "pCNWeCbHlS5sU7rLhOlTejHPqV2uAXSxFvW6k251"
            }
        ],
        "swift_keys": [],
        "caps": [],
        "op_mask": "read, write, delete",
        "default_placement": "",
        "default_storage_class": "",
        "placement_tags": [],
        "bucket_quota": {
            "enabled": false,
            "check_on_raw": false,
            "max_size": -1,
            "max_size_kb": 0,
            "max_objects": -1
        },
        "user_quota": {
            "enabled": false,
            "check_on_raw": false,
            "max_size": -1,
            "max_size_kb": 0,
            "max_objects": -1
        },
        "temp_url_keys": [],
        "type": "rgw",
        "mfa_ids": []
    }
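The `access_key` and `secret_key` values from this output are needed later for the JuiceFS Secret. As a rough sketch, they can be pulled out of the JSON with `sed`, assuming the output is pretty-printed with one field per line as shown above. `rgw_key_field` is a name invented for this example; if a key contains `/`, it may appear JSON-escaped as `\/` and would need unescaping by hand.

```shell
# Read radosgw-admin JSON on stdin and print the value of the string
# field named in $1 (for example access_key or secret_key).
# Only the first match is printed.
rgw_key_field() {
  sed -n 's/.*"'"$1"'": *"\([^"]*\)".*/\1/p' | head -n 1
}

# Example, not executed here:
#   radosgw-admin user info --uid=kubernetesuser | rgw_key_field access_key
```

`radosgw-admin user info` prints the same structure as `user create`, so the keys can be read again at any time without creating a new user.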

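2 Install Redis on Kubernetes

2.1 Deploy Redis

This example runs a single Redis instance; for production, a clustered Redis deployment is preferable. Redis can also be deployed outside the Kubernetes cluster. The deployment YAML files are as follows: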

redis-config.yaml

    apiVersion: v1
    kind: ConfigMap
    metadata:
      name: juicefs-redis-config
      namespace: kube-system
    data:
      redis.conf: |
        bind 0.0.0.0
        port 6379
        pidfile .pid
        appendonly yes
        cluster-config-file nodes-6379.conf
        pidfile /data/middleware-data/redis/log/redis-6379.pid
        cluster-config-file /data/middleware-data/redis/conf/redis.conf
        dir /data/middleware-data/redis/data/
        logfile "/data/middleware-data/redis/log/redis-6379.log"
        cluster-node-timeout 5000
        protected-mode no

redis-pvc.yaml

    kind: PersistentVolumeClaim
    apiVersion: v1
    metadata:
      name: juicefs-redis-pvc1
      namespace: kube-system
      annotations:
        volume.beta.kubernetes.io/storage-provisioner: nfs-provisioner-01
    spec:
      accessModes:
        - ReadWriteMany
      resources:
        requests:
          storage: 100Gi
      storageClassName: nfs-server

redis-sts.yaml

    apiVersion: apps/v1
    kind: StatefulSet
    metadata:
      name: juicefs-redis
      namespace: kube-system
    spec:
      replicas: 1
      serviceName: juicefs-redis-svc
      selector:
        matchLabels:
          name: juicefs-redis
      template:
        metadata:
          labels:
            name: juicefs-redis
        spec:
          volumes:
            - name: host-time
              hostPath:
                path: /etc/localtime
                type: ''
            - name: data
              persistentVolumeClaim:
                claimName: juicefs-redis-pvc1
            - name: redis-config
              configMap:
                name: juicefs-redis-config
          initContainers:
            - name: init-redis
              image: busybox
              command: ['sh', '-c', 'mkdir -p /data/middleware-data/redis/log/;mkdir -p /data/middleware-data/redis/conf/;mkdir -p /data/middleware-data/redis/data/']
              volumeMounts:
                - name: data
                  mountPath: /data/middleware-data/redis/
              resources:
                limits:
                  cpu: 250m
                  memory: 64Mi
                requests:
                  cpu: 125m
                  memory: 32Mi
          containers:
            - name: redis
              image: redis:5.0.6
              imagePullPolicy: IfNotPresent
              command:
                - sh
                - -c
                - "exec redis-server /data/middleware-data/redis/conf/redis.conf"
              ports:
                - containerPort: 6379
                  name: redis
                  protocol: TCP
              volumeMounts:
                - name: redis-config
                  mountPath: /data/middleware-data/redis/conf/
                - name: data
                  mountPath: /data/middleware-data/redis/
                - name: host-time
                  readOnly: true
                  mountPath: /etc/localtime

redis-svc.yaml

    kind: Service
    apiVersion: v1
    metadata:
      labels:
        name: juicefs-redis-svc
      name: juicefs-redis-svc
      namespace: kube-system
    spec:
      type: NodePort
      ports:
        - name: redis
          port: 6379
          targetPort: 6379
      selector:
        name: juicefs-redis

Put the YAML files above in one directory and apply them from there:

    kubectl apply -f .

2.2 Verify

    # kubectl get pod -n kube-system | grep redis
    juicefs-redis-0   1/1   Running   0   47h
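Reading the STATUS column by eye works for one pod; for scripting, the same listing can be reduced to a pass or fail result. This is a hedged sketch that assumes the default `kubectl get pod` column order (NAME, READY, STATUS). `pods_ready` is a hypothetical helper, not part of kubectl.

```shell
# Read `kubectl get pod` output on stdin. Succeed only if at least one pod
# name starts with the prefix in $1 and every such pod is Running with all
# of its containers ready (READY column of the form n/n).
pods_ready() {
  awk -v p="$1" '
    index($1, p) == 1 {
      seen = 1
      split($2, r, "/")
      if ($3 != "Running" || r[1] != r[2]) bad = 1
    }
    END { if (seen && !bad) exit 0; exit 1 }
  '
}

# Example, not executed here:
#   kubectl get pod -n kube-system | pods_ready juicefs-redis && echo "redis is ready"
```

The same helper can be reused later with the prefix `juicefs-csi` to check the CSI driver pods.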

3 Install JuiceFS on Kubernetes

This post installs the driver with Helm. Add the Helm repository:

    helm repo add juicefs-csi-driver https://juicedata.github.io/charts/

Update the Helm repositories:

    helm repo update

Download the JuiceFS CSI driver chart:

    helm pull juicefs-csi-driver/juicefs-csi-driver

Unpack it:

    tar zxvf juicefs-csi-driver-0.13.1.tgz

Install:

    cd juicefs-csi-driver
    helm install juicefs-csi-driver juicefs-csi-driver/juicefs-csi-driver -n kube-system -f ./values.yaml

Delete the Secret juicefs-sc-secret and the StorageClass juicefs-sc that the chart generated, then recreate them:

juicefs-sc.yaml

    apiVersion: v1
    kind: Secret
    metadata:
      name: juicefs-sc-secret
      namespace: kube-system
    type: Opaque
    stringData:
      name: "wangjinxiong"                          # generated automatically
      metaurl: "redis://10.254.202.142"             # address of the Redis Service installed earlier
      storage: "s3"
      bucket: "http://192.168.3.61:7480/kubernetes" # object storage endpoint and bucket; the bucket must be created beforehand
      access-key: "6SYO4EYLZYSJZ0BEHEJK"            # the access key created in section 1.2
      secret-key: "pCNWeCbHlS5sU7rLhOlTejHPqV2uAXSxFvW6k251" # the secret key created in section 1.2
    ---
    apiVersion: storage.k8s.io/v1
    kind: StorageClass
    metadata:
      name: juicefs-sc
    provisioner: csi.juicefs.com
    reclaimPolicy: Retain
    volumeBindingMode: Immediate
    parameters:
      csi.storage.k8s.io/node-publish-secret-name: juicefs-sc-secret
      csi.storage.k8s.io/node-publish-secret-namespace: kube-system
      csi.storage.k8s.io/provisioner-secret-name: juicefs-sc-secret
      csi.storage.k8s.io/provisioner-secret-namespace: kube-system
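It can help to see how the Secret fields line up with the JuiceFS command line. The sketch below only prints a `juicefs format` command built from the same values; it does not run it. It rests on the assumption that, in the community edition, `name`, `metaurl`, `storage`, `bucket`, `access-key` and `secret-key` correspond to the volume name, metadata URL and the `--storage`, `--bucket`, `--access-key` and `--secret-key` options of `juicefs format`. The function name `juicefs_format_cmd` and the placeholder strings are invented for this example.

```shell
# Values copied from the Secret in this post.
NAME="wangjinxiong"
META_URL="redis://10.254.202.142"
STORAGE="s3"
BUCKET="http://192.168.3.61:7480/kubernetes"

# Print (do not run) the juicefs format command these fields describe.
# The keys are taken from the environment when set, so they do not have
# to be written into the script; otherwise placeholders are printed.
juicefs_format_cmd() {
  printf 'juicefs format --storage %s --bucket %s --access-key %s --secret-key %s %s %s\n' \
    "$STORAGE" "$BUCKET" \
    "${ACCESS_KEY:-<access-key>}" "${SECRET_KEY:-<secret-key>}" \
    "$META_URL" "$NAME"
}

juicefs_format_cmd
```

Seen this way, a typo in `bucket` or `metaurl` has the same effect as passing a wrong argument to the client, which is a useful first thing to check when a PVC stays Pending.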

Check the pods that were created:

    # kubectl get pod -n kube-system | grep juice
    juicefs-csi-controller-0   3/3   Running   1   2d
    juicefs-csi-node-256tq     3/3   Running   0   2d
    juicefs-csi-node-ljbw4     3/3   Running   0   2d

Create a PVC and a Deployment to verify:

    # cat pvc-test.yaml
    apiVersion: v1
    kind: PersistentVolumeClaim
    metadata:
      name: juice-pvc1
    spec:
      accessModes:
        - ReadWriteMany
      resources:
        requests:
          storage: 1Gi
      storageClassName: juicefs-sc

    # cat juice-deploy.yaml
    apiVersion: apps/v1
    kind: Deployment
    metadata:
      name: nginx-juice
    spec:
      selector:
        matchLabels:
          app: nginx
      template:
        metadata:
          labels:
            app: nginx
        spec:
          containers:
            - name: nginx
              image: nginx
              ports:
                - containerPort: 80
              volumeMounts:
                - mountPath: /usr/share/nginx/html
                  name: web-data
          volumes:
            - name: web-data
              persistentVolumeClaim:
                claimName: juice-pvc1

Check the PVC:

    # kubectl get pvc
    NAME         STATUS   VOLUME                                     CAPACITY   ACCESS MODES   STORAGECLASS   AGE
    juice-pvc1   Bound    pvc-62e3a3b2-d858-4cb4-94c9-a9241eeb8a8d   1Gi        RWX            juicefs-sc     46h
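The VOLUME value in this listing matters later: it is the name of the directory that holds the PVC's data inside the JuiceFS file system. A minimal sketch for looking it up in a script, assuming the default `kubectl get pvc` column order shown above; `bound_volume` is a hypothetical helper name.

```shell
# Read `kubectl get pvc` output on stdin and print the VOLUME column for
# the claim named in $1, but only when its STATUS is Bound.
bound_volume() {
  awk -v n="$1" '$1 == n && $2 == "Bound" { print $3 }'
}

# Example, not executed here:
#   kubectl get pvc | bound_volume juice-pvc1
```

An empty result means the claim does not exist or is not bound yet.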

Check the mount on the worker node:

    # df -h | grep juicefs
    JuiceFS:wangjinxiong  1.0P  155M  1.0P   1% /var/lib/juicefs/volume/pvc-62e3a3b2-d858-4cb4-94c9-a9241eeb8a8d-hkramn

4 Install the JuiceFS client

Download:

    JFS_LATEST_TAG=$(curl -s https://api.github.com/repos/juicedata/juicefs/releases/latest | grep 'tag_name' | cut -d '"' -f 4 | tr -d 'v')
    wget "https://github.com/juicedata/juicefs/releases/download/v${JFS_LATEST_TAG}/juicefs-${JFS_LATEST_TAG}-linux-amd64.tar.gz"
    tar -zxf "juicefs-${JFS_LATEST_TAG}-linux-amd64.tar.gz"
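The first download command extracts the release tag from the GitHub API response and strips the letter `v` with `tr -d 'v'`, which removes every `v` in the tag, not only the leading one. As a hedged alternative, the sketch below removes just a leading `v`. It assumes the response contains a line of the form `"tag_name": "v1.2.3"`; `latest_version` is a name made up for this example, and `v1.2.3` is a sample value, not a real release.

```shell
# Read the GitHub "latest release" JSON on stdin and print the tag
# without a leading "v" (sample: v1.2.3 -> 1.2.3).
latest_version() {
  sed -n 's/.*"tag_name": *"v\{0,1\}\([^"]*\)".*/\1/p' | head -n 1
}

# Example, not executed here:
#   JFS_LATEST_TAG=$(curl -s https://api.github.com/repos/juicedata/juicefs/releases/latest | latest_version)
```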

Install:

    sudo install juicefs /usr/local/bin

Mount the file system manually on a local directory:

    # juicefs mount -d redis://10.254.202.142:6379 /mnt
    2022/10/30 16:22:01.650283 juicefs[18753] <INFO>: Meta address: redis://10.254.202.142:6379 [interface.go:402]
    2022/10/30 16:22:01.659028 juicefs[18753] <INFO>: Ping redis: 980.333µs [redis.go:2878]
    2022/10/30 16:22:01.662469 juicefs[18753] <INFO>: Data use s3://kubernetes/wangjinxiong/ [mount.go:422]
    2022/10/30 16:22:01.664037 juicefs[18753] <INFO>: Disk cache (/var/jfsCache/3d458e7f-7457-4c09-9901-7edf2bba18c5/): capacity (102400 MB), free ratio (10%), max pending pages (15) [disk_cache.go:94]
    2022/10/30 16:22:02.169179 juicefs[18753] <INFO>: OK, wangjinxiong is ready at /mnt [mount_unix.go:45]
    [root@k8s21-master01 ~]# cd /mnt
    [root@k8s21-master01 mnt]# ls
    pvc-62e3a3b2-d858-4cb4-94c9-a9241eeb8a8d


- Original article: https://blog.csdn.net/cloud_engineer/article/details/127704444