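Preface

Having worked through the previous article, you should already have a feel for Helm. This article introduces another tool, Kustomize. If Helm is so popular, why did Kustomize appear at all? Kustomize has also been built into kubectl since version 1.14, which suggests the Kubernetes project itself thinks highly of it.

In my view, the reason is that Helm customizes configuration through parameterized templates, which means learning a fairly complex DSL that is hard to pick up and easy to get wrong. Kustomize instead uses native Kubernetes concepts to help users author and reuse declarative configuration.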

Getting to know Kustomize

Installation

brew install kustomize

Example walkthrough

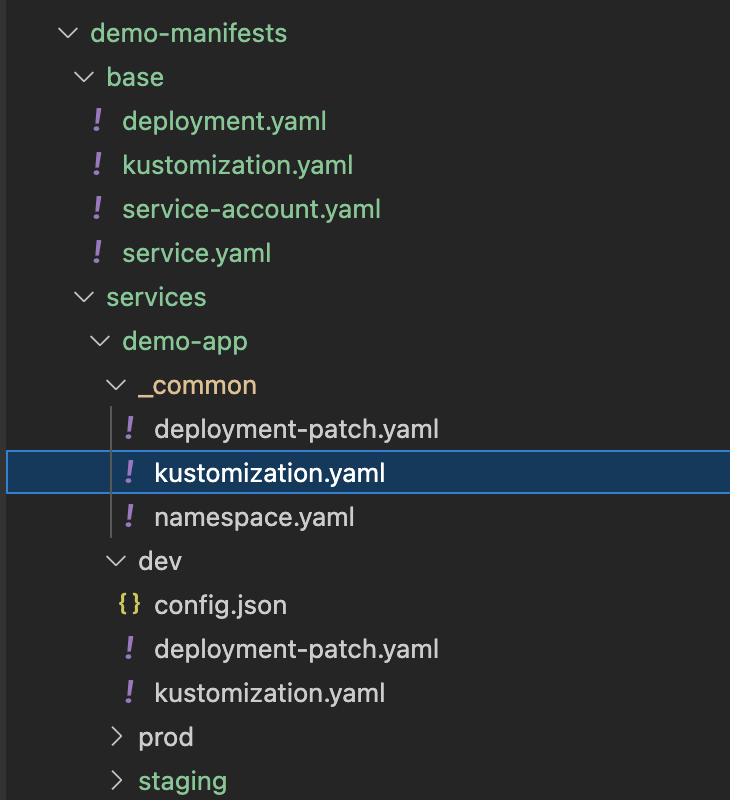

    demo-manifests
    ├── base
    │   ├── deployment.yaml
    │   ├── kustomization.yaml
    │   ├── service-account.yaml
    │   └── service.yaml
    └── services
        ├── demo-app
        │   ├── _common
        │   │   ├── deployment-patch.yaml
        │   │   ├── kustomization.yaml
        │   │   └── namespace.yaml
        │   ├── dev
        │   │   ├── config.json
        │   │   ├── deployment-patch.yaml
        │   │   └── kustomization.yaml
        │   ├── staging
        │   │   ├── config.json
        │   │   ├── deployment-patch.yaml
        │   │   └── kustomization.yaml
        │   └── prod
        │       ├── config.json
        │       ├── deployment-patch.yaml
        │       └── kustomization.yaml
        └── demo-app2
            └── xxx

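Start with the base directory. It holds a few common resource files: the deployment, service, and service-account YAML.

There is also a kustomization.yaml configuration file.

It lists the resource files above together with the customizations to apply to them, such as adding a common label (commonLabels).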
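The article describes base/kustomization.yaml without listing it. A minimal sketch of what such a file could look like, assuming only what the text and the build output state (the three resource files and the managed-by: Kustomize label); the author's actual file may contain more:

```yaml
# demo-manifests/base/kustomization.yaml
# Reconstructed sketch, not the author's exact file.
apiVersion: kustomize.config.k8s.io/v1beta1
kind: Kustomization

# The resource files that sit next to this file in base/
resources:
  - deployment.yaml
  - service-account.yaml
  - service.yaml

# Added to metadata.labels of every resource, and also to
# label selectors and pod template labels where they apply.
commonLabels:
  managed-by: Kustomize
```

Because commonLabels also touches selectors, the label shows up in the Service selector and in the Deployment's matchLabels, not only under metadata.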

At this point you can already run kustomize build to generate the complete YAML and inspect it:

kustomize build demo-manifests/base > base.yaml

Every resource object in the generated base.yaml carries the common label managed-by: Kustomize.

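Next, look at the services directory, which holds every service project, for example demo-app. The YAML in here overrides base; these are what the official documentation calls overlays.

All you need to do is describe the parts that differ and apply them as patches over what is in base. Here I also pulled out the parts shared by the three environments (dev, staging, prod) and put them in the _common folder.

The _common/kustomization.yaml file looks like this: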

    apiVersion: kustomize.config.k8s.io/v1beta1
    kind: Kustomization
    resources:
      - ../../../base
      - namespace.yaml
    patchesStrategicMerge:
      - deployment-patch.yaml

My demo-app needs a ConfigMap, and its health-check endpoint is also different, so deployment-patch.yaml spells out these differences and overrides what is in base.

    apiVersion: apps/v1
    kind: Deployment
    metadata:
      name: NAME_PLACEHOLDER
    spec:
      template:
        spec:
          serviceAccountName: NAME_PLACEHOLDER
          containers:
            - name: app
              image: wadexu007/demo:IMG_TAG_PLACEHOLDER
              livenessProbe:
                failureThreshold: 5
                httpGet:
                  path: /pizzas
                  port: 8080
                initialDelaySeconds: 10
                periodSeconds: 40
                timeoutSeconds: 1
              readinessProbe:
                failureThreshold: 5
                httpGet:
                  path: /pizzas
                  port: 8080
                initialDelaySeconds: 10
                periodSeconds: 20
                timeoutSeconds: 1
              volumeMounts:
                - name: config-volume
                  mountPath: /app/conf/config.json
                  subPath: config.json
          volumes:
            - name: config-volume
              configMap:
                name: demo-app-config

    ---
    apiVersion: v1
    kind: Namespace
    metadata:
      name: demo

### This article was first published on cnblogs: https://www.cnblogs.com/wade-xu/p/16839829.html

Finally, look at dev/kustomization.yaml:

    apiVersion: kustomize.config.k8s.io/v1beta1
    kind: Kustomization
    resources:
      - ../_common

    namespace: demo

    commonLabels:
      app: demo-app

    replicas:
      - count: 1
        name: demo-app
    configMapGenerator:
      - files:
          - config.json
        name: demo-app-config
    patches:
      - patch: |-
          - op: replace
            path: /metadata/name
            value: demo-app
        target:
          name: NAME_PLACEHOLDER

    patchesStrategicMerge:
      - deployment-patch.yaml

    images:
      - name: wadexu007/demo
        newTag: 1.0.0

The dev environment sets replicas to 1 and generates a ConfigMap from config.json:

    {
      "SOME_CONFIG": "/demo/path"
    }
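By default, configMapGenerator appends a hash of the content to the generated ConfigMap's name and rewrites references to it, so a change to config.json yields a new name and triggers a rollout of the Deployment. If a stable name is needed instead, the generatorOptions field can switch the suffix off. A minimal sketch, assuming the same dev overlay with everything else omitted for brevity:

```yaml
# dev/kustomization.yaml (fragment, sketch only)
apiVersion: kustomize.config.k8s.io/v1beta1
kind: Kustomization
resources:
  - ../_common

configMapGenerator:
  - name: demo-app-config
    files:
      - config.json

# Applies to every generator in this kustomization.
# With the hash disabled the ConfigMap is emitted as plain
# "demo-app-config", but pods no longer restart automatically
# when the file content changes.
generatorOptions:
  disableNameSuffixHash: true
```

The article's own setup keeps the default hash suffix, which is usually the safer choice for configuration that pods read only at startup.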

The dev deployment-patch.yaml also lowers the container resource requests and limits. It is applied with patchesStrategicMerge, so it is merged with the Deployment defined in _common and base.

    apiVersion: apps/v1
    kind: Deployment
    metadata:
      name: NAME_PLACEHOLDER
    spec:
      template:
        spec:
          containers:
            - name: app
              resources:
                limits:
                  cpu: 1
                  memory: 1Gi
                requests:
                  cpu: 200m
                  memory: 256Mi
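Newer Kustomize releases (v5 and later, as far as I know) print a deprecation warning for patchesStrategicMerge and suggest the patches field instead. A minimal sketch of the equivalent entry, assuming the same deployment-patch.yaml file is reused unchanged:

```yaml
# dev/kustomization.yaml (fragment, sketch only)
patches:
  # No target is given, so Kustomize works out which resource to patch
  # from the apiVersion, kind and metadata.name inside the patch file.
  - path: deployment-patch.yaml
```

Entries under patches are applied in the order listed, which matters once a rename patch lives in the same list.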

In addition, a JSON patch under patches renames every resource named NAME_PLACEHOLDER to demo-app.
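The target in that patch selects by name only, so it matches every resource called NAME_PLACEHOLDER (judging by the build output, the Deployment, Service and ServiceAccount). If only one kind should be renamed, the target can be narrowed. A sketch, assuming you wanted to touch the Deployment alone:

```yaml
# dev/kustomization.yaml (fragment, sketch only)
patches:
  - target:
      kind: Deployment          # restrict the match to one kind
      name: NAME_PLACEHOLDER
    patch: |-
      # JSON Patch (RFC 6902) operations, written as YAML
      - op: replace
        path: /metadata/name
        value: demo-app
```

With this narrower target the Service and ServiceAccount would keep the placeholder name, which is why the article's version omits kind.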

The images field then overrides the image reference. Here it only replaces the tag of wadexu007/demo, setting it to 1.0.0.
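The same field can also swap the image name, for example to pull from a private registry. A minimal sketch; registry.example.com/demo is a made-up name used purely for illustration:

```yaml
# dev/kustomization.yaml (fragment, sketch only)
images:
  - name: wadexu007/demo                  # image name to look for in the manifests
    newName: registry.example.com/demo    # hypothetical replacement name
    newTag: 1.0.0                         # replaces whatever tag the manifests carry
```

A digest field is available in place of newTag when the image should be pinned by digest rather than by tag.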

The kustomize command is:

kustomize build demo-manifests/services/demo-app/dev > demo-app.yaml

With kubectl the equivalent looks like this (the path here is relative to the demo-manifests directory):

kubectl kustomize services/demo-app/dev/ > demo-app.yaml

Final result

    apiVersion: v1
    kind: Namespace
    metadata:
      labels:
        app: demo-app
      name: demo
    ---
    apiVersion: v1
    kind: ServiceAccount
    metadata:
      labels:
        app: demo-app
        managed-by: Kustomize
      name: demo-app
      namespace: demo
    ---
    apiVersion: v1
    data:
      config.json: |-
        {
          "SOME_CONFIG": "/demo/path"
        }
    kind: ConfigMap
    metadata:
      labels:
        app: demo-app
      name: demo-app-config-t7c64mbtt2
      namespace: demo
    ---
    apiVersion: v1
    kind: Service
    metadata:
      labels:
        app: demo-app
        managed-by: Kustomize
      name: demo-app
      namespace: demo
    spec:
      ports:
      - port: 8080
        protocol: TCP
        targetPort: http
      selector:
        app: demo-app
        managed-by: Kustomize
      type: ClusterIP
    ---
    apiVersion: apps/v1
    kind: Deployment
    metadata:
      labels:
        app: demo-app
        managed-by: Kustomize
      name: demo-app
      namespace: demo
    spec:
      replicas: 1
      selector:
        matchLabels:
          app: demo-app
          managed-by: Kustomize
      template:
        metadata:
          labels:
            app: demo-app
            managed-by: Kustomize
        spec:
          containers:
          - image: wadexu007/demo:1.0.0
            imagePullPolicy: IfNotPresent
            livenessProbe:
              failureThreshold: 5
              httpGet:
                path: /pizzas
                port: 8080
              initialDelaySeconds: 10
              periodSeconds: 40
              timeoutSeconds: 1
            name: app
            ports:
            - containerPort: 8080
              name: http
            readinessProbe:
              failureThreshold: 5
              httpGet:
                path: /pizzas
                port: 8080
              initialDelaySeconds: 10
              periodSeconds: 20
              timeoutSeconds: 1
            resources:
              limits:
                cpu: 1
                memory: 1Gi
              requests:
                cpu: 200m
                memory: 256Mi
            securityContext:
              allowPrivilegeEscalation: false
            volumeMounts:
            - mountPath: /app/conf/config.json
              name: config-volume
              subPath: config.json
          serviceAccountName: demo-app
          volumes:
          - configMap:
              name: demo-app-config-t7c64mbtt2
            name: config-volume


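The staging and prod folders contain files similar to those in dev. Because the environments differ, config.json, the resource settings, and the image tag differ too; just declare them explicitly.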
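As an illustration, a prod overlay could look like the sketch below. It mirrors the dev kustomization shown earlier; the replica count and image tag are assumed values chosen for the example, not taken from the author's repository:

```yaml
# demo-manifests/services/demo-app/prod/kustomization.yaml
# Hypothetical sketch modelled on the dev overlay.
apiVersion: kustomize.config.k8s.io/v1beta1
kind: Kustomization
resources:
  - ../_common

namespace: demo

commonLabels:
  app: demo-app

replicas:
  - count: 3                  # assumed value; dev uses 1
    name: demo-app

configMapGenerator:
  - files:
      - config.json           # prod's own config.json in this folder
    name: demo-app-config

patches:
  - patch: |-
      - op: replace
        path: /metadata/name
        value: demo-app
    target:
      name: NAME_PLACEHOLDER

patchesStrategicMerge:
  - deployment-patch.yaml     # prod's own resource requests and limits

images:
  - name: wadexu007/demo
    newTag: 1.0.0             # assumed; set to the tag prod should run
```

Only the values change between environments; the structure stays the same because everything shared lives in _common and base.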

For the complete code, see my GitHub.

Kustomize features covered

- commonLabels

- patchesStrategicMerge

- patches

- configMapGenerator

- replicas

- images

Summary

After this article, together with the previous one on Helm, [云原生之旅 - 5)Kubernetes时代的包管理工具 Helm], you should now have a sense of how the two tools differ.