
6. ClickHouse Series: Configuring a Sharded Cluster

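A replica set keeps a full copy of the data, which provides high availability. A sharded cluster, whether in ES or ClickHouse, addresses a different problem: scaling data horizontally. In practice, configuring replicas alone is usually enough for ClickHouse.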

1. Write the clickhouse-shard.yml file

The full code has been uploaded to gitee and can be cloned and used directly.

    # Sharded cluster deployment example (2 shards x 2 replicas)
    version: '3'
    services:
      zoo1:
        image: zookeeper
        restart: always
        hostname: zoo1
        ports:
          - 2181:2181
        environment:
          ZOO_MY_ID: 1
          ZOO_SERVERS: server.1=zoo1:2888:3888;2181 server.2=zoo2:2888:3888;2181 server.3=zoo3:2888:3888;2181
        networks:
          - ckNet
      zoo2:
        image: zookeeper
        restart: always
        hostname: zoo2
        ports:
          - 2182:2181
        environment:
          ZOO_MY_ID: 2
          ZOO_SERVERS: server.1=zoo1:2888:3888;2181 server.2=zoo2:2888:3888;2181 server.3=zoo3:2888:3888;2181
        networks:
          - ckNet
      zoo3:
        image: zookeeper
        restart: always
        hostname: zoo3
        ports:
          - 2183:2181
        environment:
          ZOO_MY_ID: 3
          ZOO_SERVERS: server.1=zoo1:2888:3888;2181 server.2=zoo2:2888:3888;2181 server.3=zoo3:2888:3888;2181
        networks:
          - ckNet
      ck1_1:
        image: clickhouse/clickhouse-server
        container_name: ck1_1
        ulimits:
          nofile:
            soft: "262144"
            hard: "262144"
        volumes:
          - ./1_1/config.d:/etc/clickhouse-server/config.d
          - ./config.xml:/etc/clickhouse-server/config.xml
        ports:
          - 18123:8123 # HTTP interface
          - 19000:9000 # native client
        depends_on:
          - zoo1
          - zoo2
          - zoo3
        networks:
          - ckNet
      ck1_2:
        image: clickhouse/clickhouse-server
        container_name: ck1_2
        ulimits:
          nofile:
            soft: "262144"
            hard: "262144"
        volumes:
          - ./1_2/config.d:/etc/clickhouse-server/config.d
          - ./config.xml:/etc/clickhouse-server/config.xml
        ports:
          - 18124:8123 # HTTP interface
          - 19001:9000 # native client
        depends_on:
          - zoo1
          - zoo2
          - zoo3
        networks:
          - ckNet
      ck2_1:
        image: clickhouse/clickhouse-server
        container_name: ck2_1
        ulimits:
          nofile:
            soft: "262144"
            hard: "262144"
        volumes:
          - ./2_1/config.d:/etc/clickhouse-server/config.d
          - ./config.xml:/etc/clickhouse-server/config.xml
        ports:
          - 18125:8123 # HTTP interface
          - 19002:9000 # native client
        depends_on:
          - zoo1
          - zoo2
          - zoo3
        networks:
          - ckNet
      ck2_2:
        image: clickhouse/clickhouse-server
        container_name: ck2_2
        ulimits:
          nofile:
            soft: "262144"
            hard: "262144"
        volumes:
          - ./2_2/config.d:/etc/clickhouse-server/config.d
          - ./config.xml:/etc/clickhouse-server/config.xml
        ports:
          - 18126:8123 # HTTP interface
          - 19003:9000 # native client
        depends_on:
          - zoo1
          - zoo2
          - zoo3
        networks:
          - ckNet
    networks:
      ckNet:
        driver: bridge

In config.xml, change the included file name to metrika_shard:

    <include_from>/etc/clickhouse-server/config.d/metrika_shard.xml</include_from>

2. Shard and replica configuration

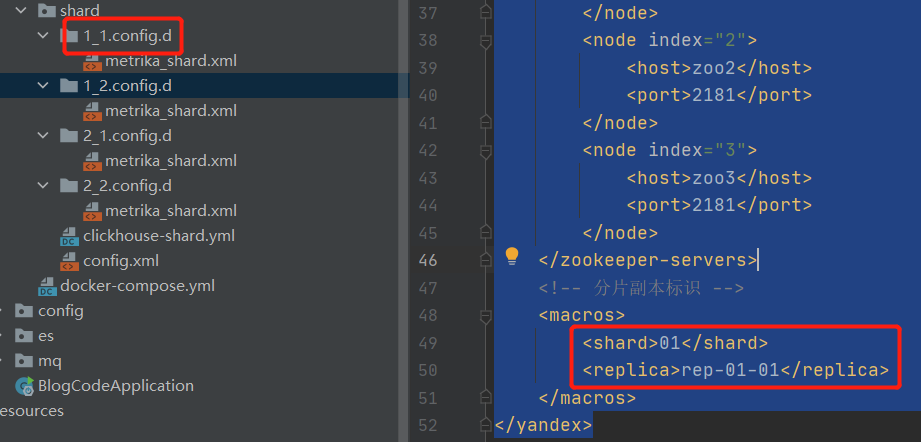

我们配置了了2个分片,每个分片1个副本,目录如下

每个目录下metrika_shard.xml配置如下

    <?xml version="1.0" encoding="utf-8" ?>
    <yandex>
        <remote_servers>
            <shenjian_cluster>
                <!-- Shard 1 -->
                <shard>
                    <internal_replication>true</internal_replication>
                    <replica>
                        <!-- First replica -->
                        <host>ck1_1</host>
                        <port>9000</port>
                    </replica>
                    <replica>
                        <!-- Second replica; in production, replicas live on different machines -->
                        <host>ck1_2</host>
                        <port>9000</port>
                    </replica>
                </shard>
                <!-- Shard 2 -->
                <shard>
                    <internal_replication>true</internal_replication>
                    <replica>
                        <host>ck2_1</host>
                        <port>9000</port>
                    </replica>
                    <replica>
                        <host>ck2_2</host>
                        <port>9000</port>
                    </replica>
                </shard>
            </shenjian_cluster>
        </remote_servers>
        <zookeeper-servers>
            <node index="1">
                <host>zoo1</host>
                <port>2181</port>
            </node>
            <node index="2">
                <host>zoo2</host>
                <port>2181</port>
            </node>
            <node index="3">
                <host>zoo3</host>
                <port>2181</port>
            </node>
        </zookeeper-servers>
        <!-- Shard and replica identifiers for this node -->
        <macros>
            <shard>01</shard>
            <replica>rep-01-01</replica>
        </macros>
    </yandex>

For the other three nodes, only the shard and replica identifiers in the macros section need to change.

1_2/config.d/metrika_shard.xml:

    <macros>
        <shard>01</shard>
        <replica>rep-01-02</replica>
    </macros>

2_1/config.d/metrika_shard.xml:

    <macros>
        <shard>02</shard>
        <replica>rep-02-01</replica>
    </macros>

2_2/config.d/metrika_shard.xml:

    <macros>
        <shard>02</shard>
        <replica>rep-02-02</replica>
    </macros>
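Since the four macro pairs follow one pattern, they can be generated instead of edited by hand. The following is a minimal sketch, assuming the `rep-<shard>-<replica>` naming and the `1_1` ... `2_2` directory names used in this article; `macros_xml` and `NODES` are illustrative names, not part of the original setup.

```python
# Sketch: render the <macros> fragment for each node directory.
# Assumes this article's convention: shard "01"/"02" and
# replica "rep-<shard>-<replica>". Helper names are hypothetical.


def macros_xml(shard: int, replica: int) -> str:
    """Return the <macros> fragment for one node."""
    shard_id = f"{shard:02d}"
    replica_id = f"rep-{shard_id}-{replica:02d}"
    return (
        "<macros>\n"
        f"    <shard>{shard_id}</shard>\n"
        f"    <replica>{replica_id}</replica>\n"
        "</macros>"
    )


# Directory name -> (shard number, replica number), matching ./1_1 ... ./2_2
NODES = {f"{s}_{r}": (s, r) for s in (1, 2) for r in (1, 2)}

for directory, (shard, replica) in NODES.items():
    print(f"# {directory}/config.d/metrika_shard.xml")
    print(macros_xml(shard, replica))
```

Each printed fragment replaces the `<macros>` section of the corresponding file; the rest of metrika_shard.xml stays identical across nodes.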

OK, at this point the cluster can be started with

    docker-compose -f clickhouse-shard.yml up -d

3. Create the table

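Replace shenjian_cluster with the name of your own cluster, i.e. the child tag of remote_servers in metrika_shard.xml. The {shard} and {replica} placeholders need no changes: ClickHouse fills them in from the macros configured on each node, and ON CLUSTER creates the table on every node in the cluster.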

    CREATE TABLE house ON CLUSTER shenjian_cluster
    (
        id String,
        city String,
        region String,
        name String,
        price Float32,
        publish_date DateTime
    )
    ENGINE=ReplicatedMergeTree('/clickhouse/table/{shard}/house', '{replica}')
    PARTITION BY toYYYYMMDD(publish_date)
    PRIMARY KEY(id)
    ORDER BY (id, city, region, name)

After creation, the table exists on all nodes [127.0.0.1:18123, 127.0.0.1:18124, 127.0.0.1:18125, 127.0.0.1:18126], as expected.
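The check can also be scripted against the HTTP interface. This is a rough sketch, assuming the host port mappings from clickhouse-shard.yml and a `default` user that accepts unauthenticated HTTP requests from the host; `query_url`, `table_exists`, and `check_all` are illustrative helper names.

```python
# Sketch: ask every node over HTTP whether the `house` table exists.
# Assumptions: ports 18123-18126 are mapped as in clickhouse-shard.yml and
# the default user needs no password. Helper names are hypothetical.
from urllib.parse import quote, urlencode
from urllib.request import urlopen

HTTP_PORTS = [18123, 18124, 18125, 18126]


def query_url(port: int, sql: str, host: str = "127.0.0.1") -> str:
    """Build a GET URL that passes the SQL text in the `query` parameter."""
    return f"http://{host}:{port}/?{urlencode({'query': sql}, quote_via=quote)}"


def table_exists(port: int, table: str = "house") -> bool:
    """EXISTS TABLE returns a single value: 1 if present, 0 otherwise."""
    with urlopen(query_url(port, f"EXISTS TABLE {table}"), timeout=5) as resp:
        return resp.read().decode().strip() == "1"


def check_all(ports=HTTP_PORTS) -> dict:
    """Map each HTTP port to whether the table exists on that node."""
    return {port: table_exists(port) for port in ports}
```

With the cluster running, calling `check_all()` should report `True` for every port if the ON CLUSTER statement reached all four nodes.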

4. Create the Distributed table

    CREATE TABLE distribute_house ON CLUSTER shenjian_cluster
    (
        id String,
        city String,
        region String,
        name String,
        price Float32,
        publish_date DateTime
    )
    ENGINE=Distributed(shenjian_cluster, default, house, hiveHash(publish_date))

- house: the name of the local table

- hiveHash(publish_date): the sharding key
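To make the role of the sharding key concrete: a Distributed table takes the remainder of the sharding expression divided by the total shard weight and sends the row to the shard whose weight interval contains that remainder. The sketch below models this for two shards with the default weight of 1. `zlib.crc32` is only a stand-in for illustration and is not hiveHash; `pick_shard` and `stand_in_hash` are hypothetical names.

```python
# Sketch of shard selection for a Distributed table:
# remainder = sharding_key_value % total_weight; the shard whose weight
# interval [previous_weights, previous_weights + weight) contains the
# remainder receives the row.
# NOTE: zlib.crc32 is a stand-in hash for illustration, NOT hiveHash.
import zlib
from typing import Sequence


def pick_shard(key_value: int, weights: Sequence[int]) -> int:
    """Return the 1-based shard number for an already-hashed key."""
    remainder = key_value % sum(weights)
    upper = 0
    for shard_num, weight in enumerate(weights, start=1):
        upper += weight
        if remainder < upper:
            return shard_num
    raise AssertionError("unreachable: remainder is always < sum(weights)")


def stand_in_hash(text: str) -> int:
    """Illustrative hash only; real routing uses the table's sharding expression."""
    return zlib.crc32(text.encode("utf-8"))


weights = [1, 1]  # two shards, default weight 1 each
for value in ["2022-08-01", "2021-08-01", "2020-08-01"]:
    print(value, "-> shard", pick_shard(stand_in_hash(value), weights))
```

The practical consequence is that rows with the same sharding key value always land on the same shard, so the choice of key decides how evenly data spreads.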

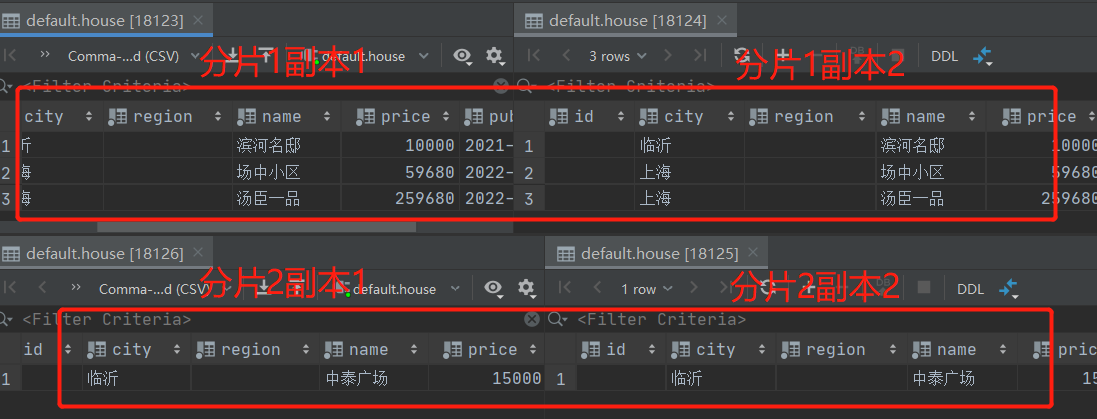

5. Insert data to verify the sharded cluster

    INSERT INTO distribute_house(city, name, price, publish_date) VALUES
        ('上海', '场中小区', 59680, '2022-08-01'),
        ('上海', '汤臣一品', 259680, '2022-08-01'),
        ('临沂', '滨河名邸', 10000, '2021-08-01'),
        ('临沂', '中泰广场', 15000, '2020-08-01');
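One way to confirm the result is to count rows in the local `house` table on every node: the two replicas of a shard should agree, and the shard totals should add up to the four inserted rows. The sketch below assumes the port mappings from clickhouse-shard.yml and an unauthenticated `default` user; `SHARDS`, `fetch_count`, and `summarize` are illustrative names. Distributed inserts are forwarded to the shards in the background by default, so counts may take a moment to settle.

```python
# Sketch: compare row counts of the local `house` table across nodes.
# Assumptions: host ports as mapped in clickhouse-shard.yml, default user
# without a password. Names below are hypothetical helpers.
from urllib.parse import quote
from urllib.request import urlopen

# shard id -> HTTP ports of its replicas (ck1_1, ck1_2 / ck2_1, ck2_2)
SHARDS = {"01": [18123, 18124], "02": [18125, 18126]}


def fetch_count(port: int, table: str = "house") -> int:
    """Run SELECT count() against one node's HTTP interface."""
    sql = quote(f"SELECT count() FROM {table}")
    with urlopen(f"http://127.0.0.1:{port}/?query={sql}", timeout=5) as resp:
        return int(resp.read().decode().strip())


def summarize(counts: dict, shards: dict = SHARDS) -> dict:
    """Check that replicas of each shard agree; return rows per shard."""
    per_shard = {}
    for shard, ports in shards.items():
        values = {counts[port] for port in ports}
        if len(values) != 1:
            raise ValueError(f"replicas of shard {shard} disagree: {values}")
        per_shard[shard] = values.pop()
    return per_shard
```

With the cluster running, `summarize({p: fetch_count(p) for ports in SHARDS.values() for p in ports})` returns the row count per shard; how the four rows split between shards depends on the sharding key.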

OK, the sharded cluster works. Give yourself a round of applause!

Feel free to follow the WeChat official account 算法小生 or Shen Jian's tech blog at shenjian.online.


- Original article: https://blog.csdn.net/SJshenjian/article/details/127454093