
Setting Up a Hadoop Pseudo-Distributed Cluster (Single Node)

## I. Basic System Setup

1. Change the hostname

```bash
sudo hostnamectl set-hostname hadoop
bash  # start a new shell so the prompt picks up the new hostname
```

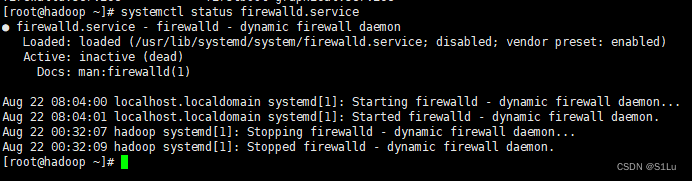
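The hostname `hadoop` is used later in `ssh root@hadoop` and in the HDFS URI `hdfs://hadoop:9000`, so it has to resolve on this machine. The original steps do not cover this. One common way is an `/etc/hosts` entry, and the sketch below assumes that approach. `add_host_entry` is a hypothetical helper. The IP `192.168.0.108` is taken from the web UI addresses at the end of this article; substitute your own.

```bash
# Hypothetical helper (not part of the original steps): map an IP to a
# hostname in a hosts file, doing nothing if that mapping already exists.
add_host_entry() {
  local hosts_file="$1" ip="$2" name="$3"
  # awk exits 0 when a line whose first field is $ip also lists $name
  if awk -v ip="$ip" -v name="$name" '
      $1 == ip { for (i = 2; i <= NF; i++) if ($i == name) found = 1 }
      END { exit !found }' "$hosts_file" 2>/dev/null; then
    return 0
  fi
  # Append "IP<TAB>name" as a new line
  printf '%s\t%s\n' "$ip" "$name" >> "$hosts_file"
}
```

Run it as root, for example `add_host_entry /etc/hosts 192.168.0.108 hadoop`. Running it twice is harmless.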

2. Disable the firewall

```bash
systemctl stop firewalld.service
systemctl disable firewalld.service
systemctl status firewalld.service
```

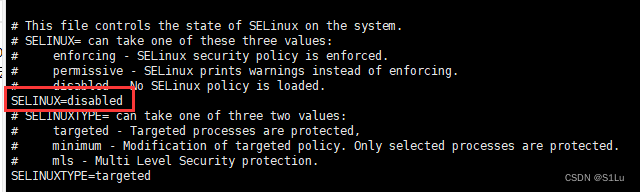

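3. Disable SELinux

Open the config file and set `SELINUX=disabled`. The setting takes effect after a reboot.

```bash
vi /etc/selinux/config
```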
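The manual `vi` edit can also be scripted. This is a minimal sketch that assumes the stock config file contains a `SELINUX=` line; `disable_selinux_config` is a hypothetical name. The file is only read at boot, so `setenforce 0` is the usual way to put the running system into permissive mode until then.

```bash
# Hypothetical helper: set SELINUX=disabled in an SELinux config file.
# Usage (as root): disable_selinux_config /etc/selinux/config && setenforce 0
disable_selinux_config() {
  local cfg="$1"
  # ^SELINUX= does not match SELINUXTYPE=, so the policy type line is kept
  sed -i 's/^SELINUX=.*/SELINUX=disabled/' "$cfg"
}
```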

4. Change the repo (package repository) source

5. Install software

## II. Java Environment Setup

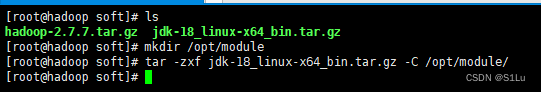

1. Extract the JDK archive

```bash
tar -zxf jdk-8u221-linux-x64.tar.gz -C /opt/module/
```

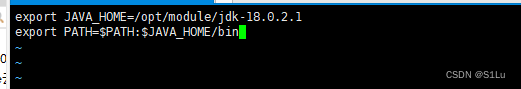

2. Configure the system environment variables

```bash
vi /etc/profile.d/hadoop.sh
```

Add the following lines:

```bash
export JAVA_HOME=/opt/module/jdk1.8.0_221
export PATH=$PATH:$JAVA_HOME/bin
```

3. Apply the changes

```bash
source /etc/profile.d/hadoop.sh
```

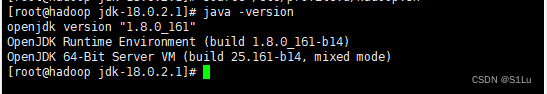

4. Verify

```bash
java -version
```
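Steps 2 and 3 can also be done without an editor. This sketch assumes the JDK path used above; `write_java_profile` is a hypothetical helper. `$PATH` and `$JAVA_HOME` are escaped inside the heredoc, so they are expanded each time the profile is sourced rather than when the file is written.

```bash
# Hypothetical helper: write the Java export lines to a profile script.
# Usage (as root): write_java_profile /etc/profile.d/hadoop.sh /opt/module/jdk1.8.0_221
write_java_profile() {
  local profile="$1" java_home="$2"
  # '>' overwrites the file; \$ keeps the variables literal in the output
  cat > "$profile" <<EOF
export JAVA_HOME=${java_home}
export PATH=\$PATH:\$JAVA_HOME/bin
EOF
}
```

After running it, `source /etc/profile.d/hadoop.sh` as in step 3. The helper overwrites the file. That is acceptable here because these are the first lines it receives; the Hadoop lines in section IV are appended later.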

## III. Passwordless SSH Login

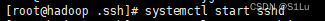

1. Start the SSH daemon

```bash
systemctl start sshd
```

2. Check its status

```bash
systemctl status sshd
```

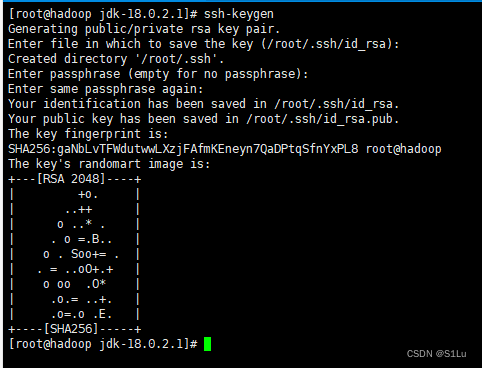

3. Generate a key pair

```bash
ssh-keygen
```

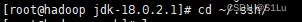

4. Change to the SSH directory

```bash
cd ~/.ssh/
```

5. Add the public key to authorized_keys

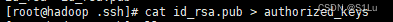

```bash
# '>' overwrites any existing authorized_keys; use '>>' to append instead
cat id_rsa.pub > authorized_keys
```

6. Restrict the file permissions

```bash
chmod 600 authorized_keys
```

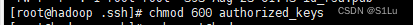

7. Test with a loopback login to the local machine

```bash
ssh root@hadoop
```

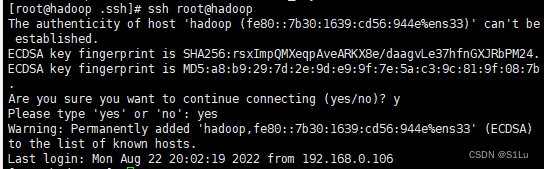

8. Log out

```bash
exit
```
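Steps 3 to 6 can be collapsed into a non-interactive sequence. This sketch uses a hypothetical helper, `authorize_key`. It appends the key with `>>` only if the key is not already listed, so existing keys survive. It also sets `~/.ssh` to 700 and `authorized_keys` to 600, the conventional permissions that sshd's default `StrictModes` check accepts. The `ssh-keygen` line in the comment assumes an RSA key with an empty passphrase, matching the `id_rsa.pub` name used above.

```bash
# Hypothetical helper: add a public key to DIR/authorized_keys once,
# with conventional permissions.
# Usage: ssh-keygen -t rsa -N "" -f ~/.ssh/id_rsa
#        authorize_key ~/.ssh/id_rsa.pub ~/.ssh
authorize_key() {
  local pubkey="$1" ssh_dir="$2"
  local auth="$ssh_dir/authorized_keys"
  mkdir -p "$ssh_dir"
  chmod 700 "$ssh_dir"
  touch "$auth"
  # -x whole line, -F fixed string, -f read the pattern from the key file
  if ! grep -qxF -f "$pubkey" "$auth"; then
    cat "$pubkey" >> "$auth"
  fi
  chmod 600 "$auth"
}
```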

## IV. Hadoop Installation and Configuration

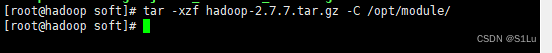

1. Extract the archive

```bash
tar -xzf hadoop-2.7.7.tar.gz -C /opt/module/
```

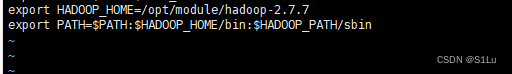

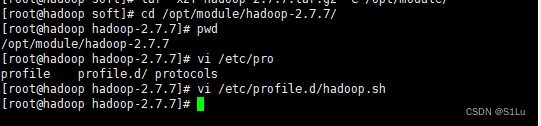

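2. Configure the Hadoop environment variables

Open the same profile script:

```bash
vi /etc/profile.d/hadoop.sh
```

Append the following lines. `$HADOOP_HOME/sbin` puts the start and stop scripts, such as `start-all.sh`, on the PATH:

```bash
export HADOOP_HOME=/opt/module/hadoop-2.7.7
export PATH=$PATH:$HADOOP_HOME/bin:$HADOOP_HOME/sbin
```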

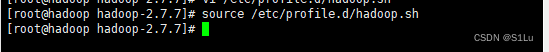

3. Apply the script

```bash
source /etc/profile.d/hadoop.sh
```

4. Check that the settings took effect

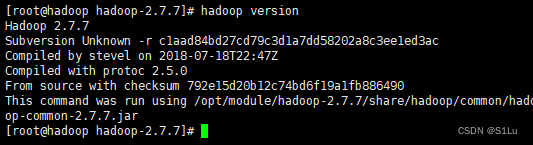

```bash
hadoop version
```
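Step 2 can be done by appending to the profile script instead of editing it. This sketch uses a hypothetical helper, `append_hadoop_profile`. It appends with `>>`, so the Java lines stay in place, and it does nothing if `HADOOP_HOME` is already exported in the file. After sourcing, `command -v start-dfs.sh` should print a path under `$HADOOP_HOME/sbin`.

```bash
# Hypothetical helper: append the Hadoop export lines to a profile script.
# Usage (as root): append_hadoop_profile /etc/profile.d/hadoop.sh /opt/module/hadoop-2.7.7
append_hadoop_profile() {
  local profile="$1" hadoop_home="$2"
  # Skip if HADOOP_HOME is already exported in this file
  grep -q '^export HADOOP_HOME=' "$profile" 2>/dev/null && return 0
  # Both bin (hadoop, hdfs) and sbin (start-*.sh) go on the PATH
  cat >> "$profile" <<EOF
export HADOOP_HOME=${hadoop_home}
export PATH=\$PATH:\$HADOOP_HOME/bin:\$HADOOP_HOME/sbin
EOF
}
```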

## V. Configure HDFS

1. Set JAVA_HOME in hadoop-env.sh

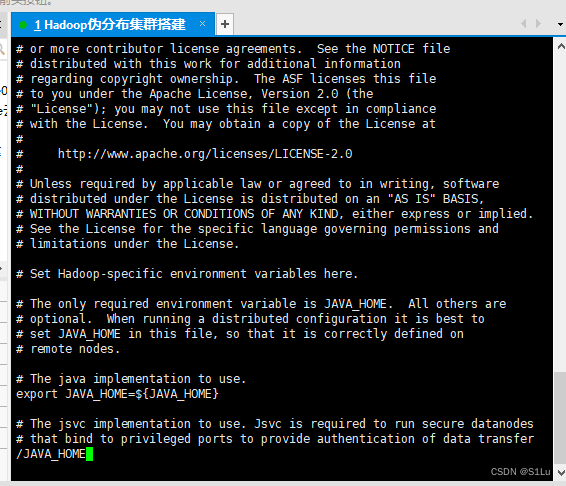

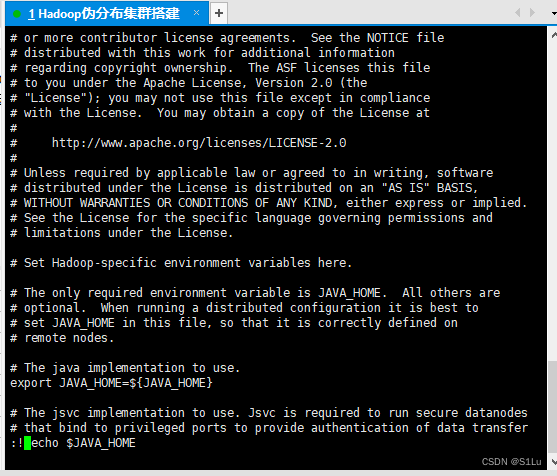

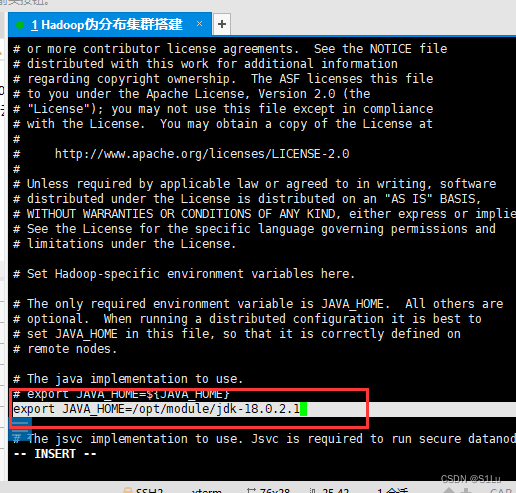

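First change to the Hadoop configuration directory and open hadoop-env.sh. From inside vi, you can print the current value of JAVA_HOME: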

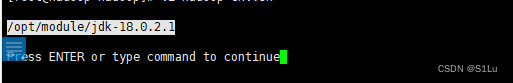

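```bash
cd /opt/module/hadoop-2.7.7/etc/hadoop
vi hadoop-env.sh
```

In vi, `:! echo $JAVA_HOME` prints:

```
/opt/module/jdk1.8.0_221
```

Set the JAVA_HOME line in hadoop-env.sh to this absolute path, that is, `export JAVA_HOME=/opt/module/jdk1.8.0_221`.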

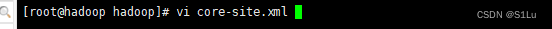

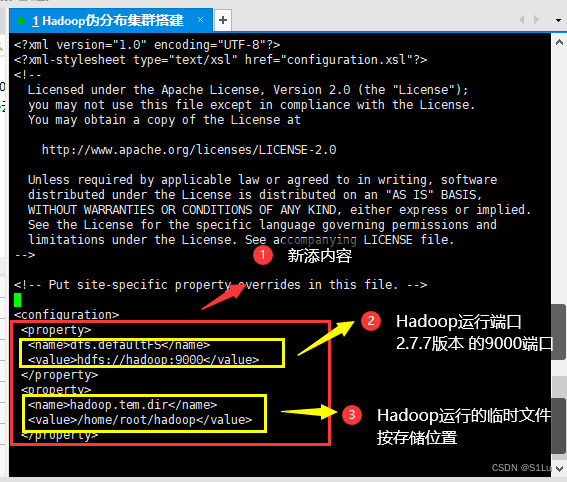
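The `vi` edit of hadoop-env.sh can be replaced by a `sed` substitution. This sketch assumes the file has a single line starting with `export JAVA_HOME=`; the stock Hadoop 2.7 file ships `export JAVA_HOME=${JAVA_HOME}`. `set_hadoop_java_home` is a hypothetical helper. A hard-coded absolute path avoids depending on how the SSH sessions used by the start scripts set up their environment.

```bash
# Hypothetical helper: point hadoop-env.sh at a fixed JDK path.
# Usage: set_hadoop_java_home /opt/module/hadoop-2.7.7/etc/hadoop/hadoop-env.sh /opt/module/jdk1.8.0_221
set_hadoop_java_home() {
  local env_file="$1" java_home="$2"
  # '|' as the sed delimiter avoids escaping the slashes in the path
  # (the path itself must not contain '|' or '&')
  sed -i "s|^export JAVA_HOME=.*|export JAVA_HOME=${java_home}|" "$env_file"
}
```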

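2. Configure core-site.xml

```bash
vi core-site.xml
```

New configuration (`fs.default.name` is the deprecated name of `fs.defaultFS`; Hadoop 2.7.7 accepts both):

```xml
<configuration>
  <property>
    <name>fs.default.name</name>
    <value>hdfs://localhost:9000</value>
  </property>
</configuration>
```

Original configuration (these `<property>` elements go inside `<configuration>`):

```xml
<property>
  <name>fs.defaultFS</name>
  <value>hdfs://hadoop:9000</value>
</property>
<property>
  <name>hadoop.tmp.dir</name>
  <value>/home/root/hadoop</value>
</property>
```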

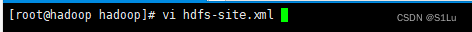
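Writing the whole file from a heredoc avoids hand-editing slips such as mangled closing tags. This is a sketch of the "original" variant, with the property spelled `fs.defaultFS`. `write_core_site` is a hypothetical helper; the URI and tmp directory are the article's values. Note that it replaces the existing core-site.xml entirely.

```bash
# Hypothetical helper: write core-site.xml into a Hadoop config directory.
# Usage: write_core_site /opt/module/hadoop-2.7.7/etc/hadoop hdfs://hadoop:9000 /home/root/hadoop
write_core_site() {
  local conf_dir="$1" fs_uri="$2" tmp_dir="$3"
  cat > "$conf_dir/core-site.xml" <<EOF
<?xml version="1.0" encoding="UTF-8"?>
<configuration>
  <property>
    <name>fs.defaultFS</name>
    <value>${fs_uri}</value>
  </property>
  <property>
    <name>hadoop.tmp.dir</name>
    <value>${tmp_dir}</value>
  </property>
</configuration>
EOF
}
```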

3. Configure hdfs-site.xml

```bash
vi hdfs-site.xml
```

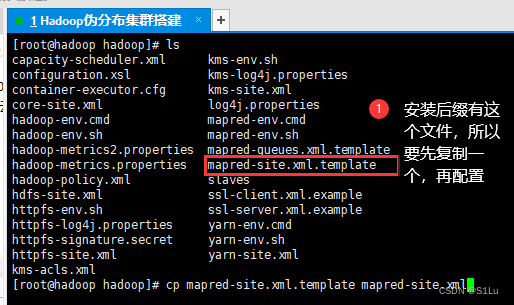

Inside `<configuration>`, add:

```xml
<property>
  <name>dfs.replication</name>
  <value>1</value>
</property>
<property>
  <name>dfs.namenode.name.dir</name>
  <value>file:///home/root/hadoop/name</value>
</property>
<property>
  <name>dfs.datanode.data.dir</name>
  <value>file:///home/root/hadoop/data</value>
</property>
```

4. Configure mapred-site.xml

```bash
cp mapred-site.xml.template mapred-site.xml
```

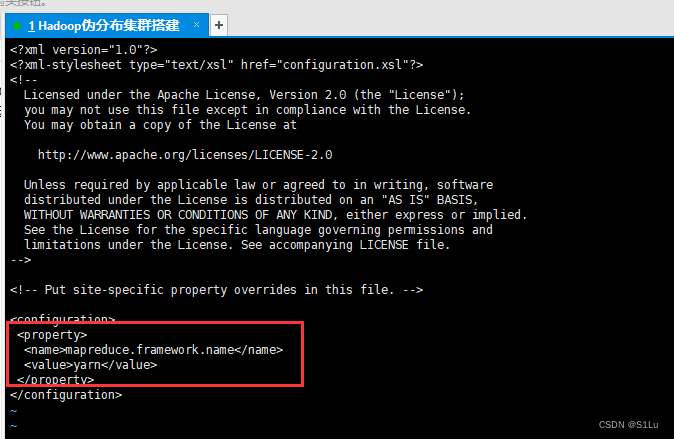

```bash
vi mapred-site.xml
```

```xml
<property>
  <name>mapreduce.framework.name</name>
  <value>yarn</value>
</property>
```

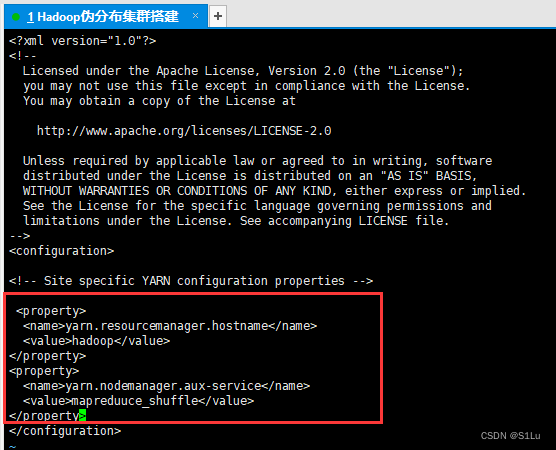

5. Configure yarn-site.xml

```bash
vi yarn-site.xml
```

```xml
<property>
  <name>yarn.resourcemanager.hostname</name>
  <value>hadoop</value>
</property>
<property>
  <name>yarn.nodemanager.aux-services</name>
  <value>mapreduce_shuffle</value>
</property>
```

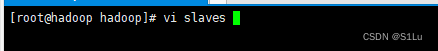

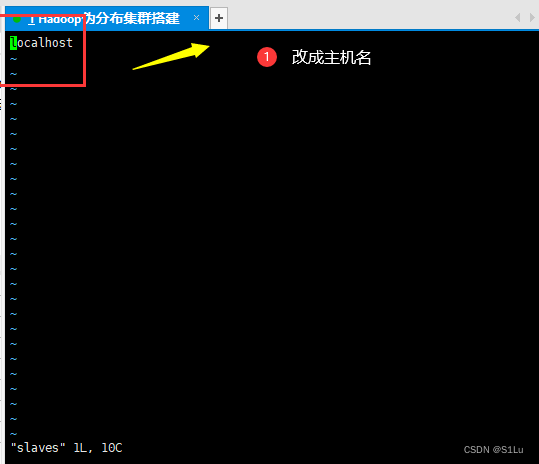
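mapred-site.xml, yarn-site.xml and hdfs-site.xml all share one shape, so a small generator that takes name/value pairs can write any of them. This is a sketch. `write_site_xml` is hypothetical and does no XML escaping, which is fine for plain values like these. Writing mapred-site.xml this way also makes copying the template unnecessary.

```bash
# Hypothetical helper: write a Hadoop *-site.xml from name/value pairs.
# Usage (in /opt/module/hadoop-2.7.7/etc/hadoop):
#   write_site_xml mapred-site.xml mapreduce.framework.name yarn
#   write_site_xml yarn-site.xml yarn.resourcemanager.hostname hadoop \
#                  yarn.nodemanager.aux-services mapreduce_shuffle
write_site_xml() {
  local file="$1"; shift
  {
    printf '<?xml version="1.0" encoding="UTF-8"?>\n<configuration>\n'
    # Remaining arguments are consumed in pairs: name, then value
    # (a trailing unpaired argument is ignored)
    while [ "$#" -ge 2 ]; do
      printf '  <property>\n    <name>%s</name>\n    <value>%s</value>\n  </property>\n' "$1" "$2"
      shift 2
    done
    printf '</configuration>\n'
  } > "$file"
}
```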

6. Configure slaves (the list of hosts that run a DataNode and a NodeManager)

```bash
vi slaves
```

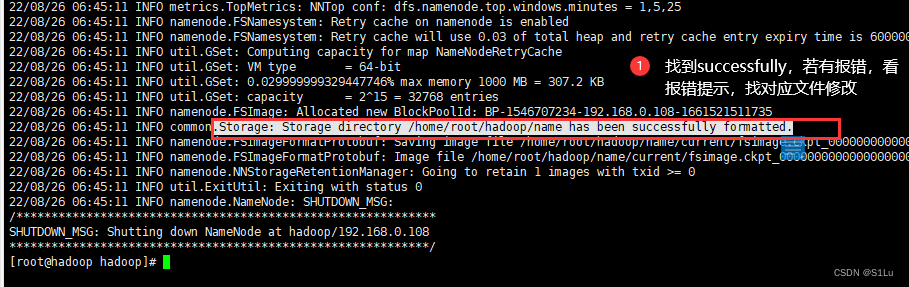

7. Format the NameNode

```bash
hdfs namenode -format
```

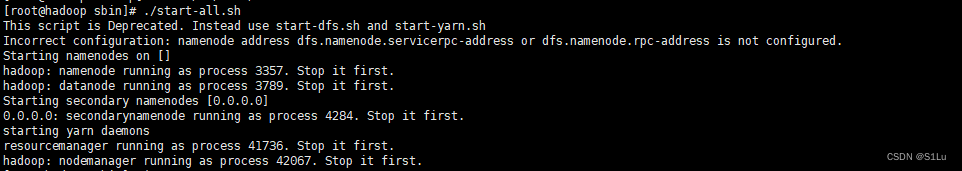
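`hdfs namenode -format` is meant to be run once. Formatting again gives the NameNode a new clusterID. A DataNode whose data directory still holds the old clusterID then fails with an "Incompatible clusterIDs" error. The guard below is a sketch that assumes the name directory from hdfs-site.xml above. `namenode_is_formatted` is hypothetical and relies on the `current/VERSION` file that formatting creates.

```bash
# Hypothetical helper: succeed if a NameNode storage dir is already formatted.
# Usage: namenode_is_formatted /home/root/hadoop/name || hdfs namenode -format
namenode_is_formatted() {
  local name_dir="$1"
  # Formatting writes current/VERSION (with the clusterID) into the name dir
  [ -f "$name_dir/current/VERSION" ]
}
```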

## VI. Start Hadoop

From /opt/module/hadoop-2.7.7/sbin, run:

```bash
bash start-all.sh
```

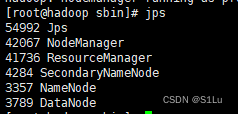

Check which daemons started:

```bash
jps
```

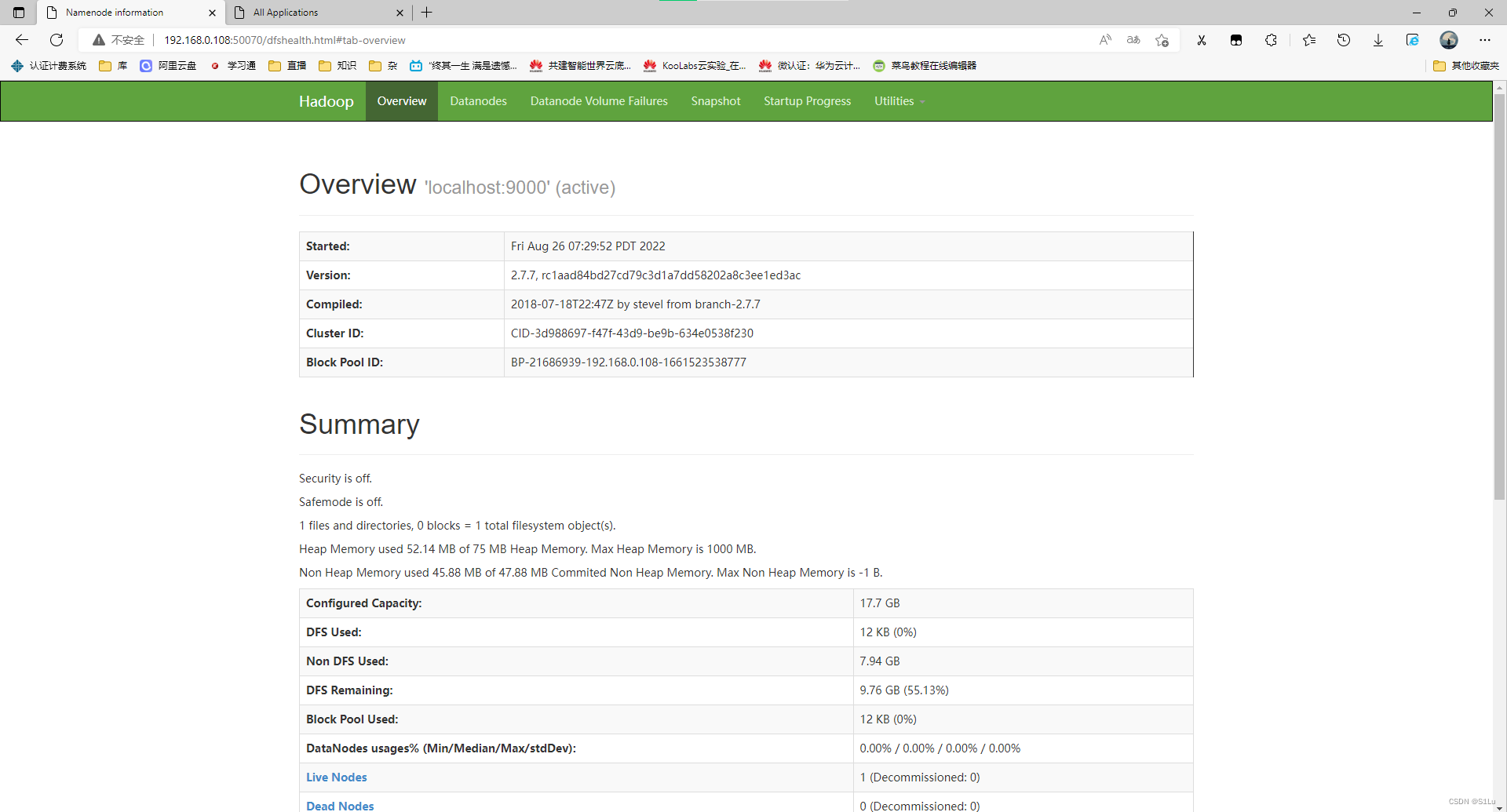
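On a healthy single node, `jps` should list NameNode, DataNode, SecondaryNameNode, ResourceManager and NodeManager, plus `Jps` itself. The check below assumes `jps` output is piped in. `missing_daemons` is a hypothetical helper. It prints each expected daemon it cannot find and returns non-zero if any are missing. Matching the whole second field keeps `NameNode` from matching `SecondaryNameNode`.

```bash
# Hypothetical helper: report expected Hadoop daemons missing from jps output.
# Usage: jps | missing_daemons && echo "all daemons running"
missing_daemons() {
  local out daemon rc=0
  out=$(cat)   # jps prints "<pid> <MainClassName>" per line
  for daemon in NameNode DataNode SecondaryNameNode ResourceManager NodeManager; do
    # Exact match on the class-name field
    if ! printf '%s\n' "$out" | awk -v d="$daemon" '$2 == d { f = 1 } END { exit !f }'; then
      echo "$daemon"
      rc=1
    fi
  done
  return "$rc"
}
```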

NameNode web UI: http://192.168.0.108:50070/dfshealth.html#tab-overview

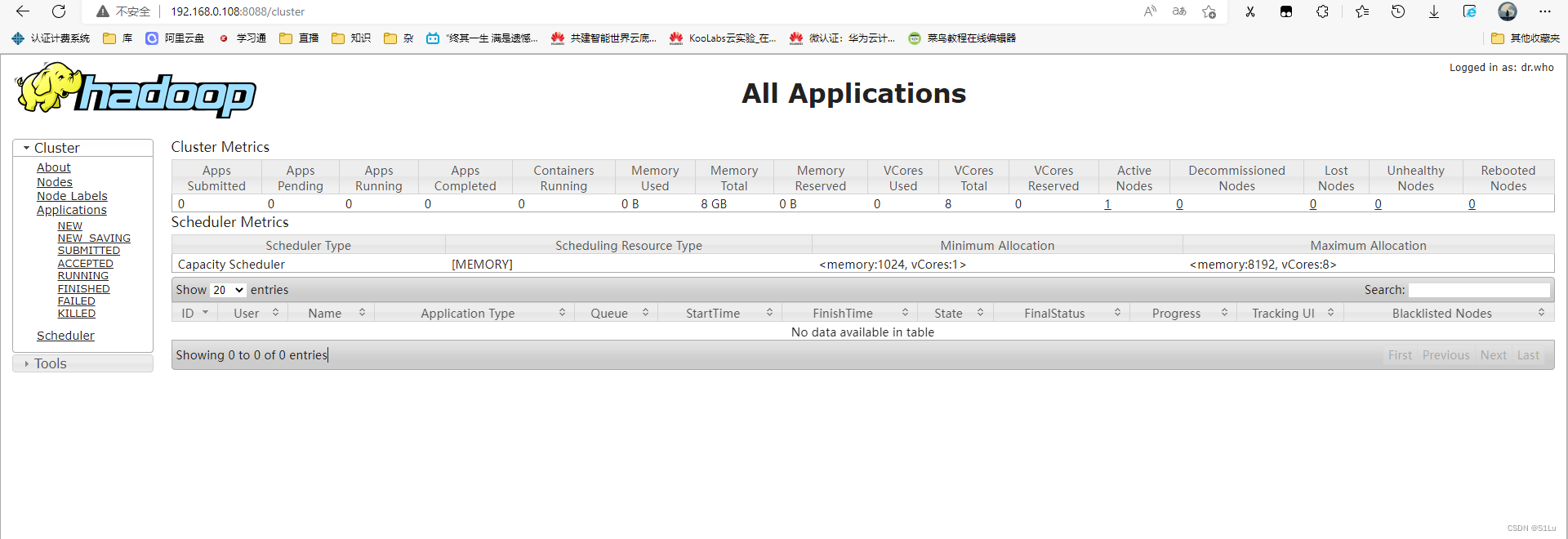

YARN ResourceManager web UI: http://192.168.0.108:8088/cluster

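## Afterword

2022-08-27, 12:08 a.m.

I had imported the wrong jar package, so the final run failed with an incompatibility error, and troubleshooting took two hours. Uninstalling and reinstalling the JDK fixed the problem. Pay attention to the environment's version requirements.

For handling the error, I followed this reference from an expert:

https://www.javaroad.cn/questions/79621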


Original article: https://blog.csdn.net/qq_51644623/article/details/127069745