
CKS Exam Guide

Table of Contents

- 1. CIS benchmarking

- 2. ingress-nginx with TLS

- 3. network policy

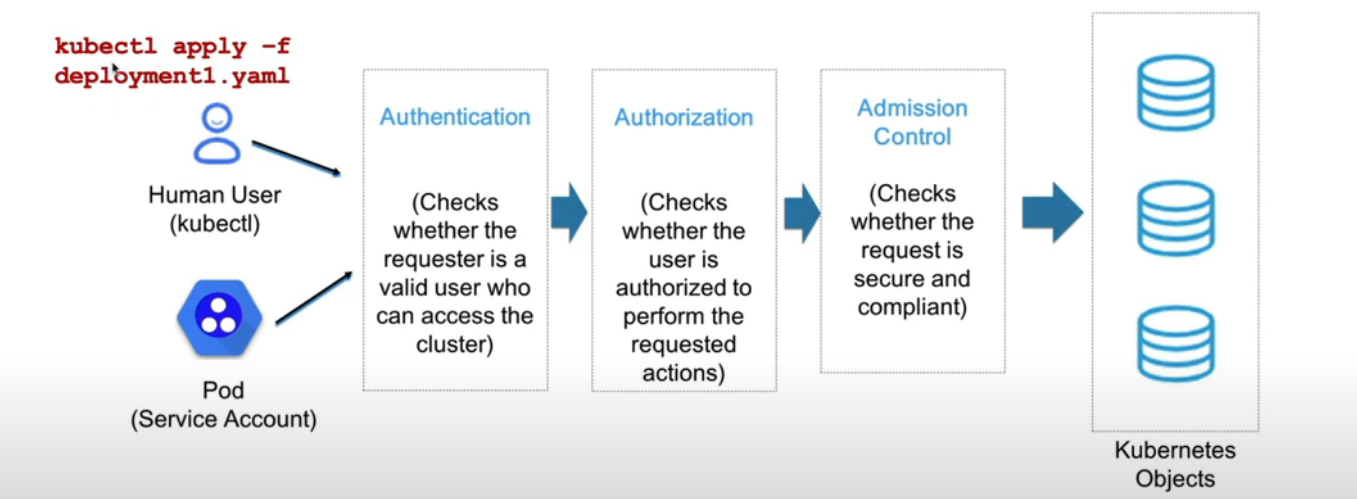

- 4. Minimize use of, and access to, GUI elements

- 5. Verify platform binaries before deploying

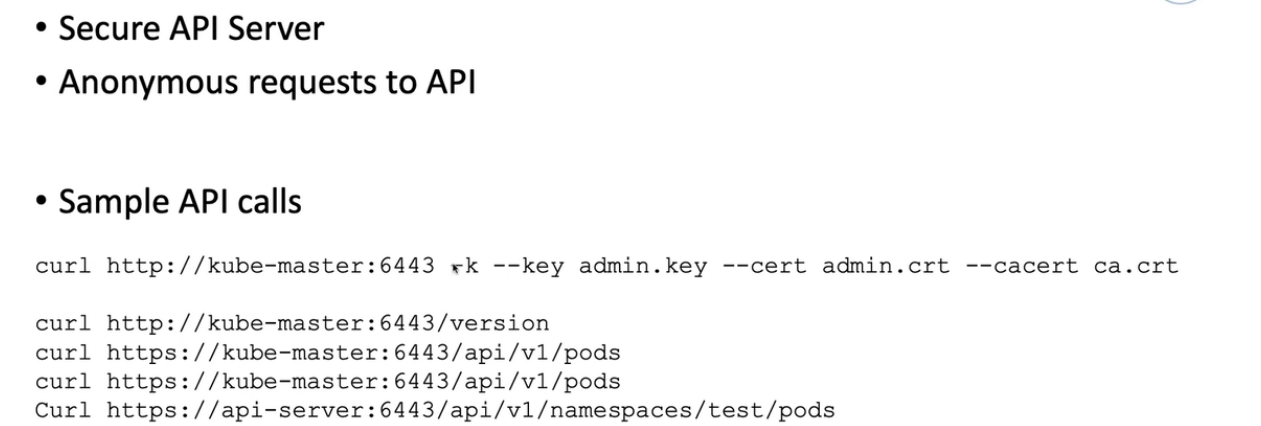

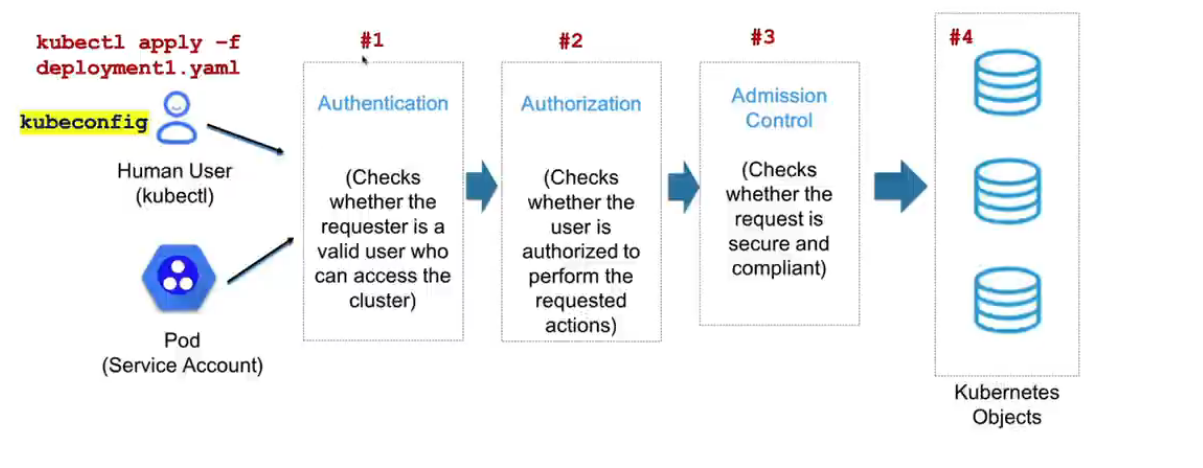

- 6. Restrict access to Kubernetes API

- 7. Disable Automount Service Account Token

- 8. Minimize host OS footprint Reduce Attack Surface

- 9. Restrict Container Access with AppArmor

- 10. Restrict a Container's Syscalls with seccomp

- 11. Pod Security Policies (PodSecurityPolicy)

- 12. Open Policy Agent - OPA Gatekeeper

- 13. Security Context for a Pod or Container

- 14. Manage Kubernetes secrets

- 15. Container Runtime Sandboxes gVisor runsc kata containers

- 16. Pod-to-pod encryption with mTLS

- 17. Minimize the footprint of base images

- 18. Allowlist registries with ImagePolicyWebhook

- 19. Sign and verify images

- 20. Static analysis using Kubesec, Kube-score, KubeLinter, Checkov & KubeHunter

- 21. Scan images for known vulnerabilities

- 22. Detect malicious syscall and file activity with Falco & Slack alerts

- 23. Ensure immutability of containers at runtime

- 24. Monitor access with Audit Logs

- 25. Pod Security Admission - PodSecurityPolicy

- 26. CKS Exam Experience, Topics to Focus & Tips

1. CIS benchmarking

1.1 Local installation

    curl -L https://github.com/aquasecurity/kube-bench/releases/download/v0.6.9/kube-bench_0.6.9_linux_amd64.deb -o kube-bench_0.6.9_linux_amd64.deb
    apt install ./kube-bench_0.6.9_linux_amd64.deb -f
    kube-bench


1.2 Running kube-bench inside Kubernetes

master:

    kubectl apply -f https://raw.githubusercontent.com/aquasecurity/kube-bench/main/job-master.yaml

node:

    kubectl apply -f https://raw.githubusercontent.com/aquasecurity/kube-bench/main/job-node.yaml

    kubectl get job
    kubectl get po
    kubectl logs xxxx   # xxxx: name of the kube-bench pod listed by "kubectl get po"


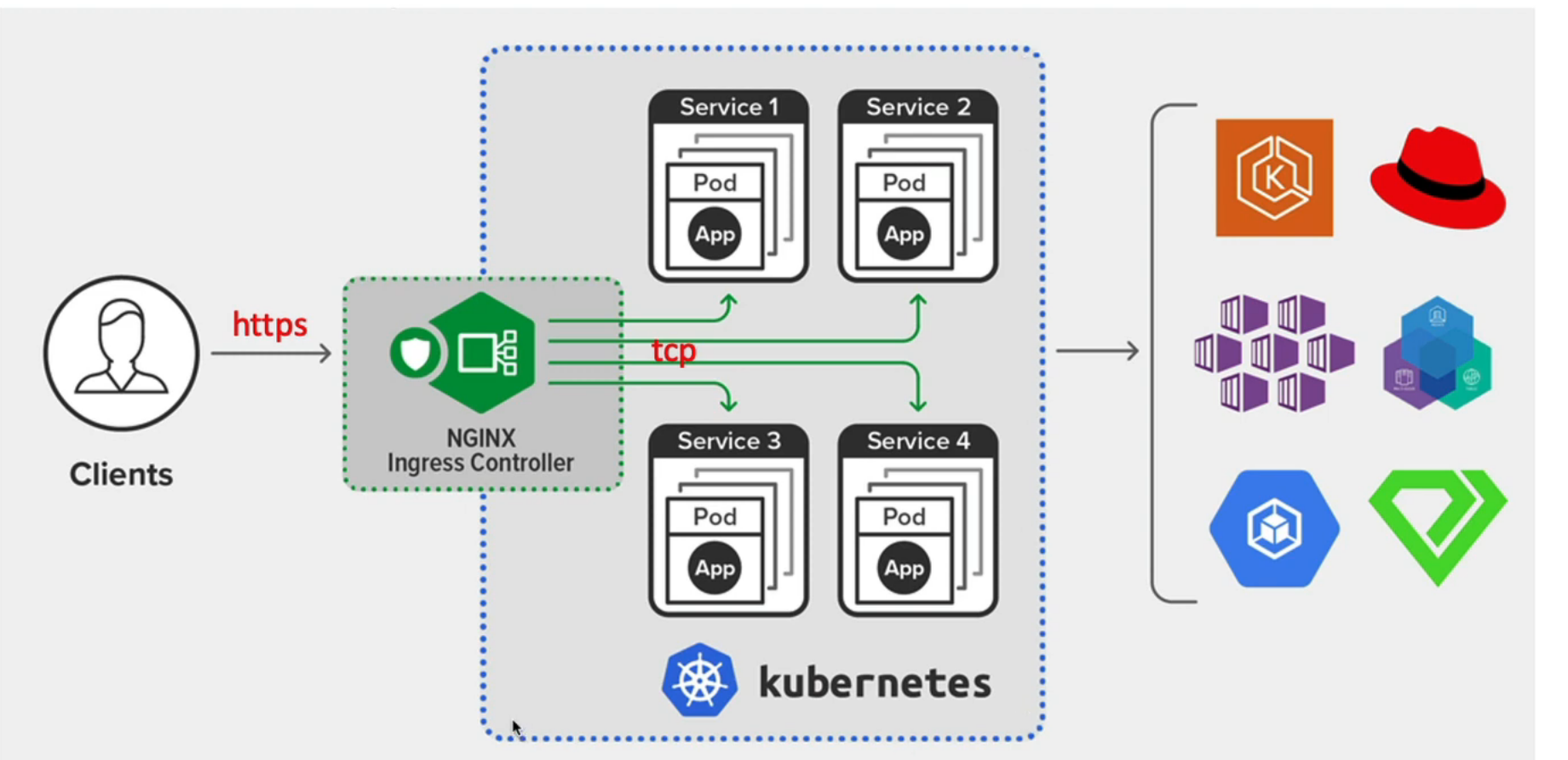

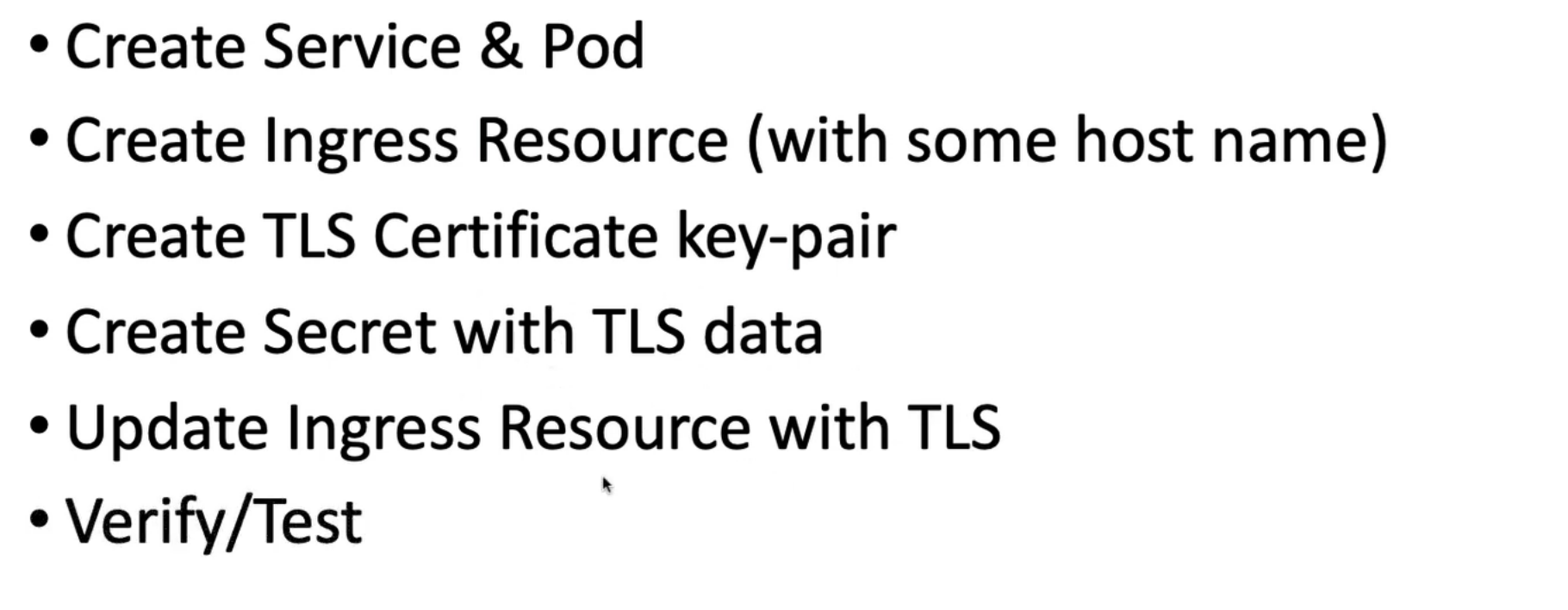
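Many kube-bench findings on a kubeadm control plane are file-permission checks, for example on the static Pod manifests under /etc/kubernetes/manifests, which the CIS benchmark expects to be 644 or 600 "or more restrictive" depending on the benchmark version. As a rough sketch of what such a check evaluates (an illustration, not kube-bench's own implementation), the hypothetical perm_at_most helper below reports whether a file sets any permission bit outside an allowed octal mask. It assumes GNU stat on Linux.

```shell
#!/usr/bin/env bash
# Sketch of a "permissions are MAX or more restrictive" check, similar in spirit
# to kube-bench's file-permission items. perm_at_most is a hypothetical helper.
# Assumes GNU stat (Linux), where %a prints the octal mode, e.g. 644.

perm_at_most() {
  local file=$1 max=$2 mode
  mode=$(stat -c '%a' "$file") || return 2
  # A violation is any permission bit set on the file but absent from MAX,
  # so 600 and 400 pass a 600 check while 644 and 640 fail it.
  if (( (8#$mode & ~8#$max) == 0 )); then
    echo "PASS $file mode $mode is within $max"
  else
    echo "FAIL $file mode $mode exceeds $max"
    return 1
  fi
}
```

On a kubeadm control-plane node you might run perm_at_most /etc/kubernetes/manifests/kube-apiserver.yaml 600 and, on a FAIL, tighten the file with chmod 600 before re-running kube-bench.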

2. ingress-nginx with TLS

2.1 create svc & pod

    k get no
    k run web --image caddy
    k expose po web --name caddy-svc --port 80


2.2 create ingress resource

caddy-ingress.yaml

    apiVersion: networking.k8s.io/v1
    kind: Ingress
    metadata:
      name: simple-ing
    spec:
      rules:
      - host: learnwithgvr.com
        http:
          paths:
          - path: /
            pathType: Prefix
            backend:
              service:
                name: caddy-svc
                port:
                  number: 80


    k apply -f caddy-ingress.yaml
    curl learnwithgvr.com   # assumes learnwithgvr.com resolves to the ingress controller, e.g. via /etc/hosts


2.3 create TLS certificate key-pair

    openssl req -x509 -newkey rsa:4096 -sha256 -nodes -keyout tls.key -out tls.crt -subj "/CN=learnwithgvr.com" -days 365

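Before putting the pair into a Secret, it can help to confirm that the certificate carries the expected host name and that tls.crt and tls.key actually belong together, since a mismatched pair is a common cause of TLS errors at the ingress. The sketch below regenerates a throwaway pair in a temporary directory so it runs anywhere. It also sets a subjectAltName with -addext (OpenSSL 1.1.1 or newer assumed), because many clients, such as browsers and Go programs, match the host name only against SAN entries and ignore the CN; the 2048-bit key is only there to keep the demo fast.

```shell
#!/usr/bin/env bash
# Sketch: create a self-signed pair like the one above, then inspect it.
# HOST and the temporary directory are illustrative; OpenSSL >= 1.1.1 assumed for -addext.
HOST=learnwithgvr.com
dir=$(mktemp -d)
cd "$dir"

openssl req -x509 -newkey rsa:2048 -sha256 -nodes \
  -keyout tls.key -out tls.crt -days 365 \
  -subj "/CN=${HOST}" \
  -addext "subjectAltName=DNS:${HOST}" 2>/dev/null

# Subject and SAN entry that the ingress will present to clients
openssl x509 -in tls.crt -noout -subject
openssl x509 -in tls.crt -noout -text | grep -o "DNS:${HOST}"

# The certificate's public key must equal the one derived from the private key
cert_pub=$(openssl x509 -in tls.crt -noout -pubkey)
key_pub=$(openssl pkey -in tls.key -pubout)
if [ "$cert_pub" = "$key_pub" ]; then
  echo "key matches certificate"
else
  echo "key/cert MISMATCH"
fi
```

Once the Secret exists, the same inspection works on the stored copy, for example kubectl get secret sec-learnwithgvr -o jsonpath='{.data.tls\.crt}' | base64 -d | openssl x509 -noout -subject.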

create secret with TLS data

    k create secret tls sec-learnwithgvr --cert=tls.crt --key=tls.key

2.4 update ingress with TLS

caddy-ingress.yaml

    apiVersion: networking.k8s.io/v1
    kind: Ingress
    metadata:
      name: simple-ing
    spec:
      tls:
      - hosts:
        - learnwithgvr.com
        secretName: sec-learnwithgvr
      rules:
      - host: learnwithgvr.com
        http:
          paths:
          - path: /
            pathType: Prefix
            backend:
              service:
                name: caddy-svc
                port:
                  number: 80


Apply it and inspect the Ingress:

    k apply -f caddy-ingress.yaml
    k describe ing


2.5 verify/test

    curl --cacert tls.crt https://learnwithgvr.com

3. network policy

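- Restrict access to the control-plane ports (6443, 2379, 2380, 10250, 10251, 10252; on newer releases the scheduler and controller-manager serve on 10259 and 10257 instead)

- Restrict access to the worker-node ports (10250, 30000-32767)

- On cloud providers, use Kubernetes network policies to restrict Pod access to the cloud metadata service

The example below assumes AWS: the metadata IP address 169.254.169.254 should be blocked, while all other external addresses stay reachable.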

    apiVersion: networking.k8s.io/v1
    kind: NetworkPolicy
    metadata:
      name: deny-only-cloud-metadata-access
    spec:
      podSelector: {}
      policyTypes:
      - Egress
      egress:
      - to:
        - ipBlock:
            cidr: 0.0.0.0/0
            except:
            - 169.254.169.254/32

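To reason about which egress destinations the ipBlock above still allows, the sketch below mimics the cidr/except rule in bash: an address matches when it lies inside cidr and inside none of the except ranges. It only illustrates the matching logic for IPv4; the real enforcement is done by the CNI plugin, and the function names are hypothetical.

```shell
#!/usr/bin/env bash
# Illustration of NetworkPolicy ipBlock matching (IPv4 only): an address matches
# when it is inside "cidr" and inside none of the "except" ranges.
# ip_to_int, in_cidr and egress_allowed are hypothetical helper names.

ip_to_int() {                      # dotted quad -> 32-bit integer
  local a b c d                    # assumes well-formed input without leading zeros
  IFS=. read -r a b c d <<< "$1"
  echo $(( (a << 24) | (b << 16) | (c << 8) | d ))
}

in_cidr() {                        # in_cidr IP CIDR -> exit status 0 if IP is in CIDR
  local ip net prefix mask
  ip=$(ip_to_int "$1")
  net=$(ip_to_int "${2%/*}")
  prefix=${2#*/}
  if (( prefix == 0 )); then
    mask=0                         # /0 matches every address
  else
    mask=$(( (0xFFFFFFFF << (32 - prefix)) & 0xFFFFFFFF ))
  fi
  (( (ip & mask) == (net & mask) ))
}

# Values taken from the deny-only-cloud-metadata-access policy above
CIDR=0.0.0.0/0
EXCEPT=(169.254.169.254/32)

egress_allowed() {
  local e
  in_cidr "$1" "$CIDR" || return 1
  for e in "${EXCEPT[@]}"; do
    if in_cidr "$1" "$e"; then return 1; fi
  done
  return 0
}

for ip in 169.254.169.254 8.8.8.8 10.96.0.10; do
  if egress_allowed "$ip"; then echo "$ip allowed"; else echo "$ip blocked"; fi
done
```

Running it prints "169.254.169.254 blocked", followed by "8.8.8.8 allowed" and "10.96.0.10 allowed".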

4. Minimize use of, and access to, GUI elements

    # steps to create a serviceaccount & use it
    kubectl create serviceaccount simple-user -n kube-system
    kubectl create clusterrole simple-reader --verb=get,list,watch --resource=pods,deployments,services,configmaps
    kubectl create clusterrolebinding cluster-simple-reader --clusterrole=simple-reader --serviceaccount=kube-system:simple-user
    # Kubernetes < 1.24: read the token from the auto-created secret (note the -n kube-system)
    SEC_NAME=$(kubectl get serviceaccount simple-user -n kube-system -o jsonpath='{.secrets[0].name}')
    USER_TOKEN=$(kubectl get secret $SEC_NAME -n kube-system -o json | jq -r '.data["token"]' | base64 -d)
    # Kubernetes >= 1.24: no token secret is created automatically, request a token instead
    # USER_TOKEN=$(kubectl create token simple-user -n kube-system)
    cluster_name=$(kubectl config get-contexts $(kubectl config current-context) | awk '{print $3}' | tail -n 1)
    kubectl config set-credentials simple-user --token="${USER_TOKEN}"
    kubectl config set-context simple-reader --cluster=$cluster_name --user simple-user
    kubectl config use-context simple-reader

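To double-check which identity a token such as USER_TOKEN represents before wiring it into kubeconfig, you can decode the middle, base64url-encoded segment of the JWT; for a ServiceAccount token the sub claim should read system:serviceaccount:kube-system:simple-user. The jwt_payload function below is a hypothetical helper that assumes GNU coreutils base64; it only decodes the payload and does not verify the signature.

```shell
#!/usr/bin/env bash
# Sketch: print the (unverified) JSON payload of a JWT such as a ServiceAccount token.
# jwt_payload is a hypothetical helper; it decodes only and does NOT check the signature.

jwt_payload() {
  local p
  p=$(cut -d. -f2 <<< "$1")      # header.payload.signature -> payload
  p=$(tr '_-' '/+' <<< "$p")     # base64url alphabet -> standard base64 alphabet
  while (( ${#p} % 4 )); do      # restore the '=' padding that JWTs strip off
    p+='='
  done
  base64 -d <<< "$p"
}

# Example (needs a cluster; kubectl create token exists in v1.24+):
#   jwt_payload "$(kubectl create token simple-user -n kube-system)"
```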

- How to access the Dashboard?

- Use kubectl proxy to access the Dashboard at http://localhost:8001/api/v1/namespaces/kubernetes-dashboard/services/https:kubernetes-dashboard:/proxy/.

- Ref: https://kubernetes.io/docs/tasks/access-application-cluster/web-ui-dashboard/#accessing-the-dashboard-ui

- Ref: https://github.com/kubernetes/dashboard/blob/master/docs/user/access-control/creating-sample-user.md

5. Verify platform binaries before deploying

- CKS Preparation Guide on GitHub: https://github.com/ramanagali/Interview_Guide/blob/main/CKS_Preparation_Guide.md

- Verify Platform

Example 1

    curl -LO https://dl.k8s.io/v1.23.1/kubernetes-client-darwin-arm64.tar.gz
    # Print SHA512 checksums - mac
    shasum -a 512 kubernetes-client-darwin-arm64.tar.gz
    # Print SHA512 checksums - linux
    sha512sum kubernetes-client-darwin-arm64.tar.gz
    sha512sum kube-apiserver
    sha512sum kube-proxy
    # Compare each output with the SHA512 hash published for that file in the release CHANGELOG


Example 2

    kubectl version --short --client   # newer kubectl releases dropped --short; use "kubectl version --client" there
    # download checksum for kubectl for linux - (change version)
    curl -LO "https://dl.k8s.io/$(curl -L -s https://dl.k8s.io/release/stable.txt)/bin/linux/amd64/kubectl.sha256"
    # download the checksum of an older version
    curl -LO "https://dl.k8s.io/v1.22.1/bin/linux/amd64/kubectl.sha256"
    # download checksum for kubectl for mac - (change version)
    curl -LO "https://dl.k8s.io/release/$(curl -L -s https://dl.k8s.io/release/stable.txt)/bin/darwin/amd64/kubectl.sha256"
    # install coreutils (for mac)
    brew install coreutils
    # verify kubectl binary (for linux); the format is "<hash>  <file>" with two spaces
    echo "$(<kubectl.sha256)  /usr/bin/kubectl" | sha256sum --check
    # verify kubectl binary (for mac)
    echo "$(<kubectl.sha256)  /usr/local/bin/kubectl" | sha256sum -c

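The verification works because sha256sum --check reads lines of the form "<hash>  <file>" and exits non-zero when the file's digest differs. The self-contained sketch below reproduces that on a throwaway file standing in for the kubectl binary (the file names are placeholders), so both the OK and the failed case can be seen without downloading anything. It assumes GNU coreutils.

```shell
#!/usr/bin/env bash
# Sketch of the checksum workflow on a stand-in file instead of a real download.
dir=$(mktemp -d)
cd "$dir"

printf 'pretend this is the kubectl binary\n' > kubectl
# Publisher side: the .sha256 file contains only the hex digest
sha256sum kubectl | cut -d' ' -f1 > kubectl.sha256

# Consumer side: "<digest>  <file>" (two spaces) piped into sha256sum --check
echo "$(<kubectl.sha256)  kubectl" | sha256sum --check          # prints: kubectl: OK

# Tamper with the "binary": verification now fails with a non-zero exit code
printf 'malicious payload\n' >> kubectl
if echo "$(<kubectl.sha256)  kubectl" | sha256sum --check --status; then
  echo "tampered file accepted"
else
  echo "tampered file rejected"
fi
```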

- Ref: https://github.com/kubernetes/kubernetes/releases

- Ref: https://github.com/kubernetes/kubernetes/tree/master/CHANGELOG#changelogs

- Ref: https://kubernetes.io/docs/tasks/tools/

6. Restrict access to Kubernetes API

    $ k get pod -A
    $ k proxy
    Starting to serve on 127.0.0.1:8001


    $ curl 127.0.0.1:8001
    { "paths": [ "/.well-known/openid-configuration", "/api", "/api/v1", "/apis", "/apis/", "/apis/admissionregistration.k8s.io", "/apis/admissionregistration.k8s.io/v1", "/apis/admissionregistration.k8s.io/v1beta1", "/apis/apiextensions.k8s.io", "/apis/apiextensions.k8s.io/v1", "/apis/apiextensions.k8s.io/v1beta1", "/apis/apiregistration.k8s.io", "/apis/apiregistration.k8s.io/v1", "/apis/apiregistration.k8s.io/v1beta1", "/apis/apps", "/apis/apps/v1", "/apis/authentication.k8s.io", "/apis/authentication.k8s.io/v1", "/apis/authentication.k8s.io/v1beta1", "/apis/authorization.k8s.io", "/apis/authorization.k8s.io/v1", "/apis/authorization.k8s.io/v1beta1", "/apis/autoscaling", "/apis/autoscaling/v1", "/apis/autoscaling/v2beta1", "/apis/autoscaling/v2beta2", "/apis/batch", "/apis/batch/v1", "/apis/batch/v1beta1", "/apis/certificates.k8s.io", "/apis/certificates.k8s.io/v1", "/apis/certificates.k8s.io/v1beta1", "/apis/config.gatekeeper.sh", "/apis/coordination.k8s.io", "/apis/coordination.k8s.io/v1", "/apis/coordination.k8s.io/v1beta1", "/apis/crd.projectcalico.org", "/apis/crd.projectcalico.org/v1", "/apis/discovery.k8s.io", "/apis/discovery.k8s.io/v1beta1", "/apis/events.k8s.io", "/apis/events.k8s.io/v1", "/apis/events.k8s.io/v1beta1", "/apis/extensions", "/apis/extensions/v1beta1", "/apis/flowcontrol.apiserver.k8s.io", "/apis/flowcontrol.apiserver.k8s.io/v1beta1", "/apis/networking.k8s.io", "/apis/networking.k8s.io/v1", "/apis/networking.k8s.io/v1beta1", "/apis/node.k8s.io", "/apis/node.k8s.io/v1", "/apis/node.k8s.io/v1beta1", "/apis/policy", "/apis/policy/v1beta1", "/apis/rbac.authorization.k8s.io", "/apis/rbac.authorization.k8s.io/v1", "/apis/rbac.authorization.k8s.io/v1beta1", "/apis/scheduling.k8s.io", "/apis/scheduling.k8s.io/v1", "/apis/scheduling.k8s.io/v1beta1", "/apis/status.gatekeeper.sh", "/apis/storage.k8s.io", "/apis/storage.k8s.io/v1", "/apis/storage.k8s.io/v1beta1", "/apis/templates.gatekeeper.sh", "/healthz",
    "/healthz/autoregister-completion", "/healthz/etcd", "/healthz/log", "/healthz/ping", "/healthz/poststarthook/aggregator-reload-proxy-client-cert", "/healthz/poststarthook/apiservice-openapi-controller", "/healthz/poststarthook/apiservice-registration-controller", "/healthz/poststarthook/apiservice-status-available-controller", "/healthz/poststarthook/bootstrap-controller", "/healthz/poststarthook/crd-informer-synced", "/healthz/poststarthook/generic-apiserver-start-informers", "/healthz/poststarthook/kube-apiserver-autoregistration", "/healthz/poststarthook/priority-and-fairness-config-consumer", "/healthz/poststarthook/priority-and-fairness-config-producer", "/healthz/poststarthook/priority-and-fairness-filter", "/healthz/poststarthook/rbac/bootstrap-roles", "/healthz/poststarthook/scheduling/bootstrap-system-priority-classes", "/healthz/poststarthook/start-apiextensions-controllers", "/healthz/poststarthook/start-apiextensions-informers", "/healthz/poststarthook/start-cluster-authentication-info-controller", "/healthz/poststarthook/start-kube-aggregator-informers", "/healthz/poststarthook/start-kube-apiserver-admission-initializer", "/livez", "/livez/autoregister-completion", "/livez/etcd", "/livez/log", "/livez/ping", "/livez/poststarthook/aggregator-reload-proxy-client-cert", "/livez/poststarthook/apiservice-openapi-controller", "/livez/poststarthook/apiservice-registration-controller", "/livez/poststarthook/apiservice-status-available-controller", "/livez/poststarthook/bootstrap-controller", "/livez/poststarthook/crd-informer-synced", "/livez/poststarthook/generic-apiserver-start-informers", "/livez/poststarthook/kube-apiserver-autoregistration", "/livez/poststarthook/priority-and-fairness-config-consumer", "/livez/poststarthook/priority-and-fairness-config-producer", "/livez/poststarthook/priority-and-fairness-filter", "/livez/poststarthook/rbac/bootstrap-roles", "/livez/poststarthook/scheduling/bootstrap-system-priority-classes",
    "/livez/poststarthook/start-apiextensions-controllers", "/livez/poststarthook/start-apiextensions-informers", "/livez/poststarthook/start-cluster-authentication-info-controller", "/livez/poststarthook/start-kube-aggregator-informers", "/livez/poststarthook/start-kube-apiserver-admission-initializer", "/logs", "/metrics", "/openapi/v2", "/openid/v1/jwks", "/readyz", "/readyz/autoregister-completion", "/readyz/etcd", "/readyz/informer-sync", "/readyz/log", "/readyz/ping", "/readyz/poststarthook/aggregator-reload-proxy-client-cert", "/readyz/poststarthook/apiservice-openapi-controller", "/readyz/poststarthook/apiservice-registration-controller", "/readyz/poststarthook/apiservice-status-available-controller", "/readyz/poststarthook/bootstrap-controller", "/readyz/poststarthook/crd-informer-synced", "/readyz/poststarthook/generic-apiserver-start-informers", "/readyz/poststarthook/kube-apiserver-autoregistration", "/readyz/poststarthook/priority-and-fairness-config-consumer", "/readyz/poststarthook/priority-and-fairness-config-producer", "/readyz/poststarthook/priority-and-fairness-filter", "/readyz/poststarthook/rbac/bootstrap-roles", "/readyz/poststarthook/scheduling/bootstrap-system-priority-classes", "/readyz/poststarthook/start-apiextensions-controllers", "/readyz/poststarthook/start-apiextensions-informers", "/readyz/poststarthook/start-cluster-authentication-info-controller", "/readyz/poststarthook/start-kube-aggregator-informers", "/readyz/poststarthook/start-kube-apiserver-admission-initializer", "/readyz/shutdown", "/version" ] }


    $ curl 127.0.0.1:8001/api/v1
    { "kind": "APIResourceList", "groupVersion": "v1", "resources": [ { "name": "bindings", "singularName": "", "namespaced": true, "kind": "Binding", "verbs": [ "create" ] }, { "name": "componentstatuses", "singularName": "", "namespaced": false, "kind": "ComponentStatus", "verbs": [ "get", "list" ], "shortNames": [ "cs" ] }, { "name": "configmaps", "singularName": "", "namespaced": true, "kind": "ConfigMap", "verbs": [ "create", "delete", "deletecollection", "get", "list", "patch", "update", "watch" ], "shortNames": [ "cm" ], "storageVersionHash": "qFsyl6wFWjQ=" }, { "name": "endpoints", "singularName": "", "namespaced": true, "kind": "Endpoints", "verbs": [ "create", "delete", "deletecollection", "get", "list", "patch", "update", "watch" ], "shortNames": [ "ep" ], "storageVersionHash": "fWeeMqaN/OA=" }, { "name": "events", "singularName": "", "namespaced": true, "kind": "Event", "verbs": [ "create", "delete", "deletecollection", "get", "list", "patch", "update", "watch" ], "shortNames": [ "ev" ], "storageVersionHash": "r2yiGXH7wu8=" }, { "name": "limitranges", "singularName": "", "namespaced": true, "kind": "LimitRange", "verbs": [ "create", "delete", "deletecollection", "get", "list", "patch", "update", "watch" ], "shortNames": [ "limits" ], "storageVersionHash": "EBKMFVe6cwo=" }, { "name": "namespaces", "singularName": "", "namespaced": false, "kind": "Namespace", "verbs": [ "create", "delete", "get", "list", "patch", "update", "watch" ], "shortNames": [ "ns" ], "storageVersionHash": "Q3oi5N2YM8M=" }, { "name": "namespaces/finalize", "singularName": "", "namespaced": false, "kind": "Namespace", "verbs": [ "update" ] }, { "name": "namespaces/status", "singularName": "", "namespaced": false, "kind": "Namespace", "verbs": [ "get", "patch", "update" ] }, { "name": "nodes", "singularName": "", "namespaced": false, "kind": "Node", "verbs": [ "create", "delete", "deletecollection", "get", "list", "patch", "update", "watch" ], "shortNames": [
    "no" ], "storageVersionHash": "XwShjMxG9Fs=" }, { "name": "nodes/proxy", "singularName": "", "namespaced": false, "kind": "NodeProxyOptions", "verbs": [ "create", "delete", "get", "patch", "update" ] }, { "name": "nodes/status", "singularName": "", "namespaced": false, "kind": "Node", "verbs": [ "get", "patch", "update" ] }, { "name": "persistentvolumeclaims", "singularName": "", "namespaced": true, "kind": "PersistentVolumeClaim", "verbs": [ "create", "delete", "deletecollection", "get", "list", "patch", "update", "watch" ], "shortNames": [ "pvc" ], "storageVersionHash": "QWTyNDq0dC4=" }, { "name": "persistentvolumeclaims/status", "singularName": "", "namespaced": true, "kind": "PersistentVolumeClaim", "verbs": [ "get", "patch", "update" ] }, { "name": "persistentvolumes", "singularName": "", "namespaced": false, "kind": "PersistentVolume", "verbs": [ "create", "delete", "deletecollection", "get", "list", "patch", "update", "watch" ], "shortNames": [ "pv" ], "storageVersionHash": "HN/zwEC+JgM=" }, { "name": "persistentvolumes/status", "singularName": "", "namespaced": false, "kind": "PersistentVolume", "verbs": [ "get", "patch", "update" ] }, { "name": "pods", "singularName": "", "namespaced": true, "kind": "Pod", "verbs": [ "create", "delete", "deletecollection", "get", "list", "patch", "update", "watch" ], "shortNames": [ "po" ], "categories": [ "all" ], "storageVersionHash": "xPOwRZ+Yhw8=" }, { "name": "pods/attach", "singularName": "", "namespaced": true, "kind": "PodAttachOptions", "verbs": [ "create", "get" ] }, { "name": "pods/binding", "singularName": "", "namespaced": true, "kind": "Binding", "verbs": [ "create" ] }, { "name": "pods/eviction", "singularName": "", "namespaced": true, "group": "policy", "version": "v1beta1", "kind": "Eviction", "verbs": [ "create" ] }, { "name": "pods/exec", "singularName": "", "namespaced": true, "kind": "PodExecOptions", "verbs": [ "create", "get" ] }, { "name": "pods/log", "singularName": "", "namespaced": true, "kind":
    "Pod", "verbs": [ "get" ] }, { "name": "pods/portforward", "singularName": "", "namespaced": true, "kind": "PodPortForwardOptions", "verbs": [ "create", "get" ] }, { "name": "pods/proxy", "singularName": "", "namespaced": true, "kind": "PodProxyOptions", "verbs": [ "create", "delete", "get", "patch", "update" ] }, { "name": "pods/status", "singularName": "", "namespaced": true, "kind": "Pod", "verbs": [ "get", "patch", "update" ] }, { "name": "podtemplates", "singularName": "", "namespaced": true, "kind": "PodTemplate", "verbs": [ "create", "delete", "deletecollection", "get", "list", "patch", "update", "watch" ], "storageVersionHash": "LIXB2x4IFpk=" }, { "name": "replicationcontrollers", "singularName": "", "namespaced": true, "kind": "ReplicationController", "verbs": [ "create", "delete", "deletecollection", "get", "list", "patch", "update", "watch" ], "shortNames": [ "rc" ], "categories": [ "all" ], "storageVersionHash": "Jond2If31h0=" }, { "name": "replicationcontrollers/scale", "singularName": "", "namespaced": true, "group": "autoscaling", "version": "v1", "kind": "Scale", "verbs": [ "get", "patch", "update" ] }, { "name": "replicationcontrollers/status", "singularName": "", "namespaced": true, "kind": "ReplicationController", "verbs": [ "get", "patch", "update" ] }, { "name": "resourcequotas", "singularName": "", "namespaced": true, "kind": "ResourceQuota", "verbs": [ "create", "delete", "deletecollection", "get", "list", "patch", "update", "watch" ], "shortNames": [ "quota" ], "storageVersionHash": "8uhSgffRX6w=" }, { "name": "resourcequotas/status", "singularName": "", "namespaced": true, "kind": "ResourceQuota", "verbs": [ "get", "patch", "update" ] }, { "name": "secrets", "singularName": "", "namespaced": true, "kind": "Secret", "verbs": [ "create", "delete", "deletecollection", "get", "list", "patch", "update", "watch" ], "storageVersionHash": "S6u1pOWzb84=" }, { "name": "serviceaccounts", "singularName": "", "namespaced": true, "kind":
    "ServiceAccount", "verbs": [ "create", "delete", "deletecollection", "get", "list", "patch", "update", "watch" ], "shortNames": [ "sa" ], "storageVersionHash": "pbx9ZvyFpBE=" }, { "name": "serviceaccounts/token", "singularName": "", "namespaced": true, "group": "authentication.k8s.io", "version": "v1", "kind": "TokenRequest", "verbs": [ "create" ] }, { "name": "services", "singularName": "", "namespaced": true, "kind": "Service", "verbs": [ "create", "delete", "get", "list", "patch", "update", "watch" ], "shortNames": [ "svc" ], "categories": [ "all" ], "storageVersionHash": "0/CO1lhkEBI=" }, { "name": "services/proxy", "singularName": "", "namespaced": true, "kind": "ServiceProxyOptions", "verbs": [ "create", "delete", "get", "patch", "update" ] }, { "name": "services/status", "singularName": "", "namespaced": true, "kind": "Service", "verbs": [ "get", "patch", "update" ] } ] }


    k create ns test
    k run web --image nginx -n test
    k get po -n test


    $ curl 127.0.0.1:8001/api/v1/namespaces/test/pods
    { "kind": "PodList", "apiVersion": "v1", "metadata": { "resourceVersion": "222842" }, "items": [ { "metadata": { "name": "web", "namespace": "test", "uid": "9f0e679b-6f92-48fe-a398-fcda6b36347f", "resourceVersion": "222793", "creationTimestamp": "2022-08-30T06:09:15Z", "labels": { "run": "web" }, "annotations": { "cni.projectcalico.org/podIP": "192.168.166.145/32", "cni.projectcalico.org/podIPs": "192.168.166.145/32" }, "managedFields": [ { "manager": "kubectl-run", "operation": "Update", "apiVersion": "v1", "time": "2022-08-30T06:09:15Z", "fieldsType": "FieldsV1", "fieldsV1": {"f:metadata":{"f:labels":{".":{},"f:run":{}}},"f:spec":{"f:containers":{"k:{\"name\":\"web\"}":{".":{},"f:image":{},"f:imagePullPolicy":{},"f:name":{},"f:resources":{},"f:terminationMessagePath":{},"f:terminationMessagePolicy":{}}},"f:dnsPolicy":{},"f:enableServiceLinks":{},"f:restartPolicy":{},"f:schedulerName":{},"f:securityContext":{},"f:terminationGracePeriodSeconds":{}}} }, { "manager": "calico", "operation": "Update", "apiVersion": "v1", "time": "2022-08-30T06:09:18Z", "fieldsType": "FieldsV1", "fieldsV1": {"f:metadata":{"f:annotations":{".":{},"f:cni.projectcalico.org/podIP":{},"f:cni.projectcalico.org/podIPs":{}}}} }, { "manager": "kubelet", "operation": "Update", "apiVersion": "v1", "time": "2022-08-30T06:09:48Z", "fieldsType": "FieldsV1", "fieldsV1": {"f:status":{"f:conditions":{"k:{\"type\":\"ContainersReady\"}":{".":{},"f:lastProbeTime":{},"f:lastTransitionTime":{},"f:status":{},"f:type":{}},"k:{\"type\":\"Initialized\"}":{".":{},"f:lastProbeTime":{},"f:lastTransitionTime":{},"f:status":{},"f:type":{}},"k:{\"type\":\"Ready\"}":{".":{},"f:lastProbeTime":{},"f:lastTransitionTime":{},"f:status":{},"f:type":{}}},"f:containerStatuses":{},"f:hostIP":{},"f:phase":{},"f:podIP":{},"f:podIPs":{".":{},"k:{\"ip\":\"192.168.166.145\"}":{".":{},"f:ip":{}}},"f:startTime":{}}} } ] }, "spec": { "volumes": [ { "name": "default-token-th4sb",
    "secret": { "secretName": "default-token-th4sb", "defaultMode": 420 } } ], "containers": [ { "name": "web", "image": "nginx", "resources": { }, "volumeMounts": [ { "name": "default-token-th4sb", "readOnly": true, "mountPath": "/var/run/secrets/kubernetes.io/serviceaccount" } ], "terminationMessagePath": "/dev/termination-log", "terminationMessagePolicy": "File", "imagePullPolicy": "Always" } ], "restartPolicy": "Always", "terminationGracePeriodSeconds": 30, "dnsPolicy": "ClusterFirst", "serviceAccountName": "default", "serviceAccount": "default", "nodeName": "node1", "securityContext": { }, "schedulerName": "default-scheduler", "tolerations": [ { "key": "node.kubernetes.io/not-ready", "operator": "Exists", "effect": "NoExecute", "tolerationSeconds": 300 }, { "key": "node.kubernetes.io/unreachable", "operator": "Exists", "effect": "NoExecute", "tolerationSeconds": 300 } ], "priority": 0, "enableServiceLinks": true, "preemptionPolicy": "PreemptLowerPriority" }, "status": { "phase": "Running", "conditions": [ { "type": "Initialized", "status": "True", "lastProbeTime": null, "lastTransitionTime": "2022-08-30T06:09:15Z" }, { "type": "Ready", "status": "True", "lastProbeTime": null, "lastTransitionTime": "2022-08-30T06:09:48Z" }, { "type": "ContainersReady", "status": "True", "lastProbeTime": null, "lastTransitionTime": "2022-08-30T06:09:48Z" }, { "type": "PodScheduled", "status": "True", "lastProbeTime": null, "lastTransitionTime": "2022-08-30T06:09:15Z" } ], "hostIP": "192.168.211.41", "podIP": "192.168.166.145", "podIPs": [ { "ip": "192.168.166.145" } ], "startTime": "2022-08-30T06:09:15Z", "containerStatuses": [ { "name": "web", "state": { "running": { "startedAt": "2022-08-30T06:09:47Z" } }, "lastState": { }, "ready": true, "restartCount": 0, "image": "nginx:latest", "imageID": "docker-pullable://nginx@sha256:b95a99feebf7797479e0c5eb5ec0bdfa5d9f504bc94da550c2f58e839ea6914f", "containerID": "docker://5d62f6cac401ab23a48b3824c4e06a92189e8e4005d1e6b78bfe26395259efeb",
    "started": true } ], "qosClass": "BestEffort" } } ] }


    k config view
    apiVersion: v1
    clusters:
    - cluster:
        certificate-authority-data: DATA+OMITTED
        server: https://192.168.211.40:6443
      name: kubernetes
    contexts:
    - context:
        cluster: kubernetes
        user: kubernetes-admin
      name: kubernetes-admin@kubernetes
    current-context: kubernetes-admin@kubernetes
    kind: Config
    preferences: {}
    users:
    - name: kubernetes-admin
      user:
        client-certificate-data: REDACTED
        client-key-data: REDACTED


    curl -k https://192.168.211.40:6443
    {
      "kind": "Status",
      "apiVersion": "v1",
      "metadata": {},
      "status": "Failure",
      "message": "forbidden: User \"system:anonymous\" cannot get path \"/\"",
      "reason": "Forbidden",
      "details": {},
      "code": 403
    }

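The 403 above shows that the request without credentials was authenticated as system:anonymous and then rejected by authorization. Whether anonymous requests are accepted at all, and which authorizers run, is controlled by kube-apiserver flags in its static Pod manifest (/etc/kubernetes/manifests/kube-apiserver.yaml on kubeadm clusters). As a rough sketch, the hypothetical check_apiserver_flags function below greps a manifest for three commonly recommended settings: --anonymous-auth=false, an --authorization-mode that is set and does not include AlwaysAllow (the default when the flag is absent), and NodeRestriction in --enable-admission-plugins. It is a grep-based illustration, not a YAML parser.

```shell
#!/usr/bin/env bash
# Sketch: report a few access-related kube-apiserver flags from a static Pod manifest.
# check_apiserver_flags is a hypothetical helper; it only greps, it does not parse YAML.

check_apiserver_flags() {
  local manifest=${1:-/etc/kubernetes/manifests/kube-apiserver.yaml}
  local authz

  if grep -q -- '--anonymous-auth=false' "$manifest"; then
    echo "OK   anonymous requests disabled"
  else
    echo "WARN --anonymous-auth=false not set (requests without credentials become system:anonymous)"
  fi

  authz=$(grep -o -- '--authorization-mode=[^" ]*' "$manifest" | cut -d= -f2)
  if [[ -z $authz || ",$authz," == *,AlwaysAllow,* ]]; then
    echo "WARN authorization-mode is ${authz:-unset (defaults to AlwaysAllow)}"
  else
    echo "OK   authorization-mode=$authz"
  fi

  if grep -o -- '--enable-admission-plugins=[^" ]*' "$manifest" | grep -q NodeRestriction; then
    echo "OK   NodeRestriction admission plugin enabled"
  else
    echo "WARN NodeRestriction admission plugin not enabled"
  fi
}
```

On a control-plane node, calling check_apiserver_flags with no argument reads the kubeadm default path; after editing that manifest, the kubelet recreates the kube-apiserver Pod by itself.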

```
$ k cluster-info
Kubernetes control plane is running at https://192.168.211.40:6443
KubeDNS is running at https://192.168.211.40:6443/api/v1/namespaces/kube-system/services/kube-dns:dns/proxy

$ cat /root/.kube/config
apiVersion: v1
clusters:
- cluster:
    certificate-authority-data: <base64-encoded CA certificate, omitted>
    server: https://192.168.211.40:6443
  name: kubernetes
contexts:
- context:
    cluster: kubernetes
    user: kubernetes-admin
  name: kubernetes-admin@kubernetes
current-context: kubernetes-admin@kubernetes
kind: Config
preferences: {}
users:
- name: kubernetes-admin
  user:
    client-certificate-data: <base64-encoded client certificate, omitted>
    client-key-data: <base64-encoded client private key, omitted>
```
```
$ kubectl config current-context
kubernetes-admin@kubernetes
$ k auth can-i --list
Resources                                       Non-Resource URLs   Resource Names   Verbs
*.*                                             []                  []               [*]
                                                [*]                 []               [*]
selfsubjectaccessreviews.authorization.k8s.io   []                  []               [create]
selfsubjectrulesreviews.authorization.k8s.io    []                  []               [create]
                                                [/api/*]            []               [get]
                                                [/api]              []               [get]
                                                [/apis/*]           []               [get]
                                                [/apis]             []               [get]
                                                [/healthz]          []               [get]
                                                [/healthz]          []               [get]
                                                [/livez]            []               [get]
                                                [/livez]            []               [get]
                                                [/openapi/*]        []               [get]
                                                [/openapi]          []               [get]
                                                [/readyz]           []               [get]
                                                [/readyz]           []               [get]
                                                [/version/]         []               [get]
                                                [/version/]         []               [get]
                                                [/version]          []               [get]
                                                [/version]          []               [get]
```

-

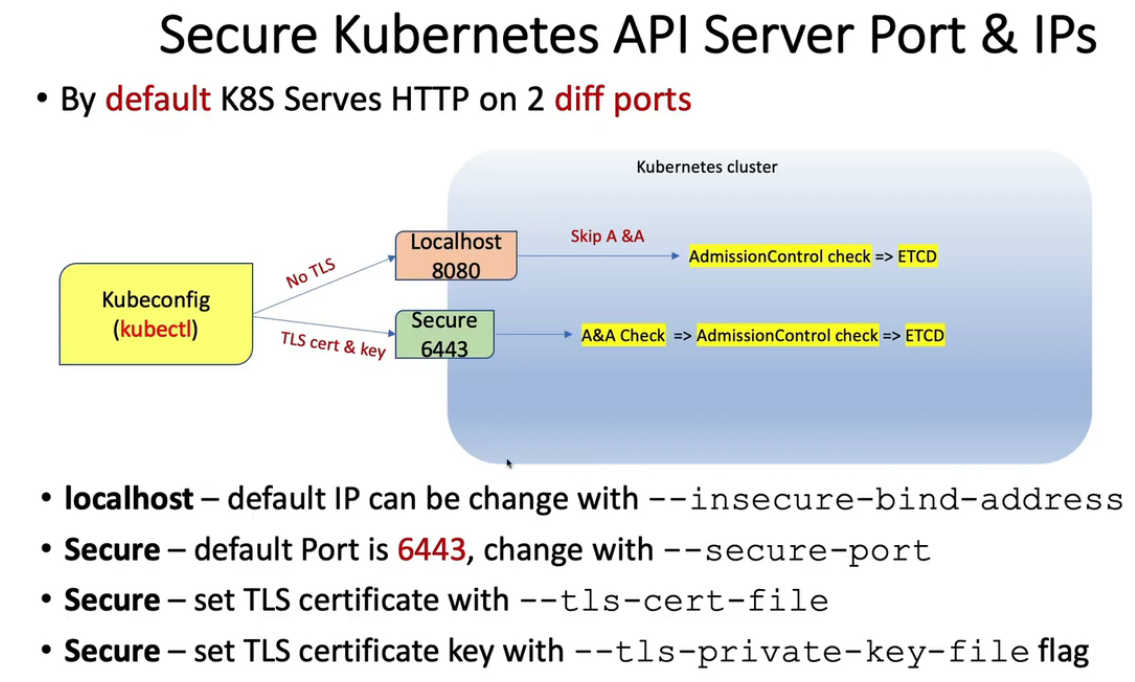

localhost

- port 8080

- no TLS

- default IP is localhost, change with

--insecure-bind-address

-

secure port

- default is 6443, change with

--secure-port - set TLS certificate with

--tls-cert-file - set TLS certificate key with

--tls-private-key-fileflag

- default is 6443, change with

Control anonymous requests to Kube-apiserver by using

--anonymous-auth=falseexample for adding anonymous access

```
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRole
metadata:
  name: anonymous-review-access
rules:
- apiGroups:
  - authorization.k8s.io
  resources:
  - selfsubjectaccessreviews
  - selfsubjectrulesreviews
  verbs:
  - create
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: anonymous-review-access
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: anonymous-review-access
subjects:
- kind: User
  name: system:anonymous
  namespace: default
```

Verify:

```
# check as the anonymous user
kubectl auth can-i --list --as=system:anonymous -n default
# check with your own account
kubectl auth can-i --list
```
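Whether anonymous requests are accepted at all is controlled by the --anonymous-auth flag on kube-apiserver. The following is a minimal offline check. It assumes a kubeadm-style static pod manifest at /etc/kubernetes/manifests/kube-apiserver.yaml; that default path is an assumption, and the helper name anonymous_auth_disabled is hypothetical.

```shell
#!/usr/bin/env bash
# Hypothetical helper: exit 0 only if the given kube-apiserver manifest
# passes --anonymous-auth=false (default path assumes a kubeadm cluster).
anonymous_auth_disabled() {
  local manifest="${1:-/etc/kubernetes/manifests/kube-apiserver.yaml}"
  # "--" ends grep's options so the pattern may start with dashes;
  # the flag must be followed by whitespace, a double quote or end of line.
  grep -Eq -- '--anonymous-auth=false([[:space:]"]|$)' "$manifest"
}

# Usage on the control plane node:
#   anonymous_auth_disabled && echo "anonymous auth disabled"
```

If the flag is absent, kube-apiserver falls back to its default (anonymous auth enabled), so the check reports failure in that case as well.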

- Ref: https://kubernetes.io/docs/concepts/security/controlling-access/#api-server-ports-and-ips

- Ref: https://kubernetes.io/docs/reference/access-authn-authz/authentication/#anonymous-requests

- Ref: https://kubernetes.io/docs/tasks/access-application-cluster/access-cluster/#without-kubectl-proxy

- Ref: https://kubernetes.io/docs/reference/command-line-tools-reference/kubelet-authentication-authorization/#kubelet-authentication

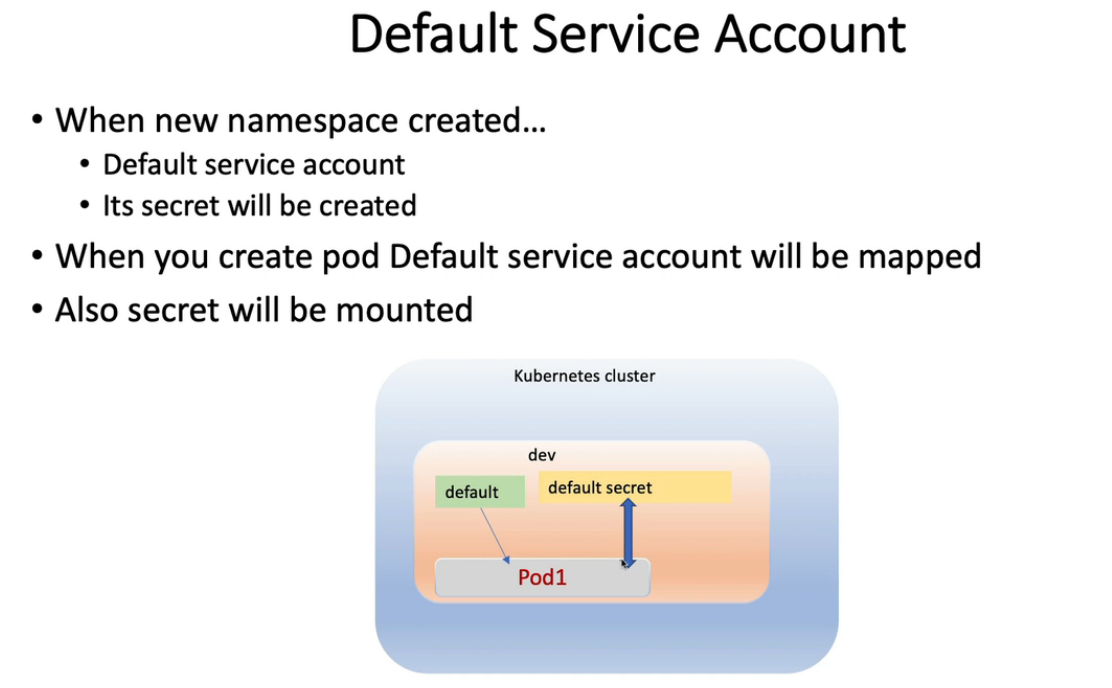

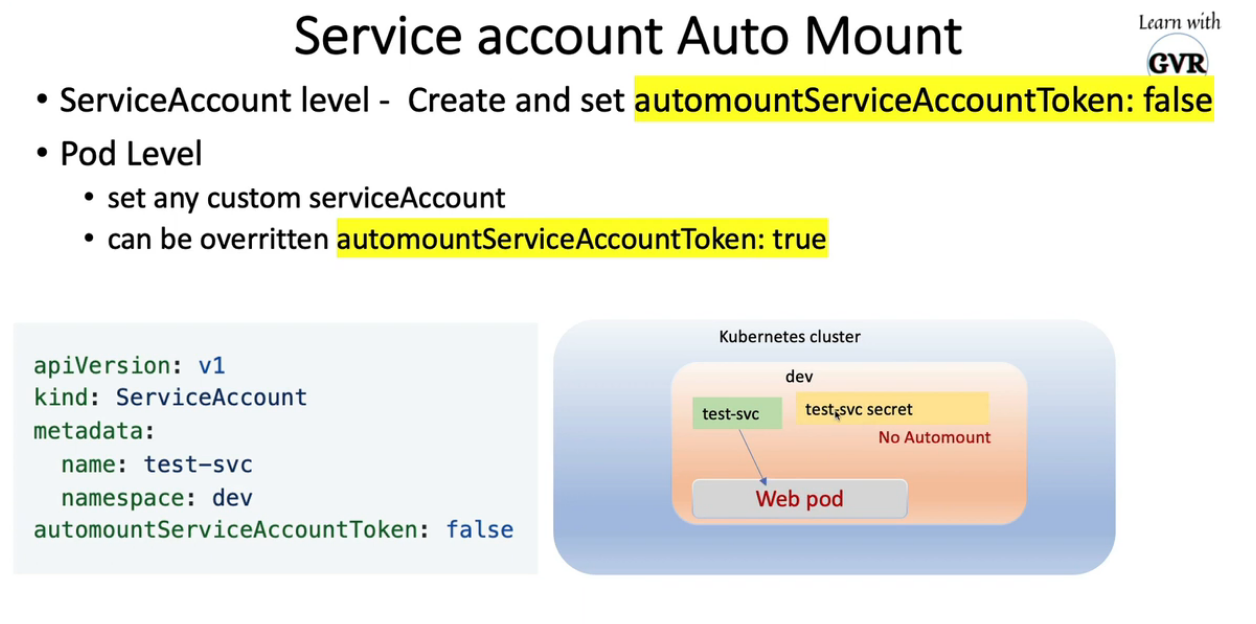

7. Disable Automount Service Account Token

```
$ k create ns dev
$ k get sa -n dev
NAME      SECRETS   AGE
default   1         66s
$ k get secrets -n dev
NAME                  TYPE                                  DATA   AGE
default-token-7xv8n   kubernetes.io/service-account-token   3      74s
$ k run web --image nginx -n dev
$ k get po -n dev
NAME   READY   STATUS    RESTARTS   AGE
web    1/1     Running   0          58s
```

```
$ k get po -n dev web -oyaml
apiVersion: v1
kind: Pod
metadata:
  annotations:
    cni.projectcalico.org/podIP: 192.168.166.146/32
    cni.projectcalico.org/podIPs: 192.168.166.146/32
  creationTimestamp: "2022-08-30T06:59:27Z"
  labels:
    run: web
  managedFields:
  - apiVersion: v1
    fieldsType: FieldsV1
    fieldsV1:
      f:metadata:
        f:labels:
          .: {}
          f:run: {}
      f:spec:
        f:containers:
          k:{"name":"web"}:
            .: {}
            f:image: {}
            f:imagePullPolicy: {}
            f:name: {}
            f:resources: {}
            f:terminationMessagePath: {}
            f:terminationMessagePolicy: {}
        f:dnsPolicy: {}
        f:enableServiceLinks: {}
        f:restartPolicy: {}
        f:schedulerName: {}
        f:securityContext: {}
        f:terminationGracePeriodSeconds: {}
    manager: kubectl-run
    operation: Update
    time: "2022-08-30T06:59:27Z"
  - apiVersion: v1
    fieldsType: FieldsV1
    fieldsV1:
      f:metadata:
        f:annotations:
          .: {}
          f:cni.projectcalico.org/podIP: {}
          f:cni.projectcalico.org/podIPs: {}
    manager: calico
    operation: Update
    time: "2022-08-30T06:59:29Z"
  - apiVersion: v1
    fieldsType: FieldsV1
    fieldsV1:
      f:status:
        f:conditions:
          k:{"type":"ContainersReady"}:
            .: {}
            f:lastProbeTime: {}
            f:lastTransitionTime: {}
            f:status: {}
            f:type: {}
          k:{"type":"Initialized"}:
            .: {}
            f:lastProbeTime: {}
            f:lastTransitionTime: {}
            f:status: {}
            f:type: {}
          k:{"type":"Ready"}:
            .: {}
            f:lastProbeTime: {}
            f:lastTransitionTime: {}
            f:status: {}
            f:type: {}
        f:containerStatuses: {}
        f:hostIP: {}
        f:phase: {}
        f:podIP: {}
        f:podIPs:
          .: {}
          k:{"ip":"192.168.166.146"}:
            .: {}
            f:ip: {}
        f:startTime: {}
    manager: kubelet
    operation: Update
    time: "2022-08-30T06:59:38Z"
  name: web
  namespace: dev
  resourceVersion: "227448"
  uid: 4098d08f-ae18-4612-bf82-fe854537ca14
spec:
  containers:
  - image: nginx
    imagePullPolicy: Always
    name: web
    resources: {}
    terminationMessagePath: /dev/termination-log
    terminationMessagePolicy: File
    volumeMounts:
    - mountPath: /var/run/secrets/kubernetes.io/serviceaccount
      name: default-token-7xv8n
      readOnly: true
  dnsPolicy: ClusterFirst
  enableServiceLinks: true
  nodeName: node1
  preemptionPolicy: PreemptLowerPriority
  priority: 0
  restartPolicy: Always
  schedulerName: default-scheduler
  securityContext: {}
  serviceAccount: default
  serviceAccountName: default
  terminationGracePeriodSeconds: 30
  tolerations:
  - effect: NoExecute
    key: node.kubernetes.io/not-ready
    operator: Exists
    tolerationSeconds: 300
  - effect: NoExecute
    key: node.kubernetes.io/unreachable
    operator: Exists
    tolerationSeconds: 300
  volumes:
  - name: default-token-7xv8n
    secret:
      defaultMode: 420
      secretName: default-token-7xv8n
status:
  conditions:
  - lastProbeTime: null
    lastTransitionTime: "2022-08-30T06:59:27Z"
    status: "True"
    type: Initialized
  - lastProbeTime: null
    lastTransitionTime: "2022-08-30T06:59:38Z"
    status: "True"
    type: Ready
  - lastProbeTime: null
    lastTransitionTime: "2022-08-30T06:59:38Z"
    status: "True"
    type: ContainersReady
  - lastProbeTime: null
    lastTransitionTime: "2022-08-30T06:59:27Z"
    status: "True"
    type: PodScheduled
  containerStatuses:
  - containerID: docker://ef8ec3aea71713fe9a4b904387ed938db8c0d90fedb7be586db41a35ce829201
    image: nginx:latest
    imageID: docker-pullable://nginx@sha256:b95a99feebf7797479e0c5eb5ec0bdfa5d9f504bc94da550c2f58e839ea6914f
    lastState: {}
    name: web
    ready: true
    restartCount: 0
    started: true
    state:
      running:
        startedAt: "2022-08-30T06:59:38Z"
  hostIP: 192.168.211.41
  phase: Running
  podIP: 192.168.166.146
  podIPs:
  - ip: 192.168.166.146
  qosClass: BestEffort
  startTime: "2022-08-30T06:59:27Z"
```

```
$ k exec -it web -n dev -- sh
# cat /var/run/secrets/kubernetes.io/serviceaccount/token
eyJhbGciOiJSUzI1NiIsImtpZCI6IjNROWFvUGhIWk9sYzBHT3JvOUJDb2wtX29Tc1Z0blRVUmxpeEhmaUpIUFEifQ.eyJpc3MiOiJrdWJlcm5ldGVzL3NlcnZpY2VhY2NvdW50Iiwia3ViZXJuZXRlcy5pby9zZXJ2aWNlYWNjb3VudC9uYW1lc3BhY2UiOiJkZXYiLCJrdWJlcm5ldGVzLmlvL3NlcnZpY2VhY2NvdW50L3NlY3JldC5uYW1lIjoiZGVmYXVsdC10b2tlbi03eHY4biIsImt1YmVybmV0ZXMuaW8vc2VydmljZWFjY291bnQvc2VydmljZS1hY2NvdW50Lm5hbWUiOiJkZWZhdWx0Iiwia3ViZXJuZXRlcy5pby9zZXJ2aWNlYWNjb3VudC9zZXJ2aWNlLWFjY291bnQudWlkIjoiMzA4ODQ2N2MtMjRmNC00OWJmLTkyMTItYWJkMzJkYWE4OTQwIiwic3ViIjoic3lzdGVtOnNlcnZpY2VhY2NvdW50OmRldjpkZWZhdWx0In0.GfHynulFu_d9F1rq_hApxwuInPlFmJMkt8BLqfRsk8gPtxLrQXE8aJG1PzZXlYxW5oxU-QkqE3StqDZ2gfpvJfLgBMmRT3u7kt5N2zQ7L9slxJt_sazdqrpxV6848yHR_gMM3hGpKOfShyvI_vmZ9IbIIE3mpuXFbUNk6T2i5OuZgrqCX462OMp_gVMIe1DRAmxHRkU6qjPb7KSCbZsE1bu8Ov5Ns6_L5Peg9di4nge5wGj60ggiZ927Pkr93FlFyDp8xGNIjgXnGLPjiN5Lyz8sfWm-Gci-HDChMhN1NMMRjNGGaC3MJ-VbXlsibBhC0rsG9CWmnlQs9I-5OOrR6A
```

```
$ k get secrets -n dev
NAME                  TYPE                                  DATA   AGE
default-token-7xv8n   kubernetes.io/service-account-token   3      22m
$ k describe secrets -n dev default-token-7xv8n
Name:         default-token-7xv8n
Namespace:    dev
Labels:       <none>
Annotations:  kubernetes.io/service-account.name: default
              kubernetes.io/service-account.uid: 3088467c-24f4-49bf-9212-abd32daa8940

Type:  kubernetes.io/service-account-token

Data
====
token:      eyJhbGciOiJSUzI1NiIsImtpZCI6IjNROWFvUGhIWk9sYzBHT3JvOUJDb2wtX29Tc1Z0blRVUmxpeEhmaUpIUFEifQ.eyJpc3MiOiJrdWJlcm5ldGVzL3NlcnZpY2VhY2NvdW50Iiwia3ViZXJuZXRlcy5pby9zZXJ2aWNlYWNjb3VudC9uYW1lc3BhY2UiOiJkZXYiLCJrdWJlcm5ldGVzLmlvL3NlcnZpY2VhY2NvdW50L3NlY3JldC5uYW1lIjoiZGVmYXVsdC10b2tlbi03eHY4biIsImt1YmVybmV0ZXMuaW8vc2VydmljZWFjY291bnQvc2VydmljZS1hY2NvdW50Lm5hbWUiOiJkZWZhdWx0Iiwia3ViZXJuZXRlcy5pby9zZXJ2aWNlYWNjb3VudC9zZXJ2aWNlLWFjY291bnQudWlkIjoiMzA4ODQ2N2MtMjRmNC00OWJmLTkyMTItYWJkMzJkYWE4OTQwIiwic3ViIjoic3lzdGVtOnNlcnZpY2VhY2NvdW50OmRldjpkZWZhdWx0In0.GfHynulFu_d9F1rq_hApxwuInPlFmJMkt8BLqfRsk8gPtxLrQXE8aJG1PzZXlYxW5oxU-QkqE3StqDZ2gfpvJfLgBMmRT3u7kt5N2zQ7L9slxJt_sazdqrpxV6848yHR_gMM3hGpKOfShyvI_vmZ9IbIIE3mpuXFbUNk6T2i5OuZgrqCX462OMp_gVMIe1DRAmxHRkU6qjPb7KSCbZsE1bu8Ov5Ns6_L5Peg9di4nge5wGj60ggiZ927Pkr93FlFyDp8xGNIjgXnGLPjiN5Lyz8sfWm-Gci-HDChMhN1NMMRjNGGaC3MJ-VbXlsibBhC0rsG9CWmnlQs9I-5OOrR6A
ca.crt:     1066 bytes
namespace:  3 bytes
```

The token read inside the pod is identical to the one stored in the default-token-7xv8n Secret.
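The token is a JSON Web Token (JWT). It consists of three base64url-encoded segments separated by dots, and the middle segment holds the claims. Below is a minimal shell sketch for viewing those claims, assuming GNU coreutils base64 on the machine where it runs. The function name jwt_payload is hypothetical, and the function only decodes the payload: it does not verify the signature.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print the decoded payload (claims) of a JWT such as a
# service account token. It does NOT verify the signature.
jwt_payload() {
  local p
  # The payload is the second dot-separated segment, base64url-encoded:
  # map the URL-safe alphabet back to standard base64.
  p=$(printf '%s' "$1" | cut -d. -f2 | tr '_-' '/+')
  # base64url drops "=" padding; restore it so base64 -d accepts the input.
  case $(( ${#p} % 4 )) in
    2) p="${p}==" ;;
    3) p="${p}=" ;;
  esac
  printf '%s' "$p" | base64 -d
}
```

Usage from the master: jwt_payload "$(k exec web -n dev -- cat /var/run/secrets/kubernetes.io/serviceaccount/token)". For the token above, the claims should include the namespace dev and "sub":"system:serviceaccount:dev:default".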

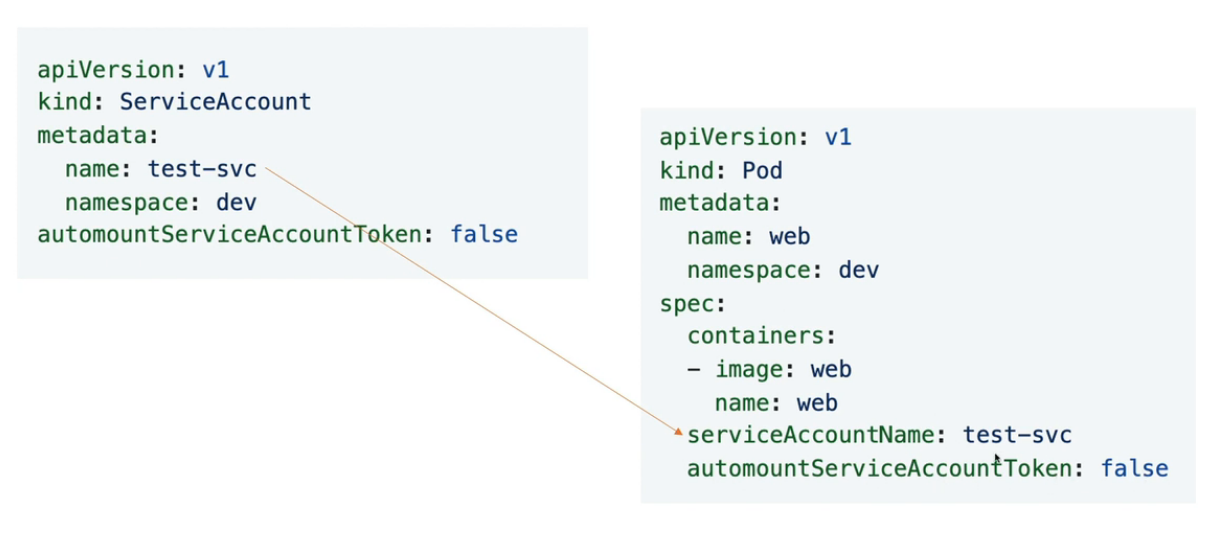

7.1 Create a service account: test-svc

```
$ k create sa test-svc -n dev --dry-run=client -o yaml > svc.yaml
$ vim svc.yaml
apiVersion: v1
kind: ServiceAccount
metadata:
  creationTimestamp: null
  name: test-svc
  namespace: dev
automountServiceAccountToken: false  # added
$ k apply -f svc.yaml
$ k get sa -n dev
NAME       SECRETS   AGE
default    1         34m
test-svc   1         2m48s
$ k get secrets -n dev
NAME                   TYPE                                  DATA   AGE
default-token-7xv8n    kubernetes.io/service-account-token   3      34m
test-svc-token-6qs7z   kubernetes.io/service-account-token   3      3m
```

7.2 Create a pod without a mounted service account token: web2

```
$ k run web2 --image nginx -n dev --dry-run=client -o yaml > web2.yaml
$ cat web2.yaml
apiVersion: v1
kind: Pod
metadata:
  creationTimestamp: null
  labels:
    run: web2
  name: web2
  namespace: dev
spec:
  serviceAccountName: test-svc         # added
  automountServiceAccountToken: false  # added
  containers:
  - image: nginx
    name: web2
    resources: {}
  dnsPolicy: ClusterFirst
  restartPolicy: Always
status: {}
$ k apply -f web2.yaml
$ k get pod -n dev web2
NAME   READY   STATUS    RESTARTS   AGE
web2   1/1     Running   0          47s
# no service account token volume is mounted
$ k describe pod -n dev web2
```
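Whether a token is mounted depends on both of the automountServiceAccountToken settings used here. If the pod spec sets the field, the pod value takes precedence over the ServiceAccount's value. If neither sets it, the token is mounted. The helper below is a hypothetical shell model of that rule (the name effective_automount is an assumption), meant only as a way to reason about which setting wins.

```shell
#!/usr/bin/env bash
# Hypothetical helper modelling the precedence rule: the pod-level
# automountServiceAccountToken wins; otherwise the ServiceAccount's value
# applies; if neither is set, the token is mounted (true).
# An empty argument means "field not set".
effective_automount() {
  local sa_value="$1" pod_value="$2"
  if [ -n "$pod_value" ]; then
    echo "$pod_value"
  elif [ -n "$sa_value" ]; then
    echo "$sa_value"
  else
    echo true
  fi
}

# The live values can be read with jsonpath (empty output = not set):
#   sa=$(kubectl get sa test-svc -n dev -o jsonpath='{.automountServiceAccountToken}')
#   pod=$(kubectl get pod web2 -n dev -o jsonpath='{.spec.automountServiceAccountToken}')
#   effective_automount "$sa" "$pod"
```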

7.3 RBAC role permissions for the service account test-svc

```
kubectl create role test-role -n dev --verb=get,list,watch,create --resource=pods,deployments,services,configmaps
kubectl create rolebinding cluster-test-binding -n dev --role=test-role --serviceaccount=dev:test-svc
```
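To confirm the binding, kubectl auth can-i can impersonate the service account. Kubernetes identifies a ServiceAccount by the username system:serviceaccount:<namespace>:<name>. The small helper below builds that string. The name sa_user is hypothetical, and the example checks assume the role created above.

```shell
#!/usr/bin/env bash
# Hypothetical helper: build the username Kubernetes uses for a ServiceAccount
# (system:serviceaccount:<namespace>:<name>), for use with --as.
sa_user() {
  printf 'system:serviceaccount:%s:%s\n' "$1" "$2"
}

# Example checks against test-role (require cluster access):
#   kubectl auth can-i list pods -n dev --as="$(sa_user dev test-svc)"    # expected: yes
#   kubectl auth can-i delete pods -n dev --as="$(sa_user dev test-svc)"  # expected: no
```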

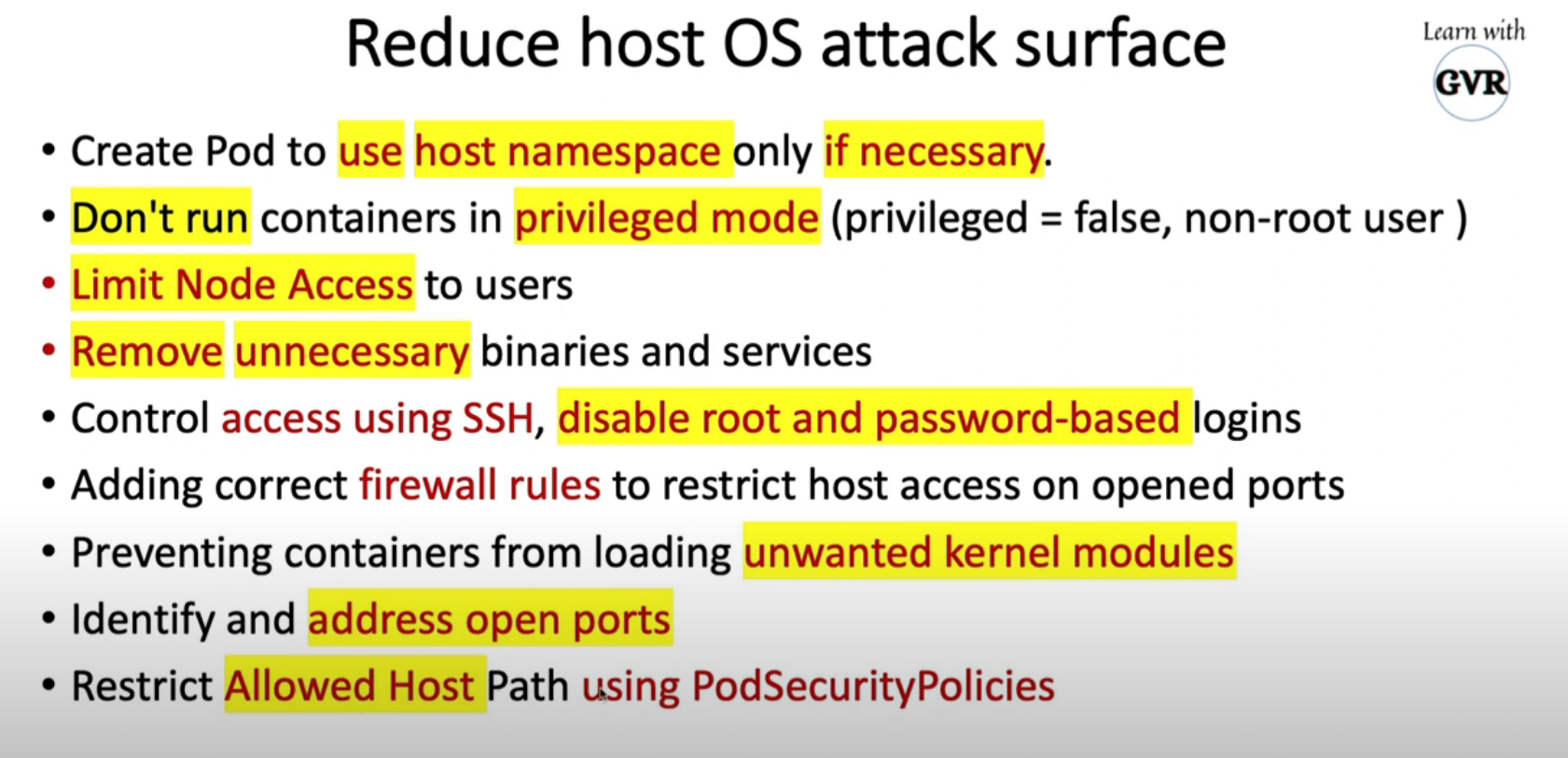

8. Minimize host OS footprint Reduce Attack Surface

```
$ k explain Pod.spec.hostIPC
KIND:     Pod
VERSION:  v1

FIELD:    hostIPC <boolean>

DESCRIPTION:
     Use the host's ipc namespace. Optional: Default to false.

$ k explain Pod.spec.hostNetwork
KIND:     Pod
VERSION:  v1

FIELD:    hostNetwork <boolean>

DESCRIPTION:
     Host networking requested for this pod. Use the host's network namespace.
     If this option is set, the ports that will be used must be specified.
     Default to false.
```
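hostIPC and hostNetwork (like the related hostPID) default to false. Enabling them shares a host namespace with the pod and widens the attack surface. The grep-based sketch below is one way to spot them in saved manifests. The helper name host_namespaces_enabled is hypothetical, and it only matches the plain "key: true" YAML form.

```shell
#!/usr/bin/env bash
# Hypothetical helper: list the host namespaces (hostNetwork, hostPID,
# hostIPC) that a manifest file sets to true, one per line.
host_namespaces_enabled() {
  grep -Eo '(hostNetwork|hostPID|hostIPC):[[:space:]]*true' "$1" | cut -d: -f1 | sort -u
}

# Usage:
#   kubectl get pod web -n dev -o yaml > /tmp/web.yaml
#   host_namespaces_enabled /tmp/web.yaml
```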

8.1 limit node access to users

```
userdel user1
groupdel group1
usermod -s /usr/sbin/nologin user2
useradd -d /opt/sam -s /bin/bash -G admin -u 2328 sam
```

8.2 remove obsolete/unnecessary software

```
systemctl list-units --type service
systemctl stop squid
systemctl disable squid
apt remove squid
```

8.3 ssh hardening

```
ssh-keygen -t rsa
cat /home/mark/.ssh/authorized_keys
# in /etc/ssh/sshd_config:
PermitRootLogin no
PasswordAuthentication no
systemctl restart sshd
```
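The two sshd_config changes can also be scripted. The following is a minimal sketch, assuming GNU sed. The helper name set_sshd_option is hypothetical. It replaces an existing (possibly commented-out) directive or appends a new one.

```shell
#!/usr/bin/env bash
# Hypothetical helper: set "Key value" in an sshd_config-style file,
# replacing an existing (possibly commented-out) line or appending one.
set_sshd_option() {
  local file="$1" key="$2" value="$3"
  if grep -Eq "^[#[:space:]]*${key}[[:space:]]" "$file"; then
    # GNU sed in-place edit; "|" is the delimiter so values may contain "/".
    sed -Ei "s|^[#[:space:]]*${key}[[:space:]].*|${key} ${value}|" "$file"
  else
    printf '%s %s\n' "$key" "$value" >> "$file"
  fi
}

# Usage on a node (as root); validate the config before restarting:
#   set_sshd_option /etc/ssh/sshd_config PermitRootLogin no
#   set_sshd_option /etc/ssh/sshd_config PasswordAuthentication no
#   sshd -t && systemctl restart sshd
```

Running sshd -t first avoids restarting into a broken configuration and locking yourself out of the node.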

8.4 Identify & fix open ports

```
apt list --installed
systemctl list-units --type service
lsmod
systemctl list-units --all | grep -i nginx
systemctl stop nginx
rm /lib/systemd/system/nginx.service
apt remove nginx -y
netstat -atnlp | grep -i 9090 | grep -w -i listen
cat /etc/services | grep -i ssh
netstat -an | grep 22 | grep -w -i listen
netstat -natulp | grep -i light
```
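On newer systems netstat may not be installed, but ss from iproute2 gives the same information. The sketch below reduces ss -ltnH output to a list of listening TCP ports, relying on the fourth column being "local address:port". The helper name listening_ports is hypothetical.

```shell
#!/usr/bin/env bash
# Hypothetical helper: read `ss -ltnH` output on stdin and print the unique
# listening TCP ports. Column 4 is "address:port" (e.g. 0.0.0.0:22, [::]:22),
# so the port is whatever follows the last ":".
listening_ports() {
  awk '{ n = split($4, a, ":"); print a[n] }' | sort -un
}

# Usage on a node:
#   ss -ltnH | listening_ports
# Then find the process behind a suspicious port, e.g. 9090:
#   ss -ltnp 'sport = :9090'
```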

8.5 Restrict Obsolete kernel modules

```
lsmod
vim /etc/modprobe.d/blacklist.conf
# add the following lines:
blacklist sctp
blacklist dccp
shutdown -r now
```
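The blacklist edit can be made idempotent so that re-running it does not duplicate lines. Below is a minimal sketch with a hypothetical helper, blacklist_module. Note that blacklist only stops a module from being auto-loaded through its aliases. An explicit modprobe can still load it, which is why CIS benchmarks additionally use lines such as install sctp /bin/true.

```shell
#!/usr/bin/env bash
# Hypothetical helper: append "blacklist <module>" to a modprobe config file
# unless that exact line is already present (idempotent).
blacklist_module() {
  local module="$1" conf="${2:-/etc/modprobe.d/blacklist.conf}"
  grep -qx "blacklist ${module}" "$conf" 2>/dev/null || echo "blacklist ${module}" >> "$conf"
}

# Usage (as root), then reboot and confirm the modules are gone:
#   blacklist_module sctp
#   blacklist_module dccp
#   shutdown -r now
#   lsmod | grep -E 'sctp|dccp'   # expect no output
```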

8.6 restrict allowed hostPath using PSP

8.7 UFW

```
systemctl enable ufw
systemctl start ufw
ufw default allow outgoing
ufw default deny incoming
ufw allow 1000:2000/tcp
ufw allow from 173.1.3.0/25 to any port 80 proto tcp
# delete a rule by its specification
ufw delete deny 80
# delete a rule by its number (as listed by "ufw status numbered")
ufw delete 5
```

9. Restrict Container Access with AppArmor

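- AppArmor: a Linux kernel security module that provides fine-grained access control for programs on the host OS
- AppArmor profile: a set of rules, which must be loaded (enabled) on the nodes
- An AppArmor profile is loaded in one of 2 modes:
  - Complain mode: violations are only logged, which helps discover what the program does
  - Enforce mode: violations are blocked, which prevents the program from doing them
- Create an AppArmor profile: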

```
$ sudo vi /etc/apparmor.d/deny-write
#include <tunables/global>

profile k8s-apparmor-example-deny-write flags=(attach_disconnected) {
  #include <abstractions/base>

  file,

  # Deny all file writes.
  deny /** w,
}
```

Copy the deny-write profile to the worker node node01:

```
scp deny-write node01:/tmp
```

Load the profile on all nodes. The default profile directory is /etc/apparmor.d (on node01, move the copied file there first):

```
sudo apparmor_parser /etc/apparmor.d/deny-write
```

Apply it to a pod:

```
apiVersion: v1
kind: Pod
metadata:
  name: hello-apparmor
  annotations:
    container.apparmor.security.beta.kubernetes.io/hello: localhost/k8s-apparmor-example-deny-write
spec:
  containers:
  - name: hello
    image: busybox
    command: [ "sh", "-c", "echo 'Hello AppArmor!' && sleep 1h" ]
```

useful commands:

```
# check the service status
systemctl status apparmor
# check whether AppArmor is enabled on the node
cat /sys/module/apparmor/parameters/enabled
# list the loaded profiles
cat /sys/kernel/security/apparmor/profiles
# install the utilities
apt-get install apparmor-utils
# the default profile directory is /etc/apparmor.d/
# generate an AppArmor profile for a program
aa-genprof /root/add_data.sh
# AppArmor module status
aa-status
# load a profile file
apparmor_parser -q /etc/apparmor.d/usr.sbin.nginx
```

```
$ aa-status
apparmor module is loaded.
8 profiles are loaded.
8 profiles are in enforce mode.
   /sbin/dhclient
   /usr/bin/curl
   /usr/lib/NetworkManager/nm-dhcp-client.action
   /usr/lib/NetworkManager/nm-dhcp-helper
   /usr/lib/connman/scripts/dhclient-script
   /usr/sbin/tcpdump
   docker-default
   docker-nginx
0 profiles are in complain mode.
10 processes have profiles defined.
10 processes are in enforce mode.
   docker-default (2136)
   docker-default (2154)
   docker-default (2165)
   docker-default (2181)
   docker-default (2343)
   docker-default (2399)
   docker-default (2461)
   docker-default (2507)
   docker-default (2927)
   docker-default (2959)
0 processes are in complain mode.
0 processes are unconfined but have a profile defined.
```
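Before scheduling the pod, it helps to confirm that the profile is loaded on the node and in which mode. The kernel's list at /sys/kernel/security/apparmor/profiles holds one "<name> (<mode>)" entry per line and is readable as root. The helper apparmor_profile_mode below is a hypothetical sketch that looks a profile up in that list.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print the mode (enforce/complain) of a loaded AppArmor
# profile; exit non-zero if the profile is not in the list.
apparmor_profile_mode() {
  local name="$1" list="${2:-/sys/kernel/security/apparmor/profiles}"
  awk -v n="$name" '
    # the line must start with exactly "<name> (" so prefixes do not match
    index($0, n " (") == 1 {
      m = substr($0, length(n) + 3)
      sub(/\)$/, "", m)
      print m
      found = 1
    }
    END { exit !found }
  ' "$list"
}

# Usage on a node (as root):
#   apparmor_profile_mode k8s-apparmor-example-deny-write
# Inside the running pod, the attached profile can be checked with:
#   kubectl exec hello-apparmor -- cat /proc/1/attr/current
```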

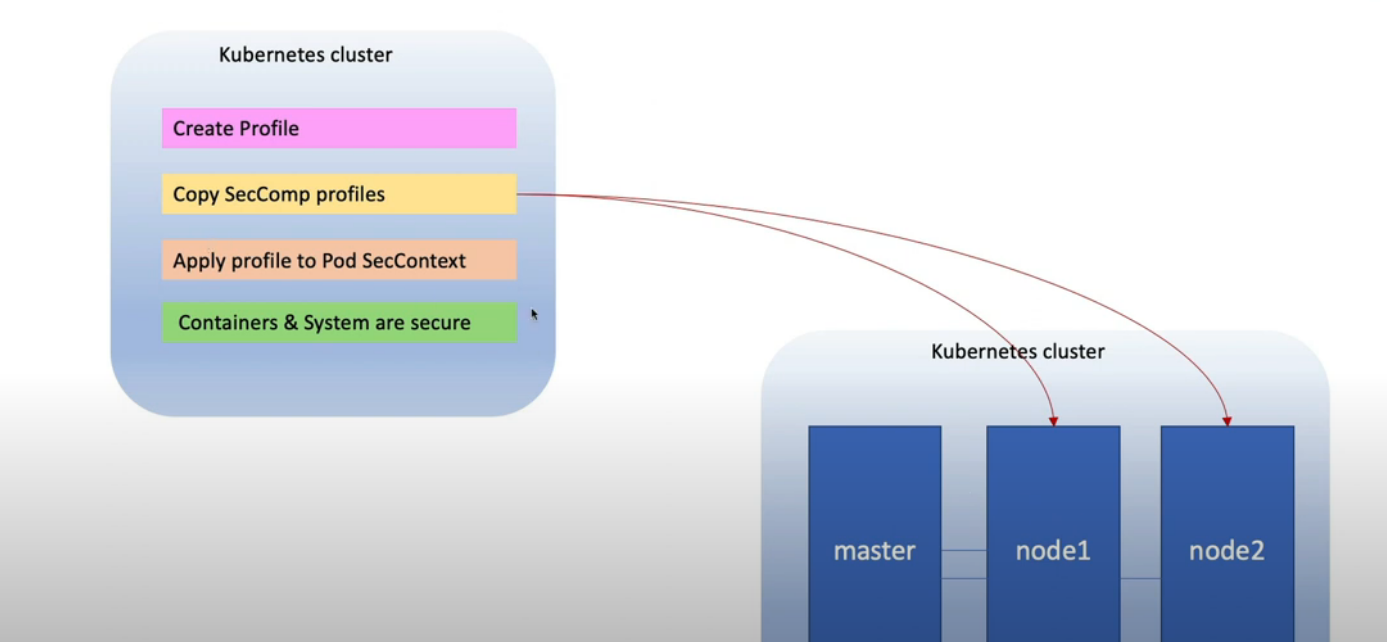

10. Restrict a Container’s Syscalls with seccomp

```
k create deployment web --image nginx --replicas 1
k get po
```
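This section only shows how to create a test Deployment. As a minimal sketch of the topic itself, the container runtime's default seccomp profile can be applied through securityContext.seccompProfile, a GA field since Kubernetes v1.19. The helper write_seccomp_pod and the pod name web-seccomp are hypothetical.

```shell
#!/usr/bin/env bash
# Hypothetical helper: write a Pod manifest whose containers run with the
# container runtime's default seccomp profile (RuntimeDefault).
write_seccomp_pod() {
  local name="$1" out="$2"
  cat > "$out" <<EOF
apiVersion: v1
kind: Pod
metadata:
  name: ${name}
spec:
  securityContext:
    seccompProfile:
      type: RuntimeDefault
  containers:
  - name: ${name}
    image: nginx
EOF
}

# Usage:
#   write_seccomp_pod web-seccomp web-seccomp.yaml
#   kubectl apply -f web-seccomp.yaml
```

For a custom profile, use type: Localhost instead, with localhostProfile set to a path relative to the kubelet's seccomp directory (by default /var/lib/kubelet/seccomp).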

11. Pod Security Policies (PodSecurityPolicy)

```
k create ns dev
k create sa psp-test-sa -n dev
```

```
apiVersion: policy/v1beta1
kind: PodSecurityPolicy
metadata:
  name: dev-psp
spec:
  privileged: false  # do not allow privileged Pods!
  seLinux:
    rule: RunAsAny
  supplementalGroups:
    rule: RunAsAny
  runAsUser:
    rule: RunAsAny
  fsGroup:
    rule: RunAsAny
  volumes:
  - '*'
```

```
$ k apply -f psp.yaml
$ k get psp -A
NAME      PRIV    CAPS   SELINUX    RUNASUSER   FSGROUP    SUPGROUP   READONLYROOTFS   VOLUMES
dev-psp   false          RunAsAny   RunAsAny    RunAsAny   RunAsAny   false            *
$ k describe psp dev-psp
Name:         dev-psp
Namespace:
Labels:       <none>
Annotations:  <none>
API Version:  policy/v1beta1
Kind:         PodSecurityPolicy
Metadata:
  Creation Timestamp:  2022-09-01T08:40:58Z
  Managed Fields:
    API Version:  policy/v1beta1
    Fields Type:  FieldsV1
    fieldsV1:
      f:metadata:
        f:annotations:
          .:
          f:kubectl.kubernetes.io/last-applied-configuration:
      f:spec:
        f:allowPrivilegeEscalation:
        f:fsGroup:
          f:rule:
        f:runAsUser:
          f:rule:
        f:seLinux:
          f:rule:
        f:supplementalGroups:
          f:rule:
        f:volumes:
    Manager:         kubectl-client-side-apply
    Operation:       Update
    Time:            2022-09-01T08:40:58Z
  Resource Version:  308516
  UID:               fc6c4ea9-ec94-4217-aec5-b662d89b986b
Spec:
  Allow Privilege Escalation:  true
  Fs Group:
    Rule:  RunAsAny
  Run As User:
    Rule:  RunAsAny
  Se Linux:
    Rule:  RunAsAny
  Supplemental Groups:
    Rule:  RunAsAny
  Volumes:
    *
Events:  <none>
```

```
k create clusterrole dev-cr --verb=use --resource=podsecuritypolicies --resource-name=dev-psp
# dev-cr is a ClusterRole, so the RoleBinding must reference it with --clusterrole
k create rolebinding dev-binding -n dev --clusterrole=dev-cr --serviceaccount=dev:psp-test-sa
```

12. Open Policy Agent - OPA Gatekeeper

```
# option 1: install with kubectl
kubectl apply -f https://raw.githubusercontent.com/open-policy-agent/gatekeeper/release-3.7/deploy/gatekeeper.yaml
# option 2: install with Helm
helm repo add gatekeeper https://open-policy-agent.github.io/gatekeeper/charts --force-update
helm install gatekeeper/gatekeeper --name-template=gatekeeper --namespace gatekeeper-system --create-namespace
```

```
$ k apply -f gatekeeper.yaml
namespace/gatekeeper-system created
resourcequota/gatekeeper-critical-pods created
customresourcedefinition.apiextensions.k8s.io/configs.config.gatekeeper.sh created
customresourcedefinition.apiextensions.k8s.io/constraintpodstatuses.status.gatekeeper.sh created
customresourcedefinition.apiextensions.k8s.io/constrainttemplatepodstatuses.status.gatekeeper.sh created
customresourcedefinition.apiextensions.k8s.io/constrainttemplates.templates.gatekeeper.sh created
serviceaccount/gatekeeper-admin created
podsecuritypolicy.policy/gatekeeper-admin created
role.rbac.authorization.k8s.io/gatekeeper-manager-role created
clusterrole.rbac.authorization.k8s.io/gatekeeper-manager-role created
rolebinding.rbac.authorization.k8s.io/gatekeeper-manager-rolebinding created
clusterrolebinding.rbac.authorization.k8s.io/gatekeeper-manager-rolebinding created
secret/gatekeeper-webhook-server-cert created
service/gatekeeper-webhook-service created
deployment.apps/gatekeeper-audit created
deployment.apps/gatekeeper-controller-manager created
poddisruptionbudget.policy/gatekeeper-controller-manager created
validatingwebhookconfiguration.admissionregistration.k8s.io/gatekeeper-validating-webhook-configuration created
```

```
$ k get all -n gatekeeper-system
NAME                                                READY   STATUS    RESTARTS   AGE
pod/gatekeeper-audit-85cb65679d-dxksb               1/1     Running   0          87s
pod/gatekeeper-controller-manager-57f4675cb-js5wh   1/1     Running   0          87s
pod/gatekeeper-controller-manager-57f4675cb-rh2lw   1/1     Running   0          87s
pod/gatekeeper-controller-manager-57f4675cb-z994p   1/1     Running   0          87s

NAME                                 TYPE        CLUSTER-IP      EXTERNAL-IP   PORT(S)   AGE
service/gatekeeper-webhook-service   ClusterIP   10.102.147.19   <none>        443/TCP   87s

NAME                                            READY   UP-TO-DATE   AVAILABLE   AGE
deployment.apps/gatekeeper-audit                1/1     1            1           87s
deployment.apps/gatekeeper-controller-manager   3/3     3            3           87s

NAME                                                      DESIRED   CURRENT   READY   AGE
replicaset.apps/gatekeeper-audit-85cb65679d               1         1         1       87s
replicaset.apps/gatekeeper-controller-manager-57f4675cb   3         3         3       87s
```

k8srequiredlabels_template.yaml

```
apiVersion: templates.gatekeeper.sh/v1beta1
kind: ConstraintTemplate
metadata:
  name: k8srequiredlabels
spec:
  crd:
    spec:
      names:
        kind: K8sRequiredLabels
      validation:
        # Schema for the `parameters` field
        openAPIV3Schema:
          type: object
          properties:
            labels:
              type: array
              items:
                type: string
  targets:
    - target: admission.k8s.gatekeeper.sh
      rego: |
        package k8srequiredlabels

        violation[{"msg": msg, "details": {"missing_labels": missing}}] {
          provided := {label | input.review.object.metadata.labels[label]}
          required := {label | label := input.parameters.labels[_]}
          missing := required - provided
          count(missing) > 0
          msg := sprintf("you must provide labels: %v", [missing])
        }
```

```
k apply -f k8srequiredlabels_template.yaml
```

```
$ k api-resources | grep crd
customresourcedefinitions       crd,crds   apiextensions.k8s.io/v1    false   CustomResourceDefinition
bgpconfigurations                          crd.projectcalico.org/v1   false   BGPConfiguration
bgppeers                                   crd.projectcalico.org/v1   false   BGPPeer
blockaffinities                            crd.projectcalico.org/v1   false   BlockAffinity
clusterinformations                        crd.projectcalico.org/v1   false   ClusterInformation
felixconfigurations                        crd.projectcalico.org/v1   false   FelixConfiguration
globalnetworkpolicies                      crd.projectcalico.org/v1   false   GlobalNetworkPolicy
globalnetworksets                          crd.projectcalico.org/v1   false   GlobalNetworkSet
hostendpoints                              crd.projectcalico.org/v1   false   HostEndpoint
ipamblocks                                 crd.projectcalico.org/v1   false   IPAMBlock
ipamconfigs                                crd.projectcalico.org/v1   false   IPAMConfig
ipamhandles                                crd.projectcalico.org/v1   false   IPAMHandle
ippools                                    crd.projectcalico.org/v1   false   IPPool
kubecontrollersconfigurations              crd.projectcalico.org/v1   false   KubeControllersConfiguration
networkpolicies                            crd.projectcalico.org/v1   true    NetworkPolicy
networksets                                crd.projectcalico.org/v1   true    NetworkSet
$ k api-resources
...........
constrainttemplates                        templates.gatekeeper.sh/v1beta1   false   ConstraintTemplate
$ k get crd
NAME                                                  CREATED AT
bgpconfigurations.crd.projectcalico.org               2022-07-27T08:23:44Z
bgppeers.crd.projectcalico.org                        2022-07-27T08:23:44Z
blockaffinities.crd.projectcalico.org                 2022-07-27T08:23:44Z
clusterinformations.crd.projectcalico.org             2022-07-27T08:23:44Z
configs.config.gatekeeper.sh                          2022-09-01T09:14:37Z
constraintpodstatuses.status.gatekeeper.sh            2022-09-01T09:14:37Z
constrainttemplatepodstatuses.status.gatekeeper.sh    2022-09-01T09:14:37Z
constrainttemplates.templates.gatekeeper.sh           2022-09-01T09:14:37Z
felixconfigurations.crd.projectcalico.org             2022-07-27T08:23:44Z
globalnetworkpolicies.crd.projectcalico.org           2022-07-27T08:23:44Z
globalnetworksets.crd.projectcalico.org               2022-07-27T08:23:44Z
hostendpoints.crd.projectcalico.org                   2022-07-27T08:23:44Z
ipamblocks.crd.projectcalico.org                      2022-07-27T08:23:44Z
ipamconfigs.crd.projectcalico.org                     2022-07-27T08:23:44Z
ipamhandles.crd.projectcalico.org                     2022-07-27T08:23:44Z
ippools.crd.projectcalico.org                         2022-07-27T08:23:44Z
k8srequiredlabels.constraints.gatekeeper.sh           2022-09-01T09:24:54Z
kubecontrollersconfigurations.crd.projectcalico.org   2022-07-27T08:23:44Z
networkpolicies.crd.projectcalico.org                 2022-07-27T08:23:44Z
networksets.crd.projectcalico.org                     2022-07-27T08:23:44Z
$ k get constrainttemplates
NAME                AGE
k8srequiredlabels   4m18s
```

```
$ k describe constrainttemplates k8srequiredlabels
Name:         k8srequiredlabels
Namespace:
Labels:       <none>
Annotations:  <none>
API Version:  templates.gatekeeper.sh/v1beta1
Kind:         ConstraintTemplate
Metadata:
  Creation Timestamp:  2022-09-01T09:24:54Z
  Generation:          1
  Managed Fields:
    API Version:  templates.gatekeeper.sh/v1beta1
    Fields Type:  FieldsV1
    fieldsV1:
      f:metadata:
        f:annotations:
          .:
          f:kubectl.kubernetes.io/last-applied-configuration:
      f:spec:
        .:
        f:crd:
          .:
          f:spec:
            .:
            f:names:
              .:
              f:kind:
            f:validation:
              .:
              f:openAPIV3Schema:
                .:
                f:properties:
                f:type:
        f:targets:
    Manager:      kubectl-client-side-apply
    Operation:    Update
    Time:         2022-09-01T09:24:54Z
    API Version:  templates.gatekeeper.sh/v1beta1
    Fields Type:  FieldsV1
    fieldsV1:
      f:status:
        .:
        f:byPod:
        f:created:
    Manager:         gatekeeper
    Operation:       Update
    Time:            2022-09-01T09:24:55Z
  Resource Version:  312752
  UID:               6b85c61b-a1d9-403d-851c-bbe8b3e8ef7c
Spec:
  Crd:
    Spec:
      Names:
        Kind:  K8sRequiredLabels
      Validation:
        openAPIV3Schema:
          Properties:
            Labels:
              Items:
                Type:  string
              Type:    array
          Type:        object
  Targets:
    Rego:  package k8srequiredlabels

violation[{"msg": msg, "details": {"missing_labels": missing}}] {
  provided := {label | input.review.object.metadata.labels[label]}
  required := {label | label := input.parameters.labels[_]}
  missing := required - provided
  count(missing) > 0
  msg := sprintf("you must provide labels: %v", [missing])
}

    Target:  admission.k8s.gatekeeper.sh
Status:
  By Pod:
    Id:                   gatekeeper-audit-85cb65679d-dxksb
    Observed Generation:  1
    Operations:
      audit
      status
    Template UID:         6b85c61b-a1d9-403d-851c-bbe8b3e8ef7c
    Id:                   gatekeeper-controller-manager-57f4675cb-js5wh
    Observed Generation:  1
    Operations:
      webhook
    Template UID:         6b85c61b-a1d9-403d-851c-bbe8b3e8ef7c
    Id:                   gatekeeper-controller-manager-57f4675cb-rh2lw
    Observed Generation:  1
    Operations:
      webhook
    Template UID:         6b85c61b-a1d9-403d-851c-bbe8b3e8ef7c
    Id:                   gatekeeper-controller-manager-57f4675cb-z994p
    Observed Generation:  1
    Operations:
      webhook
    Template UID:         6b85c61b-a1d9-403d-851c-bbe8b3e8ef7c
  Created:                true
Events:                   <none>
```

constraint.yaml: every Deployment must have the label env.

```
$ cat constraint.yaml
apiVersion: constraints.gatekeeper.sh/v1beta1
kind: K8sRequiredLabels
metadata:
  name: deploy-must-have-env
spec:
  match:
    kinds:
      - apiGroups: ["apps"]
        kinds: ["Deployment"]
  parameters:
    labels: ["env"]
```

```
k apply -f constraint.yaml
```

Reference: Deployments

deployment.yaml

```
apiVersion: apps/v1
kind: Deployment
metadata:
  name: nginx-deployment
  labels:
    app: nginx
spec:
  replicas: 3
  selector:
    matchLabels:
      app: nginx
  template:
    metadata:
      labels:
        app: nginx
    spec:
      containers:
      - name: nginx
        image: nginx:1.14.2
        ports:
        - containerPort: 80
```

Without the env label, the Deployment is rejected:

```
$ k apply -f deployment.yaml
Error from server ([deploy-must-have-env] you must provide labels: {"env"}): error when creating "deployment.yaml": admission webhook "validation.gatekeeper.sh" denied the request: [deploy-must-have-env] you must provide labels: {"env"}
```

Edit deployment.yaml to add the env label:

```
apiVersion: apps/v1
kind: Deployment
metadata:
  name: nginx-deployment
  labels:
    app: nginx
    env: demo
spec:
  replicas: 1
  selector:
    matchLabels:
      app: nginx
  template:
    metadata:
      labels:
        app: nginx
    spec:
      containers:
      - name: nginx
        image: nginx:1.14.2
        ports:
        - containerPort: 80
```

Apply it:

```
$ k apply -f deployment.yaml
deployment.apps/nginx-deployment created
$ k get deployments
NAME               READY   UP-TO-DATE   AVAILABLE   AGE
nginx-deployment   1/1     1            1           39s
```

To require that every Namespace is created with the label gatekeeper:

k8srequiredlabels_template_ns.yaml

```
apiVersion: constraints.gatekeeper.sh/v1beta1
kind: K8sRequiredLabels
metadata:
  name: ns-must-have-gk
spec:
  match:
    kinds:
      - apiGroups: [""]
        kinds: ["Namespace"]
  parameters:
    labels: ["gatekeeper"]
```

```
$ k apply -f k8srequiredlabels_template_ns.yaml
$ k create ns test
Error from server ([ns-must-have-gk] you must provide labels: {"gatekeeper"}): admission webhook "validation.gatekeeper.sh" denied the request: [ns-must-have-gk] you must provide labels: {"gatekeeper"}
```

How to create the namespace correctly:

```
$ k create ns test --dry-run=client -oyaml > test_ns.yaml
$ vim test_ns.yaml
apiVersion: v1
kind: Namespace
metadata:
  creationTimestamp: null
  name: test
  labels:             # added
    gatekeeper: test  # added
spec: {}
status: {}
# the namespace is now created successfully
$ k apply -f test_ns.yaml
namespace/test created
$ k get ns | grep test
test   Active   47s
```
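The two constraints above differ only in name, API group, kind and label. The helper below is a hypothetical sketch (the name required_label_constraint is an assumption) that prints a K8sRequiredLabels constraint for the template above from those four values. Pass "" as the group for core kinds such as Namespace.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print a K8sRequiredLabels constraint (for the
# k8srequiredlabels template) requiring one label on one kind.
required_label_constraint() {
  local name="$1" group="$2" kind="$3" label="$4"
  cat <<EOF
apiVersion: constraints.gatekeeper.sh/v1beta1
kind: K8sRequiredLabels
metadata:
  name: ${name}
spec:
  match:
    kinds:
      - apiGroups: ["${group}"]
        kinds: ["${kind}"]
  parameters:
    labels: ["${label}"]
EOF
}

# Usage, reproducing the namespace constraint above:
#   required_label_constraint ns-must-have-gk "" Namespace gatekeeper | kubectl apply -f -
```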

13. Security Context for a Pod or Container

```
apiVersion: v1
kind: Pod
metadata:
  name: security-context-demo
spec:
  securityContext:
    runAsUser: 1000
    runAsGroup: 3000
    fsGroup: 2000
  volumes:
  - name: sec-ctx-vol
    emptyDir: {}
  containers:
  - name: sec-ctx-demo
    image: busybox:1.28
    command: [ "sh", "-c", "sleep 1h" ]
    volumeMounts:
    - name: sec-ctx-vol
      mountPath: /data/demo
    securityContext:
      allowPrivilegeEscalation: false
```

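```shell
$ kubectl apply -f https://k8s.io/examples/pods/security/security-context.yaml
$ kubectl get pod security-context-demo
$ kubectl exec -it security-context-demo -- sh
/ $ id
uid=1000 gid=3000 groups=2000
```

The `id` output reflects the pod-level `securityContext`: UID 1000 comes from `runAsUser`, GID 3000 from `runAsGroup`, and supplementary group 2000 from `fsGroup`. The `/data/demo` volume is group-owned by GID 2000 as well.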
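Container-level `securityContext` fields override the pod-level ones. The sketch below extends the example above with a few settings often used for hardening. The Pod name is hypothetical. Inside this container, the process should run as UID 2000 (the container value wins) with GID 3000:

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: security-context-hardened   # hypothetical name
spec:
  securityContext:
    runAsUser: 1000
    runAsGroup: 3000
    runAsNonRoot: true               # kubelet refuses to start a container running as UID 0
    seccompProfile:
      type: RuntimeDefault           # use the container runtime's default seccomp profile
  containers:
  - name: sec-ctx-demo
    image: busybox:1.28
    command: [ "sh", "-c", "sleep 1h" ]
    securityContext:
      runAsUser: 2000                # overrides the pod-level runAsUser: 1000
      allowPrivilegeEscalation: false
      readOnlyRootFilesystem: true   # container cannot write to its root filesystem
      capabilities:
        drop: ["ALL"]                # remove all Linux capabilities
```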

14. Manage Kubernetes secrets

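- Opaque (generic) Secrets (`Opaque`)

- Service account token Secrets (`kubernetes.io/service-account-token`)

- Docker config Secrets (`kubernetes.io/dockerconfigjson`, or the legacy `kubernetes.io/dockercfg`)

```shell
kubectl create secret docker-registry my-secret --docker-server=DOCKER_REGISTRY_SERVER --docker-username=DOCKER_USER --docker-password=DOCKER_PASSWORD --docker-email=DOCKER_EMAIL
```

- Basic authentication Secrets (`kubernetes.io/basic-auth`)

- SSH authentication Secrets (`kubernetes.io/ssh-auth`)

- TLS Secrets (`kubernetes.io/tls`)

```shell
kubectl create secret tls tls-secret --cert=path/to/tls.cert --key=path/to/tls.key
```

- Bootstrap token Secrets (`bootstrap.kubernetes.io/token`)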
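Typed Secrets can also be written as manifests. The sketch below shows a `kubernetes.io/basic-auth` Secret. The name is hypothetical and the credentials are placeholders. This type expects the keys `username` and/or `password`. `stringData` accepts plain text, which the API server stores base64-encoded under `data`:

```yaml
apiVersion: v1
kind: Secret
metadata:
  name: basic-auth-demo        # hypothetical name
type: kubernetes.io/basic-auth
stringData:                    # plain-text input; stored base64-encoded under .data
  username: admin              # placeholder
  password: t0p-Secret         # placeholder
```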

Secret as Data to a Container Using a Volume

```shell
kubectl create secret generic mysecret --from-literal=username=devuser --from-literal=password='S!B\*d$zDsb='
```

Decode Secret

```shell
kubectl get secrets/mysecret --template='{{.data.password}}' | base64 -d
```
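The decode step works because Secret values are only base64-encoded, not encrypted. As a sketch, the object created by the `kubectl create secret generic mysecret ...` command above should be equivalent to the manifest below. The values were obtained by base64-encoding `devuser` and `S!B\*d$zDsb=`:

```yaml
apiVersion: v1
kind: Secret
metadata:
  name: mysecret
type: Opaque
data:
  username: ZGV2dXNlcg==       # base64 of "devuser"
  password: UyFCXCpkJHpEc2I9   # base64 of 'S!B\*d$zDsb='
```

Anyone allowed to `get` this Secret can read the plain values. That is why RBAC on Secrets and encryption at rest matter.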

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: mypod
spec:
  containers:
  - name: mypod
    image: redis
    volumeMounts:
    - name: secret-volume
      mountPath: "/etc/secret-volume"
      readOnly: true
  volumes:
  - name: secret-volume
    secret:
      secretName: mysecret
```
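By default, every key of the Secret becomes a file under the mount path (here `/etc/secret-volume/username` and `/etc/secret-volume/password`). You can restrict this with `items` and tighten file permissions with `defaultMode`. In the sketch below, the Pod name is hypothetical. Only `username` is projected, to `/etc/secret-volume/my-group/my-username`:

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: mypod-items            # hypothetical name
spec:
  containers:
  - name: mypod
    image: redis
    volumeMounts:
    - name: secret-volume
      mountPath: "/etc/secret-volume"
      readOnly: true
  volumes:
  - name: secret-volume
    secret:
      secretName: mysecret
      defaultMode: 0400        # octal file mode for the projected files (YAML allows octal)
      items:                   # project only the listed keys
      - key: username
        path: my-group/my-username
```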

Secret as Data to a Container Using Environment Variables

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: secret-env-pod
spec:
  containers:
  - name: mycontainer
    image: redis
    # expose every key of the Secret as an environment variable
    envFrom:
    - secretRef:
        name: mysecret-2
    # expose specific keys under chosen variable names
    env:
    - name: SECRET_USERNAME
      valueFrom:
        secretKeyRef:
          name: mysecret
          key: username
    - name: SECRET_PASSWORD
      valueFrom:
        secretKeyRef:
          name: mysecret
          key: password
  restartPolicy: Never
```

Ref: https://kubernetes.io/docs/concepts/configuration/secret

Ref: https://kubernetes.io/docs/tasks/administer-cluster/encrypt-data

Ref: https://kubernetes.io/docs/tasks/configmap-secret/managing-secret-using-kubectl/
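The encrypt-data reference above covers encrypting Secrets at rest in etcd. Below is a minimal sketch of an `EncryptionConfiguration`. It assumes the `aescbc` provider and uses a placeholder key; you can generate a real one with `head -c 32 /dev/urandom | base64`. The file is passed to kube-apiserver with `--encryption-provider-config`. On a kubeadm cluster, this typically means editing `/etc/kubernetes/manifests/kube-apiserver.yaml` and mounting the file into the API server Pod:

```yaml
apiVersion: apiserver.config.k8s.io/v1
kind: EncryptionConfiguration
resources:
  - resources:
      - secrets
    providers:
      - aescbc:                 # first provider: used to encrypt new writes
          keys:
            - name: key1
              secret: <BASE64-ENCODED 32-BYTE KEY>   # placeholder
      - identity: {}            # lets the API server still read unencrypted Secrets
```

Existing Secrets are only re-encrypted when they are rewritten, for example with `kubectl get secrets --all-namespaces -o json | kubectl replace -f -`.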

15. Container Runtime Sandboxes gVisor runsc kata containers

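- Sandboxing means isolating a container from the host.

- Docker applies a default seccomp profile to restrict privileges; profiles can be written as allow-lists or deny-lists.

- AppArmor gives fine-grained control over what a container can access, also as an allow-list or deny-list.

- When a container runs many applications, seccomp/AppArmor profiles become impractical to write and maintain; a sandbox isolates the container as a whole instead.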

15.1 gVisor

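gVisor sits between the container and the Linux kernel, and each container gets its own gVisor sandbox. gVisor has two components:

- Sentry: acts as the kernel for the container, handling its system calls.

- Gofer: a file proxy that accesses host files on the container's behalf; it is the intermediary between the container and the OS.

On the host OS, gVisor runs sandboxes with runsc, its OCI-compatible runtime.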

```yaml
apiVersion: node.k8s.io/v1  # RuntimeClass is defined in the node.k8s.io API group
kind: RuntimeClass
metadata:
  name: myclass  # the name the RuntimeClass will be referenced by (non-namespaced)
handler: runsc   # the name of the corresponding CRI configuration
```
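A Pod opts into the sandbox through `spec.runtimeClassName`. The sketch below assumes the `myclass` RuntimeClass above (handler `runsc`) exists. The Pod name is hypothetical. If no node's container runtime has a `runsc` handler configured, the Pod's sandbox will fail to start:

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: nginx-gvisor           # hypothetical name
spec:
  runtimeClassName: myclass    # run this Pod under the runsc (gVisor) handler
  containers:
  - name: nginx
    image: nginx
```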

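15.2 Kata Containers

Kata runs containers inside lightweight VMs, so each container gets its own kernel, as a VM does.

Kata Containers require hardware virtualization support. They therefore typically cannot run on cloud VMs unless nested virtualization is available (GCP supports it).

Kata Containers use the kata runtime.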

```yaml
apiVersion: node.k8s.io/v1  # RuntimeClass is defined in the node.k8s.io API group
kind: RuntimeClass
metadata:
  # The name the RuntimeClass will be referenced by (non-namespaced).
  # Use a different name if the gVisor "myclass" above also exists.
  name: myclass
handler: kata    # the name of the corresponding CRI configuration
```
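Because Kata needs hardware virtualization, it is often installed on only some nodes. RuntimeClass has a `scheduling.nodeSelector` field, which is merged into the nodeSelector of every Pod that uses the class, so those Pods land only on capable nodes. In the sketch below, both the RuntimeClass name and the node label are hypothetical:

```yaml
apiVersion: node.k8s.io/v1
kind: RuntimeClass
metadata:
  name: kata-pinned                    # hypothetical name
handler: kata
scheduling:
  nodeSelector:
    sandbox.example.com/kata: "true"   # hypothetical label on Kata-capable nodes
```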

15.3 Install gVisor