
Chapter 04 Classic Convolutional Neural Network Models

Preface

1. Overview

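This chapter covers convolutional neural networks, a deep learning algorithm, as applied to image classification. It focuses on the mainstream classic CNN models LeNet, AlexNet and VGGNet, including their architectures, mathematical derivations, model implementations and implementation in the PyTorch framework. You will then apply these models to real-world datasets to perform classification.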

2. Theoretical Goals

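- The basic architecture, training details and mathematics of LeNet

- The basic architecture, training details and mathematics of AlexNet

- The basic architecture, training details and mathematics of VGGNet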

3. Practical Goals

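- Implement LeNet, AlexNet and VGGNet in PyTorch

- Master transfer learning and feature extraction

- Understand the strengths and weaknesses of each classic model for image classification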

4. Practice Datasets

- Flower dataset classification

- Oxford-IIIT dataset classification

- CIFAR-10 dataset classification

5. Contents

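- 1. The CNN model LeNet in detail

- 2. The CNN model AlexNet in detail

- 3. The CNN model VGGNet in detail

- 4. PyTorch practice

- 5. Image classification example: the Flower dataset

- 6. Image classification example: the Oxford-IIIT dataset

- 7. Image classification example: the CIFAR-10 dataset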

Section 1 The Convolutional Neural Network Model LeNet

1.1 Introduction to LeNet

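LeNet was published in 1998. It is one of the earliest convolutional neural networks and it helped drive the development of deep learning. This pioneering work by Yann LeCun was named LeNet5 after many successful iterations going back to 1988. The LeNet5 architecture rests on two ideas: image features are distributed across the entire image, and convolution with learnable parameters extracts similar features at multiple locations efficiently and with few parameters. At the time there were no GPUs to help with training, and even CPUs were slow, so saving parameters and computation was a key advance. This contrasts with feeding every pixel as a separate input into a large multilayer neural network.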

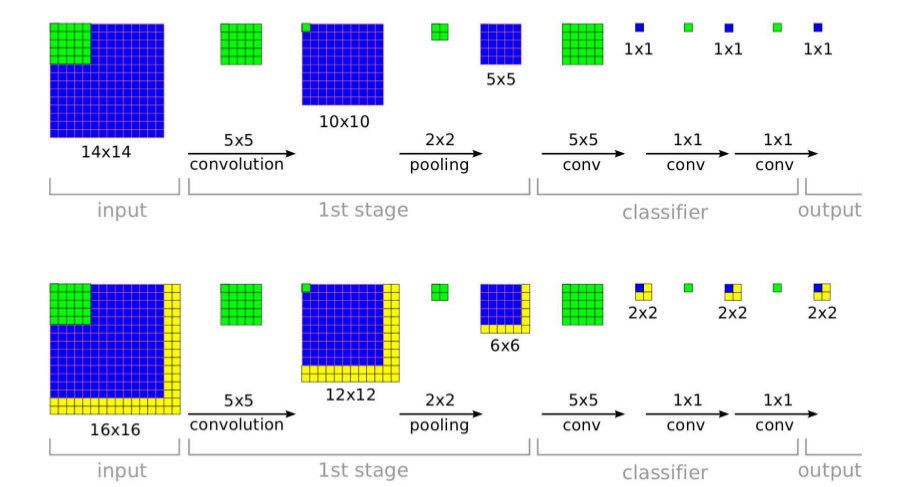

1.2 LeNet Model Architecture

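LeNet contains convolutional layers, pooling layers and fully connected layers, which are the basic building blocks of modern CNNs:

- Input layer: a 2D image of size $32\times32$, either grayscale or RGB. The original LeNet used grayscale input, and the PyTorch implementation below takes 3-channel RGB input

- Convolutional layers: the 2D convolutional layers C1, C3 and C5. C1 and C3 use convolution to reduce the spatial size of their input feature maps. C5 turns its $16\times5\times5$ input into a $120\times1\times1$ output, which is then reshaped into a one-dimensional vector of length 120. This is a common way of converting the output of convolutional layers into the input of fully connected layers

- Pooling layers: S2 and S4, the downsampling layers, with a $2\times2$ window. For example, the $28\times28$ maps produced by C1 are split into non-overlapping $2\times2$ blocks, which gives $14\times14$ blocks. One value per block becomes the new pixel, so S2 outputs 6 maps of size $14\times14$. The original LeNet-5 subsampled by summing each block and applying a trainable coefficient and bias. The PyTorch implementation below uses max pooling instead, keeping the largest value in each block. LeNet uses a sigmoid-type squashing activation, while later CNN models mostly use ReLU

- Fully connected layer: F6, a linear layer that reduces the feature vector from length 120 to 84

- Output layer: built from Euclidean radial basis function (RBF) units. Later CNN models mostly use softmax output units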
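Each spatial size above follows from the usual output-size rule $\lfloor (n + 2p - k)/s \rfloor + 1$ for input size $n$, kernel $k$, stride $s$ and padding $p$. The pure-Python sketch below uses the illustrative helpers `conv_out` and `lenet5_trace`, with the channel counts 6/16/120 taken from the original LeNet-5. It reproduces the 28 → 14 → 10 → 5 → 1 progression, including C5 turning the $16\times5\times5$ input into a $120\times1\times1$ output:

```python
def conv_out(size, kernel, stride=1, padding=0):
    """Output side length of a square conv or pooling layer."""
    return (size + 2 * padding - kernel) // stride + 1


def lenet5_trace(input_size=32):
    """Trace (name, channels, height, width) through C1-S2-C3-S4-C5 of LeNet-5."""
    # (layer name, kernel size, stride, output channels)
    layers = [("C1", 5, 1, 6), ("S2", 2, 2, 6), ("C3", 5, 1, 16),
              ("S4", 2, 2, 16), ("C5", 5, 1, 120)]
    shapes = []
    size = input_size
    for name, kernel, stride, channels in layers:
        size = conv_out(size, kernel, stride)
        shapes.append((name, channels, size, size))
    return shapes


for name, c, h, w in lenet5_trace():
    print(f"{name}: {c} x {h} x {w}")
```

The 5×5 C5 kernel covers the whole 5×5 map, so C5 acts like a fully connected layer whose output is already a 120-element vector.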

1.3 LeNet PyTorch

    # %load lenet.py
    import torch
    import torch.nn as nn
    import torch.nn.functional as F


    class LeNet(nn.Module):
        """LeNet-style CNN for 3x32x32 inputs (16/32 conv channels instead of the original 6/16)."""

        def __init__(self, num_classes=10):
            super(LeNet, self).__init__()
            self.conv1 = nn.Conv2d(3, 16, kernel_size=5)    # 3x32x32  -> 16x28x28
            self.pool1 = nn.MaxPool2d(2, 2)                  # 16x28x28 -> 16x14x14
            self.conv2 = nn.Conv2d(16, 32, kernel_size=5)   # 16x14x14 -> 32x10x10
            self.pool2 = nn.MaxPool2d(2, 2)                  # 32x10x10 -> 32x5x5
            self.fc1 = nn.Linear(32 * 5 * 5, 120)
            self.fc2 = nn.Linear(120, 84)
            self.fc3 = nn.Linear(84, num_classes)

        def forward(self, x):
            x = F.relu(self.conv1(x))
            x = self.pool1(x)
            x = F.relu(self.conv2(x))
            x = self.pool2(x)
            # Flatten to (batch, 800); fc1 raises a shape error if the input is not 32x32
            x = torch.flatten(x, 1)
            x = F.relu(self.fc1(x))
            x = F.relu(self.fc2(x))
            x = self.fc3(x)          # raw logits; softmax is applied inside the loss
            return x


    def build_lenet5(phase, num_classes):
        if phase != "test" and phase != "train":
            print("ERROR: Phase: " + phase + " not recognized")
            return
        return LeNet(num_classes=num_classes)


    from torchsummary import summary   # third-party package: pip install torchsummary

    net = build_lenet5('train', 10)
    net.cuda()                          # summary() runs on "cuda" by default
    summary(net, (3, 32, 32))

Output:

    ----------------------------------------------------------------
    Layer (type)            Output Shape          Param #
    ================================================================
    Conv2d-1                [-1, 16, 28, 28]      1,216
    MaxPool2d-2             [-1, 16, 14, 14]      0
    Conv2d-3                [-1, 32, 10, 10]      12,832
    MaxPool2d-4             [-1, 32, 5, 5]        0
    Linear-5                [-1, 120]             96,120
    Linear-6                [-1, 84]              10,164
    Linear-7                [-1, 10]              850
    ================================================================
    Total params: 121,182
    Trainable params: 121,182
    Non-trainable params: 0
    ----------------------------------------------------------------
    Input size (MB): 0.01
    Forward/backward pass size (MB): 0.15
    Params size (MB): 0.46
    Estimated Total Size (MB): 0.63
    ----------------------------------------------------------------

Section 2 The Convolutional Neural Network Model AlexNet

2.1 Introduction to AlexNet

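The ImageNet dataset is an open image dataset with more than 14 million labeled images spread over more than 20,000 categories. Since 2010, ImageNet has held an annual competition, the ImageNet Large Scale Visual Recognition Challenge (ILSVRC), which uses images from 1,000 categories.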

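In July 2017, ImageNet announced that ILSVRC had officially ended with its 2017 edition. Computers had surpassed human-level accuracy on image classification, and the perception problems the challenge targeted, including object detection and recognition, were considered largely solved. Attention would therefore shift to problems that remained open. All of this traces back to 2012, when Geoffrey Hinton and his student Alex Krizhevsky introduced AlexNet. AlexNet won that year's ImageNet image classification competition by a wide margin over the runner-up and brought deep learning back to center stage, which makes it historically significant.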

2.2 AlexNet Model Architecture

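AlexNet has 5 generalized convolutional layers and 3 generalized fully connected layers.

- Generalized convolutional layer: a convolutional layer together with its pooling, ReLU and LRN layers.

- Generalized fully connected layer: a fully connected layer together with its ReLU and Dropout layers.

The network structure is shown in the table below: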

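- The input layer preprocesses the $3\times224\times224$ image into a $3\times227\times227$ image.

- The 2nd, 4th and 5th generalized convolutional layers use grouped convolution: each group computes only on the channels held on its own GPU.

- The 1st and 3rd generalized convolutional layers and the 6th, 7th and 8th (fully connected) layers compute over all channels.

- The convolutions in the 2nd, 3rd, 4th and 5th generalized convolutional layers all use same padding. For example, with stride 1 and a $3\times3$ kernel, the feature map would lose 2 pixels in width and height without padding, so zero padding is added to keep the output width and height unchanged. All other convolutions, and all pooling layers, use valid padding (no zero padding).

- After the convolution in the 6th generalized layer (the first fully connected layer, here computed as a convolution), the feature map is flattened into a one-dimensional vector of length 4096.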
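The same output-size rule verifies the AlexNet feature-map sizes. The sketch below assumes the layer settings of the original paper: a 227×227 input and 96/256/384/384/256 kernels. The PyTorch implementation below uses a 224×224 input with padding 2 and 64/192 channels in the first two layers instead, which also produces 55×55 after the first layer. `out_size` and `trace` are illustrative names:

```python
def out_size(n, k, s=1, p=0):
    """Output side length for input n, kernel k, stride s, padding p."""
    return (n + 2 * p - k) // s + 1


# (name, kernel, stride, padding, out_channels); None marks a pooling layer
ALEXNET = [
    ("conv1", 11, 4, 0, 96),
    ("pool1", 3, 2, 0, None),   # overlapping 3x3 pooling with stride 2
    ("conv2", 5, 1, 2, 256),    # same padding
    ("pool2", 3, 2, 0, None),
    ("conv3", 3, 1, 1, 384),
    ("conv4", 3, 1, 1, 384),
    ("conv5", 3, 1, 1, 256),
    ("pool5", 3, 2, 0, None),
]


def trace(size=227, channels=3, layers=ALEXNET):
    rows = []
    for name, k, s, p, c_out in layers:
        size = out_size(size, k, s, p)
        if c_out is not None:
            channels = c_out
        rows.append((name, channels, size))
    return rows


rows = trace()
for name, c, n in rows:
    print(f"{name:6s} {c:4d} x {n} x {n}")
_, c, n = rows[-1]
print("flattened length:", c * n * n)
```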

2.2 AlexNet PyTorch

    # %load alex.py
    import math
    import torch
    import torch.nn as nn


    class AlexNet(nn.Module):
        def __init__(self, num_classes=1000, init_weights=False):
            super(AlexNet, self).__init__()
            # Single-GPU variant: 64/192/384/256/256 channels instead of the paper's 96/256/384/384/256
            self.features = nn.Sequential(
                nn.Conv2d(3, 64, kernel_size=11, stride=4, padding=2),
                nn.ReLU(inplace=True),
                nn.MaxPool2d(kernel_size=3, stride=2),      # overlapping pooling
                nn.Conv2d(64, 192, kernel_size=5, padding=2),
                nn.ReLU(inplace=True),
                nn.MaxPool2d(kernel_size=3, stride=2),
                nn.Conv2d(192, 384, kernel_size=3, padding=1),
                nn.ReLU(inplace=True),
                nn.Conv2d(384, 256, kernel_size=3, padding=1),
                nn.ReLU(inplace=True),
                nn.Conv2d(256, 256, kernel_size=3, padding=1),
                nn.ReLU(inplace=True),
                nn.MaxPool2d(kernel_size=3, stride=2),
            )
            # Defined but not used in forward(): a 224x224 input already yields 256x6x6 features
            self.avgpool = nn.AdaptiveAvgPool2d((6, 6))
            self.classifier = nn.Sequential(
                nn.Dropout(),
                nn.Linear(256 * 6 * 6, 4096),
                nn.ReLU(inplace=True),
                nn.Dropout(),
                nn.Linear(4096, 4096),
                nn.ReLU(inplace=True),
                nn.Linear(4096, num_classes),
            )
            if init_weights:
                self._initialize_weights()

        def forward(self, x):
            x = self.features(x)
            x = torch.flatten(x, start_dim=1)
            x = self.classifier(x)
            return x

        def _initialize_weights(self):
            for m in self.modules():
                if isinstance(m, nn.Conv2d):
                    nn.init.kaiming_normal_(m.weight, mode='fan_out', nonlinearity='relu')
                    if m.bias is not None:
                        nn.init.constant_(m.bias, 0)
                elif isinstance(m, nn.Linear):
                    nn.init.normal_(m.weight, 0, 0.01)
                    nn.init.constant_(m.bias, 0)


    def build_alex(phase, num_classes, pretrained):
        if phase != "test" and phase != "train":
            print("ERROR: Phase: " + phase + " not recognized")
            return
        if not pretrained:
            model = AlexNet(num_classes=num_classes)
        else:
            model = AlexNet()
            model_weights_path = 'weights/alexnet-owt-4df8aa71.pth'
            # strict=False tolerates keys that do not match this module layout
            model.load_state_dict(torch.load(model_weights_path), strict=False)
            # Freeze the pretrained layers (feature extraction)
            for param in model.parameters():
                param.requires_grad = False
            # Power-of-two hidden width chosen from num_classes (fails for num_classes > 4096)
            ratio = int(math.sqrt(4096 / num_classes))
            floor = math.floor(math.log2(ratio))
            trans_size = int(math.pow(2, 10 - floor))
            # New classifier head; its parameters are freshly created and trainable
            model.classifier = nn.Sequential(
                nn.Linear(256 * 6 * 6, 4096),
                nn.ReLU(inplace=True),
                nn.Dropout(p=0.5),
                nn.Linear(4096, trans_size),
                nn.ReLU(inplace=True),
                nn.Dropout(p=0.5),
                nn.Linear(trans_size, num_classes),
            )
        return model


    from torchsummary import summary

    net = build_alex('train', 10, False)
    net.cuda()
    summary(net, (3, 224, 224))

Output:

    ----------------------------------------------------------------
    Layer (type)            Output Shape          Param #
    ================================================================
    Conv2d-1                [-1, 64, 55, 55]      23,296
    ReLU-2                  [-1, 64, 55, 55]      0
    MaxPool2d-3             [-1, 64, 27, 27]      0
    Conv2d-4                [-1, 192, 27, 27]     307,392
    ReLU-5                  [-1, 192, 27, 27]     0
    MaxPool2d-6             [-1, 192, 13, 13]     0
    Conv2d-7                [-1, 384, 13, 13]     663,936
    ReLU-8                  [-1, 384, 13, 13]     0
    Conv2d-9                [-1, 256, 13, 13]     884,992
    ReLU-10                 [-1, 256, 13, 13]     0
    Conv2d-11               [-1, 256, 13, 13]     590,080
    ReLU-12                 [-1, 256, 13, 13]     0
    MaxPool2d-13            [-1, 256, 6, 6]       0
    Dropout-14              [-1, 9216]            0
    Linear-15               [-1, 4096]            37,752,832
    ReLU-16                 [-1, 4096]            0
    Dropout-17              [-1, 4096]            0
    Linear-18               [-1, 4096]            16,781,312
    ReLU-19                 [-1, 4096]            0
    Linear-20               [-1, 10]              40,970
    ================================================================
    Total params: 57,044,810
    Trainable params: 57,044,810
    Non-trainable params: 0
    ----------------------------------------------------------------
    Input size (MB): 0.57
    Forward/backward pass size (MB): 8.30
    Params size (MB): 217.61
    Estimated Total Size (MB): 226.48
    ----------------------------------------------------------------

With `pretrained=True`, the pretrained weights are loaded and frozen, and a new classifier head is attached:

    net = build_alex('train', 10, True)   # expects weights/alexnet-owt-4df8aa71.pth
    net.cuda()
    summary(net, (3, 224, 224))

Output:

    ----------------------------------------------------------------
    Layer (type)            Output Shape          Param #
    ================================================================
    Conv2d-1                [-1, 64, 55, 55]      23,296
    ReLU-2                  [-1, 64, 55, 55]      0
    MaxPool2d-3             [-1, 64, 27, 27]      0
    Conv2d-4                [-1, 192, 27, 27]     307,392
    ReLU-5                  [-1, 192, 27, 27]     0
    MaxPool2d-6             [-1, 192, 13, 13]     0
    Conv2d-7                [-1, 384, 13, 13]     663,936
    ReLU-8                  [-1, 384, 13, 13]     0
    Conv2d-9                [-1, 256, 13, 13]     884,992
    ReLU-10                 [-1, 256, 13, 13]     0
    Conv2d-11               [-1, 256, 13, 13]     590,080
    ReLU-12                 [-1, 256, 13, 13]     0
    MaxPool2d-13            [-1, 256, 6, 6]       0
    Linear-14               [-1, 4096]            37,752,832
    ReLU-15                 [-1, 4096]            0
    Dropout-16              [-1, 4096]            0
    Linear-17               [-1, 64]              262,208
    ReLU-18                 [-1, 64]              0
    Dropout-19              [-1, 64]              0
    Linear-20               [-1, 10]              650
    ================================================================
    Total params: 40,485,386
    Trainable params: 38,015,690
    Non-trainable params: 2,469,696
    ----------------------------------------------------------------
    Input size (MB): 0.57
    Forward/backward pass size (MB): 8.17
    Params size (MB): 154.44
    Estimated Total Size (MB): 163.18
    ----------------------------------------------------------------
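When `pretrained=True`, `build_alex` chooses the width of the new hidden layer from `num_classes` using a power-of-two heuristic. The standalone function below reproduces that arithmetic so you can see which width a given number of classes produces. Its name, `hidden_size_heuristic`, is our own and not part of the original code. With the constants 25088 and 12, it reproduces the matching line in `build_vgg16` later in this chapter:

```python
import math


def hidden_size_heuristic(num_classes, in_features=4096, base_exponent=10):
    """Power-of-two hidden width used by build_alex (defaults) or build_vgg16 (25088, 12)."""
    ratio = int(math.sqrt(in_features / num_classes))
    exponent = math.floor(math.log2(ratio))   # ValueError if ratio == 0 (num_classes > in_features)
    return int(math.pow(2, base_exponent - exponent))


for n in (5, 10, 37, 102):
    print(n, "classes ->", hidden_size_heuristic(n), "hidden units")
```

For 10 classes this gives 64, which matches `Linear-17 [-1, 64]` in the summary above. Fewer output classes give a narrower hidden layer, and more output classes give a wider one.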

2.3 AlexNet Design Techniques

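AlexNet's success on ImageNet in 2012 came mainly from:

- The ReLU activation function

- Methods that prevent overfitting, such as dropout, data augmentation and overlapping pooling

- Training on a large dataset with over a million images

- Training on GPUs, and the use of LRN

- Training with mini-batch stochastic gradient descent with momentum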

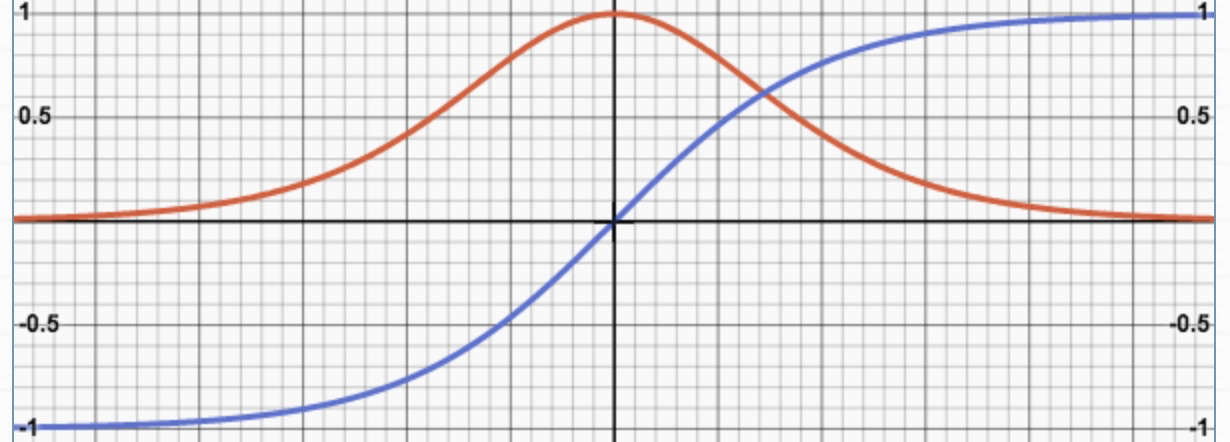

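Before AlexNet, the standard neuron activation function was tanh(), the hyperbolic tangent. It is derived from two basic hyperbolic functions, the hyperbolic sine and the hyperbolic cosine: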

$$y = \tanh(x) = \frac{\sinh(x)}{\cosh(x)} = \frac{e^{x} - e^{-x}}{e^{x} + e^{-x}}, \qquad \tanh(x) = 2\,\mathrm{sigmoid}(2x) - 1$$

$$y' = \frac{4e^{2x}}{\left(e^{2x} + 1\right)^2} = 1 - \tanh^2(x)$$

In the plot, blue is the original function $y = \tanh(x)$ and red is its derivative $y'$.

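tanh(x) is an odd function. Its graph is a strictly increasing curve that passes through the origin and the first and third quadrants, and it is bounded between the horizontal asymptotes $y = 1$ and $y = -1$. Under gradient descent, saturating nonlinearities like this one train much more slowly than non-saturating ones, because their gradient approaches 0 as $|x|$ grows. AlexNet therefore uses ReLU as its activation function.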

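ReLU, the Rectified Linear Unit, activates only part of the neurons and so increases sparsity. For $x < 0$ the output is 0, and for $x > 0$ the output is $x$:

$$y = \max(0, x)$$

$$y' = \begin{cases} 0, & x \le 0 \\ 1, & x > 0 \end{cases}$$

(The derivative at $x = 0$ is undefined; by convention it is taken as 0.)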
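The saturation argument can be checked numerically. The pure-Python sketch below uses illustrative helper names. It verifies the identities $\tanh(x) = 2\,\mathrm{sigmoid}(2x) - 1$ and $\tanh'(x) = 4e^{2x}/(e^{2x}+1)^2 = 1 - \tanh^2(x)$ at a few points. It also shows that the tanh gradient shrinks toward 0 as $|x|$ grows, while the ReLU gradient stays at 1 for every $x > 0$:

```python
import math


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def tanh_grad(x):
    """Closed-form derivative of tanh: 4 e^{2x} / (e^{2x} + 1)^2."""
    e2x = math.exp(2 * x)
    return 4 * e2x / (e2x + 1) ** 2


def relu(x):
    return max(0.0, x)


def relu_grad(x):
    """0 for x <= 0 (convention at 0), 1 for x > 0."""
    return 1.0 if x > 0 else 0.0


for x in (-4.0, -1.0, 0.0, 0.5, 3.0):
    # numerical checks of the two identities above
    assert math.isclose(math.tanh(x), 2 * sigmoid(2 * x) - 1, abs_tol=1e-12)
    assert math.isclose(tanh_grad(x), 1 - math.tanh(x) ** 2, abs_tol=1e-12)
    print(f"x={x:+.1f}  tanh'={tanh_grad(x):.4f}  relu'={relu_grad(x):.0f}")
```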

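AlexNet uses the following data augmentation methods:

- Random cropping and random horizontal flipping: the original images are $256\times256$ and the crops are $224\times224$

  - In every epoch, each image is cropped at a random position and then randomly flipped horizontally. In theory this enlarges the dataset by a factor of $(256-224)^2 \times 2 = 2048$

  - At test time the crops are not random. Instead, four corner crops and one center crop are taken along with their horizontal flips, which gives 10 images, and the average of the predictions on these 10 images is the prediction for the original image

- PCA color augmentation: PCA is performed on the RGB pixel values of the training set. Each training image then has multiples of the principal components added to it, with magnitudes proportional to the corresponding eigenvalues times a random variable drawn from a Gaussian with mean 0 and standard deviation 0.1. This changes the intensity and color of the image and keeps the training images diverse

  - A new random factor is drawn each time an image is used, i.e. in every epoch

  - This lowers the top-1 error rate by over 1%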
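Below is a minimal PyTorch sketch of the PCA color augmentation described in the AlexNet paper. It assumes the images are float tensors of shape (N, 3, H, W) and uses random data in place of a real training set. `fit_rgb_pca` and `pca_color_jitter` are illustrative names. The RGB covariance is estimated once over all training pixels. Each image then gets the offset $[\mathbf{p}_1, \mathbf{p}_2, \mathbf{p}_3][\alpha_1\lambda_1, \alpha_2\lambda_2, \alpha_3\lambda_3]^T$, with $\alpha_i \sim \mathcal{N}(0, 0.1^2)$, added to every pixel:

```python
import torch


def fit_rgb_pca(images):
    """images: (N, 3, H, W). Eigenvalues (3,) and eigenvectors (3, 3) of the RGB covariance."""
    pixels = images.permute(0, 2, 3, 1).reshape(-1, 3)   # every pixel as an RGB row vector
    pixels = pixels - pixels.mean(dim=0)
    cov = pixels.T @ pixels / (pixels.shape[0] - 1)      # 3x3 covariance over the training set
    eigvals, eigvecs = torch.linalg.eigh(cov)            # columns of eigvecs are the components
    return eigvals, eigvecs


def pca_color_jitter(image, eigvals, eigvecs, std=0.1, generator=None):
    """Add sum_i alpha_i * lambda_i * p_i to every pixel of one (3, H, W) image."""
    alpha = torch.randn(3, generator=generator) * std    # drawn once per image per use
    shift = eigvecs @ (alpha * eigvals)                  # one RGB offset shared by all pixels
    return image + shift.view(3, 1, 1)


g = torch.Generator().manual_seed(0)
train_images = torch.rand(8, 3, 32, 32, generator=g)     # stand-in for the training set
eigvals, eigvecs = fit_rgb_pca(train_images)
augmented = pca_color_jitter(train_images[0], eigvals, eigvecs, generator=g)
```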

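AlexNet's cropping-based augmentation has two potential problems:

- Taking only the four corners and the center at test time ignores many regions of the image, so important information may be cropped away

- The crop windows overlap, which causes redundant computation

The corresponding ideas for improvement are:

- Run all possible crops and average their predictions to obtain the prediction for the original test image

- Reduce the redundant computation in the overlapping parts of the crop windows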
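The pure-Python sketch below makes the ten-crop scheme and the overlap problem concrete, using illustrative helper names. It lists the five $224\times224$ crop boxes on a $256\times256$ image, each used once as-is and once flipped, and measures how much the top-left crop overlaps the center crop:

```python
def ten_crop_boxes(size=256, crop=224):
    """(box, flipped) pairs; box = (left, top, right, bottom) for 4 corners + center."""
    off = size - crop
    c = off // 2
    corners_and_center = [(0, 0), (off, 0), (0, off), (off, off), (c, c)]
    boxes = [(x, y, x + crop, y + crop) for x, y in corners_and_center]
    return [(box, flipped) for box in boxes for flipped in (False, True)]


def overlap_ratio(a, b):
    """Fraction of box a's area that is also covered by box b."""
    w = max(0, min(a[2], b[2]) - max(a[0], b[0]))
    h = max(0, min(a[3], b[3]) - max(a[1], b[1]))
    return w * h / ((a[2] - a[0]) * (a[3] - a[1]))


crops = ten_crop_boxes()
corner, center = crops[0][0], crops[8][0]
print(len(crops), "crops")
print(f"top-left vs center overlap: {overlap_ratio(corner, center):.1%}")
print("training-time crop positions x flips:", (256 - 224) ** 2 * 2)
```

The top-left and center crops share $208^2/224^2 \approx 86\%$ of their pixels. Most of the computation on one of them therefore repeats work already done on the other.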

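For this reason, many models after AlexNet replace the fully connected layers with equivalent convolutional layers and predict directly on the test image at its original size. The output is then a map of class probabilities over positions. For each class, the values at all positions are averaged (or their maximum is taken) to obtain the output class probabilities for the original test image.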
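The equivalence between a fully connected layer and a convolution can be shown directly in PyTorch. In the sketch below, `fc_to_conv` is an illustrative helper. It copies an `nn.Linear` that reads a flattened 256×6×6 feature map into a 6×6 `nn.Conv2d`. On a 6×6 input both layers give the same scores. On a larger feature map, the convolution produces a score map that is then averaged per class:

```python
import torch
import torch.nn as nn


def fc_to_conv(fc, in_channels, kernel_size):
    """Copy an nn.Linear over a flattened (C, k, k) map into an equivalent k x k nn.Conv2d."""
    conv = nn.Conv2d(in_channels, fc.out_features, kernel_size=kernel_size)
    with torch.no_grad():
        # torch.flatten(x, 1) orders inputs as (c, h, w), which matches the conv weight layout
        conv.weight.copy_(fc.weight.view(fc.out_features, in_channels, kernel_size, kernel_size))
        conv.bias.copy_(fc.bias)
    return conv


torch.manual_seed(0)
fc = nn.Linear(256 * 6 * 6, 10)          # a classifier head on 256x6x6 features
conv = fc_to_conv(fc, in_channels=256, kernel_size=6)

feat = torch.randn(2, 256, 6, 6)
out_fc = fc(torch.flatten(feat, 1))      # (2, 10)
out_conv = conv(feat).flatten(1)         # (2, 10): a 1x1 score map per class

big = torch.randn(1, 256, 9, 9)          # features of a larger test image
score_map = conv(big)                    # (1, 10, 4, 4): one prediction per position
avg_scores = score_map.mean(dim=(2, 3))  # average over positions -> (1, 10)
```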

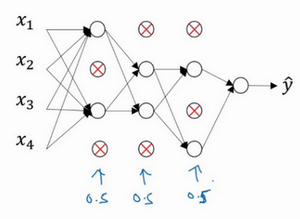

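AlexNet sets the dropout probability to 0.5. At test time all neurons are used, but their outputs are multiplied by 0.5. When dropout regularization is used against overfitting, it goes through each layer of the network and sets a probability of removing each node. In AlexNet, every node in every layer is kept or removed as if by a coin toss, each with probability 0.5. About half of the nodes are then removed at random, together with their incoming and outgoing connections. This leaves a smaller network with fewer nodes, which is trained with backpropagation.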

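With dropout regularization every neuron may be deactivated, so any input feature of a single neuron may be removed. As a result, the neuron cannot rely on any one feature. Different layers can use different values of keep_prob, the probability of keeping a neuron (1 minus the dropout probability). For layers with few neurons, keep_prob can be set to 1 so that every neuron in the layer is kept. Layers with many neurons can use a smaller keep_prob.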

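Dropout regularization is widely used in computer vision, where inputs usually have a very large number of features and training data is comparatively scarce. Keep in mind that it is a regularization method, and in practice it is not recommended unless the model overfits. One major drawback is that the cost function is no longer well defined. Each iteration randomly removes some nodes, so the cost is not guaranteed to decrease monotonically.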
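The short PyTorch sketch below contrasts two dropout conventions. `nn.Dropout` uses inverted dropout: during training it zeroes each element with probability `p` and scales the survivors by $1/(1-p)$, and in eval mode it is the identity. The paper-style variant described above applies no scaling during training and multiplies by $1-p$ at test time; it is written out by hand as the illustrative `paper_style_dropout`. Both conventions keep the expected activation the same during training and testing:

```python
import torch
import torch.nn as nn

torch.manual_seed(0)
x = torch.ones(1, 10_000)
drop = nn.Dropout(p=0.5)

drop.train()
y_train = drop(x)   # about half the entries become 0, survivors are scaled by 1/(1-p) = 2
drop.eval()
y_eval = drop(x)    # identity at test time: no masking and no rescaling


def paper_style_dropout(x, p, training, generator=None):
    """AlexNet-paper convention: drop without rescaling, multiply by (1 - p) at test time."""
    if training:
        mask = (torch.rand(x.shape, generator=generator) >= p).to(x.dtype)
        return x * mask
    return x * (1 - p)
```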

2.3.4 Multi-GPU Training (Multi-GPU Processing)

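AlexNet was trained on two GTX 580 GPUs with 3 GB of memory each, running in parallel. The network diagram has an upper half and a lower half: one GPU runs the channels in the upper half and the other runs the channels in the lower half. The two GPUs communicate only in certain layers, which means the network uses grouped convolution:

- The kernels of the 2nd, 4th and 5th convolutional layers connect only to the previous layer's feature maps on the same GPU.

- The kernels of the 3rd convolutional layer connect to the previous layer's feature maps on both GPUs.

- The neurons in the fully connected layers connect to all neurons in the previous layer.

Compared with a network that has half as many kernels in each convolutional layer and is trained on a single GPU, the two-GPU scheme lowers the top-1 and top-5 error rates by 1.7% and 1.2% respectively.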
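In a single-GPU PyTorch model, you can express the two-GPU split with the `groups` argument of `nn.Conv2d`. The sketch below assumes the paper's second convolutional layer: 96 input maps and 256 kernels of size 5×5. With `groups=2`, each kernel sees only 48 input maps, which halves the number of weights. It also means the first 128 output channels do not depend on the second half of the input:

```python
import torch
import torch.nn as nn

grouped = nn.Conv2d(96, 256, kernel_size=5, padding=2, groups=2)   # split across two "GPUs"
dense = nn.Conv2d(96, 256, kernel_size=5, padding=2, groups=1)     # every kernel sees all 96 maps


def n_params(module):
    return sum(p.numel() for p in module.parameters())


print(grouped.weight.shape)              # torch.Size([256, 48, 5, 5])
print(n_params(grouped), n_params(dense))

x = torch.randn(1, 96, 27, 27)
y_before = grouped(x)
x_changed = x.clone()
x_changed[:, 48:] += 1.0                 # only touch the input maps of the second group
y_after = grouped(x_changed)             # outputs 0..127 (first group) are unaffected
```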

2.3.5 Local Response Normalization (LRN)

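Unlike tanh and sigmoid, ReLU does not have a bounded output range, which is the motivation for normalizing after ReLU. LRN borrows the neurobiological concept of lateral inhibition, in which an activated neuron suppresses the neurons around it.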

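- The local response normalization (LRN) layer applies lateral inhibition, which makes the responses of different convolution kernels compete with each other

  - LRN layers are rarely used today, because the gain is small and they add memory use and computation time

  - In AlexNet, the LRN strategy improved accuracy by 1.2 to 1.4 percentage points

- The idea of LRN is that the output of channel $i$ at position $(x,y)$ is influenced by the outputs of neighboring channels at the same position

  - To model this influence, the raw value of channel $i$ is divided by a normalization factor

  - LRN lowers AlexNet's top-1 and top-5 error rates by 1.4% and 1.2% respectively

$$\hat{a}^{(x,y)}_i = \frac{a^{(x,y)}_i}{\left(k + \alpha \sum_{j=\max(0,\,i-n/2)}^{\min(N-1,\,i+n/2)} \left(a^{(x,y)}_j\right)^2\right)^{\beta}}, \quad i = 0, 1, \dots, N-1$$

Here $a^{(x,y)}_i$ is the raw output of channel $i$ at position $(x,y)$ and $\hat{a}^{(x,y)}_i$ is the normalized value. $N$ is the total number of channels, and $n$ is the number of neighboring channels that influence channel $i$, taking $n/2$ channels on each side. $k$, $n$, $\alpha$ and $\beta$ are hyperparameters, typically $k = 2$, $n = 5$, $\alpha = 10^{-4}$ and $\beta = 0.75$.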
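The formula above can be implemented directly and compared with PyTorch's built-in layer. Note that `nn.LocalResponseNorm` divides `alpha` by the window `size`. The paper's $\alpha = 10^{-4}$ with $n = 5$ therefore corresponds to `alpha=5e-4` in PyTorch. The sketch below works in float64 with random non-negative activations; `lrn` is an illustrative name:

```python
import torch
import torch.nn as nn


def lrn(a, n=5, k=2.0, alpha=1e-4, beta=0.75):
    """Cross-channel LRN exactly as in the formula above; a has shape (batch, N, H, W)."""
    N = a.shape[1]
    out = torch.empty_like(a)
    for i in range(N):
        lo, hi = max(0, i - n // 2), min(N - 1, i + n // 2)
        denom = (k + alpha * (a[:, lo:hi + 1] ** 2).sum(dim=1)) ** beta
        out[:, i] = a[:, i] / denom
    return out


torch.manual_seed(0)
a = torch.relu(torch.randn(2, 16, 8, 8, dtype=torch.float64)) * 10   # ReLU-like activations

manual = lrn(a)
# nn.LocalResponseNorm uses alpha / size inside the sum, so pass alpha * n to match the paper
builtin = nn.LocalResponseNorm(size=5, alpha=5 * 1e-4, beta=0.75, k=2.0)(a)
```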

2.3.6 Overlapping Pooling

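Pooling is usually non-overlapping: the pooling window size equals the stride. In AlexNet the pooling windows overlap, meaning the stride (2) is smaller than the window size ($3\times3$).

- Overlapping pooling helps reduce overfitting. It lowered the top-1 and top-5 error rates by 0.4% and 0.3% respectively.

- Why overlapping pooling reduces overfitting is hard to explain mathematically, or even intuitively. One somewhat plausible explanation is that overlapping pooling produces more features, which may improve the model's ability to generalize.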

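AlexNet uses mini-batch stochastic gradient descent with momentum. Standard mini-batch SGD with momentum is:

$$\vec{v} \gets \alpha\vec{v} - \epsilon \nabla_{\vec{\theta}}J(\vec{\theta})$$

$$\vec{\theta} \gets \vec{\theta} + \vec{v}$$

AlexNet uses a modified momentum update:

$$\vec{v} \gets \alpha\vec{v} - \beta\epsilon\vec{\theta} - \epsilon \nabla_{\vec{\theta}}J(\vec{\theta})$$

$$\vec{\theta} \gets \vec{\theta} + \vec{v}$$

- $\alpha$ is the momentum coefficient, $\beta$ is the weight decay coefficient and $\epsilon$ is the learning rate. The AlexNet paper uses $\alpha = 0.9$, $\beta = 0.0005$ and an initial $\epsilon = 0.01$

- $\beta\epsilon\vec{\theta}$ is the weight decay term, which is very important for training. It acts as a regularizer and also reduces the training error.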
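The modified update is the same rule that `torch.optim.SGD` implements when `momentum` and `weight_decay` are set and `dampening=0` and `nesterov=False` keep their defaults. PyTorch keeps a buffer $b \gets \alpha b + \nabla J + \beta\theta$ and steps $\theta \gets \theta - \epsilon b$. This is the formula above with $\vec{v} = -\epsilon b$, as long as $\epsilon$ stays constant. The sketch below runs both versions for 20 steps on a toy quadratic loss; the loss and `paper_sgd_step` are illustrative:

```python
import torch


def paper_sgd_step(theta, v, grad, lr=0.01, momentum=0.9, weight_decay=5e-4):
    """v <- alpha*v - beta*eps*theta - eps*grad ; theta <- theta + v."""
    v = momentum * v - weight_decay * lr * theta - lr * grad
    return theta + v, v


def loss_fn(w):
    return ((w - 3.0) ** 2).sum()   # toy quadratic objective


torch.manual_seed(0)
init = torch.randn(5, dtype=torch.float64)

# 1) hand-written update from the formula
theta, v = init.clone(), torch.zeros_like(init)
for _ in range(20):
    w = theta.clone().requires_grad_(True)
    loss_fn(w).backward()
    theta, v = paper_sgd_step(theta, v, w.grad)

# 2) PyTorch's SGD with the same hyperparameters
w = init.clone().requires_grad_(True)
opt = torch.optim.SGD([w], lr=0.01, momentum=0.9, weight_decay=5e-4)
for _ in range(20):
    opt.zero_grad()
    loss_fn(w).backward()
    opt.step()
```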

Section 3 The Convolutional Neural Network Model VGGNet

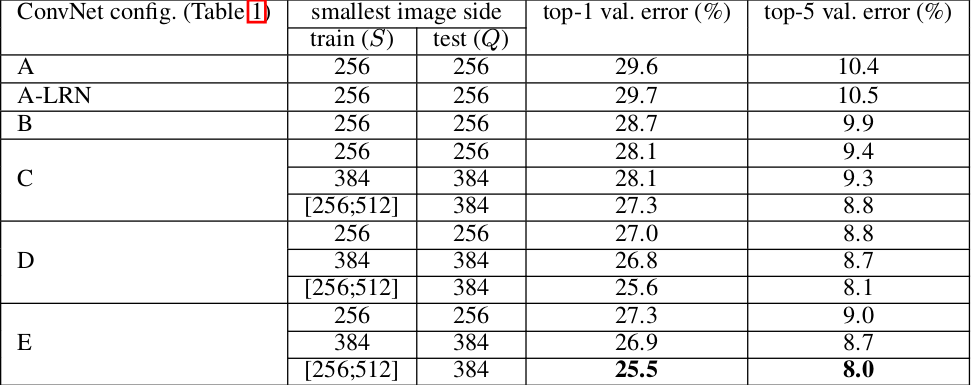

3.1 Introduction to VGGNet

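VGGNet is a deep convolutional network developed by the Visual Geometry Group at the University of Oxford together with DeepMind. At ILSVRC 2014 it won second place in the classification task and first place in the localization task. The main contributions of VGGNet are:

- It showed that deep networks with small ($3\times3$) convolution kernels outperform shallow networks with large kernels

- It showed the importance of depth for a network's generalization performance

- It verified the effectiveness of scale jittering as a data augmentation technique

- VGGNet's biggest drawback is its number of parameters. With roughly 144 million parameters, VGG-19 is among the largest classic CNN architectures by parameter count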
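The argument for small kernels is easy to quantify. A stack of stride-1 $3\times3$ convolutions has the same receptive field as one larger kernel but fewer weights, and it adds a ReLU after every layer. The pure-Python sketch below uses illustrative helper names, ignores biases, and assumes $C$ input and output channels per layer:

```python
def stacked_receptive_field(num_layers, kernel=3):
    """Receptive field of num_layers stride-1 convolutions: 1 + num_layers * (kernel - 1)."""
    return 1 + num_layers * (kernel - 1)


def conv_weights(kernel, channels, num_layers=1):
    """Weights (no biases) of num_layers conv layers with C input and C output channels."""
    return num_layers * kernel * kernel * channels * channels


C = 256
for layers in (2, 3):
    rf = stacked_receptive_field(layers)
    print(f"{layers} x 3x3 -> {rf}x{rf} field: {conv_weights(3, C, layers):,} weights "
          f"vs one {rf}x{rf} layer: {conv_weights(rf, C):,}")
```

Three $3\times3$ layers cover a $7\times7$ field with $27C^2$ weights instead of $49C^2$.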

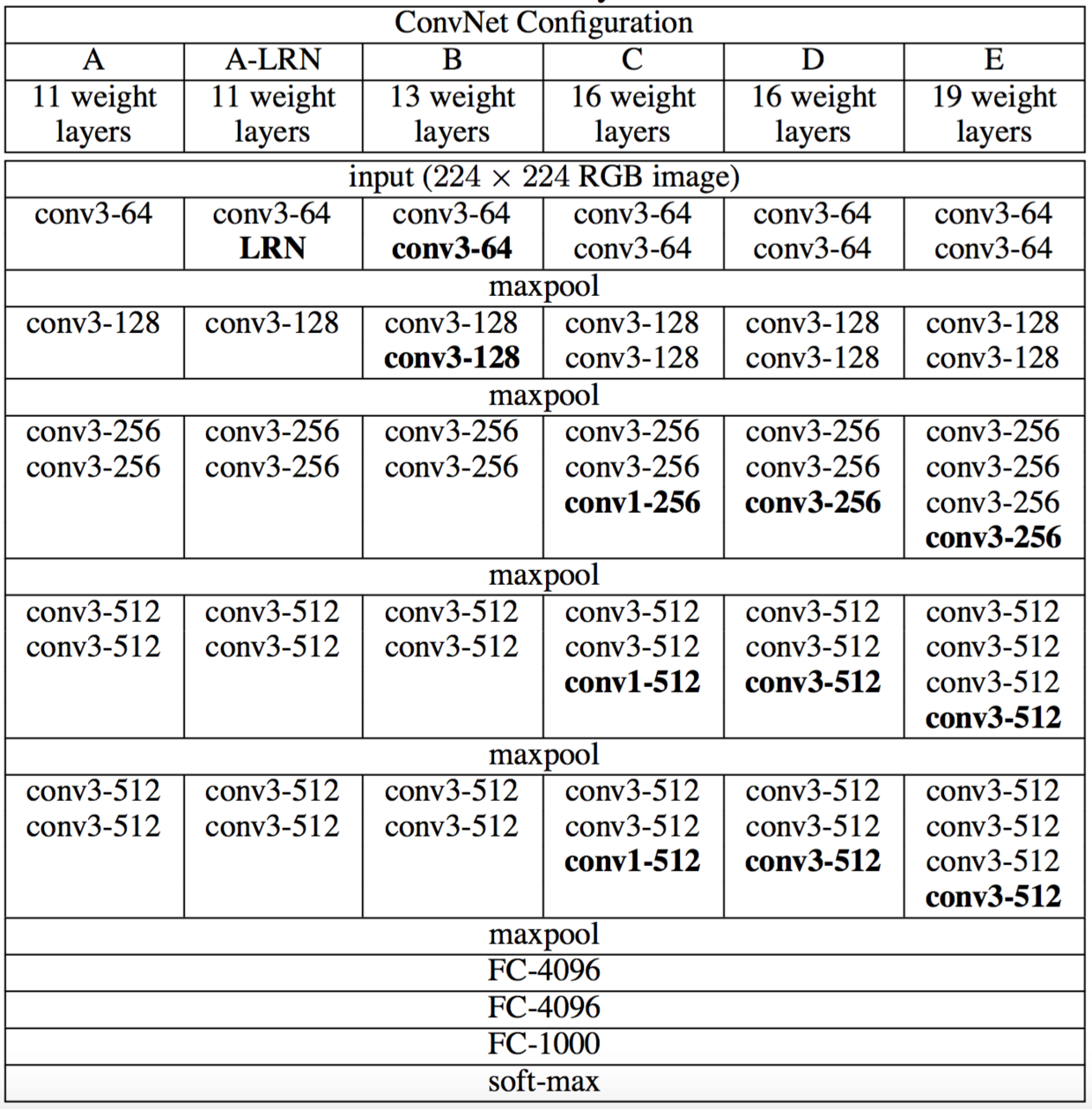

3.2 VGGNet Model Architecture

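VGGNet comes in configurations A to E, plus an LRN variant of A (A-LRN). All configurations share the same overall design and differ only in depth.

- In the configuration table, the layers that differ from the previous configuration are shown in bold

- Convolutional layer parameters are written as convx-y, where x is the kernel size and y is the number of kernels. For example, conv3-64 means 64 kernels of size $3\times3$

- The convolutional layers start with few channels (64), and the channel count doubles after each pooling layer until it reaches 512

- Every convolutional layer is followed by a ReLU activation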

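General structure of VGGNet:

- Input layer: a fixed-size $224\times224$ RGB image

- Convolutional layers: all use stride 1

  - Padding: the input of each convolutional layer is padded so that the spatial resolution is the same before and after the convolution

    - $3\times3$ convolution: same padding, i.e. 1 pixel of padding on the top, bottom, left and right of the input

    - $1\times1$ convolution: no padding needed

  - Kernel sizes: $3\times3$ and $1\times1$

    - $3\times3$ kernel: the smallest size that captures the notions of left/right, up/down and center

    - $1\times1$ kernel: a linear transformation of the input channels. The ReLU that follows it makes the transformation non-linear

- Pooling layers: max pooling

  - Pooling layers follow convolutional layers, but not every convolutional layer is followed by pooling

  - The pooling window is $2\times2$ with stride 2

- The last four layers of the network are three fully connected layers and one softmax layer

  - The first two fully connected layers have 4096 neurons each, and the third has 1000 neurons (one per ImageNet class)

  - The final softmax layer outputs the class probabilities

- All hidden layers use the ReLU activation

- The first fully connected layer of VGGNet (FC-4096) has $7\times7\times512\times4096 \approx 102.76$ million weights (plus 4096 biases). That is roughly three quarters of VGG-16's 138 million parameters, so most of the network's parameters come from this layer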
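The parameter count can be reproduced with plain arithmetic from the VGG-16 layer configuration, which is the same `cfg` list used in the PyTorch code. The pure-Python sketch below assumes a $224\times224$ input and biases in every layer; `vgg_param_counts` is an illustrative name:

```python
CFG_D = [64, 64, "M", 128, 128, "M", 256, 256, 256, "M",
         512, 512, 512, "M", 512, 512, 512, "M"]


def vgg_param_counts(cfg=CFG_D, num_classes=1000, in_channels=3, spatial=224):
    conv, size = 0, spatial
    for v in cfg:
        if v == "M":
            size //= 2                               # 2x2 max pooling, stride 2
        else:
            conv += 3 * 3 * in_channels * v + v      # 3x3 kernel weights + biases
            in_channels = v
    flat = in_channels * size * size                 # 512 * 7 * 7 = 25088
    fc1 = flat * 4096 + 4096
    fc2 = 4096 * 4096 + 4096
    fc3 = 4096 * num_classes + num_classes
    return {"conv": conv, "fc1": fc1, "fc2": fc2, "fc3": fc3,
            "total": conv + fc1 + fc2 + fc3}


counts = vgg_param_counts()
print({k: f"{v:,}" for k, v in counts.items()})
print(f"share of the first FC-4096 layer: {counts['fc1'] / counts['total']:.1%}")
```

With `num_classes=10` the total becomes 134,301,514, which is the figure torchsummary reports for the PyTorch model below.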

3.2 VGGNet PyTorch

    # %load vgg.py
    import math
    import torch
    import torch.nn as nn

    # VGG-16 (configuration D): numbers are 3x3 conv output channels, 'M' is 2x2 max pooling
    cfg = [64, 64, 'M', 128, 128, 'M', 256, 256, 256, 'M',
           512, 512, 512, 'M', 512, 512, 512, 'M']


    class VGGNet(nn.Module):
        def __init__(self, features, num_classes=1000, init_weights=True):
            super(VGGNet, self).__init__()
            self.features = features
            self.avgpool = nn.AdaptiveAvgPool2d((7, 7))
            self.classifier = nn.Sequential(
                nn.Linear(512 * 7 * 7, 4096),
                nn.ReLU(True),
                nn.Dropout(),
                nn.Linear(4096, 4096),
                nn.ReLU(True),
                nn.Dropout(),
                nn.Linear(4096, num_classes),
            )
            if init_weights:
                self._initialize_weights()

        def forward(self, x):
            x = self.features(x)
            x = self.avgpool(x)        # guarantees 512x7x7 even for inputs other than 224x224
            x = torch.flatten(x, 1)
            x = self.classifier(x)
            return x

        def _initialize_weights(self):
            for m in self.modules():
                if isinstance(m, nn.Conv2d):
                    nn.init.kaiming_normal_(m.weight, mode='fan_out', nonlinearity='relu')
                    if m.bias is not None:
                        nn.init.constant_(m.bias, 0)
                elif isinstance(m, nn.BatchNorm2d):
                    nn.init.constant_(m.weight, 1)
                    nn.init.constant_(m.bias, 0)
                elif isinstance(m, nn.Linear):
                    nn.init.normal_(m.weight, 0, 0.01)
                    nn.init.constant_(m.bias, 0)


    def make_layers(cfg, batch_norm=False):
        layers = []
        in_channels = 3
        for v in cfg:
            if v == 'M':
                layers += [nn.MaxPool2d(kernel_size=2, stride=2)]
            else:
                conv2d = nn.Conv2d(in_channels, v, kernel_size=3, padding=1)   # same padding
                if batch_norm:
                    layers += [conv2d, nn.BatchNorm2d(v), nn.ReLU(inplace=True)]
                else:
                    layers += [conv2d, nn.ReLU(inplace=True)]
                in_channels = v
        return nn.Sequential(*layers)


    def build_vgg16(phase, num_classes, pretrained):
        if phase != "test" and phase != "train":
            print("ERROR: Phase: " + phase + " not recognized")
            return
        if not pretrained:
            model = VGGNet(make_layers(cfg, False), num_classes=num_classes)
        else:
            model = VGGNet(make_layers(cfg, False))
            model_weights_path = 'weights/vgg16-397923af.pth'
            model.load_state_dict(torch.load(model_weights_path), strict=False)
            # Freeze the pretrained layers (feature extraction)
            for param in model.parameters():
                param.requires_grad = False
            # Power-of-two hidden width chosen from num_classes
            ratio = int(math.sqrt(25088 / num_classes))
            floor = math.floor(math.log2(ratio))
            hidden_size = int(math.pow(2, 12 - floor))
            # New classifier head; its parameters are freshly created and trainable
            model.classifier = nn.Sequential(
                nn.Linear(512 * 7 * 7, 4096),
                nn.ReLU(inplace=True),
                nn.Dropout(p=0.5),
                nn.Linear(4096, hidden_size),
                nn.ReLU(inplace=True),
                nn.Dropout(p=0.5),
                nn.Linear(hidden_size, num_classes),
            )
        return model


    from torchsummary import summary

    net = build_vgg16('train', 10, False)
    net.cuda()
    summary(net, (3, 224, 224))

Output:

    ----------------------------------------------------------------
    Layer (type)            Output Shape          Param #
    ================================================================
    Conv2d-1                [-1, 64, 224, 224]    1,792
    ReLU-2                  [-1, 64, 224, 224]    0
    Conv2d-3                [-1, 64, 224, 224]    36,928
    ReLU-4                  [-1, 64, 224, 224]    0
    MaxPool2d-5             [-1, 64, 112, 112]    0
    Conv2d-6                [-1, 128, 112, 112]   73,856
    ReLU-7                  [-1, 128, 112, 112]   0
    Conv2d-8                [-1, 128, 112, 112]   147,584
    ReLU-9                  [-1, 128, 112, 112]   0
    MaxPool2d-10            [-1, 128, 56, 56]     0
    Conv2d-11               [-1, 256, 56, 56]     295,168
    ReLU-12                 [-1, 256, 56, 56]     0
    Conv2d-13               [-1, 256, 56, 56]     590,080
    ReLU-14                 [-1, 256, 56, 56]     0
    Conv2d-15               [-1, 256, 56, 56]     590,080
    ReLU-16                 [-1, 256, 56, 56]     0
    MaxPool2d-17            [-1, 256, 28, 28]     0
    Conv2d-18               [-1, 512, 28, 28]     1,180,160
    ReLU-19                 [-1, 512, 28, 28]     0
    Conv2d-20               [-1, 512, 28, 28]     2,359,808
    ReLU-21                 [-1, 512, 28, 28]     0
    Conv2d-22               [-1, 512, 28, 28]     2,359,808
    ReLU-23                 [-1, 512, 28, 28]     0
    MaxPool2d-24            [-1, 512, 14, 14]     0
    Conv2d-25               [-1, 512, 14, 14]     2,359,808
    ReLU-26                 [-1, 512, 14, 14]     0
    Conv2d-27               [-1, 512, 14, 14]     2,359,808
    ReLU-28                 [-1, 512, 14, 14]     0
    Conv2d-29               [-1, 512, 14, 14]     2,359,808
    ReLU-30                 [-1, 512, 14, 14]     0
    MaxPool2d-31            [-1, 512, 7, 7]       0
    AdaptiveAvgPool2d-32    [-1, 512, 7, 7]       0
    Linear-33               [-1, 4096]            102,764,544
    ReLU-34                 [-1, 4096]            0
    Dropout-35              [-1, 4096]            0
    Linear-36               [-1, 4096]            16,781,312
    ReLU-37                 [-1, 4096]            0
    Dropout-38              [-1, 4096]            0
    Linear-39               [-1, 10]              40,970
    ================================================================
    Total params: 134,301,514
    Trainable params: 134,301,514
    Non-trainable params: 0
    ----------------------------------------------------------------
    Input size (MB): 0.57
    Forward/backward pass size (MB): 218.77
    Params size (MB): 512.32
    Estimated Total Size (MB): 731.67
    ----------------------------------------------------------------

With `pretrained=True`, the pretrained weights are loaded and frozen, and a new classifier head is attached:

    net = build_vgg16('train', 10, True)   # expects weights/vgg16-397923af.pth
    net.cuda()
    summary(net, (3, 224, 224))

Output:

    ----------------------------------------------------------------
    Layer (type)            Output Shape          Param #
    ================================================================
    Conv2d-1                [-1, 64, 224, 224]    1,792
    ReLU-2                  [-1, 64, 224, 224]    0
    Conv2d-3                [-1, 64, 224, 224]    36,928
    ReLU-4                  [-1, 64, 224, 224]    0
    MaxPool2d-5             [-1, 64, 112, 112]    0
    Conv2d-6                [-1, 128, 112, 112]   73,856
    ReLU-7                  [-1, 128, 112, 112]   0
    Conv2d-8                [-1, 128, 112, 112]   147,584
    ReLU-9                  [-1, 128, 112, 112]   0
    MaxPool2d-10            [-1, 128, 56, 56]     0
    Conv2d-11               [-1, 256, 56, 56]     295,168
    ReLU-12                 [-1, 256, 56, 56]     0
    Conv2d-13               [-1, 256, 56, 56]     590,080
    ReLU-14                 [-1, 256, 56, 56]     0
    Conv2d-15               [-1, 256, 56, 56]     590,080
    ReLU-16                 [-1, 256, 56, 56]     0
    MaxPool2d-17            [-1, 256, 28, 28]     0
    Conv2d-18               [-1, 512, 28, 28]     1,180,160
    ReLU-19                 [-1, 512, 28, 28]     0
    Conv2d-20               [-1, 512, 28, 28]     2,359,808
    ReLU-21                 [-1, 512, 28, 28]     0
    Conv2d-22               [-1, 512, 28, 28]     2,359,808
    ReLU-23                 [-1, 512, 28, 28]     0
    MaxPool2d-24            [-1, 512, 14, 14]     0
    Conv2d-25               [-1, 512, 14, 14]     2,359,808
    ReLU-26                 [-1, 512, 14, 14]     0
    Conv2d-27               [-1, 512, 14, 14]     2,359,808
    ReLU-28                 [-1, 512, 14, 14]     0
    Conv2d-29               [-1, 512, 14, 14]     2,359,808
    ReLU-30                 [-1, 512, 14, 14]     0
    MaxPool2d-31            [-1, 512, 7, 7]       0
    AdaptiveAvgPool2d-32    [-1, 512, 7, 7]       0
    Linear-33               [-1, 4096]            102,764,544
    ReLU-34                 [-1, 4096]            0
    Dropout-35              [-1, 4096]            0
    Linear-36               [-1, 128]             524,416
    ReLU-37                 [-1, 128]             0
    Dropout-38              [-1, 128]             0
    Linear-39               [-1, 10]              1,290
    ================================================================
    Total params: 118,004,938
    Trainable params: 103,290,250
    Non-trainable params: 14,714,688
    ----------------------------------------------------------------
    Input size (MB): 0.57
    Forward/backward pass size (MB): 218.68
    Params size (MB): 450.15
    Estimated Total Size (MB): 669.41
    ----------------------------------------------------------------

3.3 VGGNet Design Techniques

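VGGNet improves on AlexNet in the following ways:

- Input preprocessing that crops input images to a standard size

- Multi-scale training and multi-scale testing

- Training initialized from the weights of previously trained models

- A refined training strategy: learning-rate decay and mini-batch gradient descent with momentum (SGD)

- Three model evaluation schemes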

3.3.1 Input Preprocessing (Data Preprocessing)

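Input preprocessing: zero-centering each channel.

- First compute the per-channel means over all training samples: the mean $\bar{Red}$ of all red-channel pixels, the mean $\bar{Green}$ of all green-channel pixels and the mean $\bar{Blue}$ of all blue-channel pixels

$$\bar{Red} = \frac{1}{NHW}\sum_n\sum_i\sum_j I_{n,0,i,j}$$

$$\bar{Green} = \frac{1}{NHW}\sum_n\sum_i\sum_j I_{n,1,i,j}$$

$$\bar{Blue} = \frac{1}{NHW}\sum_n\sum_i\sum_j I_{n,2,i,j}$$

Here the red channel is channel 0, the green channel is channel 1 and the blue channel is channel 2. $n$ runs over all $N$ training samples, and $i, j$ run over all $H \times W$ spatial coordinates of an image.

- Then, for every sample, subtract $\bar{Red}$ from each red-channel pixel, $\bar{Green}$ from each green-channel pixel and $\bar{Blue}$ from each blue-channel pixel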
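Below is a PyTorch sketch of per-channel zero-centering, with random tensors standing in for the training set. `channel_means`, `subtract_channel_means` and `running_channel_means` are illustrative names. For a dataset that does not fit in memory, the last function accumulates the means batch by batch. The training script in Section 4 normalizes with fixed values `(0.5, 0.5, 0.5)` instead:

```python
import torch


def channel_means(images):
    """images: (N, 3, H, W). Mean over samples n and positions (i, j) for each channel."""
    return images.mean(dim=(0, 2, 3))


def subtract_channel_means(images, means):
    return images - means.view(1, -1, 1, 1)


def running_channel_means(batches):
    """Same means, accumulated over an iterable of (B, 3, H, W) batches."""
    total = torch.zeros(3, dtype=torch.float64)
    count = 0
    for batch in batches:
        total += batch.double().sum(dim=(0, 2, 3))
        count += batch.shape[0] * batch.shape[2] * batch.shape[3]
    return total / count


torch.manual_seed(0)
train = torch.rand(16, 3, 24, 24) * 255     # stand-in for the training images
means = channel_means(train)                # computed once, on the training set only
centered = subtract_channel_means(train, means)
```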

3.3.2 Multi-Scale Strategy

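Multi-scale training rescales the original image so that its shortest side equals $S$ ($S \ge 224$), then crops a $224\times224$ region from the rescaled image for training. There are two ways to choose $S$:

- Use a fixed $S$ for all images: one model is trained with $S = 256$ and another with $S = 384$, and both models are then evaluated

- For each image, draw $S$ at random from $[S_{min}, S_{max}]$ (the VGG paper uses $[256, 512]$) and then crop. This trains a single model, which is then evaluated

  - This approach needs only a single model

  - It amounts to data augmentation by scale jittering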
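Below is a pure-Python sketch of the scale-jittering sampling step, assuming the range $[S_{min}, S_{max}] = [256, 512]$. It only computes the rescaled size and the crop position; `jittered_crop_params` is an illustrative name. For a fixed $S$, the torchvision equivalent is `transforms.Resize(S)` followed by `transforms.RandomCrop(224)`, since an integer size rescales the shorter side:

```python
import random


def jittered_crop_params(height, width, s_min=256, s_max=512, crop=224, rng=random):
    """Pick S in [s_min, s_max], rescale so the shorter side is S, then pick a random crop."""
    s = rng.randint(s_min, s_max)                  # training scale S for this image
    scale = s / min(height, width)
    new_h, new_w = round(height * scale), round(width * scale)
    top = rng.randint(0, new_h - crop)
    left = rng.randint(0, new_w - crop)
    return s, (new_h, new_w), (top, left, crop, crop)


rng = random.Random(0)
for _ in range(3):
    print(jittered_crop_params(375, 500, rng=rng))
```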

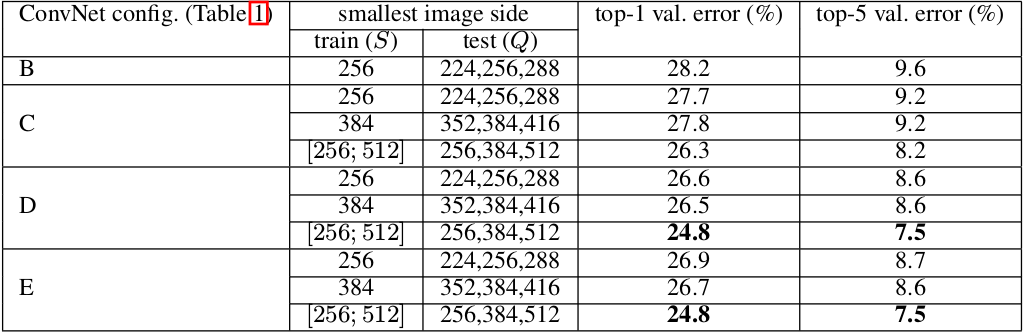

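Multi-scale testing isotropically rescales the original test image to a predefined shortest side, denoted $Q$ and called the test scale ($Q$ is not necessarily equal to $S$)

- The model is run on several rescaled versions of a test image, and the results are averaged

- Each version corresponds to a different value of $Q$

- Per-channel pixel normalization is applied to every version

- This amounts to scale-jittering data augmentation at test time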

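Most of the training procedure follows AlexNet, except for how the input crops are sampled. VGGNet is trained by optimizing the multinomial logistic regression objective with mini-batch gradient descent with momentum:

- To augment the training set further, the crops are randomly flipped horizontally and randomly RGB color shifted

- The batch size is 256

- The momentum is 0.9

- Dropout with ratio 0.5 is used in the first two fully connected (FC) layers

- The learning rate is initially set to $10^{-2}$ and divided by 10 whenever the validation accuracy stops improving

- The learning rate was decreased 3 times in total, and training stopped after 370K iterations (74 epochs)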
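One way to express this schedule in PyTorch is `ReduceLROnPlateau` with `mode="max"` and `factor=0.1`, on top of SGD with momentum 0.9. The `weight_decay=5e-4` value is the L2 multiplier reported in the VGG paper. This sketch uses a stand-in model and made-up validation accuracies and is not the original training code. Setting `patience=0` makes every stalled epoch trigger a decay:

```python
import torch
import torch.nn as nn

model = nn.Linear(10, 2)    # stand-in for the VGG network
optimizer = torch.optim.SGD(model.parameters(), lr=1e-2, momentum=0.9, weight_decay=5e-4)
# Divide the learning rate by 10 whenever validation accuracy stops improving
scheduler = torch.optim.lr_scheduler.ReduceLROnPlateau(optimizer, mode="max", factor=0.1, patience=0)

history = []
for val_acc in [0.50, 0.60, 0.60, 0.65, 0.65, 0.70, 0.70]:   # made-up validation accuracies
    optimizer.step()                  # placeholder for one epoch of training
    scheduler.step(val_acc)
    history.append(optimizer.param_groups[0]["lr"])
```

The three stalled epochs decay the learning rate three times, from $10^{-2}$ down to $10^{-5}$.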

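VGGNet converges in fewer epochs than AlexNet, despite having more parameters and more depth, because of:

- The implicit regularization imposed by greater depth and smaller convolution filters

- The pre-initialization of certain layers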

3.3.4 Weight Initialization

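To deal with weight initialization, VGGNet uses a form of pre-training. The shallow network of configuration A (VGG11) is trained first from random initialization. Its weights are then reused to initialize the first four convolutional layers and the last three fully connected layers of the deeper networks, up to VGG19, with the remaining layers initialized randomly. This makes training converge faster. The whole network uses only $3\times3$ convolution kernels and $2\times2$ max pooling. In the commonly used VGG-16, the 16 is the total number of conv and FC layers, not counting the max-pooling layers. Alternatively, the weights can be initialized directly with Xavier uniform initialization, without any pre-training.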

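VGGNet considers three evaluation schemes:

- single-crop: rescale the test image along its shortest side, take the center crop, and use the prediction on that crop as the prediction for the original image

  The drawback of this method is that keeping only the central part of the image may discard key information about its class. It is therefore rarely used in real tasks and mostly serves to compare the performance of different models

- multi-crop: as in AlexNet, take several crops of each test image and average their predictions to obtain the prediction for the original image

  The drawback of this method is that the network has to be rerun on every crop, which is inefficient

- dense: replace the last three fully connected layers with equivalent convolutional layers, which makes the network fully convolutional. The first fully connected layer becomes a $7\times7$ convolutional layer, and the other two become $1\times1$ convolutional layers

  This fully convolutional network is applied to the whole image without cropping and produces a class score map with one prediction per position. The class scores are averaged over all positions, and averaging the results for the original image and its horizontal flip gives the class probabilities of the original image

  The advantages of this method are that no cropping is needed, test images at multiple scales are supported, and computation is efficient

Experiments show that multi-crop evaluation performs better than dense evaluation, and the combination of the two outperforms either one alone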
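For dense evaluation, the size of the class score map depends on the test scale $Q$. The pure-Python sketch below handles a square $Q\times Q$ input. It assumes the five stride-2 poolings of VGG-16 and the $7\times7$ convolution that replaces the first fully connected layer; `dense_score_map_size` is an illustrative name:

```python
def dense_score_map_size(q, num_pools=5, fc6_kernel=7):
    """Side length of the class score map when the FC layers run as convolutions."""
    feat = q // (2 ** num_pools)   # 3x3 same-padded convs keep the size; each 2x2 pool halves it
    return feat - fc6_kernel + 1   # 7x7 conv (former FC-4096); the two 1x1 convs keep the size


for q in (224, 256, 384, 512):
    n = dense_score_map_size(q)
    print(f"Q={q}: {n}x{n} positions, averaged into one score per class")
```

At $Q = 224$ the map is $1\times1$, i.e. a single ordinary prediction. At $Q = 384$ there are $6\times6$ positions whose scores are averaged.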

Section 4 PyTorch Practice

4.1 Model Training Code

Load the train.py script from the same directory:

    # %load train.py
    import os
    os.environ["KMP_DUPLICATE_LIB_OK"] = "TRUE"   # work around duplicate OpenMP runtimes
    import time
    import argparse
    import sys

    import torch
    import torch.nn as nn
    import torch.optim as optim
    import torch.backends.cudnn as cudnn
    from torchvision import datasets, transforms
    import matplotlib as mpl
    import matplotlib.pyplot as plt

    mpl.rc('axes', labelsize=14)
    mpl.rc('xtick', labelsize=12)
    mpl.rc('ytick', labelsize=12)

    # Make the project root importable (for the datasets package)
    sys.path.append(os.path.dirname(os.path.dirname(os.path.dirname(os.path.abspath(__file__)))))
    from lenet import build_lenet5
    from alex import build_alex
    from vgg import build_vgg16
    from datasets.config import *
    from datasets.cifar import CIFAR10
    from datasets.FLOWER.flower import shuffle_flower
    from datasets.oxford_iiit import shuffle_oxford


    def str2bool(v):
        return v.lower() in ("yes", "true", "t", "1")


    parser = argparse.ArgumentParser(
        description='Image Classification Training With Pytorch')
    parser.add_argument('--dataset', default='Flower', choices=['Flower', 'Oxford-IIIT', 'CIFAR-10'],
                        type=str, help='Flower, Oxford-IIIT, CIFAR-10')
    parser.add_argument('--dataset_root', default=FLOWER_ROOT,
                        help='Dataset root directory path')
    parser.add_argument('--model', default='LeNet', choices=['LeNet', 'AlexNet', 'VGGNet'],
                        type=str, help='LeNet, AlexNet or VGGNet')
    parser.add_argument('--pretrained', default=True, type=str2bool,
                        help='Using pretrained model weights')
    parser.add_argument('--crop_size', default=224, type=int,
                        help='Resized crop value')
    parser.add_argument('--batch_size', default=32, type=int,
                        help='Batch size for training')
    parser.add_argument('--num_workers', default=0, type=int,
                        help='Number of workers used in dataloading')
    parser.add_argument('--epoch_size', default=20, type=int,
                        help='Number of Epoches for training')
    parser.add_argument('--cuda', default=True, type=str2bool,
                        help='Use CUDA to train model')
    parser.add_argument('--shuffle', default=False, type=str2bool,
                        help='Shuffle new train and test folders')
    parser.add_argument('--lr', '--learning-rate', default=2e-4, type=float,
                        help='initial learning rate')
    parser.add_argument('--save_folder', default='weights/',
                        help='Directory for saving checkpoint models')
    parser.add_argument('--photo_folder', default='results/',
                        help='Directory for saving photos')
    args = parser.parse_args()

    script_dir = os.path.dirname(os.path.abspath(__file__))
    os.makedirs(args.save_folder, exist_ok=True)
    # The plot is saved next to this script, so create the folder there
    os.makedirs(os.path.join(script_dir, args.photo_folder), exist_ok=True)

    # LeNet's classifier expects 32x32 inputs, so it overrides the crop size
    crop_size = 32 if args.model == 'LeNet' else args.crop_size
    data_transform = transforms.Compose([
        transforms.RandomResizedCrop(crop_size),
        transforms.RandomHorizontalFlip(),
        transforms.ToTensor(),
        transforms.Normalize((0.5, 0.5, 0.5), (0.5, 0.5, 0.5))])


    def train():
        if args.dataset == 'Flower':
            if not os.path.exists(FLOWER_ROOT):
                parser.error('Must specify dataset_root if specifying dataset')
            args.dataset_root = FLOWER_ROOT
            train_path = os.path.join(FLOWER_ROOT, 'train')
            if not os.path.exists(train_path) or args.shuffle:
                shuffle_flower()
            dataset = datasets.ImageFolder(root=train_path, transform=data_transform)
        if args.dataset == 'Oxford-IIIT':
            if not os.path.exists(OXFORD_IIIT_ROOT):
                parser.error('Must specify dataset_root if specifying dataset')
            args.dataset_root = OXFORD_IIIT_ROOT
            train_path = os.path.join(OXFORD_IIIT_ROOT, 'train')
            if not os.path.exists(train_path) or args.shuffle:
                shuffle_oxford()
            dataset = datasets.ImageFolder(root=train_path, transform=data_transform)
        if args.dataset == 'CIFAR-10':
            if not os.path.exists(CIFAR_ROOT):
                parser.error('Must specify dataset_root if specifying dataset')
            args.dataset_root = CIFAR_ROOT
            dataset = CIFAR10(train=True, transform=data_transform, target_transform=None)
        classes = dataset.classes

        if args.model == 'LeNet':
            net = build_lenet5(phase='train', num_classes=len(classes))
        if args.model == 'AlexNet':
            net = build_alex(phase='train', num_classes=len(classes), pretrained=args.pretrained)
        if args.model == 'VGGNet':
            net = build_vgg16(phase='train', num_classes=len(classes), pretrained=args.pretrained)

        # Move both the model and the data to the GPU only if one is actually available
        use_cuda = args.cuda and torch.cuda.is_available()
        if use_cuda:
            net = torch.nn.DataParallel(net)
            cudnn.benchmark = True
            net.cuda()

        # Frozen parameters (requires_grad=False) never receive gradients, so Adam skips them
        optimizer = optim.Adam(net.parameters(), lr=args.lr)
        criterion = nn.CrossEntropyLoss()
        epoch_size = args.epoch_size

        print('Loading the dataset...')
        data_loader = torch.utils.data.DataLoader(dataset, args.batch_size,
                                                  num_workers=args.num_workers,
                                                  shuffle=True, pin_memory=True)
        print('Training on:', args.dataset)
        print('Using model:', args.model)
        print('Using the specified args:')
        print(args)

        loss_list = []
        acc_list = []
        for epoch in range(epoch_size):
            net.train()
            train_loss = 0.0
            correct = 0
            total = len(dataset)
            t0 = time.perf_counter()
            for step, (images, labels) in enumerate(data_loader):
                if use_cuda:
                    images, labels = images.cuda(), labels.cuda()
                # forward
                outputs = net(images)
                loss = criterion(outputs, labels)
                # backprop
                optimizer.zero_grad()
                loss.backward()
                optimizer.step()
                # statistics
                train_loss += loss.item()
                _, predicted = outputs.max(1)
                correct += predicted.eq(labels).sum().item()
                # text progress bar
                rate = (step + 1) / len(data_loader)
                a = "*" * int(rate * 50)
                b = "." * int((1 - rate) * 50)
                print("\rEpoch {}: {:^3.0f}%[{}->{}]{:.3f}".format(
                    epoch + 1, int(rate * 100), a, b, loss.item()), end="")
            print(' Running time: %.3f' % (time.perf_counter() - t0))
            acc = 100. * correct / total
            epoch_loss = train_loss / len(data_loader)   # mean loss over all batches
            print('train loss: %.6f, acc: %.3f%% (%d/%d)' % (epoch_loss, acc, correct, total))
            loss_list.append(epoch_loss)
            acc_list.append(acc / 100)

        torch.save(net.state_dict(), args.save_folder + args.dataset + "_" + args.model + '.pth')
        plt.plot(range(epoch_size), loss_list, range(epoch_size), acc_list)
        plt.xlabel('Epoches')
        plt.ylabel('Sparse CrossEntropy Loss | Accuracy')
        plt.savefig(os.path.join(script_dir, args.photo_folder,
                                 args.dataset + "_" + args.model + "_train_details.png"))


    if __name__ == '__main__':
        train()

- dataset:

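The dataset used for training. Currently Flower, Oxford-IIIT and CIFAR-10 are available.

- dataset_root:

Path from which the dataset is read. The default is already set to the dataset's relative path and may need to be changed when running in the cloud. Note that `train()` replaces it with the dataset's root constant from `datasets.config`

- model:

The model used for training. Currently LeNet, AlexNet and VGGNet are available

- pretrained:

Whether to use PyTorch pretrained weights (used by AlexNet and VGGNet)

- crop_size:

Crop size used in image preprocessing, default 224. Only LeNet uses $32\times32$ by default

- shuffle:

Whether to regenerate new train/test dataset splits

- batch_size:

Number of samples per training batch, default 32

- num_workers:

Number of worker processes used for data loading, default 0 (load in the main process). Typical values are 2, 4, 8, 12, ...

- epoch_size:

Number of training epochs, default 20

- cuda:

Whether to train on the GPU

- lr:

Learning rate for the Adam optimizer, default $2\times10^{-4}$ (0.0002)

- save_folder:

Directory where the model weights are saved

- photo_folder:

Directory where the training plots are saved, default results/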

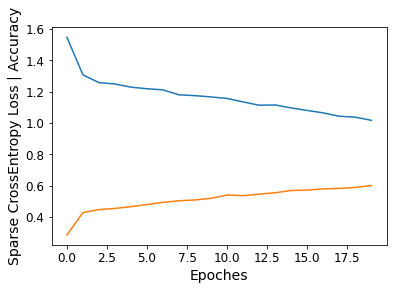

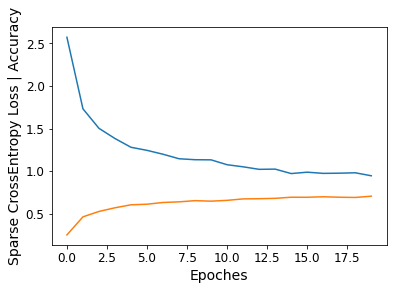

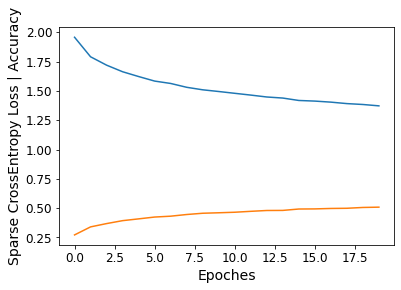

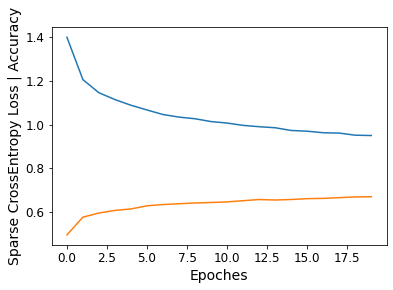

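- Training details

Printed to the Python console, including the training time of each epoch, the training loss and the accuracy

- Model weights

Saved to ./weights/{dataset}_{model}.pth

- Loss and accuracy curves

The plot is saved to ./results/{dataset}_{model}_train_details.png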

4.2 Model Testing Code

Load the test.py script from the same directory:

```python
# %load test.py
import os
import sys
import argparse
import itertools

# work around "duplicate OpenMP runtime" errors seen on some Windows/conda setups
os.environ["KMP_DUPLICATE_LIB_OK"] = "TRUE"

import numpy as np
import matplotlib as mpl
import matplotlib.pyplot as plt
import torch
import torch.nn as nn
import torch.backends.cudnn as cudnn
from torchvision import transforms, datasets

mpl.rc('axes', labelsize=14)
mpl.rc('xtick', labelsize=12)
mpl.rc('ytick', labelsize=12)

# make the project root importable so the model and dataset modules resolve
sys.path.append(os.path.dirname(os.path.dirname(os.path.dirname(os.path.abspath(__file__)))))
from lenet import build_lenet5
from alex import build_alex
from vgg import build_vgg16
from datasets.config import *
from datasets.cifar import CIFAR10


def str2bool(v):
    # argparse's type=bool turns any non-empty string, including "False", into True
    if isinstance(v, bool):
        return v
    if v.lower() in ('yes', 'true', 't', 'y', '1'):
        return True
    if v.lower() in ('no', 'false', 'f', 'n', '0'):
        return False
    raise argparse.ArgumentTypeError('Boolean value expected.')


parser = argparse.ArgumentParser(
    description='Convolutional Neural Network Testing With Pytorch')
parser.add_argument('--dataset', default='Flower', choices=['Flower', 'Oxford-IIIT', 'CIFAR-10'],
                    type=str, help='Flower, Oxford-IIIT, or CIFAR-10')
parser.add_argument('--dataset_root', default=FLOWER_ROOT,
                    help='Dataset root directory path')
parser.add_argument('--model', default='LeNet', choices=['LeNet', 'AlexNet', 'VGGNet'],
                    type=str, help='LeNet, AlexNet or VGGNet')
parser.add_argument('--crop_size', default=224, type=int,
                    help='Resized crop value')
parser.add_argument('--batch_size', default=32, type=int,
                    help='Batch size for evaluation')
parser.add_argument('--num_workers', default=0, type=int,
                    help='Number of workers used in dataloading')
parser.add_argument('--weight', default='weights/{}_{}.pth', type=str,
                    help='Trained state_dict file path to open')
parser.add_argument('--cuda', default=True, type=str2bool,
                    help='Use cuda to run the model')
parser.add_argument('--pretrained', default=True, type=str2bool,
                    help='Using pretrained model weights')
parser.add_argument('-f', default=None, type=str,
                    help="Dummy arg so we can load in Jupyter Notebooks")
args = parser.parse_args()
args.weight = args.weight.format(args.dataset, args.model)

# deterministic preprocessing for evaluation (random crops/flips belong in training only)
data_transform = transforms.Compose([transforms.Resize(args.crop_size),
                                     transforms.CenterCrop(args.crop_size),
                                     transforms.ToTensor(),
                                     transforms.Normalize((0.5, 0.5, 0.5), (0.5, 0.5, 0.5))])


def confusion_matrix(preds, labels, conf_matrix):
    # rows index the true class, columns the predicted class
    for p, t in zip(preds.cpu().tolist(), labels.cpu().tolist()):
        conf_matrix[t, p] += 1
    return conf_matrix


def save_confusion_matrix(cm, classes, normalize=False, title='Confusion matrix', cmap=plt.cm.Blues):
    if normalize:
        # scale each row (true class) so that it sums to 1
        cm = cm.astype('float') / np.maximum(cm.sum(axis=1, keepdims=True), 1)
    plt.imshow(cm, interpolation='nearest', cmap=cmap)
    plt.title(title)
    plt.colorbar()
    tick_marks = np.arange(len(classes))
    plt.xticks(tick_marks, classes, rotation=90)
    plt.yticks(tick_marks, classes)
    plt.axis("equal")
    ax = plt.gca()
    left, right = plt.xlim()
    ax.spines['left'].set_position(('data', left))
    ax.spines['right'].set_position(('data', right))
    for edge_i in ['top', 'bottom', 'right', 'left']:
        ax.spines[edge_i].set_edgecolor("white")
    thresh = cm.max() / 2.
    for i, j in itertools.product(range(cm.shape[0]), range(cm.shape[1])):
        num = '{:.2f}'.format(cm[i, j]) if normalize else int(cm[i, j])
        # compare the numeric cell value, not the formatted string
        plt.text(j, i, num, verticalalignment='center', horizontalalignment="center",
                 color="white" if cm[i, j] > thresh else "black")
    plt.ylabel('True label')
    plt.xlabel('Predicted label')
    out_dir = os.path.join(os.path.dirname(os.path.abspath(__file__)), "results")
    os.makedirs(out_dir, exist_ok=True)
    plt.savefig(os.path.join(out_dir, args.dataset + '_confusion_matrix.png'))


def test():
    # load data
    if args.dataset == 'Flower':
        if not os.path.exists(FLOWER_ROOT):
            parser.error('Must specify dataset_root if specifying dataset')
        args.dataset_root = FLOWER_ROOT
        test_path = os.path.join(FLOWER_ROOT, 'val')
        if not os.path.exists(test_path):
            parser.error('Must train models before evaluating')
        dataset = datasets.ImageFolder(root=test_path, transform=data_transform)
    if args.dataset == 'Oxford-IIIT':
        if not os.path.exists(OXFORD_IIIT_ROOT):
            parser.error('Must specify dataset_root if specifying dataset')
        args.dataset_root = OXFORD_IIIT_ROOT
        test_path = os.path.join(OXFORD_IIIT_ROOT, 'val')
        if not os.path.exists(test_path):
            parser.error('Must train models before evaluating')
        dataset = datasets.ImageFolder(root=test_path, transform=data_transform)
    if args.dataset == 'CIFAR-10':
        if not os.path.exists(CIFAR_ROOT):
            parser.error('Must specify dataset_root if specifying dataset')
        args.dataset_root = CIFAR_ROOT
        dataset = CIFAR10(train=False, transform=data_transform, target_transform=None)
    classes = dataset.classes
    num_classes = len(classes)
    # no need to shuffle for evaluation
    data_loader = torch.utils.data.DataLoader(dataset, args.batch_size,
                                              num_workers=args.num_workers,
                                              shuffle=False, pin_memory=True)

    # load net
    if args.model == 'LeNet':
        net = build_lenet5(phase='test', num_classes=num_classes)
    if args.model == 'AlexNet':
        net = build_alex(phase='test', num_classes=num_classes, pretrained=args.pretrained)
    if args.model == 'VGGNet':
        net = build_vgg16(phase='test', num_classes=num_classes, pretrained=args.pretrained)

    use_cuda = args.cuda and torch.cuda.is_available()
    if use_cuda:
        net = torch.nn.DataParallel(net)
        cudnn.benchmark = True
        net.cuda()
    net.load_state_dict(torch.load(args.weight, map_location='cuda' if use_cuda else 'cpu'))
    print('Finish loading model: ', args.weight)
    net.eval()
    print('Training on:', args.dataset)
    print('Using model:', args.model)
    print('Using the specified args:')
    print(args)

    # evaluation
    criterion = nn.CrossEntropyLoss()
    test_loss = 0
    correct = 0
    total = 0
    conf_matrix = torch.zeros(num_classes, num_classes)
    class_correct = [0] * num_classes
    class_total = [0] * num_classes
    with torch.no_grad():
        for step, (images, labels) in enumerate(data_loader):
            if use_cuda:
                images, labels = images.cuda(), labels.cuda()
            # forward
            outputs = net(images)
            loss = criterion(outputs, labels)
            test_loss += loss.item()
            _, predicted = outputs.max(1)
            conf_matrix = confusion_matrix(predicted, labels, conf_matrix)
            total += labels.size(0)
            correct += predicted.eq(labels).sum().item()
            c = predicted.eq(labels)
            for i in range(c.size(0)):
                label = labels[i].item()
                class_correct[label] += c[i].item()
                class_total[label] += 1
    acc = 100. * correct / total
    loss = test_loss / (step + 1)  # mean over batches; step is zero-based
    print('test loss: %.6f, acc: %.3f%% (%d/%d)' % (loss, acc, correct, total))
    for i in range(num_classes):
        print('accuracy of %s : %.3f%% (%d/%d)' % (
            str(classes[i]), 100 * class_correct[i] / class_total[i],
            class_correct[i], class_total[i]))
    save_confusion_matrix(conf_matrix.numpy(), classes=classes, normalize=False,
                          title='Confusion matrix')


if __name__ == '__main__':
    test()
```

- dataset:

The dataset to use; Flower, Oxford-IIIT, and CIFAR-10 are currently available.

- dataset_root:

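Root directory the dataset is read from. The default is already set to the dataset's relative path; it may need to be changed when deploying in the cloud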

- model:

The model to evaluate; test.py accepts LeNet, AlexNet, or VGGNet

- pretrained:

Whether to use PyTorch pretrained weights

- crop_size:

Crop size used in image preprocessing; the default is 224. LeNet is the exception and is run at $32\times32$ (`--crop_size 32`)

- batch_size:

Number of samples fetched per iteration; the default is 32

- num_workers:

Number of worker processes used for data loading; the default is 0 (data is loaded in the main process), typical values are $n \in \{2, 4, 8, 12, \dots\}$

- weight:

Path of the trained model weights to load; the default, `weights/{dataset}_{model}.pth`, is the .pth file produced by train.py

- cuda:

Whether to run on the GPU
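The `batch_size` setting decides how many iterations one pass over the data takes. A PyTorch `DataLoader` keeps the final partial batch by default (`drop_last=False`), so it yields ⌈N / batch_size⌉ batches, and the mean test loss is the summed per-batch losses divided by that batch count (`step + 1` for a zero-based `step`). The plain-Python sketch below, with hypothetical helper names, works through the arithmetic for the Flower split used in Section 5 (364 test and 3,306 training images):

```python
import math


def num_batches(num_samples, batch_size, drop_last=False):
    """Batches produced in one pass; the last partial batch is kept unless drop_last."""
    if drop_last:
        return num_samples // batch_size
    return math.ceil(num_samples / batch_size)


def batch_sizes(num_samples, batch_size):
    """Size of every batch when the last, partial batch is kept."""
    full, rest = divmod(num_samples, batch_size)
    return [batch_size] * full + ([rest] if rest else [])


def mean_batch_loss(batch_losses):
    """Average of per-batch mean losses: divide by the number of batches (step + 1)."""
    return sum(batch_losses) / len(batch_losses)


print(num_batches(364, 32))       # 12
print(batch_sizes(364, 32)[-1])   # 12
print(num_batches(3306, 32))      # 104
```

Note that averaging per-batch means weights the smaller final batch slightly more than a true per-sample mean would; test.py uses the per-batch average.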

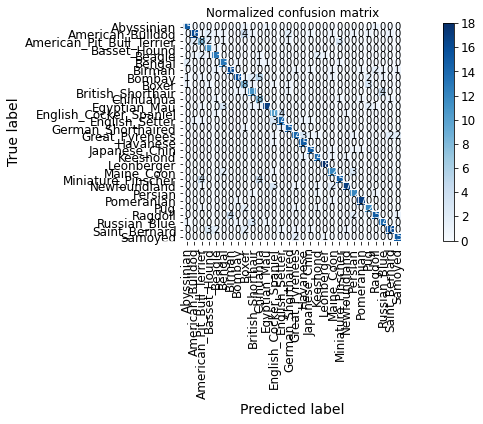

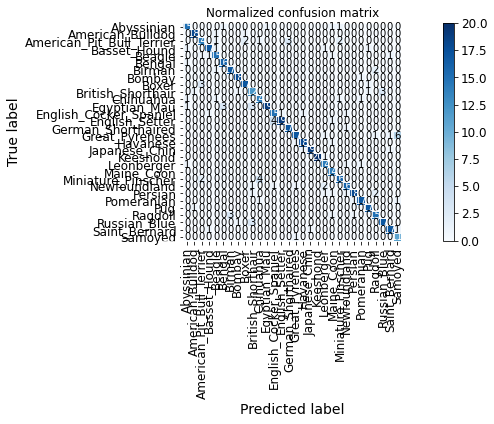

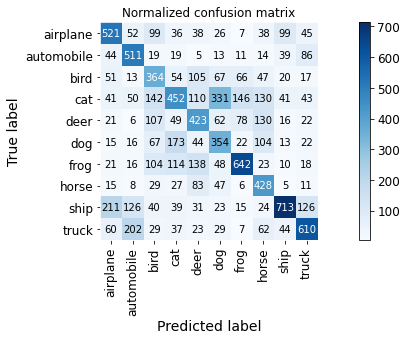

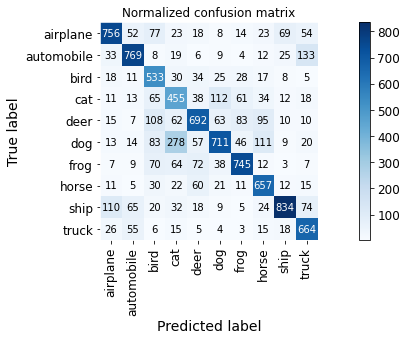

- Test-set loss and accuracy

Printed in the Python console as the first line of the results

- Per-class accuracy

Printed in the Python console as the list that follows

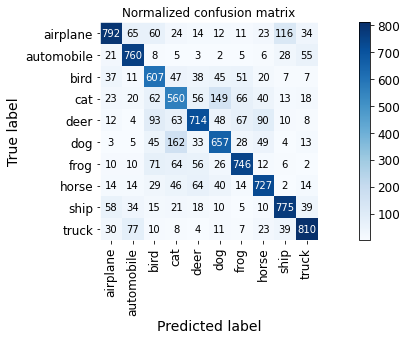

- Confusion matrix

The figure is saved to `results/<dataset>_confusion_matrix.png` in the directory containing test.py

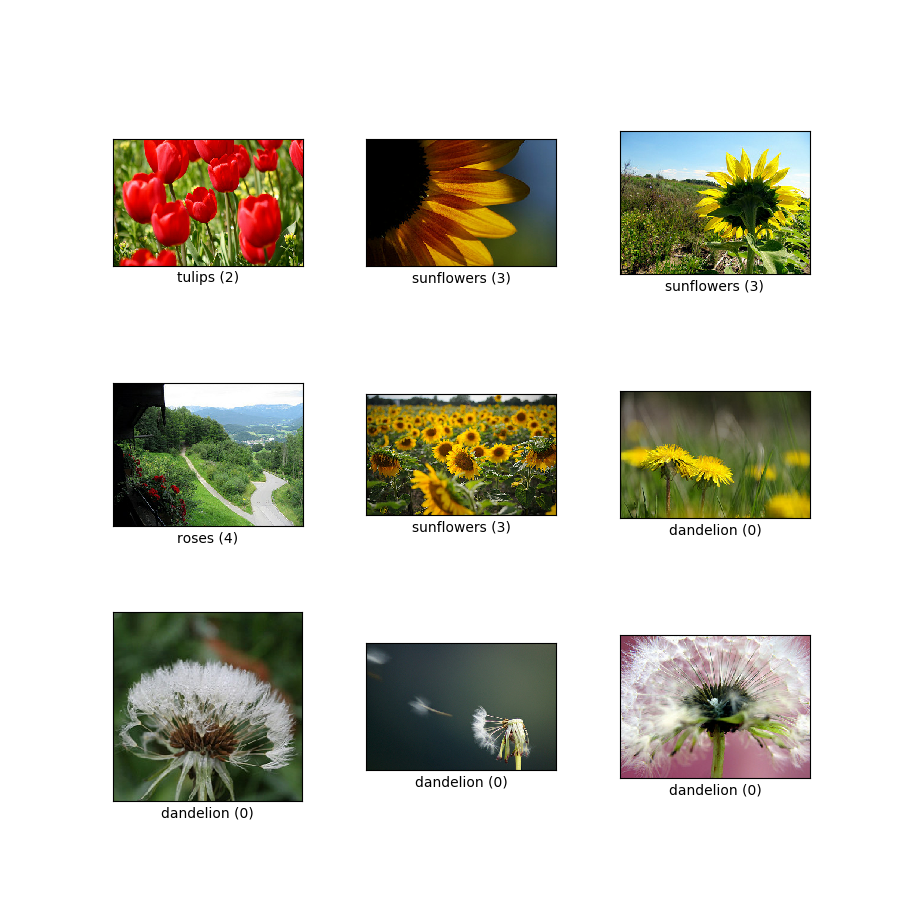
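The three outputs are linked: with rows indexed by the true label and columns by the predicted label (matching the axis titles of the saved figure), each class's accuracy is its diagonal entry divided by its row sum, and the overall accuracy is the trace divided by the total count. A dependency-free sketch with hypothetical function names and toy labels:

```python
def confusion_matrix(preds, labels, num_classes):
    """cm[t][p] counts samples whose true class is t and predicted class is p."""
    cm = [[0] * num_classes for _ in range(num_classes)]
    for p, t in zip(preds, labels):
        cm[t][p] += 1
    return cm


def overall_accuracy(cm):
    """Correct predictions (the diagonal) over all samples."""
    correct = sum(cm[i][i] for i in range(len(cm)))
    total = sum(sum(row) for row in cm)
    return correct / total


def per_class_accuracy(cm):
    """Diagonal entry over row sum: the share of each true class predicted correctly."""
    return [row[i] / sum(row) if sum(row) else 0.0 for i, row in enumerate(cm)]


labels = [0, 0, 1, 1, 2, 2]
preds = [0, 1, 1, 1, 2, 0]
cm = confusion_matrix(preds, labels, num_classes=3)
print(cm)                      # [[1, 1, 0], [0, 2, 0], [1, 0, 1]]
print(per_class_accuracy(cm))  # [0.5, 1.0, 0.5]
```

As a cross-check against the Flower LeNet run in 5.1, the per-class correct counts 33 + 62 + 36 + 42 + 34 = 207 out of 364 give the reported overall accuracy of 56.868%.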

Section 5 Flower Dataset

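- The Flower dataset comes from the TensorFlow team. Created in January 2019 as a lightweight, entry-level dataset, it contains 5 flower classes: ['daisy', 'dandelion', 'roses', 'sunflowers', 'tulips']

- Flower is a classic dataset for image classification in deep learning. The classes contain [633, 898, 641, 699, 799] samples respectively (3,670 in total), and each sample is an RGB photo of variable size (for example, 320×232 pixels)

- The flower.py module in the project's datasets package splits each class into training and test sets with a test ratio of 0.1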
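The logged per-class sizes are consistent with taking ⌊n × 0.1⌋ images of every class for testing and the rest for training. The sketch below assumes that rounding rule (it is inferred from the logs, not read from flower.py) and reproduces the 3,306 / 364 split:

```python
import math


def split_counts(class_counts, test_ratio=0.1):
    """Return {class: (n_train, n_test)}, assuming n_test = floor(n * test_ratio)."""
    return {name: (n - math.floor(n * test_ratio), math.floor(n * test_ratio))
            for name, n in class_counts.items()}


flower = {"daisy": 633, "dandelion": 898, "roses": 641, "sunflowers": 699, "tulips": 799}
splits = split_counts(flower)
n_train = sum(tr for tr, _ in splits.values())
n_test = sum(te for _, te in splits.values())
print(splits)           # {'daisy': (570, 63), 'dandelion': (809, 89), 'roses': (577, 64), 'sunflowers': (630, 69), 'tulips': (720, 79)}
print(n_train, n_test)  # 3306 364
```

The per-class test sizes (63, 89, 64, 69, 79) match the denominators in the per-class accuracy lines of the test runs below.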

5.1 LeNet

%run train.py --dataset Flower --model LeNet --crop_size 32

- Dataset 'Flower' contains 5 catagories: ['daisy', 'dandelion', 'roses', 'sunflowers', 'tulips']

- [daisy] train/test dataset split [633/633] with ratio 0.1

- [dandelion] train/test dataset split [898/898] with ratio 0.1

- [roses] train/test dataset split [641/641] with ratio 0.1

- [sunflowers] train/test dataset split [699/699] with ratio 0.1

- [tulips] train/test dataset split [799/799] with ratio 0.1

- Loading the dataset...

- Training on: Flower

- Using model: LeNet

- Using the specified args:

- Namespace(batch_size=32, crop_size=32, cuda=True, dataset='Flower', dataset_root='C:\\Users\\sbzy\\Documents/GitHub/dl_algorithm/datasets\\FLOWER', epoch_size=20, lr=0.0002, model='LeNet', num_workers=0, photo_folder='results/', pretrained=True, save_folder='weights/', shuffle=False)

- Epoch 1: 100%[**************************************************->]1.365 Running time: 9.335

- train loss: 1.548427, acc: 28.615% (946/3306)

- Epoch 2: 100%[**************************************************->]1.212 Running time: 9.215

- train loss: 1.308180, acc: 42.861% (1417/3306)

- Epoch 3: 100%[**************************************************->]1.296 Running time: 9.455

- train loss: 1.258674, acc: 44.797% (1481/3306)

- Epoch 4: 100%[**************************************************->]1.547 Running time: 9.580

- train loss: 1.249931, acc: 45.523% (1505/3306)

- Epoch 5: 100%[**************************************************->]1.264 Running time: 8.800

- train loss: 1.229576, acc: 46.673% (1543/3306)

- Epoch 6: 100%[**************************************************->]0.901 Running time: 9.047

- train loss: 1.219630, acc: 48.004% (1587/3306)

- Epoch 7: 100%[**************************************************->]1.822 Running time: 8.900

- train loss: 1.212125, acc: 49.425% (1634/3306)

- Epoch 8: 100%[**************************************************->]1.057 Running time: 8.680

- train loss: 1.180733, acc: 50.423% (1667/3306)

- Epoch 9: 100%[**************************************************->]1.056 Running time: 8.682

- train loss: 1.175452, acc: 50.938% (1684/3306)

- Epoch 10: 100%[**************************************************->]1.464 Running time: 8.733

- train loss: 1.167319, acc: 51.996% (1719/3306)

- Epoch 11: 100%[**************************************************->]1.513 Running time: 8.670

- train loss: 1.157543, acc: 54.083% (1788/3306)

- Epoch 12: 100%[**************************************************->]1.068 Running time: 8.686

- train loss: 1.135259, acc: 53.690% (1775/3306)

- Epoch 13: 100%[**************************************************->]1.168 Running time: 8.661

- train loss: 1.114434, acc: 54.628% (1806/3306)

- Epoch 14: 100%[**************************************************->]1.058 Running time: 8.828

- train loss: 1.116139, acc: 55.535% (1836/3306)

- Epoch 15: 100%[**************************************************->]0.996 Running time: 8.677

- train loss: 1.097041, acc: 56.987% (1884/3306)

- Epoch 16: 100%[**************************************************->]0.792 Running time: 8.588

- train loss: 1.080893, acc: 57.229% (1892/3306)

- Epoch 17: 100%[**************************************************->]1.116 Running time: 8.779

- train loss: 1.065502, acc: 58.016% (1918/3306)

- Epoch 18: 100%[**************************************************->]0.737 Running time: 8.683

- train loss: 1.043998, acc: 58.348% (1929/3306)

- Epoch 19: 100%[**************************************************->]0.814 Running time: 8.656

- train loss: 1.038189, acc: 58.923% (1948/3306)

- Epoch 20: 100%[**************************************************->]1.060 Running time: 8.660

- train loss: 1.018091, acc: 60.163% (1989/3306)

%run test.py --dataset Flower --model LeNet --crop_size 32 --pretrained False

- Finish loading model: weights/Flower_LeNet.pth

- Training on: Flower

- Using model: LeNet

- Using the specified args:

- Namespace(batch_size=32, crop_size=32, cuda=True, dataset='Flower', dataset_root='C:\\Users\\sbzy\\Documents/GitHub/dl_algorithm/datasets\\FLOWER', f=None, model='LeNet', num_workers=0, pretrained=True, weight='weights/Flower_LeNet.pth')

- test loss: 1.163283, acc: 56.868% (207/364)

- accuracy of daisy : 52.381% (33/63)

- accuracy of dandelion : 69.663% (62/89)

- accuracy of roses : 56.250% (36/64)

- accuracy of sunflowers : 60.870% (42/69)

- accuracy of tulips : 43.038% (34/79)

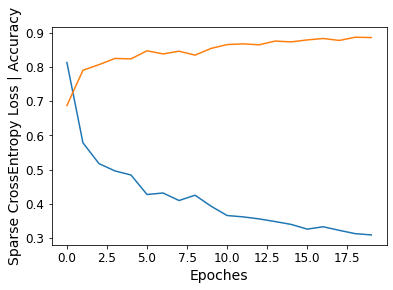

5.2 AlexNet

%run train.py --dataset Flower --model AlexNet --crop_size 224 --pretrained True

- Loading the dataset...

- Training on: Flower

- Using model: AlexNet

- Using the specified args:

- Namespace(batch_size=32, crop_size=224, cuda=True, dataset='Flower', dataset_root='C:\\Users\\sbzy\\Documents/GitHub/dl_algorithm/datasets\\FLOWER', epoch_size=20, lr=0.0002, model='AlexNet', num_workers=0, photo_folder='results/', pretrained=True, save_folder='weights/', shuffle=False)

- Epoch 1: 100%[**************************************************->]0.823 Running time: 15.608

- train loss: 0.813369, acc: 68.754% (2273/3306)

- Epoch 2: 100%[**************************************************->]0.591 Running time: 15.173

- train loss: 0.578639, acc: 79.068% (2614/3306)

- Epoch 3: 100%[**************************************************->]0.672 Running time: 15.601

- train loss: 0.517714, acc: 80.702% (2668/3306)

- Epoch 4: 100%[**************************************************->]0.682 Running time: 16.043

- train loss: 0.496228, acc: 82.517% (2728/3306)

- Epoch 5: 100%[**************************************************->]1.111 Running time: 16.056

- train loss: 0.484389, acc: 82.396% (2724/3306)

- Epoch 6: 100%[**************************************************->]0.286 Running time: 16.655

- train loss: 0.427296, acc: 84.755% (2802/3306)

- Epoch 7: 100%[**************************************************->]0.342 Running time: 16.279

- train loss: 0.431695, acc: 83.848% (2772/3306)

- Epoch 8: 100%[**************************************************->]0.662 Running time: 16.429

- train loss: 0.409742, acc: 84.634% (2798/3306)

- Epoch 9: 100%[**************************************************->]0.633 Running time: 16.282

- train loss: 0.425239, acc: 83.485% (2760/3306)

- Epoch 10: 100%[**************************************************->]0.525 Running time: 16.102

- train loss: 0.393042, acc: 85.451% (2825/3306)

- Epoch 11: 100%[**************************************************->]0.700 Running time: 16.264

- train loss: 0.365828, acc: 86.570% (2862/3306)

- Epoch 12: 100%[**************************************************->]0.593 Running time: 15.894

- train loss: 0.361874, acc: 86.782% (2869/3306)

- Epoch 13: 100%[**************************************************->]0.740 Running time: 15.882

- train loss: 0.355939, acc: 86.509% (2860/3306)

- Epoch 14: 100%[**************************************************->]0.747 Running time: 15.625

- train loss: 0.348140, acc: 87.598% (2896/3306)

- Epoch 15: 100%[**************************************************->]0.151 Running time: 16.246

- train loss: 0.339823, acc: 87.356% (2888/3306)

- Epoch 16: 100%[**************************************************->]0.109 Running time: 16.298

- train loss: 0.326062, acc: 87.931% (2907/3306)

- Epoch 17: 100%[**************************************************->]0.207 Running time: 16.075

- train loss: 0.332952, acc: 88.355% (2921/3306)

- Epoch 18: 100%[**************************************************->]0.302 Running time: 16.000

- train loss: 0.322535, acc: 87.780% (2902/3306)

- Epoch 19: 100%[**************************************************->]0.689 Running time: 15.750

- train loss: 0.312812, acc: 88.717% (2933/3306)

- Epoch 20: 100%[**************************************************->]0.347 Running time: 15.821

- train loss: 0.309125, acc: 88.627% (2930/3306)

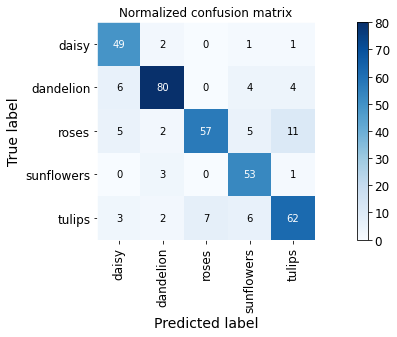

%run test.py --dataset Flower --model AlexNet --crop_size 224 --pretrained True

- Finish loading model: weights/Flower_AlexNet.pth

- Training on: Flower

- Using model: AlexNet

- Using the specified args:

- Namespace(batch_size=32, crop_size=224, cuda=True, dataset='Flower', dataset_root='C:\\Users\\sbzy\\Documents/GitHub/dl_algorithm/datasets\\FLOWER', f=None, model='AlexNet', num_workers=0, pretrained=True, weight='weights/Flower_AlexNet.pth')

- test loss: 0.625130, acc: 82.692% (301/364)

- accuracy of daisy : 77.778% (49/63)

- accuracy of dandelion : 89.888% (80/89)

- accuracy of roses : 89.062% (57/64)

- accuracy of sunflowers : 76.812% (53/69)

- accuracy of tulips : 78.481% (62/79)

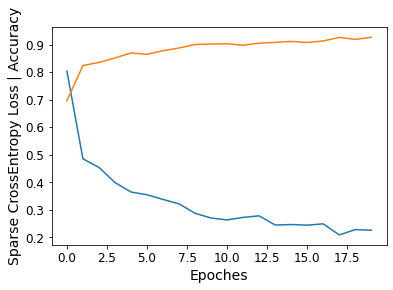

5.3 VGGNet

%run train.py --dataset Flower --model VGGNet --crop_size 224 --pretrained True

- Loading the dataset...

- Training on: Flower

- Using model: VGGNet

- Using the specified args:

- Namespace(batch_size=32, crop_size=224, cuda=True, dataset='Flower', dataset_root='C:\\Users\\sbzy\\Documents/GitHub/dl_algorithm/datasets\\FLOWER', epoch_size=20, lr=0.0002, model='VGGNet', num_workers=0, photo_folder='results/', pretrained=True, save_folder='weights/', shuffle=False)

- Epoch 1: 100%[**************************************************->]0.444 Running time: 42.314

- train loss: 0.804131, acc: 69.661% (2303/3306)

- Epoch 2: 100%[**************************************************->]0.644 Running time: 38.402

- train loss: 0.485267, acc: 82.456% (2726/3306)

- Epoch 3: 100%[**************************************************->]0.554 Running time: 38.777

- train loss: 0.454202, acc: 83.575% (2763/3306)

- Epoch 4: 100%[**************************************************->]0.164 Running time: 38.346

- train loss: 0.399140, acc: 85.209% (2817/3306)

- Epoch 5: 100%[**************************************************->]0.346 Running time: 38.359

- train loss: 0.364771, acc: 87.024% (2877/3306)

- Epoch 6: 100%[**************************************************->]0.096 Running time: 38.401

- train loss: 0.354722, acc: 86.540% (2861/3306)

- Epoch 7: 100%[**************************************************->]0.182 Running time: 38.425

- train loss: 0.337731, acc: 87.840% (2904/3306)

- Epoch 8: 100%[**************************************************->]0.215 Running time: 38.656

- train loss: 0.321707, acc: 88.838% (2937/3306)

- Epoch 9: 100%[**************************************************->]0.063 Running time: 38.800

- train loss: 0.287286, acc: 90.109% (2979/3306)

- Epoch 10: 100%[**************************************************->]0.270 Running time: 38.718

- train loss: 0.270214, acc: 90.260% (2984/3306)

- Epoch 11: 100%[**************************************************->]0.143 Running time: 38.187

- train loss: 0.263321, acc: 90.381% (2988/3306)

- Epoch 12: 100%[**************************************************->]0.290 Running time: 38.329

- train loss: 0.272533, acc: 89.837% (2970/3306)

- Epoch 13: 100%[**************************************************->]0.723 Running time: 38.959

- train loss: 0.278160, acc: 90.593% (2995/3306)

- Epoch 14: 100%[**************************************************->]0.019 Running time: 38.523

- train loss: 0.244733, acc: 90.865% (3004/3306)

- Epoch 15: 100%[**************************************************->]0.151 Running time: 38.222

- train loss: 0.246557, acc: 91.228% (3016/3306)

- Epoch 16: 100%[**************************************************->]0.429 Running time: 38.806

- train loss: 0.244205, acc: 90.835% (3003/3306)

- Epoch 17: 100%[**************************************************->]0.112 Running time: 38.546

- train loss: 0.249062, acc: 91.379% (3021/3306)

- Epoch 18: 100%[**************************************************->]0.007 Running time: 38.902

- train loss: 0.208794, acc: 92.680% (3064/3306)

- Epoch 19: 100%[**************************************************->]0.170 Running time: 38.456

- train loss: 0.228088, acc: 91.954% (3040/3306)

- Epoch 20: 100%[**************************************************->]0.854 Running time: 38.961

- train loss: 0.225824, acc: 92.740% (3066/3306)

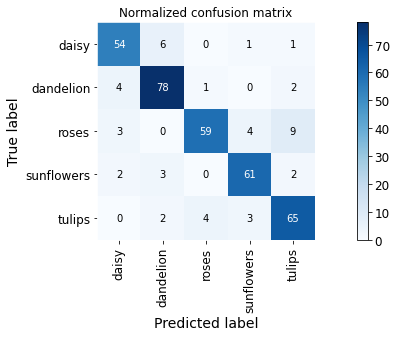

%run test.py --dataset Flower --model VGGNet --crop_size 224 --pretrained True

- Finish loading model: weights/Flower_VGGNet.pth

- Training on: Flower

- Using model: VGGNet

- Using the specified args:

- Namespace(batch_size=32, crop_size=224, cuda=True, dataset='Flower', dataset_root='C:\\Users\\sbzy\\Documents/GitHub/dl_algorithm/datasets\\FLOWER', f=None, model='VGGNet', num_workers=0, pretrained=True, weight='weights/Flower_VGGNet.pth')

- test loss: 0.463054, acc: 87.088% (317/364)

- accuracy of daisy : 85.714% (54/63)

- accuracy of dandelion : 87.640% (78/89)

- accuracy of roses : 92.188% (59/64)

- accuracy of sunflowers : 88.406% (61/69)

- accuracy of tulips : 82.278% (65/79)

Section 6 Oxford-IIIT Dataset

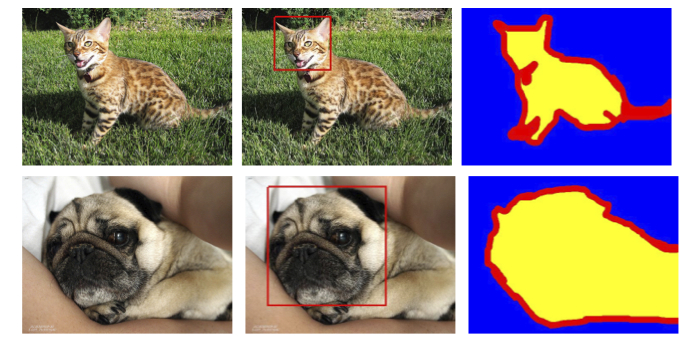

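- The Oxford-IIIT Pet dataset covers 37 cat and dog breeds with roughly 200 images per class; the version used here contains 30 of these breeds

- Images in Oxford-IIIT vary widely in scale, pose, and lighting, but every image is annotated with its breed, a head bounding box (ROI), and a pixel-level trimap segmentation

- The oxford_iiit.py module in the project's datasets package splits each class into training and test sets with a test ratio of 0.1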
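test.py reads the held-out images with `datasets.ImageFolder(root=os.path.join(..., 'val'))`, so the split has to end up as one sub-folder per class under `val/`. The sketch below is one hypothetical way to materialize such a split with the standard library; `split_into_folders` is not the project's oxford_iiit.py, and the `train/` folder name, the fixed-seed shuffle, and the ⌊n × 0.1⌋ rule are assumptions (the rule matches the logged 20 test images for 200-image classes and 19 for Samoyed's 197):

```python
import random
import shutil
import tempfile
from pathlib import Path


def split_into_folders(src_root, dst_root, test_ratio=0.1, seed=0):
    """Copy <src_root>/<class>/<file> into <dst_root>/{train,val}/<class>/<file>.

    Hypothetical helper: each class is shuffled with a fixed seed and its first
    floor(n * test_ratio) files go to val/, the rest to train/.
    Returns {class_name: (n_train, n_val)}.
    """
    rng = random.Random(seed)
    src_root, dst_root = Path(src_root), Path(dst_root)
    sizes = {}
    for class_dir in sorted(p for p in src_root.iterdir() if p.is_dir()):
        files = sorted(f for f in class_dir.iterdir() if f.is_file())
        rng.shuffle(files)
        n_val = int(len(files) * test_ratio)  # floor for non-negative counts
        for subset, subset_files in (("val", files[:n_val]), ("train", files[n_val:])):
            out_dir = dst_root / subset / class_dir.name
            out_dir.mkdir(parents=True, exist_ok=True)
            for f in subset_files:
                shutil.copy2(f, out_dir / f.name)
        sizes[class_dir.name] = (len(files) - n_val, n_val)
    return sizes


# Usage on a throwaway tree with two Oxford-IIIT-sized classes (empty placeholder files).
with tempfile.TemporaryDirectory() as tmp:
    src = Path(tmp) / "images"
    for name, n in {"Saint_Bernard": 200, "Samoyed": 197}.items():
        (src / name).mkdir(parents=True)
        for i in range(n):
            (src / name / f"{name}_{i}.jpg").write_bytes(b"")
    sizes = split_into_folders(src, Path(tmp) / "split")
    n_val_samoyed = len(list((Path(tmp) / "split" / "val" / "Samoyed").iterdir()))
    print(sizes)  # {'Saint_Bernard': (180, 20), 'Samoyed': (178, 19)}
```

Seeding the shuffle keeps the split reproducible, so retraining and re-testing see the same held-out images.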

6.1 LeNet

%run train.py --dataset Oxford-IIIT --model LeNet --crop_size 32 --pretrained False

- Dataset 'Oxford-IIIT' contains 30 catagories: ['Abyssinian', 'American_Bulldog', 'American_Pit_Bull_Terrier', 'Basset_Hound', 'Beagle', 'Bengal', 'Birman', 'Bombay', 'Boxer', 'British_Shorthair', 'Chihuahua', 'Egyptian_Mau', 'English_Cocker_Spaniel', 'English_Setter', 'German_Shorthaired', 'Great_Pyrenees', 'Havanese', 'Japanese_Chin', 'Keeshond', 'Leonberger', 'Maine_Coon', 'Miniature_Pinscher', 'Newfoundland', 'Persian', 'Pomeranian', 'Pug', 'Ragdoll', 'Russian_Blue', 'Saint_Bernard', 'Samoyed']

- [Abyssinian] train/test dataset split [200/200] with ratio 0.1

- [American_Bulldog] train/test dataset split [200/200] with ratio 0.1

- [American_Pit_Bull_Terrier] train/test dataset split [200/200] with ratio 0.1

- [Basset_Hound] train/test dataset split [200/200] with ratio 0.1

- [Beagle] train/test dataset split [200/200] with ratio 0.1

- [Bengal] train/test dataset split [200/200] with ratio 0.1

- [Birman] train/test dataset split [200/200] with ratio 0.1

- [Bombay] train/test dataset split [200/200] with ratio 0.1

- [Boxer] train/test dataset split [200/200] with ratio 0.1

- [British_Shorthair] train/test dataset split [200/200] with ratio 0.1

- [Chihuahua] train/test dataset split [200/200] with ratio 0.1

- [Egyptian_Mau] train/test dataset split [200/200] with ratio 0.1

- [English_Cocker_Spaniel] train/test dataset split [200/200] with ratio 0.1

- [English_Setter] train/test dataset split [200/200] with ratio 0.1

- [German_Shorthaired] train/test dataset split [200/200] with ratio 0.1

- [Great_Pyrenees] train/test dataset split [200/200] with ratio 0.1

- [Havanese] train/test dataset split [200/200] with ratio 0.1

- [Japanese_Chin] train/test dataset split [200/200] with ratio 0.1

- [Keeshond] train/test dataset split [200/200] with ratio 0.1

- [Leonberger] train/test dataset split [200/200] with ratio 0.1

- [Maine_Coon] train/test dataset split [200/200] with ratio 0.1

- [Miniature_Pinscher] train/test dataset split [200/200] with ratio 0.1

- [Newfoundland] train/test dataset split [200/200] with ratio 0.1

- [Persian] train/test dataset split [200/200] with ratio 0.1

- [Pomeranian] train/test dataset split [200/200] with ratio 0.1

- [Pug] train/test dataset split [200/200] with ratio 0.1

- [Ragdoll] train/test dataset split [200/200] with ratio 0.1

- [Russian_Blue] train/test dataset split [200/200] with ratio 0.1

- [Saint_Bernard] train/test dataset split [200/200] with ratio 0.1

- [Samoyed] train/test dataset split [197/197] with ratio 0.1

- Loading the dataset...

- Training on: Oxford-IIIT

- Using model: LeNet

- Using the specified args:

- Namespace(batch_size=32, crop_size=32, cuda=True, dataset='Oxford-IIIT', dataset_root='C:\\Users\\sbzy\\Documents/GitHub/dl_algorithm/datasets\\OXFORD-IIIT', epoch_size=20, lr=0.0002, model='LeNet', num_workers=0, photo_folder='results/', pretrained=False, save_folder='weights/', shuffle=False)

- Epoch 1: 100%[**************************************************->]3.339 Running time: 23.284

- train loss: 3.393844, acc: 4.687% (253/5398)

- Epoch 2: 100%[**************************************************->]3.468 Running time: 23.144

- train loss: 3.328217, acc: 7.206% (389/5398)

- Epoch 3: 100%[**************************************************->]3.146 Running time: 22.369

- train loss: 3.261986, acc: 8.392% (453/5398)

- Epoch 4: 100%[**************************************************->]3.165 Running time: 22.697

- train loss: 3.233198, acc: 9.207% (497/5398)

- Epoch 5: 100%[**************************************************->]3.339 Running time: 22.388

- train loss: 3.207408, acc: 9.726% (525/5398)

- Epoch 6: 100%[**************************************************->]3.039 Running time: 22.092

- train loss: 3.191449, acc: 10.467% (565/5398)

- Epoch 7: 100%[**************************************************->]3.238 Running time: 22.248

- train loss: 3.167063, acc: 11.541% (623/5398)

- Epoch 8: 100%[**************************************************->]2.879 Running time: 22.819

- train loss: 3.143465, acc: 10.986% (593/5398)

- Epoch 9: 100%[**************************************************->]3.076 Running time: 22.619

- train loss: 3.124420, acc: 12.690% (685/5398)

- Epoch 10: 100%[**************************************************->]3.200 Running time: 22.696

- train loss: 3.110711, acc: 13.097% (707/5398)

- Epoch 11: 100%[**************************************************->]3.334 Running time: 22.007

- train loss: 3.074659, acc: 13.394% (723/5398)

- Epoch 12: 100%[**************************************************->]3.115 Running time: 21.971

- train loss: 3.059965, acc: 13.690% (739/5398)

- Epoch 13: 100%[**************************************************->]2.947 Running time: 22.448

- train loss: 3.045815, acc: 14.098% (761/5398)

- Epoch 14: 100%[**************************************************->]3.011 Running time: 23.345

- train loss: 3.033115, acc: 14.672% (792/5398)

- Epoch 15: 100%[**************************************************->]3.315 Running time: 22.555

- train loss: 3.008330, acc: 14.302% (772/5398)

- Epoch 16: 100%[**************************************************->]3.115 Running time: 22.701

- train loss: 3.005380, acc: 15.098% (815/5398)

- Epoch 17: 100%[**************************************************->]2.642 Running time: 22.415

- train loss: 2.995956, acc: 15.320% (827/5398)

- Epoch 18: 100%[**************************************************->]3.228 Running time: 22.398

- train loss: 2.991024, acc: 15.635% (844/5398)

- Epoch 19: 100%[**************************************************->]2.689 Running time: 23.170

- train loss: 2.966305, acc: 15.821% (854/5398)

- Epoch 20: 100%[**************************************************->]2.850 Running time: 22.989

- train loss: 2.970545, acc: 15.858% (856/5398)

%run test.py --dataset Oxford-IIIT --model LeNet --crop_size 32 --pretrained False

- Finish loading model: weights/Oxford-IIIT_LeNet.pth

- Training on: Oxford-IIIT

- Using model: LeNet

- Using the specified args:

- Namespace(batch_size=32, crop_size=32, cuda=True, dataset='Oxford-IIIT', dataset_root='C:\\Users\\sbzy\\Documents/GitHub/dl_algorithm/datasets\\OXFORD-IIIT', f=None, model='LeNet', num_workers=0, pretrained=True, weight='weights/Oxford-IIIT_LeNet.pth')

- test loss: 3.162863, acc: 18.531% (111/599)

- accuracy of Abyssinian : 20.000% (4/20)

- accuracy of American_Bulldog : 15.000% (3/20)

- accuracy of American_Pit_Bull_Terrier : 0.000% (0/20)

- accuracy of Basset_Hound : 20.000% (4/20)

- accuracy of Beagle : 20.000% (4/20)

- accuracy of Bengal : 45.000% (9/20)

- accuracy of Birman : 25.000% (5/20)

- accuracy of Bombay : 70.000% (14/20)

- accuracy of Boxer : 5.000% (1/20)

- accuracy of British_Shorthair : 15.000% (3/20)

- accuracy of Chihuahua : 5.000% (1/20)

- accuracy of Egyptian_Mau : 20.000% (4/20)

- accuracy of English_Cocker_Spaniel : 5.000% (1/20)

- accuracy of English_Setter : 5.000% (1/20)

- accuracy of German_Shorthaired : 5.000% (1/20)

- accuracy of Great_Pyrenees : 15.000% (3/20)

- accuracy of Havanese : 10.000% (2/20)

- accuracy of Japanese_Chin : 25.000% (5/20)

- accuracy of Keeshond : 20.000% (4/20)

- accuracy of Leonberger : 10.000% (2/20)

- accuracy of Maine_Coon : 5.000% (1/20)

- accuracy of Miniature_Pinscher : 10.000% (2/20)

- accuracy of Newfoundland : 30.000% (6/20)

- accuracy of Persian : 30.000% (6/20)

- accuracy of Pomeranian : 5.000% (1/20)

- accuracy of Pug : 5.000% (1/20)

- accuracy of Ragdoll : 30.000% (6/20)

- accuracy of Russian_Blue : 20.000% (4/20)

- accuracy of Saint_Bernard : 25.000% (5/20)

- accuracy of Samoyed : 42.105% (8/19)

6.2 AlexNet

%run train.py --dataset Oxford-IIIT --model AlexNet --crop_size 224 --pretrained True

- Loading the dataset...

- Training on: Oxford-IIIT

- Using model: AlexNet

- Using the specified args:

- Namespace(batch_size=32, crop_size=224, cuda=True, dataset='Oxford-IIIT', dataset_root='C:\\Users\\sbzy\\Documents/GitHub/dl_algorithm/datasets\\OXFORD-IIIT', epoch_size=20, lr=0.0002, model='AlexNet', num_workers=0, photo_folder='results/', pretrained=True, save_folder='weights/', shuffle=False)

- Epoch 1: 100%[**************************************************->]1.908 Running time: 33.767

- train loss: 2.572819, acc: 24.954% (1347/5398)

- Epoch 2: 100%[**************************************************->]1.467 Running time: 33.638

- train loss: 1.731733, acc: 46.184% (2493/5398)

- Epoch 3: 100%[**************************************************->]1.703 Running time: 34.106

- train loss: 1.502371, acc: 52.538% (2836/5398)

- Epoch 4: 100%[**************************************************->]1.473 Running time: 34.103

- train loss: 1.382494, acc: 56.836% (3068/5398)

- Epoch 5: 100%[**************************************************->]1.035 Running time: 35.174

- train loss: 1.279476, acc: 60.356% (3258/5398)

- Epoch 6: 100%[**************************************************->]1.437 Running time: 33.971

- train loss: 1.243415, acc: 61.041% (3295/5398)

- Epoch 7: 100%[**************************************************->]1.087 Running time: 33.781

- train loss: 1.197725, acc: 63.079% (3405/5398)

- Epoch 8: 100%[**************************************************->]1.887 Running time: 34.815

- train loss: 1.144729, acc: 63.820% (3445/5398)

- Epoch 9: 100%[**************************************************->]1.252 Running time: 34.342

- train loss: 1.133453, acc: 65.228% (3521/5398)

- Epoch 10: 100%[**************************************************->]0.813 Running time: 34.848

- train loss: 1.131729, acc: 64.635% (3489/5398)

- Epoch 11: 100%[**************************************************->]1.486 Running time: 35.012

- train loss: 1.074429, acc: 65.580% (3540/5398)

- Epoch 12: 100%[**************************************************->]0.711 Running time: 33.890

- train loss: 1.049976, acc: 67.284% (3632/5398)

- Epoch 13: 100%[**************************************************->]1.421 Running time: 33.940

- train loss: 1.020311, acc: 67.506% (3644/5398)

- Epoch 14: 100%[**************************************************->]1.146 Running time: 33.795

- train loss: 1.022906, acc: 67.951% (3668/5398)

- Epoch 15: 100%[**************************************************->]0.785 Running time: 33.609

- train loss: 0.970554, acc: 69.248% (3738/5398)

- Epoch 16: 100%[**************************************************->]1.075 Running time: 33.834

- train loss: 0.986888, acc: 69.192% (3735/5398)

- Epoch 17: 100%[**************************************************->]0.906 Running time: 32.842

- train loss: 0.972849, acc: 69.804% (3768/5398)

- Epoch 18: 100%[**************************************************->]1.277 Running time: 32.982

- train loss: 0.974976, acc: 69.248% (3738/5398)

- Epoch 19: 100%[**************************************************->]0.797 Running time: 32.309

- train loss: 0.979732, acc: 68.989% (3724/5398)

- Epoch 20: 100%[**************************************************->]1.116 Running time: 33.062

- train loss: 0.944694, acc: 70.471% (3804/5398)

%run test.py --dataset Oxford-IIIT --model AlexNet --crop_size 224 --pretrained True

- Finish loading model: weights/Oxford-IIIT_AlexNet.pth

- Training on: Oxford-IIIT

- Using model: AlexNet

- Using the specified args:

- Namespace(batch_size=32, crop_size=224, cuda=True, dataset='Oxford-IIIT', dataset_root='C:\\Users\\sbzy\\Documents/GitHub/dl_algorithm/datasets\\OXFORD-IIIT', f=None, model='AlexNet', num_workers=0, pretrained=True, weight='weights/Oxford-IIIT_AlexNet.pth')

- test loss: 1.047209, acc: 68.781% (412/599)

- accuracy of Abyssinian : 75.000% (15/20)

- accuracy of American_Bulldog : 80.000% (16/20)

- accuracy of American_Pit_Bull_Terrier : 40.000% (8/20)

- accuracy of Basset_Hound : 55.000% (11/20)

- accuracy of Beagle : 65.000% (13/20)

- accuracy of Bengal : 65.000% (13/20)

- accuracy of Birman : 80.000% (16/20)

- accuracy of Bombay : 80.000% (16/20)

- accuracy of Boxer : 40.000% (8/20)

- accuracy of British_Shorthair : 55.000% (11/20)

- accuracy of Chihuahua : 40.000% (8/20)

- accuracy of Egyptian_Mau : 85.000% (17/20)

- accuracy of English_Cocker_Spaniel : 50.000% (10/20)

- accuracy of English_Setter : 70.000% (14/20)

- accuracy of German_Shorthaired : 75.000% (15/20)

- accuracy of Great_Pyrenees : 70.000% (14/20)

- accuracy of Havanese : 75.000% (15/20)

- accuracy of Japanese_Chin : 75.000% (15/20)

- accuracy of Keeshond : 70.000% (14/20)

- accuracy of Leonberger : 90.000% (18/20)

- accuracy of Maine_Coon : 60.000% (12/20)

- accuracy of Miniature_Pinscher : 75.000% (15/20)

- accuracy of Newfoundland : 85.000% (17/20)

- accuracy of Persian : 60.000% (12/20)

- accuracy of Pomeranian : 85.000% (17/20)

- accuracy of Pug : 60.000% (12/20)

- accuracy of Ragdoll : 75.000% (15/20)

- accuracy of Russian_Blue : 70.000% (14/20)

- accuracy of Saint_Bernard : 80.000% (16/20)

- accuracy of Samoyed : 78.947% (15/19)

6.3 VGGNet

%run train.py --dataset Oxford-IIIT --model VGGNet --crop_size 224 --pretrained True

- Loading the dataset...

- Training on: Oxford-IIIT

- Using model: VGGNet

- Using the specified args:

- Namespace(batch_size=32, crop_size=224, cuda=True, dataset='Oxford-IIIT', dataset_root='C:\\Users\\sbzy\\Documents/GitHub/dl_algorithm/datasets\\OXFORD-IIIT', epoch_size=20, lr=0.0002, model='VGGNet', num_workers=0, photo_folder='results/', pretrained=True, save_folder='weights/', shuffle=False)

- Epoch 1: 100%[**************************************************->]1.641 Running time: 70.449

- train loss: 2.196864, acc: 35.217% (1901/5398)

- Epoch 2: 100%[**************************************************->]1.456 Running time: 69.130

- train loss: 1.290058, acc: 59.596% (3217/5398)

- Epoch 3: 100%[**************************************************->]1.503 Running time: 71.794

- train loss: 1.078245, acc: 65.858% (3555/5398)

- Epoch 4: 100%[**************************************************->]0.894 Running time: 70.677

- train loss: 0.977357, acc: 69.248% (3738/5398)

- Epoch 5: 100%[**************************************************->]0.831 Running time: 71.447

- train loss: 0.889816, acc: 71.360% (3852/5398)

- Epoch 6: 100%[**************************************************->]1.159 Running time: 69.928

- train loss: 0.807542, acc: 74.509% (4022/5398)

- Epoch 7: 100%[**************************************************->]0.808 Running time: 70.562

- train loss: 0.800703, acc: 74.657% (4030/5398)

- Epoch 8: 100%[**************************************************->]0.355 Running time: 70.667

- train loss: 0.766814, acc: 75.695% (4086/5398)

- Epoch 9: 100%[**************************************************->]0.715 Running time: 70.067

- train loss: 0.737668, acc: 76.028% (4104/5398)

- Epoch 10: 100%[**************************************************->]0.830 Running time: 70.479

- train loss: 0.722530, acc: 76.658% (4138/5398)

- Epoch 11: 100%[**************************************************->]0.550 Running time: 71.665

- train loss: 0.693594, acc: 78.066% (4214/5398)

- Epoch 12: 100%[**************************************************->]0.717 Running time: 70.093

- train loss: 0.695827, acc: 77.418% (4179/5398)

- Epoch 13: 100%[**************************************************->]0.486 Running time: 72.732

- train loss: 0.673381, acc: 78.010% (4211/5398)

- Epoch 14: 100%[**************************************************->]0.723 Running time: 70.313

- train loss: 0.630606, acc: 79.807% (4308/5398)

- Epoch 15: 100%[**************************************************->]0.416 Running time: 70.576

- train loss: 0.650497, acc: 79.604% (4297/5398)

- Epoch 16: 100%[**************************************************->]0.426 Running time: 71.687

- train loss: 0.630823, acc: 79.659% (4300/5398)

- Epoch 17: 100%[**************************************************->]0.470 Running time: 71.292

- train loss: 0.607875, acc: 80.382% (4339/5398)

- Epoch 18: 100%[**************************************************->]0.846 Running time: 71.260

- train loss: 0.614151, acc: 80.345% (4337/5398)

- Epoch 19: 100%[**************************************************->]0.393 Running time: 71.111

- train loss: 0.613701, acc: 80.493% (4345/5398)

- Epoch 20: 100%[**************************************************->]0.636 Running time: 71.137

- train loss: 0.588036, acc: 81.271% (4387/5398)

%run test.py --dataset Oxford-IIIT --model VGGNet --crop_size 224 --pretrained True

- Finish loading model: weights/Oxford-IIIT_VGGNet.pth

- Training on: Oxford-IIIT

- Using model: VGGNet

- Using the specified args:

- Namespace(batch_size=32, crop_size=224, cuda=True, dataset='Oxford-IIIT', dataset_root='C:\\Users\\sbzy\\Documents/GitHub/dl_algorithm/datasets\\OXFORD-IIIT', f=None, model='VGGNet', num_workers=0, pretrained=True, weight='weights/Oxford-IIIT_VGGNet.pth')

- test loss: 0.566790, acc: 81.636% (489/599)

- accuracy of Abyssinian : 75.000% (15/20)

- accuracy of American_Bulldog : 90.000% (18/20)

- accuracy of American_Pit_Bull_Terrier : 70.000% (14/20)

- accuracy of Basset_Hound : 85.000% (17/20)

- accuracy of Beagle : 75.000% (15/20)

- accuracy of Bengal : 80.000% (16/20)

- accuracy of Birman : 85.000% (17/20)

- accuracy of Bombay : 90.000% (18/20)

- accuracy of Boxer : 85.000% (17/20)

- accuracy of British_Shorthair : 60.000% (12/20)

- accuracy of Chihuahua : 70.000% (14/20)

- accuracy of Egyptian_Mau : 95.000% (19/20)

- accuracy of English_Cocker_Spaniel : 75.000% (15/20)

- accuracy of English_Setter : 95.000% (19/20)

- accuracy of German_Shorthaired : 85.000% (17/20)

- accuracy of Great_Pyrenees : 85.000% (17/20)

- accuracy of Havanese : 90.000% (18/20)

- accuracy of Japanese_Chin : 95.000% (19/20)

- accuracy of Keeshond : 100.000% (20/20)

- accuracy of Leonberger : 70.000% (14/20)

- accuracy of Maine_Coon : 70.000% (14/20)

- accuracy of Miniature_Pinscher : 80.000% (16/20)

- accuracy of Newfoundland : 80.000% (16/20)

- accuracy of Persian : 90.000% (18/20)

- accuracy of Pomeranian : 85.000% (17/20)

- accuracy of Pug : 85.000% (17/20)

- accuracy of Ragdoll : 75.000% (15/20)

- accuracy of Russian_Blue : 85.000% (17/20)

- accuracy of Saint_Bernard : 85.000% (17/20)

- accuracy of Samoyed : 57.895% (11/19)
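Comparing the AlexNet and VGGNet test reports by hand is tedious. The plain-Python sketch below does three things: it parses a report, checks that the per-class counts add up to the overall `(correct/total)` figure, and ranks classes by how much their accuracy changed between two runs. The regular expressions assume the exact line format printed above, and the function names are hypothetical.

```python
import re

# Per-class lines, e.g. "- accuracy of Boxer : 85.000% (17/20)"
CLASS_LINE = re.compile(r"accuracy of (\S+) : [\d.]+% \((\d+)/(\d+)\)")
# Summary line, e.g. "- test loss: 0.566790, acc: 81.636% (489/599)"
SUMMARY_LINE = re.compile(r"test loss: ([\d.]+), acc: [\d.]+% \((\d+)/(\d+)\)")


def parse_test_log(text):
    """Return (per_class, overall); per_class maps name -> (correct, total)."""
    per_class = {name: (int(c), int(t)) for name, c, t in CLASS_LINE.findall(text)}
    m = SUMMARY_LINE.search(text)
    overall = (int(m.group(2)), int(m.group(3))) if m else None
    return per_class, overall


def consistent(per_class, overall):
    """True if the per-class counts add up to the overall (correct, total) pair."""
    correct = sum(c for c, _ in per_class.values())
    total = sum(t for _, t in per_class.values())
    return overall == (correct, total)


def compare(run_a, run_b):
    """Per-class accuracy change from run_a to run_b, largest gain first."""
    diffs = {}
    for name in run_a.keys() & run_b.keys():
        (ca, ta), (cb, tb) = run_a[name], run_b[name]
        diffs[name] = cb / tb - ca / ta
    return sorted(diffs.items(), key=lambda kv: (-kv[1], kv[0]))
```

Applied to the two complete reports above, the per-class counts add up to exactly 412/599 (AlexNet) and 489/599 (VGGNet). VGGNet scores lower than AlexNet on only three breeds: Leonberger, Newfoundland and Samoyed. Boxer shows the largest gain, from 8/20 to 17/20.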

Section 7 CIFAR-10 Dataset

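- CIFAR-10 is a labeled subset of the 80 Million Tiny Images collection (presented online as the Visual Dictionary, "Teaching computers to recognize objects"). It was collected by three researchers at the University of Toronto (Alex Krizhevsky, Vinod Nair and Geoffrey Hinton), and the images were gathered mainly through Google and other web search engines

- CIFAR-10 contains 60000 RGB color images of size 32×32, split into 10 classes of 6000 images each. The training set has 50000 images and the test set has 10000

- In the early days of deep learning, before ImageNet became the dominant benchmark, CIFAR-10 was a standard benchmark for comparing models. Its 32×32 images, however, have become too small for today's rapidly evolving network architectures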

7.1 LeNet

%run train.py --dataset CIFAR-10 --model LeNet --crop_size 32

- Loading the dataset...

- Training on: CIFAR-10

- Using model: LeNet

- Using the specified args:

- Namespace(batch_size=32, crop_size=32, cuda=True, dataset='CIFAR-10', dataset_root='C:\\Users\\sbzy\\Documents/GitHub/dl_algorithm/datasets\\CIFAR-10', epoch_size=20, lr=0.0002, model='LeNet', num_workers=0, photo_folder='results/', pretrained=True, save_folder='weights/', shuffle=False)

- Epoch 1: 100%[**************************************************->]2.067 Running time: 18.131

- train loss: 1.957531, acc: 27.192% (13596/50000)

- Epoch 2: 100%[**************************************************->]1.967 Running time: 18.142

- train loss: 1.790899, acc: 34.038% (17019/50000)

- Epoch 3: 100%[**************************************************->]1.774 Running time: 18.248

- train loss: 1.719512, acc: 36.854% (18427/50000)

- Epoch 4: 100%[**************************************************->]2.105 Running time: 18.549

- train loss: 1.663305, acc: 39.356% (19678/50000)

- Epoch 5: 100%[**************************************************->]1.800 Running time: 18.677

- train loss: 1.622431, acc: 40.874% (20437/50000)

- Epoch 6: 100%[**************************************************->]1.487 Running time: 18.907

- train loss: 1.583741, acc: 42.430% (21215/50000)

- Epoch 7: 100%[**************************************************->]1.489 Running time: 18.950

- train loss: 1.563447, acc: 43.164% (21582/50000)

- Epoch 8: 100%[**************************************************->]1.264 Running time: 19.690

- train loss: 1.530724, acc: 44.590% (22295/50000)

- Epoch 9: 100%[**************************************************->]1.803 Running time: 19.383

- train loss: 1.509350, acc: 45.668% (22834/50000)

- Epoch 10: 100%[**************************************************->]2.050 Running time: 19.377

- train loss: 1.494817, acc: 46.074% (23037/50000)

- Epoch 11: 100%[**************************************************->]1.305 Running time: 19.001

- train loss: 1.479431, acc: 46.528% (23264/50000)

- Epoch 12: 100%[**************************************************->]1.386 Running time: 19.197

- train loss: 1.464298, acc: 47.312% (23656/50000)

- Epoch 13: 100%[**************************************************->]1.400 Running time: 19.015

- train loss: 1.448093, acc: 48.020% (24010/50000)

- Epoch 14: 100%[**************************************************->]1.598 Running time: 19.190

- train loss: 1.438962, acc: 48.090% (24045/50000)

- Epoch 15: 100%[**************************************************->]1.325 Running time: 19.532

- train loss: 1.418389, acc: 49.276% (24638/50000)

- Epoch 16: 100%[**************************************************->]1.312 Running time: 19.307

- train loss: 1.413084, acc: 49.370% (24685/50000)

- Epoch 17: 100%[**************************************************->]1.144 Running time: 19.146

- train loss: 1.404172, acc: 49.750% (24875/50000)

- Epoch 18: 100%[**************************************************->]1.383 Running time: 19.099

- train loss: 1.391703, acc: 49.908% (24954/50000)

- Epoch 19: 100%[**************************************************->]1.085 Running time: 19.255

- train loss: 1.384111, acc: 50.604% (25302/50000)

- Epoch 20: 100%[**************************************************->]1.462 Running time: 19.090

- train loss: 1.372485, acc: 50.862% (25431/50000)

%run test.py --dataset CIFAR-10 --model LeNet --crop_size 32 --pretrained False

- Finish loading model: weights/CIFAR-10_LeNet.pth

- Training on: CIFAR-10

- Using model: LeNet

- Using the specified args:

- Namespace(batch_size=32, crop_size=32, cuda=True, dataset='CIFAR-10', dataset_root='C:\\Users\\sbzy\\Documents/GitHub/dl_algorithm/datasets\\CIFAR-10', f=None, model='LeNet', num_workers=0, pretrained=True, weight='weights/CIFAR-10_LeNet.pth')

- test loss: 1.377149, acc: 50.180% (5018/10000)

- accuracy of airplane : 52.100% (521/1000)

- accuracy of automobile : 51.100% (511/1000)

- accuracy of bird : 36.400% (364/1000)

- accuracy of cat : 45.200% (452/1000)

- accuracy of deer : 42.300% (423/1000)

- accuracy of dog : 35.400% (354/1000)

- accuracy of frog : 64.200% (642/1000)

- accuracy of horse : 42.800% (428/1000)

- accuracy of ship : 71.300% (713/1000)

- accuracy of truck : 61.000% (610/1000)
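The test command above passes `--pretrained False`, yet the printed Namespace reports `pretrained=True`. The scripts' source is not shown, so the cause cannot be confirmed. A frequent culprit is declaring the flag with `type=bool`: argparse then calls `bool("False")`, and any non-empty string is truthy. The sketch below reproduces that pitfall and shows an explicit string-to-bool parser as one fix. The flag `--pretrained_buggy` and the helpers are hypothetical.

```python
import argparse


def str2bool(value):
    """Convert common textual booleans; unlike bool(), 'False' maps to False."""
    text = str(value).strip().lower()
    if text in ("true", "t", "yes", "y", "1"):
        return True
    if text in ("false", "f", "no", "n", "0"):
        return False
    raise argparse.ArgumentTypeError(f"expected a boolean value, got {value!r}")


def build_parser():
    parser = argparse.ArgumentParser()
    # Pitfall: type=bool turns every non-empty string, including "False", into True
    parser.add_argument("--pretrained_buggy", type=bool, default=True)
    # Fix: parse the string explicitly
    parser.add_argument("--pretrained", type=str2bool, default=True)
    return parser
```

On Python 3.9+, `argparse.BooleanOptionalAction` is another option. It generates a `--pretrained` / `--no-pretrained` pair instead of taking a value.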

7.2 AlexNet
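LeNet is trained on CIFAR-10 at `--crop_size 32`, while AlexNet uses 224. The reason lies in each network's spatial arithmetic. Every convolution or pooling layer maps an input of size n to floor((n + 2p - k) / s) + 1, for kernel k, stride s and padding p. The sketch below applies this formula to two networks: the LeNet defined in lenet.py at the start of this chapter, and an AlexNet feature extractor laid out like torchvision's `alexnet`. The AlexNet layout is an assumption, since the chapter's own AlexNet may differ.

```python
def conv_out(size, kernel, stride=1, padding=0):
    """Spatial output size of a conv/pool layer: floor((n + 2p - k) / s) + 1."""
    return (size + 2 * padding - kernel) // stride + 1


# (kernel, stride, padding) of each spatial layer, in order.
# LeNet from lenet.py: conv 5x5 -> max pool 2x2 -> conv 5x5 -> max pool 2x2
LENET = [(5, 1, 0), (2, 2, 0), (5, 1, 0), (2, 2, 0)]
# AlexNet features, following torchvision's alexnet layout (assumption)
ALEXNET = [(11, 4, 2), (3, 2, 0), (5, 1, 2), (3, 2, 0),
           (3, 1, 1), (3, 1, 1), (3, 1, 1), (3, 2, 0)]


def final_size(size, layers):
    """Propagate an input size through the layers; None if any layer outputs < 1 pixel."""
    for k, s, p in layers:
        size = conv_out(size, k, s, p)
        if size < 1:
            return None
    return size
```

At 32×32, LeNet ends at 5×5, which matches `nn.Linear(32*5*5, 120)`. At 224 it would end at 53×53, and the flattened features would no longer fit that layer. AlexNet ends at 6×6 for a 224 input. A 32×32 input, by contrast, shrinks to nothing before AlexNet's last max pool, leaving a 0-pixel output that the forward pass cannot produce. This is one reason the CIFAR-10 images are upscaled to 224 for AlexNet.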

%run train.py --dataset CIFAR-10 --model AlexNet --crop_size 224 --pretrained True --lr 0.0002

- Loading the dataset...

- Training on: CIFAR-10

- Using model: AlexNet

- Using the specified args:

- Namespace(batch_size=32, crop_size=224, cuda=True, dataset='CIFAR-10', dataset_root='C:\\Users\\sbzy\\Documents/GitHub/dl_algorithm/datasets\\CIFAR-10', epoch_size=20, lr=0.0002, model='AlexNet', num_workers=0, photo_folder='results/', pretrained=True, save_folder='weights/', shuffle=False)

- Epoch 1: 100%[**************************************************->]0.762 Running time: 109.670

- train loss: 1.399911, acc: 49.404% (24702/50000)

- Epoch 2: 100%[**************************************************->]1.160 Running time: 109.014

- train loss: 1.205459, acc: 57.554% (28777/50000)

- Epoch 3: 100%[**************************************************->]0.319 Running time: 109.240

- train loss: 1.145497, acc: 59.440% (29720/50000)

- Epoch 4: 100%[**************************************************->]0.995 Running time: 109.151

- train loss: 1.114250, acc: 60.624% (30312/50000)

- Epoch 5: 100%[**************************************************->]1.127 Running time: 110.430

- train loss: 1.088160, acc: 61.314% (30657/50000)

- Epoch 6: 100%[**************************************************->]0.710 Running time: 111.913

- train loss: 1.066579, acc: 62.746% (31373/50000)

- Epoch 7: 100%[**************************************************->]1.191 Running time: 109.896