
Running TransFuse on your own dataset

The original repository:

https://github.com/Rayicer/TransFuse

Reproducing it is not hard. The key steps are below.

1. Data preparation

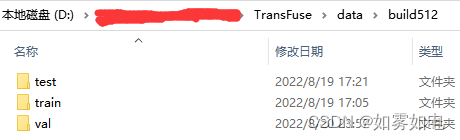

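First-level directory: the data is split into training (`train`), validation (`val`) and test (`test`) sets. If you have little data, `val` and `test` can hold the same images, but all three folders must exist. The split names are hard-coded in the code: the `test()` function in train_isic.py loops over `val` and `test`. Once you are familiar with the code, you can change this yourself.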
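If your images start out in one flat folder, you need to divide the file names into the three splits first. The sketch below shows one way to do it reproducibly. `split_names` and its fractions are hypothetical, not part of TransFuse. When `val_frac=0`, the validation list reuses the test list, which is the small-data shortcut described above.

```python
import random


def split_names(names, val_frac=0.1, test_frac=0.1, seed=0):
    """Hypothetical helper: reproducibly split file names into train/val/test.

    If val_frac is 0, 'val' reuses the 'test' list (small-data shortcut).
    """
    names = sorted(names)               # sort first so the result ignores listdir order
    random.Random(seed).shuffle(names)  # seeded shuffle -> same split on every run
    n_test = int(len(names) * test_frac)
    n_val = int(len(names) * val_frac)
    test = names[:n_test]
    val = names[n_test:n_test + n_val] if n_val > 0 else list(test)
    train = names[n_test + n_val:]
    return {'train': train, 'val': val, 'test': test}
```

You would then copy each image into `<split>/images` and its label into `<split>/labels`, for example with `shutil.copy`.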

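Also, name every folder exactly as I did (`train`, `val`, `test`, `images`, `labels`). These names are hard-coded as well. If you know the code well, you can change them.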

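Second-level directory: each split folder contains an `images` folder and a `labels` folder. utils/dataloader.py joins these two names onto the split path.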

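Third-level directory: the files inside `images` and `labels`. Note that this is binary segmentation, so the label pixel values are 0 and 255.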
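Because these folder names are hard-coded, a missing folder only shows up as a crash partway through training. The sketch below checks the layout before you start. `check_layout` is a hypothetical helper. It only checks folder names and that image and label file names match without their extensions. It does not read pixel values.

```python
from pathlib import Path

SPLITS = ('train', 'val', 'test')   # split names used by train_isic.py / test_isic.py
SUBDIRS = ('images', 'labels')      # folder names used by utils/dataloader.py


def check_layout(root):
    """Hypothetical helper: return a list of layout problems under root,
    e.g. './data/build512'. An empty list means the layout looks fine."""
    root = Path(root)
    problems = []
    for split in SPLITS:
        dirs = [root / split / sub for sub in SUBDIRS]
        missing = [str(d) for d in dirs if not d.is_dir()]
        if missing:
            problems.extend('missing folder: ' + m for m in missing)
            continue
        # compare file names without extensions, e.g. 001.jpg <-> 001.png
        img_stems = {p.stem for p in dirs[0].iterdir() if p.is_file()}
        lab_stems = {p.stem for p in dirs[1].iterdir() if p.is_file()}
        for stem in sorted(img_stems - lab_stems):
            problems.append(f'{split}: image {stem} has no label')
        for stem in sorted(lab_stems - img_stems):
            problems.append(f'{split}: label {stem} has no image')
    return problems
```

For example, `print(check_layout('./data/build512'))` should print `[]` before you run train_isic.py.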

2. Data loading

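The key to reproducing a GitHub project is really the data loading. This project originally read `.npy` files, and I simply changed it to read image files. Below is my modified utils/dataloader.py. Pay attention to the image size. The original data is 256×192 (width × height, the order `cv2.resize` expects), so I resize my 512×512 images to 256×192. Changing this fixed size is a bit of a hassle because you have to dig into the model files yourself, so I'll leave that to you.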
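Resizing a label differs from resizing an image. `cv2.resize` defaults to bilinear interpolation, which creates in-between values along the edges of a 0/255 mask. Those values become soft labels after dividing by 255. Nearest-neighbour interpolation keeps the mask binary.

The NumPy sketch below illustrates the idea. `nearest_resize` and `prepare_mask` are hypothetical helpers, and the first only approximates what `cv2.INTER_NEAREST` does rather than reproducing OpenCV exactly. The full dataloader listing follows this sketch.

```python
import numpy as np


def nearest_resize(arr, out_h, out_w):
    """Nearest-neighbour resize in plain NumPy (illustration only,
    not OpenCV's exact INTER_NEAREST implementation)."""
    in_h, in_w = arr.shape[:2]
    rows = np.arange(out_h) * in_h // out_h  # source row for each output row
    cols = np.arange(out_w) * in_w // out_w  # source column for each output column
    return arr[rows[:, None], cols[None, :]]


def prepare_mask(mask_u8, out_h=192, out_w=256):
    """0/255 uint8 label -> float32 0/1 target of shape (out_h, out_w).
    With OpenCV the same size is written dsize=(out_w, out_h) = (256, 192)."""
    small = nearest_resize(mask_u8, out_h, out_w)
    return (small > 127).astype(np.float32)
```

A 512×512 mask becomes a 192×256 array that contains only 0.0 and 1.0.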

```python
import os

from PIL import Image
import torch
import torch.utils.data as data
import torchvision.transforms as transforms
import numpy as np
import matplotlib.pyplot as plt
import albumentations as A
import cv2


class SkinDataset(data.Dataset):
    """
    dataloader for skin lesion segmentation tasks
    """
    def __init__(self, image_root, gt_root):
        imgs_full_root = os.path.join(image_root, 'images')
        labs_full_root = os.path.join(gt_root, 'labels')
        # os.listdir() order is arbitrary: sort both lists so the i-th image
        # and the i-th label belong together (requires matching file names)
        image_names = sorted(os.listdir(imgs_full_root))
        label_names = sorted(os.listdir(labs_full_root))
        self.images = [os.path.join(imgs_full_root, im_name) for im_name in image_names]
        self.gts = [os.path.join(labs_full_root, lab_name) for lab_name in label_names]
        self.size = len(self.images)
        self.img_transform = transforms.Compose([
            transforms.ToTensor(),
            transforms.Normalize([0.485, 0.456, 0.406],
                                 [0.229, 0.224, 0.225])
        ])
        self.gt_transform = transforms.Compose([
            transforms.ToTensor()])
        self.transform = A.Compose(
            [
                A.ShiftScaleRotate(shift_limit=0.15, scale_limit=0.15, rotate_limit=25, p=0.5, border_mode=0),
                A.ColorJitter(),
                A.HorizontalFlip(),
                A.VerticalFlip()
            ]
        )

    def __getitem__(self, index):
        image_idx = self.images[index]
        image = cv2.imread(image_idx)
        # OpenCV loads BGR; the ImageNet mean/std above are in RGB order
        image = cv2.cvtColor(image, cv2.COLOR_BGR2RGB)
        # cv2.resize takes (width, height): 256 wide, 192 high
        image = cv2.resize(image, (256, 192))
        gt_idx = self.gts[index]
        gt = cv2.imread(gt_idx, 0)
        # nearest-neighbour keeps the label strictly 0/255
        gt = cv2.resize(gt, (256, 192), interpolation=cv2.INTER_NEAREST)
        # 0/255 -> 0.0/1.0, float32 to match the model output dtype in the loss
        gt = (gt / 255.0).astype(np.float32)
        transformed = self.transform(image=image, mask=gt)
        image = self.img_transform(transformed['image'])
        gt = self.gt_transform(transformed['mask'])
        return image, gt

    def __len__(self):
        return self.size


def get_loader(image_root, gt_root, batchsize, shuffle=True, num_workers=4, pin_memory=True):
    dataset = SkinDataset(image_root, gt_root)
    data_loader = data.DataLoader(dataset=dataset,
                                  batch_size=batchsize,
                                  shuffle=shuffle,
                                  num_workers=num_workers,
                                  pin_memory=pin_memory)
    return data_loader


class test_dataset:
    def __init__(self, image_root, gt_root):
        imgs_full_root = os.path.join(image_root, 'images')
        labs_full_root = os.path.join(gt_root, 'labels')
        image_names = sorted(os.listdir(imgs_full_root))
        label_names = sorted(os.listdir(labs_full_root))
        self.images = [os.path.join(imgs_full_root, im_name) for im_name in image_names]
        self.gts = [os.path.join(labs_full_root, lab_name) for lab_name in label_names]
        self.transform = transforms.Compose([
            transforms.ToTensor(),
            transforms.Normalize([0.485, 0.456, 0.406],
                                 [0.229, 0.224, 0.225])
        ])
        self.gt_transform = transforms.ToTensor()
        self.size = len(self.images)
        self.index = 0

    def load_data(self):
        image_idx = self.images[self.index]
        image = cv2.imread(image_idx)
        image = cv2.cvtColor(image, cv2.COLOR_BGR2RGB)
        # default (bilinear) interpolation for the image, nearest for the label
        image = cv2.resize(image, (256, 192))
        image = self.transform(image).unsqueeze(0)
        gt_idx = self.gts[self.index]
        gt = cv2.imread(gt_idx, 0)
        gt = cv2.resize(gt, (256, 192), interpolation=cv2.INTER_NEAREST)
        gt = (gt / 255.0).astype(np.float32)
        self.index += 1
        return image, gt


if __name__ == '__main__':
    # quick visual check of augmented training pairs (the old .npy paths no longer apply)
    path = 'data/build512/train'
    os.makedirs('vis', exist_ok=True)
    tt = SkinDataset(path, path)
    for i in range(min(50, len(tt))):
        img, gt = tt[i]
        img = img.permute(1, 2, 0).numpy()  # CHW -> HWC; still normalized, so colors look off
        gt = gt.numpy()
        plt.imshow(img)
        plt.savefig('vis/' + str(i) + '.jpg')
        plt.imshow(gt[0])
        plt.savefig('vis/' + str(i) + '_gt.jpg')
```
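Sorting both `os.listdir` results only pairs images with labels correctly when the two folders contain exactly the same names. A more defensive option is to match files by name without the extension and fail loudly on a mismatch. `pair_by_stem` below is a hypothetical helper, not part of the repository.

```python
import os


def pair_by_stem(image_names, label_names):
    """Hypothetical helper: match images to labels by file name without
    extension (e.g. 'img_001.jpg' <-> 'img_001.png') instead of list order."""
    labels = {os.path.splitext(n)[0]: n for n in label_names}
    pairs, unmatched = [], []
    for name in sorted(image_names):
        stem = os.path.splitext(name)[0]
        if stem in labels:
            pairs.append((name, labels[stem]))
        else:
            unmatched.append(name)
    if unmatched:
        raise ValueError('images without labels: ' + ', '.join(unmatched))
    return pairs
```

Inside `SkinDataset.__init__` you would build `self.images` and `self.gts` from the returned pairs, in place of the two separate name lists.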

3. Training

In train_isic.py, the main changes are the arguments at the top and the data loading paths.

```python
import torch
import argparse
from datetime import datetime
from lib.TransFuse import TransFuse_S
from utils.dataloader import get_loader, test_dataset
from utils.utils import AvgMeter
import torch.nn.functional as F
import numpy as np
import matplotlib.pyplot as plt
from test_isic import mean_dice_np, mean_iou_np
import os


def structure_loss(pred, mask):
    # boundary-aware weight in [1, 6]: larger where the 31x31 neighbourhood disagrees with the pixel
    weit = 1 + 5*torch.abs(F.avg_pool2d(mask, kernel_size=31, stride=1, padding=15) - mask)
    wbce = F.binary_cross_entropy_with_logits(pred, mask, reduction='none')
    wbce = (weit*wbce).sum(dim=(2, 3)) / weit.sum(dim=(2, 3))

    pred = torch.sigmoid(pred)
    inter = ((pred * mask)*weit).sum(dim=(2, 3))
    union = ((pred + mask)*weit).sum(dim=(2, 3))
    wiou = 1 - (inter + 1)/(union - inter+1)
    return (wbce + wiou).mean()


def train(train_loader, model, optimizer, epoch, best_loss):
    model.train()
    loss_record2, loss_record3, loss_record4 = AvgMeter(), AvgMeter(), AvgMeter()
    for i, pack in enumerate(train_loader, start=1):
        # ---- data prepare ----
        images, gts = pack
        images = images.cuda()  # Variable() is no longer needed
        gts = gts.cuda()
        # ---- forward ----
        lateral_map_4, lateral_map_3, lateral_map_2 = model(images)
        # ---- loss function ----
        loss4 = structure_loss(lateral_map_4, gts)
        loss3 = structure_loss(lateral_map_3, gts)
        loss2 = structure_loss(lateral_map_2, gts)
        loss = 0.5 * loss2 + 0.3 * loss3 + 0.2 * loss4
        # ---- backward ----
        loss.backward()
        torch.nn.utils.clip_grad_norm_(model.parameters(), opt.grad_norm)
        optimizer.step()
        optimizer.zero_grad()
        # ---- recording loss ----
        loss_record2.update(loss2.data, opt.batchsize)
        loss_record3.update(loss3.data, opt.batchsize)
        loss_record4.update(loss4.data, opt.batchsize)
        # ---- train visualization ----
        if i % 20 == 0 or i == total_step:
            print('{} Epoch [{:03d}/{:03d}], Step [{:04d}/{:04d}], '
                  '[lateral-2: {:.4f}, lateral-3: {:0.4f}, lateral-4: {:0.4f}]'.
                  format(datetime.now(), epoch, opt.epoch, i, total_step,
                         loss_record2.show(), loss_record3.show(), loss_record4.show()))
            print('lr: ', optimizer.param_groups[0]['lr'])

    save_path = 'snapshots/{}/'.format(opt.train_save)
    os.makedirs(save_path, exist_ok=True)

    # validate after every epoch; keep the checkpoint with the lowest val loss
    if (epoch+1) % 1 == 0:
        meanloss = test(model, opt.test_path)
        if meanloss < best_loss:
            print('new best loss: ', meanloss)
            best_loss = meanloss
            torch.save(model.state_dict(), save_path + 'TransFuse-%d.pth' % epoch)
            print('[Saving Snapshot:]', save_path + 'TransFuse-%d.pth' % epoch)
    return best_loss


def test(model, path):
    model.eval()
    mean_loss = []
    # 'val' and 'test' are hard-coded here: both folders must exist
    for s in ['val', 'test']:
        image_root = '{}/{}'.format(path, s)
        gt_root = '{}/{}'.format(path, s)
        test_loader = test_dataset(image_root, gt_root)
        dice_bank = []
        iou_bank = []
        loss_bank = []
        acc_bank = []
        for i in range(test_loader.size):
            image, gt = test_loader.load_data()
            image = image.cuda()
            with torch.no_grad():
                _, _, res = model(image)
            loss = structure_loss(res, torch.tensor(gt).unsqueeze(0).unsqueeze(0).cuda())
            res = res.sigmoid().data.cpu().numpy().squeeze()
            gt = 1*(gt > 0.5)
            res = 1*(res > 0.5)
            dice = mean_dice_np(gt, res)
            iou = mean_iou_np(gt, res)
            acc = np.sum(res == gt) / (res.shape[0]*res.shape[1])
            loss_bank.append(loss.item())
            dice_bank.append(dice)
            iou_bank.append(iou)
            acc_bank.append(acc)
        print('{} Loss: {:.4f}, Dice: {:.4f}, IoU: {:.4f}, Acc: {:.4f}'.
              format(s, np.mean(loss_bank), np.mean(dice_bank), np.mean(iou_bank), np.mean(acc_bank)))
        mean_loss.append(np.mean(loss_bank))
    return mean_loss[0]  # the val loss drives checkpoint selection


if __name__ == '__main__':
    parser = argparse.ArgumentParser()
    parser.add_argument('--epoch', type=int, default=80, help='epoch number')
    parser.add_argument('--lr', type=float, default=7e-5, help='learning rate')
    parser.add_argument('--batchsize', type=int, default=8, help='training batch size')
    parser.add_argument('--grad_norm', type=float, default=2.0, help='gradient clipping norm')
    parser.add_argument('--train_path', type=str,
                        default='./data/build512/', help='path to train dataset')
    parser.add_argument('--test_path', type=str,
                        default='./data/build512/', help='path to test dataset')
    parser.add_argument('--train_save', type=str, default='TransFuse_S')
    parser.add_argument('--beta1', type=float, default=0.5, help='beta1 of adam optimizer')
    parser.add_argument('--beta2', type=float, default=0.999, help='beta2 of adam optimizer')
    opt = parser.parse_args()

    # ---- build models ----
    model = TransFuse_S(pretrained=True).cuda()
    params = model.parameters()
    optimizer = torch.optim.Adam(params, opt.lr, betas=(opt.beta1, opt.beta2))

    image_root = '{}/train'.format(opt.train_path)
    gt_root = '{}/train'.format(opt.train_path)

    train_loader = get_loader(image_root, gt_root, batchsize=opt.batchsize)
    total_step = len(train_loader)

    print("#"*20, "Start Training", "#"*20)

    best_loss = 1e5
    for epoch in range(1, opt.epoch + 1):
        best_loss = train(train_loader, model, optimizer, epoch, best_loss)
```
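`structure_loss` adds a weighted BCE and a weighted IoU term. The weight map `1 + 5*|avg_pool2d(mask) - mask|` is what makes the loss focus on boundaries. Pixels whose 31×31 neighbourhood agrees with them get weight 1. Pixels near an object edge get up to 6.

The NumPy re-implementation below makes the weight map easy to inspect outside the training loop. `boundary_weight` is a hypothetical name. It mirrors `F.avg_pool2d`'s default `count_include_pad=True`, so the zero padding counts toward the average. That is why foreground touching the image border is also up-weighted.

```python
import numpy as np


def boundary_weight(mask, k=31):
    """NumPy version of the weight map in structure_loss:
    1 + 5 * |avg_pool2d(mask, k, stride=1, padding=k // 2) - mask|,
    with zero padding counted in the average (count_include_pad=True)."""
    p = k // 2
    padded = np.pad(mask.astype(np.float64), p)  # zero padding on every side
    # summed-area table with a leading row/column of zeros
    sat = np.zeros((padded.shape[0] + 1, padded.shape[1] + 1))
    sat[1:, 1:] = padded.cumsum(0).cumsum(1)
    h, w = mask.shape
    # sum over each k x k window, one per output pixel
    window = (sat[k:k + h, k:k + w] - sat[:h, k:k + w]
              - sat[k:k + h, :w] + sat[:h, :w])
    avg = window / (k * k)
    return 1 + 5 * np.abs(avg - mask)
```

For a mask whose left half is 0 and right half is 1, the weight is 1 far from the edge and rises towards 1 + 5·0.5 right at the edge. The three side outputs are then combined with the fixed weights 0.5, 0.3 and 0.2, as in `train()`.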

4. Prediction and evaluation

For prediction, test_isic.py mainly changes the paths as well. Compare it with the original for the details.

```python
import torch
import torch.nn.functional as F
import numpy as np
import os, argparse
from lib.TransFuse import TransFuse_S
from utils.dataloader import test_dataset
import imageio
import cv2


def mean_iou_np(y_true, y_pred, **kwargs):
    """
    compute mean iou for binary segmentation map via numpy
    """
    axes = (0, 1)
    intersection = np.sum(np.abs(y_pred * y_true), axis=axes)
    mask_sum = np.sum(np.abs(y_true), axis=axes) + np.sum(np.abs(y_pred), axis=axes)
    union = mask_sum - intersection
    smooth = .001
    iou = (intersection + smooth) / (union + smooth)
    return iou


def mean_dice_np(y_true, y_pred, **kwargs):
    """
    compute mean dice for binary segmentation map via numpy
    """
    axes = (0, 1)  # W,H axes of each image
    intersection = np.sum(np.abs(y_pred * y_true), axis=axes)
    mask_sum = np.sum(np.abs(y_true), axis=axes) + np.sum(np.abs(y_pred), axis=axes)
    smooth = .001
    # smooth added once on each side: two empty masks give 1.0
    # (the form 2*(intersection + smooth) / (mask_sum + smooth) gave 2.0)
    dice = (2*intersection + smooth)/(mask_sum + smooth)
    return dice


if __name__ == '__main__':
    parser = argparse.ArgumentParser()
    # point this at your best checkpoint from snapshots/
    parser.add_argument('--ckpt_path', type=str, default='snapshots/TransFuse_S/TransFuse-6.pth')
    parser.add_argument('--test_path', type=str,
                        default='./data/build512', help='path to test dataset')
    parser.add_argument('--save_path', type=str, default='./predict', help='path to save inference segmentation')
    opt = parser.parse_args()

    model = TransFuse_S().cuda()
    model.load_state_dict(torch.load(opt.ckpt_path))
    model.cuda()
    model.eval()

    if opt.save_path is not None:
        os.makedirs(opt.save_path, exist_ok=True)

    print('evaluating model: ', opt.ckpt_path)

    image_root = '{}/test'.format(opt.test_path)
    gt_root = '{}/test'.format(opt.test_path)
    test_loader = test_dataset(image_root, gt_root)

    dice_bank = []
    iou_bank = []
    acc_bank = []

    for i in range(test_loader.size):
        image, gt = test_loader.load_data()
        gt[gt > 0] = 1
        image = image.cuda()

        with torch.no_grad():
            _, _, res = model(image)
        res = res.sigmoid().data.cpu().numpy().squeeze()
        res[res > 0.5] = 1
        res[res <= 0.5] = 0

        dice = mean_dice_np(gt, res)
        iou = mean_iou_np(gt, res)
        acc = np.sum(res == gt) / (res.shape[0]*res.shape[1])

        acc_bank.append(acc)
        dice_bank.append(dice)
        iou_bank.append(iou)

        if opt.save_path is not None:
            res = (255 * res).astype(np.uint8)
            gt = (255 * gt).astype(np.uint8)
            # PNG is lossless, so saved masks stay strictly 0/255 (JPEG would blur the edges)
            cv2.imwrite(opt.save_path + '/' + str(i) + '_pred.png', res)
            cv2.imwrite(opt.save_path + '/' + str(i) + '_gt.png', gt)

    print('Dice: {:.4f}, IoU: {:.4f}, Acc: {:.4f}'.
          format(np.mean(dice_bank), np.mean(iou_bank), np.mean(acc_bank)))
```
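For a single image, Dice and IoU measure the same overlap: Dice = 2|A∩B| / (|A| + |B|) and IoU = |A∩B| / |A∪B|, so Dice = 2·IoU / (1 + IoU). Averages over many images need not satisfy this exactly.

Smoothing matters for empty masks. With both ground truth and prediction empty, the form `2*(intersection + smooth)/(mask_sum + smooth)` returns 2.0, which inflates the mean Dice. The sketch below uses a hypothetical `dice_iou` helper that adds the smoothing term once on each side, so an empty pair scores 1.0.

```python
import numpy as np


def dice_iou(y_true, y_pred, smooth=1e-3):
    """Dice and IoU for one binary mask pair (values > 0 count as foreground).
    Smoothing is added once to numerator and denominator, so two empty
    masks score 1.0 for both metrics."""
    t = np.asarray(y_true) > 0
    p = np.asarray(y_pred) > 0
    inter = np.logical_and(t, p).sum()
    total = t.sum() + p.sum()  # |A| + |B|
    union = total - inter      # |A U B|
    dice = (2 * inter + smooth) / (total + smooth)
    iou = (inter + smooth) / (union + smooth)
    return float(dice), float(iou)
```

For example, two 4-pixel masks that share 2 pixels give Dice ≈ 0.5 and IoU ≈ 1/3.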

5. Results

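The predicted IoU is around 0.6, so the results still need tuning on your side. The training script uses a fixed learning rate, so a learning-rate schedule is worth adding, and so is more data augmentation.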
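One possible schedule is linear warm-up followed by cosine decay, sketched below. `warmup_cosine_lr`, `set_lr`, the 5 warm-up epochs and `min_lr=1e-6` are all illustrative assumptions, not values from the project. Only `base_lr=7e-5` matches the script's `--lr` default. PyTorch also ships ready-made schedulers such as `torch.optim.lr_scheduler.CosineAnnealingLR`.

```python
import math


def warmup_cosine_lr(epoch, total_epochs, base_lr=7e-5, warmup_epochs=5, min_lr=1e-6):
    """Hypothetical schedule: linear warm-up for warmup_epochs, then cosine
    decay from base_lr to min_lr at the last epoch. Epochs are 1-based,
    matching `for epoch in range(1, opt.epoch + 1)` in train_isic.py."""
    if epoch <= warmup_epochs:
        return base_lr * epoch / warmup_epochs
    progress = (epoch - warmup_epochs) / max(1, total_epochs - warmup_epochs)
    return min_lr + 0.5 * (base_lr - min_lr) * (1 + math.cos(math.pi * progress))


def set_lr(optimizer, lr):
    # every torch.optim optimizer keeps its learning rate per param group
    for group in optimizer.param_groups:
        group['lr'] = lr
```

In train_isic.py, you could call `set_lr(optimizer, warmup_cosine_lr(epoch, opt.epoch, opt.lr))` at the top of the epoch loop, before `train(...)`.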


Original post: https://blog.csdn.net/qq_20373723/article/details/126450108