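dumpsys meminfo in Detail

Source base: Android R

0. Preface

I analyzed dumpsys meminfo a long time ago in the post "Viewing Memory Usage on Android". Recently, though, the numbers would not add up while I was collecting memory statistics, so I went back through the source code. That earlier post only covered the rough framework and the meaning of the fields; this post follows the code to analyze the meminfo service under AMS and the dump process in detail.

1. The starting point of meminfo: dumpsys

The meminfo statistics under AMS are dumped through the dumpsys command, a binary installed in /system/bin/. Its source lives in frameworks/native/cmds/dumpsys/; see the post "dumpsys in Android" for details.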

2. meminfo services -- MemBinder

Every service that can be dumped is registered with ServiceManager. meminfo, for example, is added in setSystemProcess() of frameworks/base/services/core/java/com/android/server/am/ActivityManagerService.java:

- frameworks/base/services/core/java/com/android/server/am/AMS.java

- public void setSystemProcess() {

- try {

- ...

- ServiceManager.addService("meminfo", new MemBinder(this), /* allowIsolated= */ false,

- DUMP_FLAG_PRIORITY_HIGH);

- ...

- }

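Note:

From step 7 of the post "dumpsys in Android" we know that a service (meminfo, for example) that should be dumpable through the dumpsys command must specify a dump flag. Here meminfo passes DUMP_FLAG_PRIORITY_HIGH as its dump flag when calling addService.

Next, MemBinder itself: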

- frameworks/base/services/core/java/com/android/server/am/AMS.java

- static class MemBinder extends Binder {

- ActivityManagerService mActivityManagerService;

- private final PriorityDump.PriorityDumper mPriorityDumper =

- new PriorityDump.PriorityDumper() {

- @Override

- public void dumpHigh(FileDescriptor fd, PrintWriter pw, String[] args,

- boolean asProto) {

- dump(fd, pw, new String[] {"-a"}, asProto);

- }

- @Override

- public void dump(FileDescriptor fd, PrintWriter pw, String[] args, boolean asProto) {

- mActivityManagerService.dumpApplicationMemoryUsage(

- fd, pw, " ", args, false, null, asProto);

- }

- };

- MemBinder(ActivityManagerService activityManagerService) {

- mActivityManagerService = activityManagerService;

- }

- @Override

- protected void dump(FileDescriptor fd, PrintWriter pw, String[] args) {

- try {

- mActivityManagerService.mOomAdjuster.mCachedAppOptimizer.enableFreezer(false);

- if (!DumpUtils.checkDumpAndUsageStatsPermission(mActivityManagerService.mContext,

- "meminfo", pw)) return;

- PriorityDump.dump(mPriorityDumper, fd, pw, args);

- } finally {

- mActivityManagerService.mOomAdjuster.mCachedAppOptimizer.enableFreezer(true);

- }

- }

- }

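It defines two member variables:

- mActivityManagerService: holds the AMS object;

- mPriorityDumper: its dump() method forwards to AMS.dumpApplicationMemoryUsage();

The real work happens in dumpApplicationMemoryUsage() of AMS.

3. dumpsys meminfo -h

Once inside dumpApplicationMemoryUsage(), the first thing you see is a while loop that parses the arguments passed to dumpsys meminfo. Start with -h, which lists the other options dumpsys meminfo accepts.

I will not paste the code here; this is the terminal output: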

- meminfo dump options: [-a] [-d] [-c] [-s] [--oom] [process]

- -a: include all available information for each process.

- -d: include dalvik details.

- -c: dump in a compact machine-parseable representation.

- -s: dump only summary of application memory usage.

- -S: dump also SwapPss.

- --oom: only show processes organized by oom adj.

- --local: only collect details locally, don't call process.

- --package: interpret process arg as package, dumping all

- processes that have loaded that package.

- --checkin: dump data for a checkin

- --proto: dump data to proto

- If [process] is specified it can be the name or

- pid of a specific process to dump.

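- -a: include all available information for each process; among other things it turns on the Dalvik details that -d asks for;

- -d: include Dalvik details;

- -c: dump in a compact, machine-parseable format;

- -s: dump only a summary of application memory usage;

- -S: also dump SwapPss;

- --oom: dump only the RSS/PSS figures organized by OOM adj;

- --local: collect details locally only, without calling into the process; it takes effect only when -a or -s is given;

- --package: dump every process that has loaded the given package;

- --checkin: dump data for a checkin;

- --proto: dump the data as proto;

- dumpsys meminfo can be followed by [process], which may be a process name or a pid;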
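As a rough illustration of the option loop described above, here is a minimal Java sketch. It is not the AMS code: the class MeminfoArgsSketch and its field names are hypothetical, and each flag is simply recorded as a boolean without modeling how the options interact.

```java
/** Hypothetical holder for the parsed options; field names are illustrative only. */
final class MeminfoArgsSketch {
    boolean all, dalvik, compact, summaryOnly, swapPss;
    boolean oomOnly, local, packages, checkin, proto, help;
    String process; // first non-option argument (process name or pid), or null

    static MeminfoArgsSketch parse(String[] args) {
        MeminfoArgsSketch o = new MeminfoArgsSketch();
        int i = 0;
        // Consume leading options; stop at the first argument not starting with '-'.
        while (i < args.length && args[i].startsWith("-")) {
            switch (args[i]) {
                case "-a": o.all = true; break;
                case "-d": o.dalvik = true; break;
                case "-c": o.compact = true; break;
                case "-s": o.summaryOnly = true; break;
                case "-S": o.swapPss = true; break;
                case "--oom": o.oomOnly = true; break;
                case "--local": o.local = true; break;
                case "--package": o.packages = true; break;
                case "--checkin": o.checkin = true; break;
                case "--proto": o.proto = true; break;
                case "-h": o.help = true; break;
                default: throw new IllegalArgumentException("Unknown option: " + args[i]);
            }
            i++;
        }
        if (i < args.length) {
            o.process = args[i];
        }
        return o;
    }

    public static void main(String[] argv) {
        MeminfoArgsSketch o = parse(new String[] {"-s", "--package", "com.android.launcher3"});
        System.out.println(o.summaryOnly + " " + o.packages + " " + o.process);
    }
}
```

The first argument that does not start with '-' is treated as [process], which matches the check on args[start].charAt(0) that the process collection step performs later.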

4. collectProcesses()

Once the command-line arguments are parsed, collectProcesses() is called to determine which packages or pids match the command:

- frameworks/base/services/core/java/com/android/server/am/ProcessList.java

- ArrayList<ProcessRecord> collectProcessesLocked(int start, boolean allPkgs, String[] args) {

- ArrayList<ProcessRecord> procs;

- if (args != null && args.length > start

- && args[start].charAt(0) != '-') {

- procs = new ArrayList<ProcessRecord>();

- int pid = -1;

- try {

- pid = Integer.parseInt(args[start]);

- } catch (NumberFormatException e) {

- }

- for (int i = mLruProcesses.size() - 1; i >= 0; i--) {

- ProcessRecord proc = mLruProcesses.get(i);

- if (proc.pid > 0 && proc.pid == pid) {

- procs.add(proc);

- } else if (allPkgs && proc.pkgList != null

- && proc.pkgList.containsKey(args[start])) {

- procs.add(proc);

- } else if (proc.processName.equals(args[start])) {

- procs.add(proc);

- }

- }

- if (procs.size() <= 0) {

- return null;

- }

- } else {

- procs = new ArrayList<ProcessRecord>(mLruProcesses);

- }

- return procs;

- }

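The code is fairly clear:

- if the command line specifies a pid, the process with that pid is collected;

- if a package name (with --package) or a process name is given, the matching processes are collected;

- if nothing is given, all LRU processes are collected;

Note that in the first two cases the collected procs may be empty, because the argument may be bogus or may not match anything.

In that case the terminal prints No process found:

shift:/ # dumpsys meminfo 12345

No process found for: 12345

5. dumpApplicationMemoryUsage()

This function is very long, so only the key parts are examined here.

5.1 Key variables: thread, pid, oomAdj, mi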

- frameworks/base/services/core/java/com/android/server/am/AMS.java

- private final void dumpApplicationMemoryUsage() {

- ...

- for (int i = numProcs - 1; i >= 0; i--) {

- final ProcessRecord r = procs.get(i);

- final IApplicationThread thread;

- final int pid;

- final int oomAdj;

- final boolean hasActivities;

- synchronized (this) {

- thread = r.thread;

- pid = r.pid;

- oomAdj = r.getSetAdjWithServices();

- hasActivities = r.hasActivities();

- }

- if (thread != null) {

- if (mi == null) {

- mi = new Debug.MemoryInfo();

- }

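Several initializations at the start deserve attention:

- thread: the IApplicationThread of the process. When --local is not set (the usual case), the app-side data is read through this thread and passed back to AMS through a pipe.

- oomAdj: an important piece of data in dumpsys meminfo, used by LMKD; see the post "An Analysis of the adj Score Algorithm" for details;

- pid: the pid of the current application, shown alongside the data in dumpsys meminfo;

- mi: the core of the memory data, covered in detail in section 5.2;

5.2 Debug.MemoryInfo()

Source: frameworks/base/core/java/android/os/Debug.java

It exposes many native interfaces: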

- public static native long getNativeHeapSize();

- public static native long getNativeHeapAllocatedSize();

- public static native long getNativeHeapFreeSize();

- public static native void getMemoryInfo(MemoryInfo memoryInfo);

- public static native boolean getMemoryInfo(int pid, MemoryInfo memoryInfo);

- public static native long getPss();

- public static native long getPss(int pid, long[] outUssSwapPssRss, long[] outMemtrack);

5.3 Debug.getMemoryInfo()

Continuing with the code from section 5.1:

- if (opts.dumpDetails || (!brief && !opts.oomOnly)) {

- reportType = ProcessStats.ADD_PSS_EXTERNAL_SLOW;

- startTime = SystemClock.currentThreadTimeMillis();

- if (!Debug.getMemoryInfo(pid, mi)) {

- continue;

- }

- endTime = SystemClock.currentThreadTimeMillis();

- hasSwapPss = mi.hasSwappedOutPss;

- } else {

- reportType = ProcessStats.ADD_PSS_EXTERNAL;

- startTime = SystemClock.currentThreadTimeMillis();

- long pss = Debug.getPss(pid, tmpLong, null);

- if (pss == 0) {

- continue;

- }

- mi.dalvikPss = (int) pss;

- endTime = SystemClock.currentThreadTimeMillis();

- mi.dalvikPrivateDirty = (int) tmpLong[0];

- mi.dalvikRss = (int) tmpLong[2];

- }

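- opts.dumpDetails is used to fetch detailed information about an application. It is normally set to true when a pid or package is specified, or when the -s option is given directly;

- brief defaults to false and is passed in from MemBinder;

- opts.oomOnly is set to true only when --oom is given;

When --oom is not given, the code always takes the if branch and calls Debug.getMemoryInfo() to fetch the detailed memory information of the process: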

- frameworks/base/core/jni/android_os_Debug.cpp

- static jboolean android_os_Debug_getDirtyPagesPid(JNIEnv *env, jobject clazz,

- jint pid, jobject object)

- {

- bool foundSwapPss;

- stats_t stats[_NUM_HEAP];

- memset(&stats, 0, sizeof(stats));

- if (!load_maps(pid, stats, &foundSwapPss)) {

- return JNI_FALSE;

- }

- struct graphics_memory_pss graphics_mem;

- if (read_memtrack_memory(pid, &graphics_mem) == 0) {

- stats[HEAP_GRAPHICS].pss = graphics_mem.graphics;

- ...

- }

- for (int i=_NUM_CORE_HEAP; i<_NUM_EXCLUSIVE_HEAP; i++) {

- stats[HEAP_UNKNOWN].pss += stats[i].pss;

- ...

- }

- for (int i=0; i<_NUM_CORE_HEAP; i++) {

- env->SetIntField(object, stat_fields[i].pss_field, stats[i].pss);

- ...

- }

- env->SetBooleanField(object, hasSwappedOutPss_field, foundSwapPss);

- ...

- for (int i=_NUM_CORE_HEAP; i<_NUM_HEAP; i++) {

- ...

- }

- env->ReleasePrimitiveArrayCritical(otherIntArray, otherArray, 0);

- return JNI_TRUE;

- }

- load_maps() reads the process's memory information from /proc/pid/smaps;

- read_memtrack_memory() obtains the graphics memory information through libmemtrack.so;

- env->SetIntField() stores the values of the UNKNOWN, dalvik and heap (native) groups into MemoryInfo;

- otherArray carries the remaining values into MemoryInfo.otherStats[];

5.3.1 load_maps()

- static bool load_maps(int pid, stats_t* stats, bool* foundSwapPss)

- {

- *foundSwapPss = false;

- uint64_t prev_end = 0;

- int prev_heap = HEAP_UNKNOWN;

- std::string smaps_path = base::StringPrintf("/proc/%d/smaps", pid);

- auto vma_scan = [&](const meminfo::Vma& vma) {

- ...

- };

- return meminfo::ForEachVmaFromFile(smaps_path, vma_scan);

- }

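meminfo::ForEachVmaFromFile() is a libmeminfo.so interface; its code lives under /system/memory/libmeminfo/.

The call flow is summarized in the following diagram:

This mainly reads the process's smaps node. The virtual address space of a process is divided into a number of VMAs according to their attributes. The VMAs are linked through vm_next and vm_prev into a doubly linked list, which hangs off the process's task_struct->mm_struct->mmap. When the smaps file of a process is read through the proc interface, the kernel first finds the head of that process's VMA list, walks every VMA in it, gathers the usage of each VMA through walk_page_vma, and finally prints the result.

The statistics of one VMA look like this:

- Rss: resident physical memory, the physical memory the process actually holds in RAM. Rss includes memory used by shared libraries, so it can be misleading;

- Pss: proportionally used physical memory. Unlike Rss, Pss charges shared libraries evenly to the processes sharing them. If a .so occupies 30 KB and is shared by 3 processes, each process's Pss counts 30/3 = 10 KB for that .so;

- Private_Dirty: private memory that is dirty, the opposite of Private_Clean. Dirty covers both page_dirty and pte_dirty. page_dirty is the familiar dirty page (a file read into memory and then modified is marked dirty). pte_dirty applies when the VMA is anonymous: reading or writing the VMA triggers a page fault that calls do_anonymous_page, and if vma_flags contains VM_WRITE the entry is marked through pte_mkdirty(entry);

- Private_Clean: private clean memory, the opposite of Private_Dirty;

- Shared_Dirty: like Private_Dirty, except that the memory is referenced by at least one other process and at least one process is modifying it;

- Shared_Clean: like Private_Clean, and the opposite of Shared_Dirty;

- Swap: swapped-out native pages, which could be called SwapRss;

- SwapPss: swapped-out native pages, counted proportionally;

On Android, swap normally means ZRAM, which increases the available memory by compressing pages and placing them in a dynamically allocated in-memory swap area; only anonymous pages are compressed.

The VMA below, however, is file-mapped and still has a Swap field. That is because the file was mapped into the process address space with mmap. With the MAP_PRIVATE flag the mapping is copy-on-write: although it is file-backed, data written to it is copied into anonymous pages, which is why Anonymous: is non-zero as well.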
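To make the per-VMA fields concrete, the following Java sketch sums the "Key: N kB" lines of smaps-formatted text across all VMAs. It is only an illustration of the idea and not what libmeminfo does internally; the class name SmapsTotalsSketch and the two-VMA sample are made up, with the .so entry reusing the 30 KB shared by 3 processes example from the Pss description.

```java
import java.util.LinkedHashMap;
import java.util.Map;

final class SmapsTotalsSketch {
    /** Sums every "Key:   N kB" line, across all VMAs, into a per-key total in kB. */
    static Map<String, Long> sum(String smapsText) {
        Map<String, Long> totals = new LinkedHashMap<>();
        for (String line : smapsText.split("\n")) {
            String[] parts = line.trim().split("\\s+");
            // Field lines have exactly three tokens: "Pss:", "10", "kB".
            // VMA header lines and VmFlags lines do not match this shape.
            if (parts.length == 3 && parts[0].endsWith(":") && parts[2].equals("kB")) {
                String key = parts[0].substring(0, parts[0].length() - 1);
                try {
                    totals.merge(key, Long.parseLong(parts[1]), Long::sum);
                } catch (NumberFormatException ignored) {
                    // Not a numeric field; skip it.
                }
            }
        }
        return totals;
    }

    public static void main(String[] args) {
        // Invented sample: a 30 kB library shared by 3 processes, plus a private heap.
        String sample = String.join("\n",
                "7f00-7f08 r-xp 00000000 fd:00 1234 /system/lib64/libexample.so",
                "Rss:                  30 kB",
                "Pss:                  10 kB",
                "Private_Dirty:         0 kB",
                "7f10-7f14 rw-p 00000000 00:00 0 [anon:libc_malloc]",
                "Rss:                  16 kB",
                "Pss:                  16 kB",
                "Private_Dirty:        16 kB");
        System.out.println(sum(sample));
    }
}
```

For the shared library the Rss contribution is the full 30 kB while the Pss contribution is only 10 kB, which is exactly why the Rss total of a process overstates its real cost.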

5.3.2 Mapping smaps VMA names to HEAP enums

- smaps name                                                   which_heap          sub_heap

- [heap], [anon:libc_malloc], [anon:scudo:, [anon:GWP-ASan     HEAP_NATIVE

- [anon:dalvik-*                                               HEAP_DALVIK_OTHER

- *.so                                                         HEAP_SO

- *.jar                                                        HEAP_JAR

- *.apk                                                        HEAP_APK

- *.ttf                                                        HEAP_TTF

- *.odex (*.dex)                                               HEAP_DEX            HEAP_DEX_APP_DEX

- *.vdex (@boot /boot /apex)                                   HEAP_DEX            HEAP_DEX_BOOT_VDEX, HEAP_DEX_APP_VDEX

- *.oat                                                        HEAP_OAT

- *.art, *.art] (@boot /boot /apex)                            HEAP_ART            HEAP_ART_BOOT, HEAP_ART_APP

- /dev/                                                        HEAP_UNKNOWN_DEV

- /dev/kgsl-3d0                                                HEAP_GL_DEV

- /dev/ashmem/CursorWindow                                     HEAP_CURSOR

- /dev/ashmem/jit-zygote-cache, /memfd:jit-cache, /memfd:jit-zygote-cache     HEAP_DALVIK_OTHER

- /dev/ashmem                                                  HEAP_ASHMEM

See the load_maps() function for the full which_heap and sub_heap logic.
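The name-to-heap mapping above can be sketched as a small classifier. This is a simplified Java illustration of the table only, not a port of load_maps(): HeapClassifierSketch is a hypothetical class, sub-heaps are ignored, and the HEAP_UNKNOWN fallback for unmatched names is an assumption made to keep the example complete.

```java
final class HeapClassifierSketch {
    /** Returns the heap label for a VMA name, following the table row by row. */
    static String classify(String name) {
        if (name.startsWith("[heap]") || name.startsWith("[anon:libc_malloc]")
                || name.startsWith("[anon:scudo:") || name.startsWith("[anon:GWP-ASan")) {
            return "HEAP_NATIVE";
        }
        if (name.startsWith("[anon:dalvik-")) return "HEAP_DALVIK_OTHER";
        if (name.endsWith(".so")) return "HEAP_SO";
        if (name.endsWith(".jar")) return "HEAP_JAR";
        if (name.endsWith(".apk")) return "HEAP_APK";
        if (name.endsWith(".ttf")) return "HEAP_TTF";
        if (name.endsWith(".odex") || name.endsWith(".dex") || name.endsWith(".vdex")) {
            return "HEAP_DEX";
        }
        if (name.endsWith(".oat")) return "HEAP_OAT";
        if (name.endsWith(".art") || name.endsWith(".art]")) return "HEAP_ART";
        if (name.startsWith("/dev/")) {
            // More specific device paths must be tested before the generic ones.
            if (name.startsWith("/dev/kgsl-3d0")) return "HEAP_GL_DEV";
            if (name.startsWith("/dev/ashmem/CursorWindow")) return "HEAP_CURSOR";
            if (name.startsWith("/dev/ashmem/jit-zygote-cache")) return "HEAP_DALVIK_OTHER";
            if (name.startsWith("/dev/ashmem")) return "HEAP_ASHMEM";
            return "HEAP_UNKNOWN_DEV";
        }
        if (name.startsWith("/memfd:jit-cache") || name.startsWith("/memfd:jit-zygote-cache")) {
            return "HEAP_DALVIK_OTHER";
        }
        return "HEAP_UNKNOWN"; // assumed fallback, not listed in the table
    }

    public static void main(String[] args) {
        System.out.println(classify("/system/lib64/libc.so"));
        System.out.println(classify("/dev/kgsl-3d0"));
        System.out.println(classify("[anon:scudo:primary]"));
    }
}
```

The ordering inside the /dev/ branch matters: /dev/kgsl-3d0 and /dev/ashmem/CursorWindow would otherwise be swallowed by the generic /dev/ and /dev/ashmem rows.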

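5.3.3 read_memtrack_memory()

This function obtains the memory the process has allocated on the GPU.

The call flow is shown in the following diagram:

The flow above reads the memory a process has allocated on the GPU. Each vendor may account for it differently, which is why a HAL layer is involved.

5.4 thread.dumpMemInfo()

Section 5.1 listed several important variables. One of them is IApplicationThread, which is unique to each process.

This path is taken when opts.dumpDetails is true, that is, for the meminfo of a single application;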

- try {

- TransferPipe tp = new TransferPipe();

- try {

- thread.dumpMemInfo(tp.getWriteFd(),

- mi, opts.isCheckinRequest, opts.dumpFullDetails,

- opts.dumpDalvik, opts.dumpSummaryOnly, opts.dumpUnreachable, innerArgs);

- tp.go(fd, opts.dumpUnreachable ? 30000 : 5000);

- } finally {

- tp.kill();

- }

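A TransferPipe object is created, which internally creates a thread and a pipe. The writeFd is then handed to the ActivityThread of the app process, which works out the memory usage of the app process. Calling go() starts the thread created by TransferPipe and waits on the pipe; once AMS has received the data through readFd, it notifies the dumpsys process that the dump is complete.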
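The pipe hand-off can be imitated in plain Java. The sketch below is only an analogy under loud assumptions: it uses java.io piped streams inside one JVM instead of TransferPipe and binder, a thread stands in for the remote app process, there is no timeout handling, and PipeDumpSketch and its methods are hypothetical names.

```java
import java.io.BufferedReader;
import java.io.IOException;
import java.io.InputStreamReader;
import java.io.OutputStreamWriter;
import java.io.PipedInputStream;
import java.io.PipedOutputStream;
import java.io.PrintWriter;
import java.io.UncheckedIOException;
import java.nio.charset.StandardCharsets;

final class PipeDumpSketch {
    /** Stand-in for the app side: writes a report into the write end, then closes it. */
    static void appSideDump(PipedOutputStream writeEnd) {
        try (PrintWriter pw = new PrintWriter(
                new OutputStreamWriter(writeEnd, StandardCharsets.UTF_8))) {
            pw.println("** MEMINFO in pid 1834 [com.android.launcher3] **");
            pw.println("TOTAL PSS: 58267");
        } // closing the writer signals end-of-data to the reader
    }

    /** Stand-in for the service side: reads from the read end until end-of-data. */
    static String collect() {
        try {
            PipedInputStream readEnd = new PipedInputStream();
            PipedOutputStream writeEnd = new PipedOutputStream(readEnd);
            Thread app = new Thread(() -> appSideDump(writeEnd), "app-side");
            app.start();
            StringBuilder out = new StringBuilder();
            try (BufferedReader r = new BufferedReader(
                    new InputStreamReader(readEnd, StandardCharsets.UTF_8))) {
                String line;
                while ((line = r.readLine()) != null) {
                    out.append(line).append('\n');
                }
            }
            app.join();
            return out.toString();
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            throw new IllegalStateException(e);
        }
    }

    public static void main(String[] args) {
        System.out.print(collect());
    }
}
```

The point of the pattern is that the side producing the report never needs access to the final output; it only gets the write end, and the collecting side decides where the bytes go.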

Now for dumpMemInfo():

- frameworks/base/core/java/android/app/ActivityThread.java

- public void dumpMemInfo(ParcelFileDescriptor pfd, Debug.MemoryInfo mem, boolean checkin,

- boolean dumpFullInfo, boolean dumpDalvik, boolean dumpSummaryOnly,

- boolean dumpUnreachable, String[] args) {

- FileOutputStream fout = new FileOutputStream(pfd.getFileDescriptor());

- PrintWriter pw = new FastPrintWriter(fout);

- try {

- dumpMemInfo(pw, mem, checkin, dumpFullInfo, dumpDalvik, dumpSummaryOnly, dumpUnreachable);

- } finally {

- pw.flush();

- IoUtils.closeQuietly(pfd);

- }

- }

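I will not go further into the code; the call flow is summarized in the following diagram:

dumpMemInfo() calls getRuntime() to obtain the totalMemory and freeMemory of the app process's Dalvik runtime and computes dalvikAllocated from them, which gives the VM memory usage of the app process.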
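The arithmetic is simply allocated = total - free. A minimal Java sketch, with DalvikHeapSketch as a hypothetical class name; on a desktop JVM the printed values describe that JVM rather than an Android app:

```java
final class DalvikHeapSketch {
    /** allocated = total - free, all in the same unit. */
    static long allocated(long total, long free) {
        return total - free;
    }

    public static void main(String[] args) {
        Runtime rt = Runtime.getRuntime();
        long totalKb = rt.totalMemory() / 1024;
        long freeKb = rt.freeMemory() / 1024;
        System.out.println("Heap Size=" + totalKb
                + " Heap Alloc=" + allocated(totalKb, freeKb)
                + " Heap Free=" + freeKb);
    }
}
```

The launcher3 dump in section 6.1 follows the same relation: its Dalvik Heap row shows Heap Size 6657, Heap Free 3328 and Heap Alloc 3329.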

In addition, three native interfaces such as Debug.getNativeHeapSize() report the native usage of the app process. As in section 5.3, this ends up in android_os_Debug.cpp:

- frameworks/base/core/jni/android_os_Debug.cpp

- static jlong android_os_Debug_getNativeHeapSize(JNIEnv *env, jobject clazz)

- {

- struct mallinfo info = mallinfo();

- return (jlong) info.usmblks;

- }

- static jlong android_os_Debug_getNativeHeapAllocatedSize(JNIEnv *env, jobject clazz)

- {

- struct mallinfo info = mallinfo();

- return (jlong) info.uordblks;

- }

- static jlong android_os_Debug_getNativeHeapFreeSize(JNIEnv *env, jobject clazz)

- {

- struct mallinfo info = mallinfo();

- return (jlong) info.fordblks;

- }

mallinfo() returns statistics about memory allocation. It is declared in android/bionic/libc/include/malloc.h and defined in android/bionic/libc/bionic/malloc_common.cpp:

- extern "C" struct mallinfo mallinfo() {

- auto dispatch_table = GetDispatchTable();

- if (__predict_false(dispatch_table != nullptr)) {

- return dispatch_table->mallinfo();

- }

- return Malloc(mallinfo)();

- }

mallinfo() returns a struct describing the memory requested through malloc() and related calls. The struct mallinfo looks like this (see the man page for more):

- struct mallinfo {

- int arena; /* Non-mmapped space allocated (bytes) */

- int ordblks; /* Number of free chunks */

- int smblks; /* Number of free fastbin blocks */

- int hblks; /* Number of mmapped regions */

- int hblkhd; /* Space allocated in mmapped regions (bytes) */

- int usmblks; /* Maximum total allocated space (bytes) */

- int fsmblks; /* Space in freed fastbin blocks (bytes) */

- int uordblks; /* Total allocated space (bytes) */

- int fordblks; /* Total free space (bytes) */

- int keepcost; /* Top-most, releasable space (bytes) */

- };

我们这里返回的三个信息:

- usmblks:alloc 的最大总内存(单位:字节);

- uordblks:总的alloc 的内存(单位:字节);

- fordblks:总的free 的内存(单位:字节);

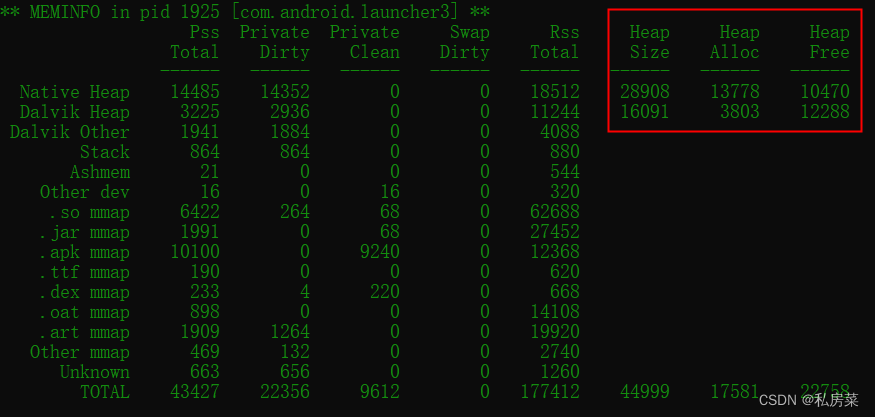

这两个输在如下图所示:

5.5 MemInfoReader

在将MemInfoReader 之前,需要回到代码最开始,了解下变量 collectNative:

final boolean collectNative = !opts.isCheckinRequest && numProcs > 1 && !opts.packages;当dump 多个应用或者所有应用的meminfo 时,变量 collectNative 被置true;如果只是打印单个应用的meminfo 时该值为 false,所以最终不会打印。

- MemInfoReader memInfo = new MemInfoReader();

- memInfo.readMemInfo();

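I will not go through the code in detail; the call flow is summarized here:

The output looks like this:

6. Analyzing meminfo data

6.1 meminfo of a single application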

- shift:/ # dumpsys meminfo com.android.launcher3

- Applications Memory Usage (in Kilobytes):

- Uptime: 4443605 Realtime: 4443605

- ** MEMINFO in pid 1834 [com.android.launcher3] **

- Pss Private Private Swap Rss Heap Heap Heap

- Total Dirty Clean Dirty Total Size Alloc Free

- ------ ------ ------ ------ ------ ------ ------ ------

- Native Heap 13647 13548 0 0 16152 21672 16064 1962

- Dalvik Heap 5338 5144 0 0 9496 6657 3329 3328

- Dalvik Other 1317 1192 0 0 2376

- Stack 636 636 0 0 644

- Ashmem 2 0 0 0 20

- Other dev 140 0 140 0 392

- .so mmap 7350 232 12 0 57116

- .jar mmap 2359 0 76 0 24984

- .apk mmap 22108 0 15208 0 51748

- .ttf mmap 110 0 0 0 376

- .dex mmap 348 8 336 0 432

- .oat mmap 1384 0 0 0 14560

- .art mmap 2434 1608 4 0 20496

- Other mmap 495 60 24 0 2896

- Unknown 599 588 0 0 1128

- TOTAL 58267 23016 15800 0 58267 28329 19393 5290

- App Summary

- Pss(KB) Rss(KB)

- ------ ------

- Java Heap: 6756 29992

- Native Heap: 13548 16152

- Code: 15872 149484

- Stack: 636 644

- Graphics: 0 0

- Private Other: 2004

- System: 19451

- Unknown: 6544

- TOTAL PSS: 58267 TOTAL RSS: 202816 TOTAL SWAP (KB): 0

- Objects

- Views: 89 ViewRootImpl: 1

- AppContexts: 10 Activities: 1

- Assets: 14 AssetManagers: 0

- Local Binders: 34 Proxy Binders: 43

- Parcel memory: 13 Parcel count: 69

- Death Recipients: 1 OpenSSL Sockets: 0

- WebViews: 0

- SQL

- MEMORY_USED: 682

- PAGECACHE_OVERFLOW: 292 MALLOC_SIZE: 117

- DATABASES

- pgsz dbsz Lookaside(b) cache Dbname

- 4 16 20 1/14/1 /data/user/0/com.android.launcher3/databases/widgetpreviews.db

- 4 252 71 99/18/5 /data/user/0/com.android.launcher3/databases/app_icons.db

- 4 16 57 6/17/4 /data/user/0/com.android.launcher3/databases/launcher.db

A few of the main concepts:

- Java Heap: 24312 dalvik heap + .art mmap

- Native Heap: 62256

- Code: 66452 .so mmap + .jar mmap + .apk mmap + .ttf mmap + .dex mmap + .oat mmap

- Stack: 84

- Graphics: 5338 Gfx dev + EGL mtrack + GL mtrack

- Private Other: 9604 TotalPrivateClean + TotalPrivateDirty - java - native - code - stack - graphics

- System: 12900 TotalPss - TotalPrivateClean - TotalPrivateDirty
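These formulas can be checked against the launcher3 dump shown earlier in this section. The Java sketch below is a worked example, not framework code; AppSummarySketch is a hypothetical class, and it assumes each summary item is built from the Private Dirty and Private Clean columns (with Java Heap taking only Private Dirty from the Dalvik Heap row), an interpretation chosen because it reproduces the App Summary values of that dump.

```java
final class AppSummarySketch {
    /** Private Dirty + Private Clean of one table row, in KB. */
    static int priv(int dirty, int clean) {
        return dirty + clean;
    }

    /** Returns {java, native, code, stack, graphics, privateOther, system} in KB. */
    static int[] summary() {
        int javaHeap = 5144              // Dalvik Heap, Private Dirty
                + priv(1608, 4);         // .art mmap
        int nativeHeap = 13548;          // Native Heap, Private Dirty
        int code = priv(232, 12)         // .so mmap
                + priv(0, 76)            // .jar mmap
                + priv(0, 15208)         // .apk mmap
                + priv(0, 0)             // .ttf mmap
                + priv(8, 336)           // .dex mmap
                + priv(0, 0);            // .oat mmap
        int stack = 636;
        int graphics = 0;                // no Gfx dev / EGL mtrack / GL mtrack rows in this dump
        int totalPrivate = 23016 + 15800; // TOTAL row: Private Dirty + Private Clean
        int privateOther = totalPrivate - javaHeap - nativeHeap - code - stack - graphics;
        int system = 58267 - totalPrivate; // TOTAL Pss minus all private memory
        return new int[] {javaHeap, nativeHeap, code, stack, graphics, privateOther, system};
    }

    public static void main(String[] args) {
        String[] names = {"Java Heap", "Native Heap", "Code", "Stack",
                "Graphics", "Private Other", "System"};
        int[] s = summary();
        int total = 0;
        for (int i = 0; i < s.length; i++) {
            System.out.println(names[i] + ": " + s[i]);
            total += s[i];
        }
        System.out.println("TOTAL PSS: " + total);
    }
}
```

The seven items add up to 58267 KB, the TOTAL PSS of that dump, which is a handy sanity check when the numbers seem not to match.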

https://developer.android.com/studio/profile/investigate-ram?hl=zh-cn

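- Dalvik Heap

The RAM used by Dalvik allocations in your app. Pss Total includes all Zygote allocations (weighted by their sharing across processes, as described in the PSS definition above). The Private Dirty number is the actual RAM committed to only your app's heap, composed of your own allocations and any Zygote allocation pages that have been modified since your app's process was forked from Zygote.

- Heap Alloc

The amount of memory that the Dalvik and native heap allocators are keeping track of for your app. This value is larger than Pss Total and Private Dirty because your process was forked from Zygote and it includes allocations that your process shares with all the others.

- .so mmap and .dex mmap

The RAM used for mapped .so (native) and .dex (Dalvik or ART) code. The Pss Total number includes platform code shared across apps; Private Clean is your app's own code. Generally, the actual mapped size is larger: the RAM here is only what currently needs to be in RAM for code that the app has executed. However, .so mmap has a large private dirty value, which is due to fix-ups to the native code when it was loaded into its final address.

- .oat mmap

The amount of RAM used by the code image, which is based on the preloaded classes commonly used by multiple apps. This image is shared across all apps and is unaffected by particular apps.

- .art mmap

The amount of RAM used by the heap image, which is based on the preloaded classes commonly used by multiple apps. This image is shared across all apps and is unaffected by particular apps. Even though the ART image contains Object instances, it does not count towards your heap size.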

- But as to what the difference is between "Pss", "PrivateDirty", and "SharedDirty"... well now the fun begins.

- A lot of memory in Android (and Linux systems in general) is actually shared across multiple processes.

- So how much memory a processes uses is really not clear. Add on top of that paging out to disk (let alone swap which we don't use on

- Android) and it is even less clear.

- Thus if you were to take all of the physical RAM actually mapped in to each process, and add up all of the processes,

- you would probably end up with a number much greater than the actual total RAM.

- The Pss number is a metric the kernel computes that takes into account memory sharing -- basically each page of RAM in a process is

- scaled by a ratio of the number of other processes also using that page. This way you can (in theory) add up the pss across all

- processes to see the total RAM they are using, and compare pss between processes to get a rough idea of their relative weight.

- The other interesting metric here is PrivateDirty, which is basically the amount of RAM inside the process that can not be paged

- to disk (it is not backed by the same data on disk), and is not shared with any other processes. Another way to look at this is the

- RAM that will become available to the system when that process goes away (and probably quickly subsumed into caches and other uses

- of it).

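From this post we learn:

On Android, and on Linux in general, much memory is shared among processes, so how much memory a single process uses is not clear-cut. If you add up the memory actually mapped into every process, the result may far exceed the real total RAM.

Pss is a value the kernel computes with sharing taken into account: each shared page is counted at a proportion of its size. In theory, adding up the Pss of all processes gives the total RAM they use, and comparing Pss between processes gives a rough idea of their relative weight.

PrivateDirty is basically the memory inside the process that cannot be paged to disk and is not shared with any other process. Another way to look at it is as the RAM that becomes available to the system when the process goes away (and is probably quickly absorbed into caches and other uses).

- Other types   smaps path name   Description

- Ashmem        /dev/ashmem       anonymous shared memory; provides shared memory by allocating

-                                 a named memory block that multiple processes can share

- Other dev     /dev/             memory used by internal drivers, counted under "Other dev"

- .so mmap      .so               memory used by C library code

- .jar mmap     .jar              memory used by Java file code

- .apk mmap     .apk              memory used by apk code

- .ttf mmap     .ttf              memory used by ttf files

- .dex mmap     .dex              memory used by Dex file code

- Other mmap                      memory used by other files

6.2 System-wide meminfo statistics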

- Total RAM: 3,768,168K (status normal)

- Free RAM: 2,186,985K ( 64,861K cached pss + 735,736K cached kernel + 1,386,388K free)

- ION: 58,408K ( 53,316K mapped + -128K unmapped + 5,220K pools)

- Used RAM: 1,440,349K (1,116,469K used pss + 323,880K kernel)

- Lost RAM: 140,822K

- ZRAM: 12K physical used for 0K in swap (2,097,148K total swap)

- Tuning: 256 (large 512), oom 640,000K, restore limit 213,333K (high-end-gfx)

6.2.1 Total RAM

- pw.print(stringifyKBSize(memInfo.getTotalSizeKb()));

Total RAM is MemTotal from /proc/meminfo.

6.2.2 Free RAM

- pw.print(stringifyKBSize(cachedPss + memInfo.getCachedSizeKb()

- + memInfo.getFreeSizeKb()));

The items in parentheses:

- cached pss: the sum of the pss of all processes with oom_score_adj >= 900

- cached kernel: /proc/meminfo.Buffers + /proc/meminfo.KReclaimable + /proc/meminfo.Cached - /proc/meminfo.Mapped

- free: /proc/meminfo.MemFree
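The "cached kernel" term can be reproduced from /proc/meminfo-style text with a few lines of Java. This is a sketch of the formula only, not MemInfoReader; ProcMeminfoSketch is a hypothetical class and the sample values are round toy numbers, not taken from a device.

```java
import java.util.HashMap;
import java.util.Map;

final class ProcMeminfoSketch {
    /** Parses "Key:   value kB" lines into a map of key -> value. */
    static Map<String, Long> parse(String text) {
        Map<String, Long> fields = new HashMap<>();
        for (String line : text.split("\n")) {
            int colon = line.indexOf(':');
            if (colon < 0) {
                continue;
            }
            String[] rest = line.substring(colon + 1).trim().split("\\s+");
            try {
                fields.put(line.substring(0, colon).trim(), Long.parseLong(rest[0]));
            } catch (NumberFormatException ignored) {
                // Not a numeric field; skip it.
            }
        }
        return fields;
    }

    /** cached kernel = Buffers + KReclaimable + Cached - Mapped (all in kB). */
    static long cachedKernelKb(Map<String, Long> f) {
        return f.getOrDefault("Buffers", 0L) + f.getOrDefault("KReclaimable", 0L)
                + f.getOrDefault("Cached", 0L) - f.getOrDefault("Mapped", 0L);
    }

    public static void main(String[] args) {
        // Toy values for illustration only.
        String sample = String.join("\n",
                "MemTotal:        4000 kB",
                "MemFree:         1500 kB",
                "Buffers:          100 kB",
                "Cached:          1000 kB",
                "Mapped:           300 kB",
                "KReclaimable:     200 kB");
        System.out.println("cached kernel = " + cachedKernelKb(parse(sample)) + " kB");
    }
}
```

Mapped is subtracted because mapped file pages are already charged to processes through their Pss, so counting them again as kernel cache would double count them.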

6.2.3 Used RAM

- pw.print(stringifyKBSize(totalPss - cachedPss + kernelUsed));

The items in parentheses:

- used pss: totalPss - cachedPss

- kernelUsed: /proc/meminfo.Shmem + /proc/meminfo.SUnreclaim + /proc/meminfo.VmallocUsed + /proc/meminfo.PageTables + /proc/meminfo.KernelStack + [ionHeap]

6.2.4 Lost RAM

- final long lostRAM = memInfo.getTotalSizeKb() - (totalPss - totalSwapPss)

- - memInfo.getFreeSizeKb() - memInfo.getCachedSizeKb()

- - kernelUsed - memInfo.getZramTotalSizeKb();

Lost RAM = /proc/meminfo.MemTotal - (totalPss - totalSwapPss) - /proc/meminfo.MemFree - cached kernel - kernel used - zram used

Note:

From this we can roughly derive:

Free RAM + Used RAM - totalSwapPss + Lost RAM + ZRAM = Total RAM
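The identity can be verified with the system dump shown at the start of section 6.2. The Java sketch below plugs in those figures; SystemRamSketch is a hypothetical class, and totalSwapPss is assumed to be 0 because the dump reports 0K in swap.

```java
final class SystemRamSketch {
    static long freeRam(long cachedPss, long cachedKernel, long free) {
        return cachedPss + cachedKernel + free;
    }

    static long usedRam(long totalPss, long cachedPss, long kernelUsed) {
        return totalPss - cachedPss + kernelUsed;
    }

    static long lostRam(long total, long totalPss, long totalSwapPss, long free,
            long cachedKernel, long kernelUsed, long zram) {
        return total - (totalPss - totalSwapPss) - free - cachedKernel - kernelUsed - zram;
    }

    public static void main(String[] args) {
        // Values in KB, copied from the sample dump.
        long total = 3_768_168, cachedPss = 64_861, cachedKernel = 735_736;
        long free = 1_386_388, usedPss = 1_116_469, kernelUsed = 323_880, zram = 12;
        long totalSwapPss = 0;               // assumption: the dump shows 0K in swap
        long totalPss = usedPss + cachedPss; // used pss = totalPss - cachedPss

        long freeRam = freeRam(cachedPss, cachedKernel, free);
        long usedRam = usedRam(totalPss, cachedPss, kernelUsed);
        long lostRam = lostRam(total, totalPss, totalSwapPss, free,
                cachedKernel, kernelUsed, zram);
        System.out.println("Free RAM: " + freeRam);
        System.out.println("Used RAM: " + usedRam);
        System.out.println("Lost RAM: " + lostRam);
        System.out.println("Sum:      " + (freeRam + usedRam - totalSwapPss + lostRam + zram));
    }
}
```

With these inputs the three computed values match the Free RAM, Used RAM and Lost RAM lines of the dump, and the sum comes back to the Total RAM figure.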

6.2.5 Tuning

- pw.print(" Tuning: ");

- pw.print(ActivityManager.staticGetMemoryClass());

- pw.print(" (large ");

- pw.print(ActivityManager.staticGetLargeMemoryClass());

- pw.print("), oom ");

- pw.print(stringifySize(

- mProcessList.getMemLevel(ProcessList.CACHED_APP_MAX_ADJ), 1024));

- pw.print(", restore limit ");

- pw.print(stringifyKBSize(mProcessList.getCachedRestoreThresholdKb()));

- if (ActivityManager.isLowRamDeviceStatic()) {

- pw.print(" (low-ram)");

- }

- if (ActivityManager.isHighEndGfx()) {

- pw.print(" (high-end-gfx)");

- }

- pw.println();

large: the value of the dalvik.vm.heapsize property, in MB

oom: the last element of the mOomMinFree array in ProcessList

restore limit: the value of mCachedRestoreLevel in ProcessList, the upper bound for moving a process from cached to background; it is normally 1/3 of the last value in mOomMinFree.

low-ram: whether this is a low-RAM device, normally decided by the prop ro.config.low_ram, or by the prop debug.force_low_ram when ro.debuggable is enabled.

high-end-gfx: ro.config.low_ram is false, ro.config.avoid_gfx_accel is false, and the config config_avoidGfxAccel is false.

Reference: https://blog.csdn.net/feelabclihu/article/details/105534175


- Original article: https://blog.csdn.net/jingerppp/article/details/126269031