
Installing Rancher and Deploying a Highly Available Kubernetes Cluster


I. What Is Rancher?

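Rancher is an open-source, enterprise-grade platform for managing multiple Kubernetes clusters. It provides centralized deployment and management of Kubernetes clusters across hybrid clouds and on-premises data centers, helps keep those clusters secure, and speeds up enterprise digital transformation. With Rancher, companies no longer have to assemble a container service platform from scratch out of a collection of open-source tools. Instead, Rancher provides a full-stack platform for deploying and managing Docker and Kubernetes in production. Rancher was created by Rancher Labs, a US technology company that was later acquired by SUSE.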

II. Prerequisites

1. Hardware requirements (minimum)

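| Role | CPU | Memory | OS | IP |
|---|---|---|---|---|
| rancher-server | 2 cores | 5 GB | CentOS 7.9 | 10.10.10.130 |
| k8s-master01 | 2 cores | 3 GB | CentOS 7.9 | 10.10.10.120 |
| k8s-master02 | 2 cores | 3 GB | CentOS 7.9 | 10.10.10.110 |
| k8s-node01 | 1 core | 2 GB | CentOS 7.9 | 10.10.10.100 |
| k8s-node02 | 1 core | 2 GB | CentOS 7.9 | 10.10.10.90 |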
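Before installing anything, it can help to check each machine against the table. The following is a minimal sketch that encodes the minimums above. The function names `min_requirements` and `meets_min` are hypothetical helpers, not part of any tool. The function takes the CPU count and memory (in GB) as arguments so you can pass either measured or provisioned values.

```shell
# min_requirements HOST: print "CPU_CORES MEMORY_GB" for HOST, taken from the table above
min_requirements() {
  case "$1" in
    rancher-server)            echo "2 5" ;;
    k8s-master01|k8s-master02) echo "2 3" ;;
    k8s-node01|k8s-node02)     echo "1 2" ;;
    *) return 1 ;;
  esac
}

# meets_min HOST CPU_CORES MEMORY_GB: exit 0 if the given resources meet the minimum for HOST
# (exit 1 if they fall short, exit 2 if HOST is not in the table)
meets_min() {
  local req cpu mem
  req=$(min_requirements "$1") || return 2
  cpu=${req% *}
  mem=${req#* }
  [ "$2" -ge "$cpu" ] && [ "$3" -ge "$mem" ]
}
```

For example, `meets_min "$(hostname)" "$(nproc)" 5` checks the current host. Note that `MemTotal` in `/proc/meminfo` reports slightly less than the RAM assigned to a VM. Pass the provisioned amount, or round up, rather than truncating that value.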

2. Set hostnames

```shell
# Set this machine's hostname (run on each node with its own name)
hostnamectl set-hostname <hostname>

# Write /etc/hosts (each line is: IP address, then hostname)
cat > /etc/hosts <<-'EOF'
127.0.0.1   localhost localhost.localdomain localhost4 localhost4.localdomain4
::1         localhost localhost.localdomain localhost6 localhost6.localdomain6
10.10.10.130 rancher-server
10.10.10.120 k8s-master01
10.10.10.110 k8s-master02
10.10.10.100 k8s-node01
10.10.10.90  k8s-node02
EOF
```
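Overwriting `/etc/hosts` is fine on a fresh machine. On hosts that already carry their own entries, an idempotent append is safer. This is a minimal sketch under the assumption that the hardware table is the single source of truth. `CLUSTER_HOSTS`, `hostname_for_ip` and `add_missing_hosts` are hypothetical names. Entries are matched in the `IP hostname` order that `/etc/hosts` requires, so a reversed line such as `rancher-server 10.10.10.130` does not count as present.

```shell
# IP/hostname pairs from the hardware table, in /etc/hosts order (IP first)
CLUSTER_HOSTS='10.10.10.130 rancher-server
10.10.10.120 k8s-master01
10.10.10.110 k8s-master02
10.10.10.100 k8s-node01
10.10.10.90 k8s-node02'

# hostname_for_ip IP: print the hostname assigned to IP (exit 1 if unknown)
hostname_for_ip() {
  printf '%s\n' "$CLUSTER_HOSTS" |
    awk -v ip="$1" '$1 == ip { print $2; found = 1 } END { exit !found }'
}

# add_missing_hosts FILE: append each cluster entry that FILE does not already contain
add_missing_hosts() {
  printf '%s\n' "$CLUSTER_HOSTS" | while read -r ip name; do
    # Escape the dots so the IP matches literally in the regex
    ip_re=$(printf '%s' "$ip" | sed 's/\./\\./g')
    if ! grep -Eq "^[[:space:]]*${ip_re}[[:space:]]+${name}([[:space:]]|\$)" "$1"; then
      printf '%s %s\n' "$ip" "$name" >> "$1"
    fi
  done
}
```

On each node you could then run `hostnamectl set-hostname "$(hostname_for_ip 10.10.10.120)"` (with that node's own IP) followed by `add_missing_hosts /etc/hosts`. Running the second command twice adds nothing new.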

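3. Disable SELinux, the firewall, and swap

```shell
# Turn swap off now and comment out the swap entries in /etc/fstab so it stays off after a reboot
swapoff -a
sed -ri 's/.*swap.*/#&/' /etc/fstab

# Disable SELinux now (setenforce 0) and persistently (config file, applies after reboot)
setenforce 0
sed -i 's/^SELINUX=enforcing/SELINUX=disabled/' /etc/selinux/config

# Stop the firewall and keep it from starting at boot
systemctl stop firewalld
systemctl disable firewalld
```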
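By default the kubelet refuses to start while swap is enabled, so it is worth making the fstab edit precise and verifying the result. A blanket `.*swap.*` pattern also re-comments lines that are already commented and can hit entries that merely contain the word. The following sketch is stricter. It only comments uncommented lines that have `swap` as a whitespace-separated field, persists the SELinux change, and counts active swap areas. The function names are hypothetical. GNU `sed` (as shipped with CentOS) is assumed for `-r` and `-i`.

```shell
# comment_swap_entries FSTAB: prefix every active (uncommented) swap line with '#'
comment_swap_entries() {
  sed -ri '/^[[:space:]]*[^#[:space:]].*[[:space:]]swap[[:space:]]/ s/^/#/' "$1"
}

# set_selinux_disabled CONFIG: persist SELINUX=disabled (setenforce 0 only lasts until reboot)
set_selinux_disabled() {
  sed -i 's/^SELINUX=.*/SELINUX=disabled/' "$1"
}

# active_swap_count [SWAPS_FILE]: number of active swap areas (0 once swapoff -a has run)
active_swap_count() {
  # /proc/swaps has one header line followed by one line per active swap area
  tail -n +2 "${1:-/proc/swaps}" | grep -c . || true
}
```

Typical use: `comment_swap_entries /etc/fstab`, then `set_selinux_disabled /etc/selinux/config`, then confirm that `active_swap_count` prints `0`.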

4. Configure time synchronization

```shell
# Install the time service (install and configure it on every machine)
yum -y install chrony

# Write the configuration file
cat > /etc/chrony.conf <<-'EOF'
pool 10.10.10.130 iburst
driftfile /var/lib/chrony/drift
makestep 1.0 3
rtcsync
allow 10.10.10.0/24
local stratum 10
keyfile /etc/chrony.keys
leapsectz right/UTC
logdir /var/log/chrony
EOF

# Enable chronyd at boot and restart it so it loads the new configuration
systemctl enable chronyd
systemctl restart chronyd

# Step the system clock immediately
chronyc -a makestep

# Show the time sources and their statistics
chronyc sources -v
chronyc sourcestats -v

# Restart services that depend on the system time
systemctl restart rsyslog && systemctl restart crond
```
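The configuration above writes the same file on every machine, so rancher-server (10.10.10.130) ends up pointing at itself. A common variation gives the time server and its clients different files. The following is a hedged sketch of that approach, assuming rancher-server is the cluster's time source as the `pool` line implies. `render_chrony_conf` is a hypothetical helper. Its optional `UPSTREAM` argument stands for whatever external NTP source you may have, and is likewise an assumption.

```shell
# render_chrony_conf ROLE SERVER_IP SUBNET [UPSTREAM]
#   ROLE=server: serve time to SUBNET; sync from UPSTREAM if given,
#                otherwise fall back to the local clock (local stratum 10)
#   ROLE=client: sync only from SERVER_IP
render_chrony_conf() {
  local role=$1 server_ip=$2 subnet=$3 upstream=${4:-}
  case "$role" in
    server)
      if [ -n "$upstream" ]; then
        printf 'pool %s iburst\n' "$upstream"
      fi
      printf 'local stratum 10\nallow %s\n' "$subnet"
      ;;
    client)
      printf 'server %s iburst\n' "$server_ip"
      ;;
    *)
      echo "unknown role: $role" >&2
      return 1
      ;;
  esac
  # Settings shared by both roles, taken from the configuration above
  printf '%s\n' \
    'driftfile /var/lib/chrony/drift' \
    'makestep 1.0 3' \
    'rtcsync' \
    'keyfile /etc/chrony.keys' \
    'leapsectz right/UTC' \
    'logdir /var/log/chrony'
}
```

On rancher-server this would be `render_chrony_conf server 10.10.10.130 10.10.10.0/24 > /etc/chrony.conf`. On the other four machines it would be `render_chrony_conf client 10.10.10.130 10.10.10.0/24 > /etc/chrony.conf`. Restart chronyd afterwards in both cases.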

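5. Configure persistent storage for system logs

Since CentOS 7 the system boots with systemd, so two logging systems, rsyslogd and systemd-journald, run side by side. The configuration below keeps only one of them (journald). It makes journald persist logs to disk and stops it from forwarding them to syslog.

```shell
# Directory for persistent journal storage
mkdir -p /var/log/journal
cat > /etc/systemd/journald.conf <<-'EOF'
[Journal]
# Persist logs to disk
Storage=persistent
# Compress archived logs
Compress=yes
SyncIntervalSec=5m
RateLimitInterval=30s
RateLimitBurst=1000
# Use at most 10G of disk in total
SystemMaxUse=10G
# Cap each journal file at 200M
SystemMaxFileSize=200M
# Keep logs for 2 weeks
MaxRetentionSec=2week
# Do not forward logs to syslog
ForwardToSyslog=no
EOF

# Restart journald to apply the configuration
systemctl restart systemd-journald
```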
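A key that is accidentally glued to a comment (for example `syslog ForwardToSyslog=no` on one line) is not read as `ForwardToSyslog`. A quick way to confirm what journald will see is to read back each key. This is a minimal sketch. `conf_get` is a hypothetical helper that skips comment lines and, like systemd, lets a later assignment override an earlier one.

```shell
# conf_get FILE KEY: print the last uncommented value of KEY in FILE (exit 1 if absent)
conf_get() {
  awk -v key="$2" '
    /^[ \t]*#/ { next }                      # skip comment lines
    index($0, "=") == 0 { next }             # skip section headers and blank lines
    {
      k = substr($0, 1, index($0, "=") - 1)
      gsub(/[ \t]/, "", k)                   # "syslog ForwardToSyslog" stays one word
      if (k == key) {
        v = substr($0, index($0, "=") + 1)
        gsub(/^[ \t]+|[ \t]+$/, "", v)
        val = v; found = 1
      }
    }
    END { if (found) print val; exit !found }' "$1"
}
```

For instance, `for k in Storage SystemMaxUse MaxRetentionSec ForwardToSyslog; do echo "$k=$(conf_get /etc/systemd/journald.conf "$k")"; done` prints the effective settings. Once journald has restarted, `journalctl --disk-usage` shows how much space the persisted journal takes.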

6. Install Docker

```shell
# Install common tools
yum -y install vim net-tools lrzsz iptables curl wget git yum-utils device-mapper-persistent-data lvm2

# Add the Docker CE repository (Aliyun mirror)
yum-config-manager --add-repo https://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo
sed -i 's+download.docker.com+mirrors.aliyun.com/docker-ce+' /etc/yum.repos.d/docker-ce.repo

# Refresh the cache and install docker-ce (I chose a fairly stable version)
yum makecache fast
yum -y install docker-ce-20.10.10-3.el7

# Configure a registry mirror to speed up image pulls
mkdir -p /etc/docker
cat > /etc/docker/daemon.json <<-'EOF'
{
  "registry-mirrors": ["https://26ahzfln.mirror.aliyuncs.com"]
}
EOF

# Start Docker and enable it at boot
systemctl daemon-reload && systemctl restart docker && systemctl enable docker
```
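If `daemon.json` is not valid JSON (a missing quote or comma), dockerd fails to start. When you need more than one mirror, generating the file avoids hand-editing mistakes. This is a small sketch with a hypothetical helper name. The mirror URLs in the usage line are placeholders for your own accelerator addresses.

```shell
# render_daemon_json MIRROR...: print a daemon.json containing the given registry mirrors
render_daemon_json() {
  local out='' m
  for m in "$@"; do
    # Quote each URL and join them with ", "
    out="${out:+$out, }\"$m\""
  done
  printf '{\n  "registry-mirrors": [%s]\n}\n' "$out"
}
```

Usage: `render_daemon_json https://mirror-a.example https://mirror-b.example > /etc/docker/daemon.json`, then restart Docker as above. Running `docker info` afterwards lists the configured registry mirrors.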

III. Deploy Rancher

1. Pull the Rancher image

```shell
# I chose Rancher 2.5; feel free to install another version. Personally, I find the 2.6 UI less friendly.
# Available tags: https://hub.docker.com/r/rancher/rancher/tags?page=1&ordering=last_updated&name=2.5
docker pull rancher/rancher:v2.5.15-linux-amd64
```

2. Start Rancher

```shell
# Create the Rancher data directory
mkdir -p /home/rancher

# Start Rancher
docker run -d --privileged --restart=always --name rancher2.5 \
  -p 80:80 -p 443:443 \
  -v /home/rancher:/var/lib/rancher/ \
  rancher/rancher:v2.5.15-linux-amd64
```
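Rancher takes a while to initialize on first start, and the login page is not reachable until it finishes. The following is a minimal, generic retry loop for scripting that wait. `wait_for` is a hypothetical helper. The probe in the usage line assumes that any non-error HTTPS response from 10.10.10.130 means Rancher is up.

```shell
# wait_for LIMIT_SECONDS INTERVAL_SECONDS CMD [ARGS...]
# Re-runs CMD every INTERVAL_SECONDS until it succeeds (return 0)
# or LIMIT_SECONDS have passed (return 1).
wait_for() {
  local limit=$1 interval=$2 deadline
  shift 2
  deadline=$(( $(date +%s) + limit ))
  until "$@"; do
    if [ "$(date +%s)" -ge "$deadline" ]; then
      return 1
    fi
    sleep "$interval"
  done
  return 0
}
```

Example: `wait_for 300 10 curl -ksf -o /dev/null https://10.10.10.130 && echo "Rancher is up"`. Here `-k` accepts Rancher's self-signed certificate and `-f` makes curl fail on HTTP errors. If it times out, check `docker logs rancher2.5`.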

3. Log in to Rancher

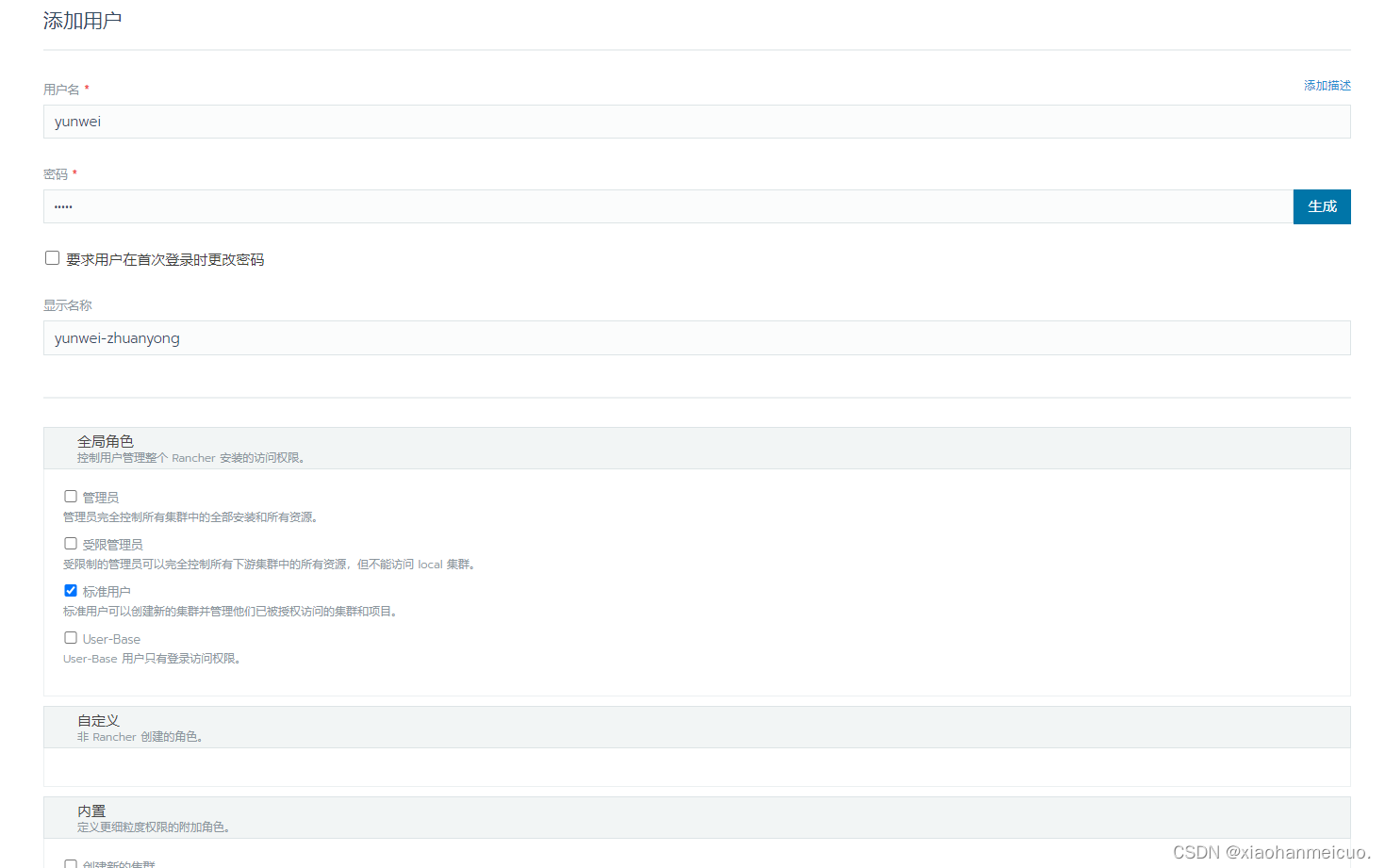

4. Create Rancher users

Grant each user the permissions appropriate to their role.

IV. Deploy a Highly Available Kubernetes Cluster

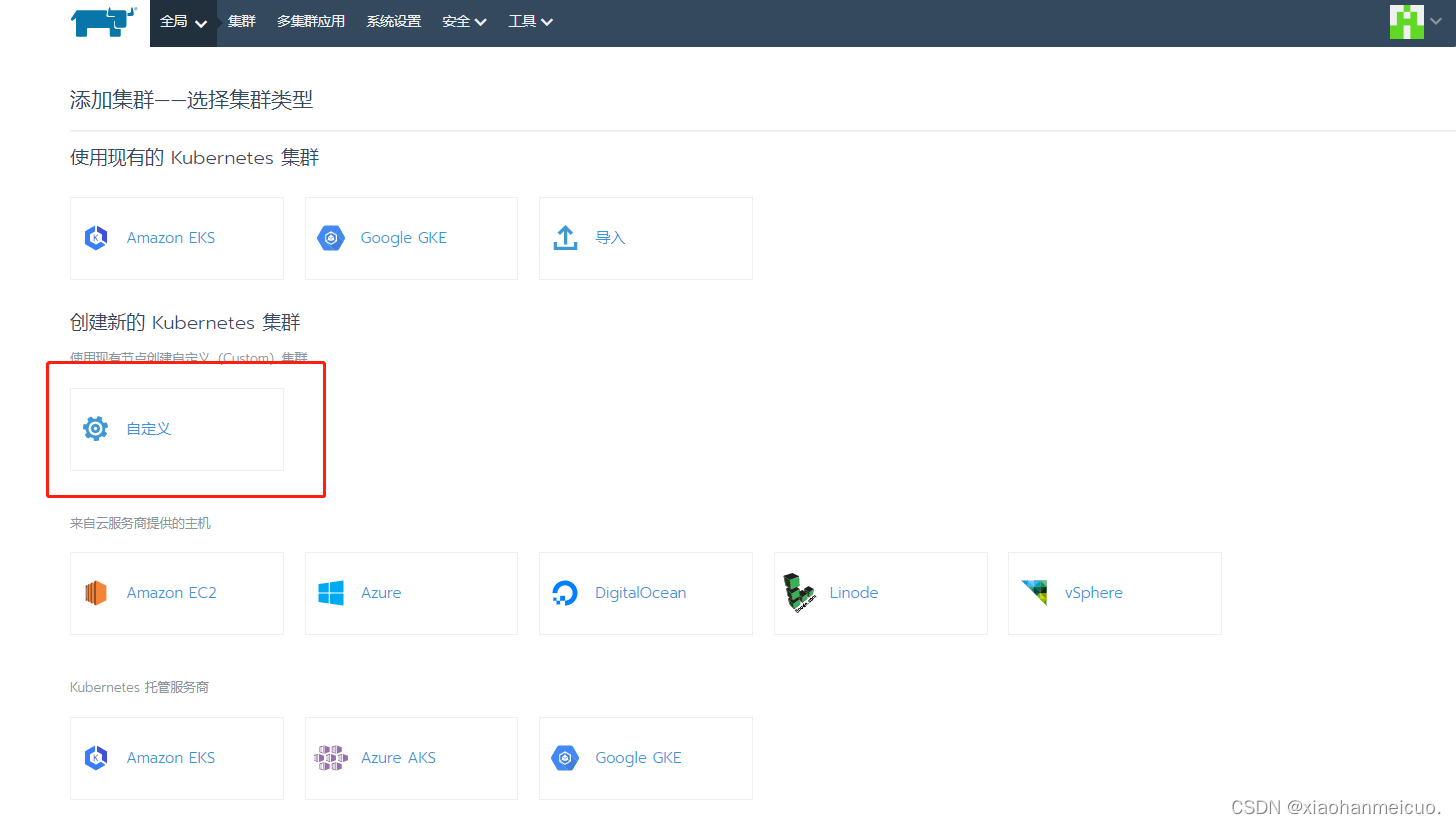

1. Create the cluster

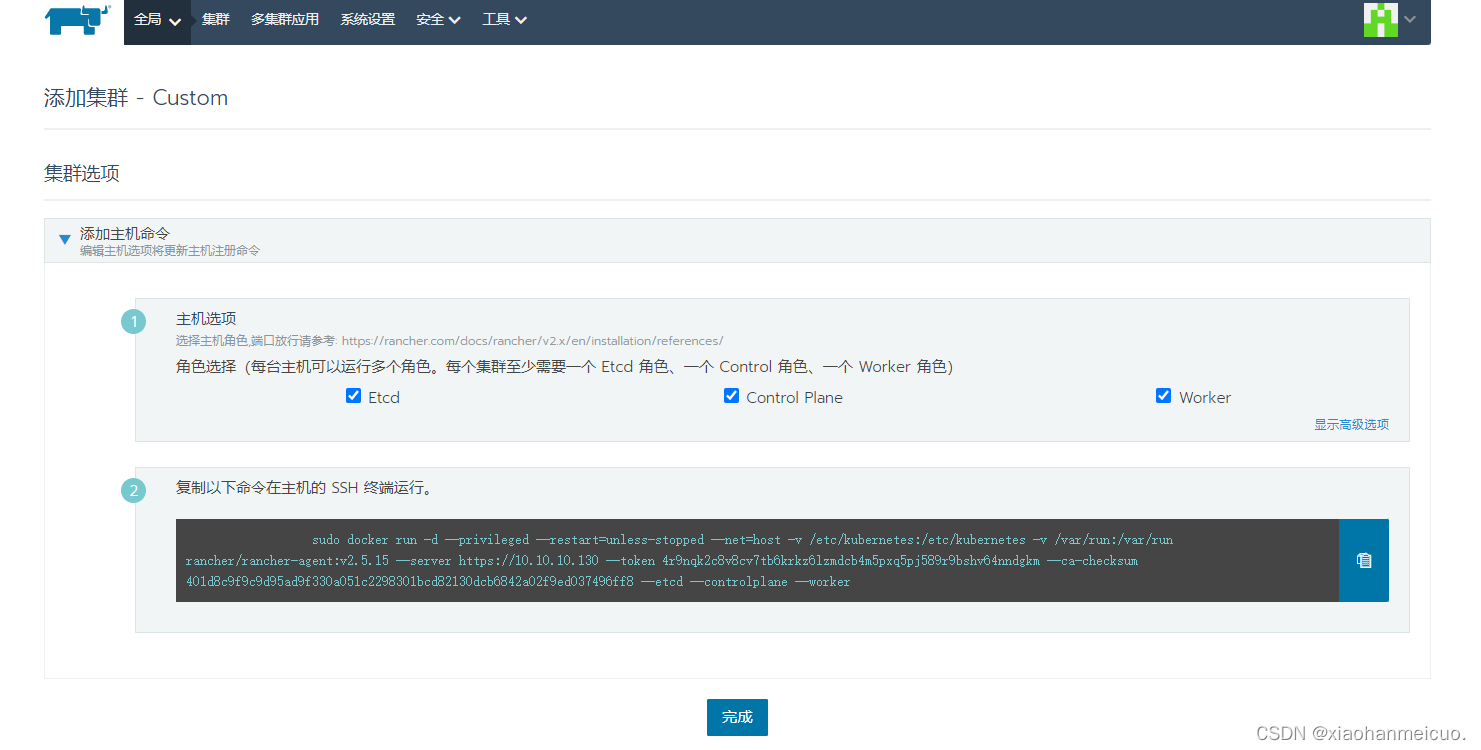

1) Add the master nodes

```shell
# With two master nodes, run the command copied from Rancher on both of them
sudo docker run -d --privileged --restart=unless-stopped --net=host \
  -v /etc/kubernetes:/etc/kubernetes -v /var/run:/var/run \
  rancher/rancher-agent:v2.5.15 --server https://10.10.10.130 \
  --token 4r9nqk2c8v8cv7tb6krkz6lzmdcb4m5pxq5pj589r9bshv64nndgkm \
  --ca-checksum 401d8c9f9c9d95ad9f330a051c2298301bcd82130dcb6842a02f9ed037496ff8 \
  --etcd --controlplane --worker

# This step can be slow; how long it takes depends on your hardware and network speed
```
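Both masters register with `--etcd`, so this cluster has two etcd members. etcd only accepts writes while a majority (quorum) of its members is up. With two members, the majority is both of them. If either master goes down, etcd stops accepting changes, and so does the Kubernetes API. The arithmetic is easy to check. The following sketch uses hypothetical function names.

```shell
# etcd_quorum N: members that must be up for etcd to keep accepting writes (majority of N)
etcd_quorum() { echo $(( $1 / 2 + 1 )); }

# etcd_fault_tolerance N: members that can fail without losing quorum
etcd_fault_tolerance() { echo $(( $1 - ($1 / 2 + 1) )); }

for n in 1 2 3 4 5; do
  echo "etcd members=$n quorum=$(etcd_quorum "$n") tolerated failures=$(etcd_fault_tolerance "$n")"
done
```

Two etcd members tolerate no failures. Three members are the smallest count that survives losing one. This is why an odd number of etcd nodes, at least three, is the usual recommendation for a highly available control plane.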

2) Add the worker nodes

```shell
# On each worker node, run the command copied from Rancher
sudo docker run -d --privileged --restart=unless-stopped --net=host \
  -v /etc/kubernetes:/etc/kubernetes -v /var/run:/var/run \
  rancher/rancher-agent:v2.5.15 --server https://10.10.10.130 \
  --token 4r9nqk2c8v8cv7tb6krkz6lzmdcb4m5pxq5pj589r9bshv64nndgkm \
  --ca-checksum 401d8c9f9c9d95ad9f330a051c2298301bcd82130dcb6842a02f9ed037496ff8 \
  --worker

# Wait until the nodes have joined and started working
```
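The master and worker registration commands differ only in their role flags. If you script node registration instead of copying from the UI each time, a helper can build the command from the server URL, token, and CA checksum shown in Rancher. This is a hedged sketch. `agent_role_flags` and `build_agent_cmd` are hypothetical, and the token and checksum must still come from your own cluster's registration page.

```shell
# agent_role_flags ROLE: role flags used above ("master" or "node")
agent_role_flags() {
  case "$1" in
    master) echo "--etcd --controlplane --worker" ;;
    node)   echo "--worker" ;;
    *)      return 1 ;;
  esac
}

# build_agent_cmd SERVER TOKEN CA_CHECKSUM ROLE: print the registration command for ROLE
build_agent_cmd() {
  local flags
  flags=$(agent_role_flags "$4") || return 1
  printf 'sudo docker run -d --privileged --restart=unless-stopped --net=host -v /etc/kubernetes:/etc/kubernetes -v /var/run:/var/run rancher/rancher-agent:v2.5.15 --server %s --token %s --ca-checksum %s %s\n' \
    "$1" "$2" "$3" "$flags"
}
```

The helper only prints the command. Review the output before running it on the target node. After registration, `docker ps --filter ancestor=rancher/rancher-agent:v2.5.15` on the node should show the agent container.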

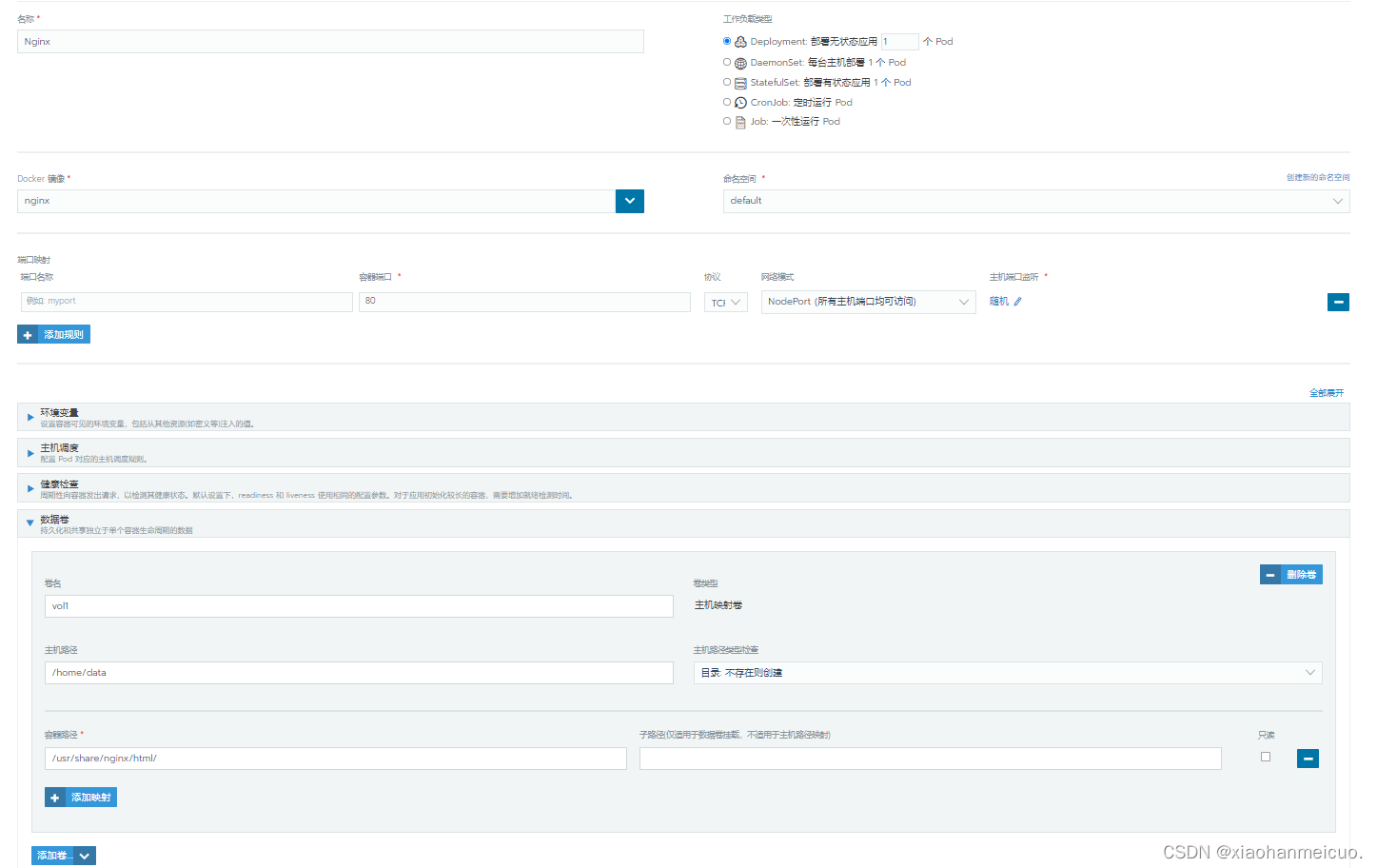

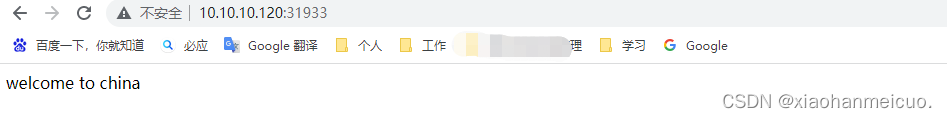

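2. Deploy and run an Nginx service

Define the container to suit your own needs; in my case the workload has already been created.

```shell
# Write a test page
[root@k8s-master01 ~]# cd /home/data/
[root@k8s-master01 data]# echo "welcome to china" > index.html
```

Then access the service via the node's IP address and port.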
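The workload here is created in the Rancher UI. For reference, roughly the same setup can be applied with kubectl. This is a hedged sketch that assumes the following:

- `/home/data` on k8s-master01 is mounted as nginx's web root. `/usr/share/nginx/html` is the default root of the official nginx image.
- The pod is pinned to k8s-master01 via the `kubernetes.io/hostname` label, because the test page exists only on that host.
- The service is exposed as a NodePort. The names `nginx-test` and `nginx_manifest`, and the port 30080, are hypothetical.

```shell
# nginx_manifest NODE NODE_PORT: print a Deployment plus NodePort Service serving /home/data from NODE
nginx_manifest() {
  local node=$1 port=$2
  case "$port" in ''|*[!0-9]*) echo "NODE_PORT must be a number" >&2; return 1 ;; esac
  # Default Kubernetes NodePort range is 30000-32767
  if [ "$port" -lt 30000 ] || [ "$port" -gt 32767 ]; then
    echo "NODE_PORT must be within 30000-32767" >&2
    return 1
  fi
  cat <<EOF
apiVersion: apps/v1
kind: Deployment
metadata:
  name: nginx-test
spec:
  replicas: 1
  selector:
    matchLabels:
      app: nginx-test
  template:
    metadata:
      labels:
        app: nginx-test
    spec:
      nodeSelector:
        kubernetes.io/hostname: ${node}
      containers:
        - name: nginx
          image: nginx
          ports:
            - containerPort: 80
          volumeMounts:
            - name: html
              mountPath: /usr/share/nginx/html
      volumes:
        - name: html
          hostPath:
            path: /home/data
            type: Directory
---
apiVersion: v1
kind: Service
metadata:
  name: nginx-test
spec:
  type: NodePort
  selector:
    app: nginx-test
  ports:
    - port: 80
      targetPort: 80
      nodePort: ${port}
EOF
}
```

Usage, once kubectl is configured: `nginx_manifest k8s-master01 30080 | kubectl apply -f -`. After that, `curl http://10.10.10.120:30080` should return the test page.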

Other operations on the container:

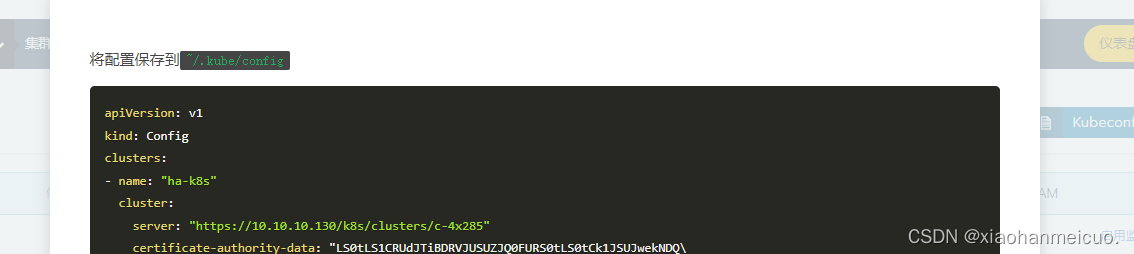

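3. Configure kubectl on the host

By default, a Kubernetes cluster installed by Rancher cannot be managed directly from the hosts' command line with kubectl. That becomes a real headache if Rancher ever goes down.

1) Download the kubectl binary on the host

```shell
# Download a kubectl that matches the cluster version (v1.19.16 in the node list below);
# kubectl is only supported within one minor version of the API server
[root@k8s-master02 ~]# curl -LO https://dl.k8s.io/release/v1.19.16/bin/linux/amd64/kubectl
[root@k8s-master02 ~]# chmod +x ./kubectl
[root@k8s-master02 ~]# mv ./kubectl /usr/local/bin/kubectl
```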
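Kubernetes supports kubectl only within one minor version (older or newer) of kube-apiserver. It is therefore worth checking the downloaded binary against the cluster version, which is v1.19.16 in the node list below. This is a minimal sketch. The helper names are hypothetical, and it assumes major version 1.

```shell
# minor_of VERSION: print the minor number of a version such as v1.19.16 (assumes major version 1)
minor_of() {
  local v=${1#v}     # strip a leading "v": 1.19.16
  v=${v#*.}          # drop the major part:  19.16
  echo "${v%%.*}"    # keep the minor part:  19
}

# kubectl_skew_ok CLIENT_VERSION SERVER_VERSION: exit 0 if they differ by at most one minor version
kubectl_skew_ok() {
  local d
  d=$(( $(minor_of "$1") - $(minor_of "$2") ))
  [ "${d#-}" -le 1 ]
}
```

Pass the version you downloaded and the VERSION column from `kubectl get node`. For example, `kubectl_skew_ok v1.20.0 v1.19.16` succeeds, while `kubectl_skew_ok v1.28.2 v1.19.16` fails.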

2) Copy the cluster's kubeconfig file from Rancher

```shell
[root@k8s-master02 ~]# mkdir -p ~/.kube
[root@k8s-master02 ~]# vim ~/.kube/config
```

Paste in the kubeconfig copied from the cluster page in Rancher:

```yaml
apiVersion: v1
kind: Config
clusters:
- name: "ha-k8s"
  cluster:
    server: "https://10.10.10.130/k8s/clusters/c-4x285"
    certificate-authority-data: "LS0tLS1CRUdJTiBDRVJUSUZJQ0FURS0tLS0tCk1JSUJwekNDQ\
      VUyZ0F3SUJBZ0lCQURBS0JnZ3Foa2pPUFFRREFqQTdNUnd3R2dZRFZRUUtFeE5rZVc1aGJXbGoKY\
      kdsemRHVnVaWEl0YjNKbk1Sc3dHUVlEVlFRREV4SmtlVzVoYldsamJHbHpkR1Z1WlhJdFkyRXdIa\
      GNOTWpJdwpOekF4TURJeE1qRXlXaGNOTXpJd05qSTRNREl4TWpFeVdqQTdNUnd3R2dZRFZRUUtFe\
      E5rZVc1aGJXbGpiR2x6CmRHVnVaWEl0YjNKbk1Sc3dHUVlEVlFRREV4SmtlVzVoYldsamJHbHpkR\
      1Z1WlhJdFkyRXdXVEFUQmdjcWhrak8KUFFJQkJnZ3Foa2pPUFFNQkJ3TkNBQVRTdnozV21oNG5GW\
      Wl2VDlmb3dDaFR2c0ZPWi8zVmVidldGMkZna3BwNQpFVXZSck5VeWczcVVLVnBicmJBc0xCKzd4S\
      FpaRHQrR0MwSDdnbE0xR2dvcW8wSXdRREFPQmdOVkhROEJBZjhFCkJBTUNBcVF3RHdZRFZSMFRBU\
      UgvQkFVd0F3RUIvekFkQmdOVkhRNEVGZ1FVTFd3RTNrZlV5bTBJSUwvWGVZaDQKT1U2L2o0SXdDZ\
      1lJS29aSXpqMEVBd0lEU0FBd1JRSWhBSUMrY1FHNU9mZzN1Wm9tTmpFNTRSM245Vi9WS2J1bAppb\
      CtvcHVzZ1pHc2lBaUExbzF3RUkvOUluVVpNOXRJYkJpL2poMVdBNVduVUdnODNkU244UXVnWC93P\
      T0KLS0tLS1FTkQgQ0VSVElGSUNBVEUtLS0tLQ=="
- name: "ha-k8s-k8s-master01"
  cluster:
    server: "https://10.10.10.120:6443"
    certificate-authority-data: "LS0tLS1CRUdJTiBDRVJUSUZJQ0FURS0tLS0tCk1JSUM0VENDQ\
      WNtZ0F3SUJBZ0lCQURBTkJna3Foa2lHOXcwQkFRc0ZBREFTTVJBd0RnWURWUVFERXdkcmRXSmwKT\
      FdOaE1CNFhEVEl5TURjd01UQXlNemt3TlZvWERUTXlNRFl5T0RBeU16a3dOVm93RWpFUU1BNEdBM\
      VVFQXhNSAphM1ZpWlMxallUQ0NBU0l3RFFZSktvWklodmNOQVFFQkJRQURnZ0VQQURDQ0FRb0NnZ\
      0VCQU01UVAwemllb1U5ClZtN0VyU1YwZ2ZRZWJoKzBIUXFXcE4vdHdzNXQrd1BMRG04amFkZ1g3a\
      E43d2dtMmRZV3M0UE9QQjYralg3WmkKb1pnRVR1dHVDZU9MZTQ3dmY4aGIzdXhKeU4zYXlBODZ5T\
      GhncFI2YXpBNUwyUG45eFZWNDlBT01ManZTYVRWeAowQU1NWHVDd2tsM3ZTVVYzWVYwbVlXS21IQ\
      3RFbU9nTitCS3JPUjYxbEcveEVVU0M3SHpGOWxXS25iVlhNZVJQCnBTK3R4REo2U0tOL0E5ZTdOM\
      lFtUUN1M0xZVFVVU0dNQzYvQjlML0dIZTZBUVVjVTZ3VHdzVkZBRVdZZWdnWEYKandpQzBuUkFxb\
      FdoOFpmaC9wOTV5VWdyekdJOXduNy9TcURIWFBYdFhraTltK1FoOHJYc3dLRnptdkRZOGNRMgpvU\
      Hc5cEhqN1RFMENBd0VBQWFOQ01FQXdEZ1lEVlIwUEFRSC9CQVFEQWdLa01BOEdBMVVkRXdFQi93U\
      UZNQU1CCkFmOHdIUVlEVlIwT0JCWUVGSFJxR1JtS1ZFUUVQNjh6azlYR1Iwd2IyUFA3TUEwR0NTc\
      UdTSWIzRFFFQkN3VUEKQTRJQkFRQnB5N3FRd3NyYVdhbkZLSWFTZDJTN3ZzQnVoS2hvdTBYNUhxU\
      FFOa1FNZFJwYVlZQVRQclc0Y0p4WQpzVHZOT0E4Y09GREdYVlc2U3NvN0JnalBlWWdTY08xQnBCV\
      0xlbEYrUFA1YkhDYVExODhTZEFtbmlGSCszSWczClB1Q0RFc1RuUlArZlMxNmFxeGRGZzRkT0FyW\
      mF5Rzk2K2VLclVGdzNxd0hzWjBqUTdVR1pzcFRDQ3Ard0ZLN0IKUVNCSktkRnMxS2FXd1FHSUxqU\
      nY4ZVg1R2lhY0lJUXB5ZXBiVno3TEtDNXlFUGRONUM2bGJaanVwWlRhY2RFVAowcDAvRDU0RS9RW\
      TlhM0RWaHYxVGJtdjJHT2xWUGhGalp5cnVWaGpuOUpGeEkrZHZxSXNKMEhIOUNUbXJKUHhDCndGa\
      3JuU25UK3FMcnUwQ1FOdG02SVlrc05KYzEKLS0tLS1FTkQgQ0VSVElGSUNBVEUtLS0tLQo="
- name: "ha-k8s-k8s-master02"
  cluster:
    server: "https://10.10.10.110:6443"
    certificate-authority-data: "LS0tLS1CRUdJTiBDRVJUSUZJQ0FURS0tLS0tCk1JSUM0VENDQ\
      WNtZ0F3SUJBZ0lCQURBTkJna3Foa2lHOXcwQkFRc0ZBREFTTVJBd0RnWURWUVFERXdkcmRXSmwKT\
      FdOaE1CNFhEVEl5TURjd01UQXlNemt3TlZvWERUTXlNRFl5T0RBeU16a3dOVm93RWpFUU1BNEdBM\
      VVFQXhNSAphM1ZpWlMxallUQ0NBU0l3RFFZSktvWklodmNOQVFFQkJRQURnZ0VQQURDQ0FRb0NnZ\
      0VCQU01UVAwemllb1U5ClZtN0VyU1YwZ2ZRZWJoKzBIUXFXcE4vdHdzNXQrd1BMRG04amFkZ1g3a\
      E43d2dtMmRZV3M0UE9QQjYralg3WmkKb1pnRVR1dHVDZU9MZTQ3dmY4aGIzdXhKeU4zYXlBODZ5T\
      GhncFI2YXpBNUwyUG45eFZWNDlBT01ManZTYVRWeAowQU1NWHVDd2tsM3ZTVVYzWVYwbVlXS21IQ\
      3RFbU9nTitCS3JPUjYxbEcveEVVU0M3SHpGOWxXS25iVlhNZVJQCnBTK3R4REo2U0tOL0E5ZTdOM\
      lFtUUN1M0xZVFVVU0dNQzYvQjlML0dIZTZBUVVjVTZ3VHdzVkZBRVdZZWdnWEYKandpQzBuUkFxb\
      FdoOFpmaC9wOTV5VWdyekdJOXduNy9TcURIWFBYdFhraTltK1FoOHJYc3dLRnptdkRZOGNRMgpvU\
      Hc5cEhqN1RFMENBd0VBQWFOQ01FQXdEZ1lEVlIwUEFRSC9CQVFEQWdLa01BOEdBMVVkRXdFQi93U\
      UZNQU1CCkFmOHdIUVlEVlIwT0JCWUVGSFJxR1JtS1ZFUUVQNjh6azlYR1Iwd2IyUFA3TUEwR0NTc\
      UdTSWIzRFFFQkN3VUEKQTRJQkFRQnB5N3FRd3NyYVdhbkZLSWFTZDJTN3ZzQnVoS2hvdTBYNUhxU\
      FFOa1FNZFJwYVlZQVRQclc0Y0p4WQpzVHZOT0E4Y09GREdYVlc2U3NvN0JnalBlWWdTY08xQnBCV\
      0xlbEYrUFA1YkhDYVExODhTZEFtbmlGSCszSWczClB1Q0RFc1RuUlArZlMxNmFxeGRGZzRkT0FyW\
      mF5Rzk2K2VLclVGdzNxd0hzWjBqUTdVR1pzcFRDQ3Ard0ZLN0IKUVNCSktkRnMxS2FXd1FHSUxqU\
      nY4ZVg1R2lhY0lJUXB5ZXBiVno3TEtDNXlFUGRONUM2bGJaanVwWlRhY2RFVAowcDAvRDU0RS9RW\
      TlhM0RWaHYxVGJtdjJHT2xWUGhGalp5cnVWaGpuOUpGeEkrZHZxSXNKMEhIOUNUbXJKUHhDCndGa\
      3JuU25UK3FMcnUwQ1FOdG02SVlrc05KYzEKLS0tLS1FTkQgQ0VSVElGSUNBVEUtLS0tLQo="

users:
- name: "ha-k8s"
  user:
    token: "kubeconfig-user-m25dm.c-4x285:kxvdw7jdqpc8qgm9ttpxg49m4vz2s249b6sb49cfp6n4lr9nmc7nzm"

contexts:
- name: "ha-k8s"
  context:
    user: "ha-k8s"
    cluster: "ha-k8s"
- name: "ha-k8s-k8s-master01"
  context:
    user: "ha-k8s"
    cluster: "ha-k8s-k8s-master01"
- name: "ha-k8s-k8s-master02"
  context:
    user: "ha-k8s"
    cluster: "ha-k8s-k8s-master02"

current-context: "ha-k8s"
```
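This kubeconfig defines three contexts. `ha-k8s` goes through the Rancher server (`https://10.10.10.130/k8s/clusters/c-4x285`). `ha-k8s-k8s-master01` and `ha-k8s-k8s-master02` talk straight to kube-apiserver on port 6443 of each master, through Rancher's authorized cluster endpoint. The direct contexts are what keep kubectl usable when Rancher is down. The following is a hedged sketch that picks the first context whose API server answers `/healthz`. `first_healthy_context` is a hypothetical helper. The probe uses only standard kubectl flags (`--context`, `--request-timeout`, `get --raw`).

```shell
# first_healthy_context CONTEXT...: print the first context whose API server answers /healthz
first_healthy_context() {
  local ctx
  for ctx in "$@"; do
    if kubectl --context "$ctx" --request-timeout=5s get --raw=/healthz >/dev/null 2>&1; then
      echo "$ctx"
      return 0
    fi
  done
  return 1   # none of the contexts responded
}
```

Usage: `kubectl config use-context "$(first_healthy_context ha-k8s ha-k8s-k8s-master01 ha-k8s-k8s-master02)"`. This falls back to a master's API server only when the Rancher-proxied context does not respond.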

3) Test from the host

```shell
[root@k8s-master02 ~]# kubectl get node
NAME           STATUS   ROLES                      AGE     VERSION
k8s-master01   Ready    controlplane,etcd,worker   3h27m   v1.19.16
k8s-master02   Ready    controlplane,etcd,worker   3h27m   v1.19.16
k8s-node01     Ready    worker                     150m    v1.19.16
k8s-node02     Ready    worker                     170m    v1.19.16
[root@k8s-master02 ~]# kubectl get ns
NAME              STATUS   AGE
cattle-system     Active   3h27m
default           Active   3h28m
fleet-system      Active   3h26m
ingress-nginx     Active   3h27m
kube-node-lease   Active   3h28m
kube-public       Active   3h28m
kube-system       Active   3h28m
security-scan     Active   3h26m
[root@k8s-master02 ~]# kubectl get pod -A
NAMESPACE       NAME                                    READY   STATUS    RESTARTS   AGE
cattle-system   cattle-cluster-agent-7697fd77df-vfssz   1/1     Running   4          3h27m
cattle-system   cattle-node-agent-5kkkx                 1/1     Running   0          3h27m
```
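The node check above is easy to turn into a scriptable health check, for example after rebooting a node. This is a minimal sketch. `not_ready_nodes` is a hypothetical helper that reads `kubectl get node --no-headers` output. It treats a cordoned node (`Ready,SchedulingDisabled`) as Ready and anything else as not Ready.

```shell
# not_ready_nodes: read `kubectl get node --no-headers` on stdin,
# print every node whose STATUS is not Ready, and exit 1 if there are any
not_ready_nodes() {
  awk '{
         split($2, st, ",")            # "Ready,SchedulingDisabled" -> "Ready"
         if (st[1] != "Ready") { print $1; bad = 1 }
       }
       END { exit bad }'
}
```

Usage: `kubectl get node --no-headers | not_ready_nodes && echo "all nodes Ready"`.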


Original article: https://blog.csdn.net/yeyslspi59/article/details/125540549