
Machine Learning Day 4

Hyperparameter Selection Methods

Cross-Validation

1. Definition of Cross-Validation

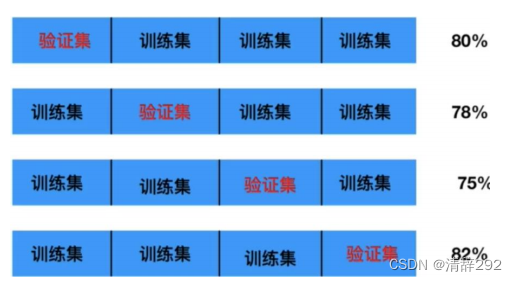

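Cross-validation is a way of partitioning data: the training set is split into n folds, one fold is held out as the validation set, and the remaining n-1 folds are used for training. Repeating this so that every fold serves once as the validation set gives n scores for the same model.

2. How Cross-Validation Works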

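Suppose the training set is split into cv=4 folds:

1. Round 1: use the first fold as the validation set and train on the other three.

2. Round 2: use the second fold as the validation set and train on the other three.

3. ... and so on, for 4 training runs and 4 evaluations in total.

4. Average the 4 validation scores; this mean is the model's cross-validation score.

5. Repeat this for each candidate hyperparameter value (for KNN, each K). If, say, the K=5 model scores best, retrain it once on the full training set (training folds plus validation fold), then evaluate that K=5 model on the test set.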
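The five steps above can be sketched with scikit-learn's `KFold`. This is a minimal illustration, not the author's code: the helper name `manual_cv_score`, the candidate K values 3/5/7, shuffling with `random_state=22`, and leaving the features unscaled (to keep the focus on the fold mechanics) are all assumptions made for the example.

```python
from functools import partial

import numpy as np
from sklearn.datasets import load_breast_cancer
from sklearn.model_selection import KFold, train_test_split
from sklearn.neighbors import KNeighborsClassifier


def manual_cv_score(make_model, x, y, n_folds=4, seed=22):
    """Return (mean validation accuracy, list of per-fold accuracies)."""
    kf = KFold(n_splits=n_folds, shuffle=True, random_state=seed)
    fold_scores = []
    for train_idx, val_idx in kf.split(x):
        model = make_model()                    # fresh, untrained model for this round
        model.fit(x[train_idx], y[train_idx])   # train on the other n-1 folds
        fold_scores.append(model.score(x[val_idx], y[val_idx]))  # accuracy on the held-out fold
    return float(np.mean(fold_scores)), fold_scores


data = load_breast_cancer()
x_train, x_test, y_train, y_test = train_test_split(
    data.data, data.target, test_size=0.2, random_state=22)

# Steps 1-4: cross-validation score for each candidate K (training set only)
cv_results = {}
for k in (3, 5, 7):
    mean_score, _ = manual_cv_score(partial(KNeighborsClassifier, n_neighbors=k), x_train, y_train)
    cv_results[k] = mean_score

best_k = max(cv_results, key=cv_results.get)

# Step 5: retrain the best K on the full training set, then evaluate once on the test set
final_model = KNeighborsClassifier(n_neighbors=best_k).fit(x_train, y_train)
test_accuracy = final_model.score(x_test, y_test)
print(cv_results, best_k, test_accuracy)
```

In practice `cross_val_score(estimator, x_train, y_train, cv=4)` runs the same per-fold loop for you; for classifiers it uses stratified folds without shuffling, so its numbers will differ slightly from this sketch.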

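Grid Search

Why use grid search?

Grid search is an automated hyperparameter-tuning method: it exhaustively tries every combination of the candidate hyperparameter values and keeps the best-scoring configuration. Because a model's performance depends on several hyperparameters, tuning them by hand is tedious and inefficient. Grid search automates generating the combinations, training a model for each, and evaluating it, so we can concentrate on model design. Combined with cross-validation, each combination is scored on several folds rather than on a single split, which makes the chosen configuration more reliable and stable.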
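A minimal sketch of grid search with scikit-learn's `GridSearchCV`, assuming the Iris dataset and an illustrative grid over two KNN hyperparameters (`n_neighbors` and `weights`); the dataset and candidate values are choices made for this example, not from the original. `ParameterGrid` is used only to show that the grid is the Cartesian product of the candidate lists.

```python
from sklearn.datasets import load_iris
from sklearn.model_selection import GridSearchCV, ParameterGrid
from sklearn.neighbors import KNeighborsClassifier

# Candidate values for two KNN hyperparameters (illustrative choices)
param_grid = {
    "n_neighbors": [1, 3, 5, 7],
    "weights": ["uniform", "distance"],
}

# The "grid" is the Cartesian product: 4 x 2 = 8 combinations
combinations = list(ParameterGrid(param_grid))
print(len(combinations))  # 8

x, y = load_iris(return_X_y=True)

# Each combination is scored with 5-fold cross-validation (8 x 5 = 40 fits);
# the best one is then refit on all of x, y (refit=True is the default)
search = GridSearchCV(KNeighborsClassifier(), param_grid, cv=5, scoring="accuracy")
search.fit(x, y)

print(search.best_params_)
print(search.best_score_)
```

For brevity this fits on the whole dataset; in a real workflow you would run the search on the training set only and keep the test set for the final evaluation, as in step 5 of the cross-validation procedure.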

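Case Study: Predicting Whether a Breast Tumor Is Benign or Malignant

Task: predict whether a breast tumor is benign or malignant.

Requirement: using the clinical features in the breast cancer dataset, build a machine learning model that predicts whether a tumor is benign or malignant.

Evaluation metric: model accuracy.

Hints:

1. Data preprocessing: standardize the data before building the model so that all features share the same scale. KNN is distance-based, so a feature with a large numeric range would otherwise dominate the distance.

2. Algorithm: use the k-nearest neighbors (KNN) algorithm for this classification task.

3. Tuning: use cross-validation to choose the best K and improve the model's performance.

4. Evaluation: use accuracy as the metric to evaluate the model.

Code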

```python
import matplotlib.pyplot as plt
from sklearn.datasets import load_breast_cancer
from sklearn.preprocessing import StandardScaler
from sklearn.model_selection import train_test_split
from sklearn.neighbors import KNeighborsClassifier
from sklearn.model_selection import cross_val_score
from sklearn.metrics import accuracy_score

# Load the dataset
breast_cancer = load_breast_cancer()
# print(breast_cancer)

# Basic data handling: split into training and test sets
x_train, x_test, y_train, y_test = train_test_split(breast_cancer.data, breast_cancer.target, test_size=0.2, random_state=22)

# Preprocessing: standardize the features.
# Fit the scaler on the training set only, then apply the same transform to the test set
transfer = StandardScaler()
x_train = transfer.fit_transform(x_train)
x_test = transfer.transform(x_test)
# print(x_train)

# Model training (baseline with a fixed K, kept commented out)
# estimator = KNeighborsClassifier(n_neighbors=5)
# estimator.fit(x_train, y_train)
# # Model evaluation
# myscore = estimator.score(x_test, y_test)
# print(myscore)

# Range of K values to try
k_range = range(1, 10)
k_scores = []

# Cross-validation: mean 5-fold accuracy for each K
for k in k_range:
    estimator = KNeighborsClassifier(n_neighbors=k)
    scores = cross_val_score(estimator, x_train, y_train, cv=5, scoring='accuracy')
    k_scores.append(scores.mean())

# Plot K against cross-validated accuracy
plt.plot(k_range, k_scores)
plt.xlabel('Value of K for KNN')
plt.ylabel('Cross-Validated Accuracy')
plt.title('Accuracy scores for Values of K')
plt.show()

# Find the best K (map the index of the highest score back to its K value)
optimal_k = k_range[k_scores.index(max(k_scores))]
print(optimal_k)

# Retrain the model with the best K on the full training set
estimator_optimal = KNeighborsClassifier(n_neighbors=optimal_k)
estimator_optimal.fit(x_train, y_train)

# Predict on the test set with the best model
y_pred_optimal = estimator_optimal.predict(x_test)

# Accuracy of the best model on the test set
accuracy_optimal = accuracy_score(y_test, y_pred_optimal)
print(accuracy_optimal)
```

Result plot
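In the case-study code, the scaler is fit on all of `x_train` before `cross_val_score` runs, so each validation fold's mean and standard deviation leak into the scaling it is evaluated with. A minimal sketch of a leakage-free variant, assuming scikit-learn's `Pipeline` combined with `GridSearchCV` (a swapped-in technique, not the author's code): the scaler is refit inside every fold, and the K range 1 to 9 mirrors `k_range = range(1, 10)` above. Exact scores may differ slightly from the manual loop.

```python
from sklearn.datasets import load_breast_cancer
from sklearn.model_selection import GridSearchCV, train_test_split
from sklearn.neighbors import KNeighborsClassifier
from sklearn.pipeline import Pipeline
from sklearn.preprocessing import StandardScaler

data = load_breast_cancer()
x_train, x_test, y_train, y_test = train_test_split(
    data.data, data.target, test_size=0.2, random_state=22)

# Scaler and classifier are chained, so during cross-validation the scaler is
# fit only on the training folds of each split, never on the validation fold
pipe = Pipeline([
    ("scaler", StandardScaler()),
    ("knn", KNeighborsClassifier()),
])

# Parameters of a pipeline step are addressed as "<step name>__<parameter>"
param_grid = {"knn__n_neighbors": list(range(1, 10))}

search = GridSearchCV(pipe, param_grid, cv=5, scoring="accuracy")
search.fit(x_train, y_train)  # pass unscaled data; the pipeline scales it

best_k = search.best_params_["knn__n_neighbors"]
# best_estimator_ was refit on all of x_train; scoring scales x_test with the
# training-set mean/std before running KNN
test_accuracy = search.score(x_test, y_test)
print(best_k, search.best_score_, test_accuracy)
```

Because the pipeline owns the scaling step, the same object can be applied to new raw samples with `search.predict(...)` without remembering to transform them first.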


Original article: https://blog.csdn.net/qq_74261455/article/details/138207212