
After deploying xxl-job on k8s, job execution fails with Connection Refused

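I. Background

1.1 Problem description

Earlier, the flannel network plugin had failed to install, so xxl-job reported a refused connection whenever it executed a job, as shown below:

Even after flannel was installed successfully, however, the connection still failed with the same error.

1.2 Checking the network

Use ifconfig to inspect the network interfaces on each k8s node.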

1) master: cni0 and flannel.1 are on matching subnets, both in 10.244.0.x

    [root@zzyk8s01 scripts]# ifconfig
    cni0: flags=4163<UP,BROADCAST,RUNNING,MULTICAST>  mtu 1450
            inet 10.244.0.1  netmask 255.255.255.0  broadcast 10.244.0.255
            inet6 fe80::1c6c:c1ff:fe59:846d  prefixlen 64  scopeid 0x20<link>
            ether 1e:6c:c1:59:84:6d  txqueuelen 1000  (Ethernet)
            RX packets 494717  bytes 39902995 (38.0 MiB)
            RX errors 0  dropped 0  overruns 0  frame 0
            TX packets 792397  bytes 57936901 (55.2 MiB)
            TX errors 0  dropped 0 overruns 0  carrier 0  collisions 0

    flannel.1: flags=4163<UP,BROADCAST,RUNNING,MULTICAST>  mtu 1450
            inet 10.244.0.0  netmask 255.255.255.255  broadcast 0.0.0.0
            inet6 fe80::70a0:e8ff:fe34:99ba  prefixlen 64  scopeid 0x20<link>
            ether 72:a0:e8:34:99:ba  txqueuelen 0  (Ethernet)
            RX packets 0  bytes 0 (0.0 B)
            RX errors 0  dropped 0  overruns 0  frame 0
            TX packets 0  bytes 0 (0.0 B)
            TX errors 0  dropped 8 overruns 0  carrier 0  collisions 0

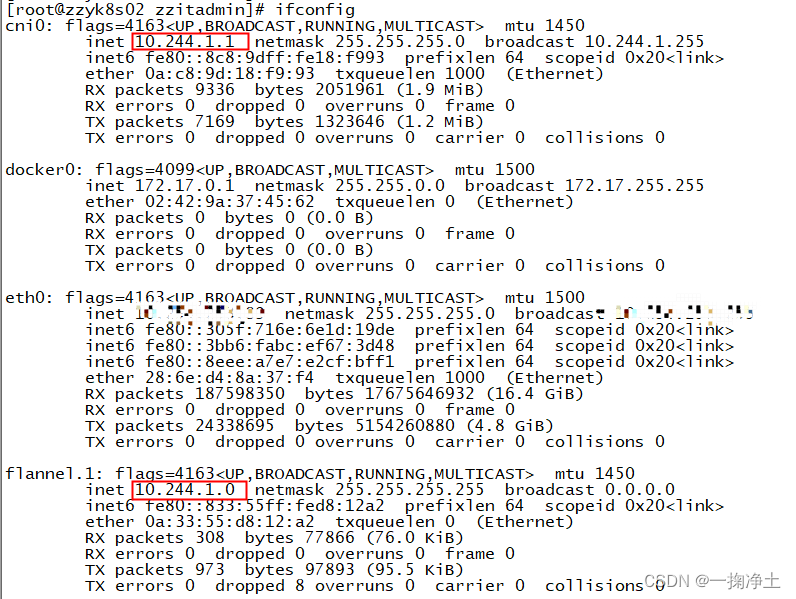

2) worker1: cni0 and flannel.1 are on different subnets; cni0 should be 10.244.1.1

    [root@zzyk8s02 zzitadmin]# ifconfig
    cni0: flags=4163<UP,BROADCAST,RUNNING,MULTICAST>  mtu 1450
            inet 10.244.0.1  netmask 255.255.255.0  broadcast 10.244.0.255
            inet6 fe80::a432:5bff:fea6:bef7  prefixlen 64  scopeid 0x20<link>
            ether a6:32:5b:a6:be:f7  txqueuelen 1000  (Ethernet)
            RX packets 23183004  bytes 4647637027 (4.3 GiB)
            RX errors 0  dropped 0  overruns 0  frame 0
            TX packets 17425600  bytes 2903842258 (2.7 GiB)
            TX errors 0  dropped 0 overruns 0  carrier 0  collisions 0

    flannel.1: flags=4163<UP,BROADCAST,RUNNING,MULTICAST>  mtu 1450
            inet 10.244.1.0  netmask 255.255.255.255  broadcast 0.0.0.0
            inet6 fe80::acf6:b2ff:fe89:9d1b  prefixlen 64  scopeid 0x20<link>
            ether ae:f6:b2:89:9d:1b  txqueuelen 0  (Ethernet)
            RX packets 0  bytes 0 (0.0 B)
            RX errors 0  dropped 0  overruns 0  frame 0
            TX packets 0  bytes 0 (0.0 B)
            TX errors 0  dropped 8 overruns 0  carrier 0  collisions 0

3) worker2: cni0 and flannel.1 are mismatched here as well; cni0 should be 10.244.2.1

    [root@zzyk8s03 zzitadmin]# ifconfig
    cni0: flags=4163<UP,BROADCAST,RUNNING,MULTICAST>  mtu 1450
            inet 10.244.0.1  netmask 255.255.255.0  broadcast 10.244.0.255
            inet6 fe80::7878:40ff:fe29:d7de  prefixlen 64  scopeid 0x20<link>
            ether 7a:78:40:29:d7:de  txqueuelen 1000  (Ethernet)
            RX packets 493875  bytes 103157632 (98.3 MiB)
            RX errors 0  dropped 0  overruns 0  frame 0
            TX packets 369347  bytes 42352328 (40.3 MiB)
            TX errors 0  dropped 0 overruns 0  carrier 0  collisions 0

    flannel.1: flags=4163<UP,BROADCAST,RUNNING,MULTICAST>  mtu 1450
            inet 10.244.2.0  netmask 255.255.255.255  broadcast 0.0.0.0
            inet6 fe80::bcaf:54ff:feca:bd0d  prefixlen 64  scopeid 0x20<link>
            ether be:af:54:ca:bd:0d  txqueuelen 0  (Ethernet)
            RX packets 0  bytes 0 (0.0 B)
            RX errors 0  dropped 0  overruns 0  frame 0
            TX packets 0  bytes 0 (0.0 B)
            TX errors 0  dropped 8 overruns 0  carrier 0  collisions 0
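The per-node comparison above can also be scripted. The following is only a minimal sketch, assuming the /24 per-node subnets seen in the ifconfig output (netmask 255.255.255.0) and that the `ip` command from iproute2 is available; the function names `same_subnet24`, `iface_ipv4`, and `report_match` are hypothetical helpers, not part of flannel or Kubernetes.

```shell
#!/usr/bin/env bash

# Hypothetical helper: succeed when two IPv4 addresses share the same /24 prefix.
# ${1%.*} strips the last octet, e.g. 10.244.1.0 -> 10.244.1
same_subnet24() {
  [ "${1%.*}" = "${2%.*}" ]
}

# Hypothetical helper: print the first IPv4 address of an interface
# (prints nothing if the interface does not exist).
iface_ipv4() {
  ip -4 -o addr show dev "$1" 2>/dev/null |
    awk '{ split($4, a, "/"); print a[1]; exit }'
}

# Hypothetical helper: compare a cni0 address with a flannel.1 address
# and print a one-line verdict; returns 1 on mismatch.
report_match() {
  if same_subnet24 "$1" "$2"; then
    echo "OK: cni0=$1 flannel.1=$2"
  else
    echo "MISMATCH: cni0=$1 flannel.1=$2"
    return 1
  fi
}
```

On a node, it could be called as `report_match "$(iface_ipv4 cni0)" "$(iface_ipv4 flannel.1)"`. With the worker1 addresses shown above (10.244.0.1 and 10.244.1.0) it reports a mismatch.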

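1.3 Solution

Run the following commands in turn on the master node and on every worker node so that the flannel network plugin sets up its network again: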

    # Take down and delete the stale bridge and VXLAN interfaces
    ifconfig cni0 down
    ip link delete cni0
    ifconfig flannel.1 down
    ip link delete flannel.1
    # Clear cached CNI state and the CNI configuration
    rm -rf /var/lib/cni/flannel/*
    rm -rf /var/lib/cni/networks/cbr0/*
    rm -rf /var/lib/cni/cache/*
    rm -f /etc/cni/net.d/*
    # Restart kubelet and docker, then make the docker socket writable again
    systemctl restart kubelet
    systemctl restart docker
    chmod a+w /var/run/docker.sock

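After running them, check ifconfig again: the cni0 and flannel.1 interfaces disappear at first, then are recreated automatically after a short while.

On worker1, the cni0 subnet now matches the flannel.1 subnet. worker2 looks similar.

Check the status of the network plugin pods again: all of them are Running.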
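One way to check the pod status from the command line is sketched below. It assumes the column layout of `kubectl get pods -A --no-headers` (NAMESPACE, NAME, READY, STATUS, ...) and that the flannel pods have "flannel" in their names; the function name `flannel_not_running` is a hypothetical helper.

```shell
#!/usr/bin/env bash

# Hypothetical helper: read `kubectl get pods -A --no-headers` output on stdin
# and print "<pod-name> <status>" for every flannel pod that is not Running.
# Column 2 is the pod name and column 4 is STATUS in that layout.
flannel_not_running() {
  awk '$2 ~ /flannel/ && $4 != "Running" { print $2 " " $4 }'
}
```

Usage: `kubectl get pods -A --no-headers | flannel_not_running`. Empty output means every flannel pod reports Running.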

II. Regression test

Finally, trigger an xxl-job job once as a test: it executes successfully, and the backend logs look normal.
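Before triggering a job, a quick TCP probe from the node or pod that runs xxl-job-admin can show whether the executor address is reachable at all. This is only a sketch: `tcp_open` is a hypothetical helper built on bash's `/dev/tcp` pseudo-device and the coreutils `timeout` command, and the executor IP and port have to be taken from your own xxl-job executor registration (9999 in the usage line is the xxl-job default executor port, used here as an assumption).

```shell
#!/usr/bin/env bash

# Hypothetical helper: succeed if a TCP connection to host:port can be opened
# within 3 seconds. Uses bash's /dev/tcp redirection inside a child bash,
# so a refused or timed-out connection only fails the child process.
tcp_open() {
  timeout 3 bash -c 'exec 3<>"/dev/tcp/$0/$1"' "$1" "$2" 2>/dev/null
}
```

Usage, with a placeholder executor pod IP: `tcp_open 10.244.1.23 9999 && echo reachable || echo "connection refused or timed out"`.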


- Original article: https://blog.csdn.net/wdy_2099/article/details/134008547