
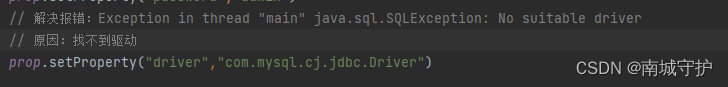

Exception in thread "main" java.sql.SQLException: No suitable driver

The full error message is as follows:

Exception in thread "main" java.sql.SQLException: No suitable driver

at java.sql.DriverManager.getDriver(DriverManager.java:315)

at org.apache.spark.sql.execution.datasources.jdbc.JDBCOptions.$anonfun$driverClass$2(JDBCOptions.scala:107)

at scala.Option.getOrElse(Option.scala:189)

at org.apache.spark.sql.execution.datasources.jdbc.JDBCOptions.<init>(JDBCOptions.scala:107)

at org.apache.spark.sql.execution.datasources.jdbc.JdbcOptionsInWrite.<init>(JDBCOptions.scala:218)

at org.apache.spark.sql.execution.datasources.jdbc.JdbcOptionsInWrite.<init>(JDBCOptions.scala:222)

at org.apache.spark.sql.execution.datasources.jdbc.JdbcRelationProvider.createRelation(JdbcRelationProvider.scala:46)

at org.apache.spark.sql.execution.datasources.SaveIntoDataSourceCommand.run(SaveIntoDataSourceCommand.scala:45)

at org.apache.spark.sql.execution.command.ExecutedCommandExec.sideEffectResult$lzycompute(commands.scala:75)

at org.apache.spark.sql.execution.command.ExecutedCommandExec.sideEffectResult(commands.scala:73)

at org.apache.spark.sql.execution.command.ExecutedCommandExec.executeCollect(commands.scala:84)

at org.apache.spark.sql.execution.QueryExecution$$anonfun$eagerlyExecuteCommands$1.$anonfun$applyOrElse$1(QueryExecution.scala:110)

at org.apache.spark.sql.execution.SQLExecution$.$anonfun$withNewExecutionId$5(SQLExecution.scala:103)

at org.apache.spark.sql.execution.SQLExecution$.withSQLConfPropagated(SQLExecution.scala:163)

at org.apache.spark.sql.execution.SQLExecution$.$anonfun$withNewExecutionId$1(SQLExecution.scala:90)

at org.apache.spark.sql.SparkSession.withActive(SparkSession.scala:775)

at org.apache.spark.sql.execution.SQLExecution$.withNewExecutionId(SQLExecution.scala:64)

at org.apache.spark.sql.execution.QueryExecution$$anonfun$eagerlyExecuteCommands$1.applyOrElse(QueryExecution.scala:110)

at org.apache.spark.sql.execution.QueryExecution$$anonfun$eagerlyExecuteCommands$1.applyOrElse(QueryExecution.scala:106)

at org.apache.spark.sql.catalyst.trees.TreeNode.$anonfun$transformDownWithPruning$1(TreeNode.scala:481)

at org.apache.spark.sql.catalyst.trees.CurrentOrigin$.withOrigin(TreeNode.scala:82)

at org.apache.spark.sql.catalyst.trees.TreeNode.transformDownWithPruning(TreeNode.scala:481)

at org.apache.spark.sql.catalyst.plans.logical.LogicalPlan.org$apache$spark$sql$catalyst$plans$logical$AnalysisHelper$$super$transformDownWithPruning(LogicalPlan.scala:30)

at org.apache.spark.sql.catalyst.plans.logical.AnalysisHelper.transformDownWithPruning(AnalysisHelper.scala:267)

at org.apache.spark.sql.catalyst.plans.logical.AnalysisHelper.transformDownWithPruning$(AnalysisHelper.scala:263)

at org.apache.spark.sql.catalyst.plans.logical.LogicalPlan.transformDownWithPruning(LogicalPlan.scala:30)

at org.apache.spark.sql.catalyst.plans.logical.LogicalPlan.transformDownWithPruning(LogicalPlan.scala:30)

at org.apache.spark.sql.catalyst.trees.TreeNode.transformDown(TreeNode.scala:457)

at org.apache.spark.sql.execution.QueryExecution.eagerlyExecuteCommands(QueryExecution.scala:106)

at org.apache.spark.sql.execution.QueryExecution.commandExecuted$lzycompute(QueryExecution.scala:93)

at org.apache.spark.sql.execution.QueryExecution.commandExecuted(QueryExecution.scala:91)

at org.apache.spark.sql.execution.QueryExecution.assertCommandExecuted(QueryExecution.scala:128)

at org.apache.spark.sql.DataFrameWriter.runCommand(DataFrameWriter.scala:848)

at org.apache.spark.sql.DataFrameWriter.saveToV1Source(DataFrameWriter.scala:382)

at org.apache.spark.sql.DataFrameWriter.saveInternal(DataFrameWriter.scala:355)

at org.apache.spark.sql.DataFrameWriter.save(DataFrameWriter.scala:247)

at org.apache.spark.sql.DataFrameWriter.jdbc(DataFrameWriter.scala:745)

at gs_1.gs_1_util.writeMySql(gs_1_util.scala:38)

at gs_1.task03$.test01(task03.scala:50)

at gs_1.task03$.main(task03.scala:61)

at gs_1.task03.main(task03.scala)
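Reading the trace bottom-up: `DataFrameWriter.jdbc` builds Spark's `JDBCOptions`, and the frame at `JDBCOptions.scala:107` sits inside `Option.getOrElse`. In Spark's source, that `getOrElse` is the fallback taken when no explicit `driver` option was supplied, so Spark asks `java.sql.DriverManager.getDriver(url)` for a driver, and `DriverManager` throws "No suitable driver" when no registered driver accepts the URL. The sketch below is a simplified re-creation of that decision using only the JDK, useful for checking a URL and `Properties` outside Spark. It is not Spark's own code; the object `DriverCheck`, the method `resolveDriverClass`, and the sample URL are hypothetical names chosen for illustration.

```scala
import java.sql.{DriverManager, SQLException}
import java.util.Properties

// Hypothetical helper: a simplified re-creation of the driver lookup that
// Spark's JDBCOptions performs (line 107 in the trace), not Spark's code.
object DriverCheck {

  /** Returns Right(driverClassName) on success, Left(errorMessage) on failure.
    * If the "driver" property is set, the class is loaded with Class.forName,
    * roughly what Spark does when a driver class is given explicitly.
    * Otherwise DriverManager is asked which registered driver accepts the URL.
    */
  def resolveDriverClass(url: String, props: Properties): Either[String, String] =
    Option(props.getProperty("driver")) match {
      case Some(cls) =>
        try {
          Class.forName(cls) // throws if the connector jar is not on the classpath
          Right(cls)
        } catch {
          case e: ClassNotFoundException => Left(s"driver class not found: ${e.getMessage}")
        }
      case None =>
        try Right(DriverManager.getDriver(url).getClass.getCanonicalName)
        catch {
          // DriverManager reports "No suitable driver" when no driver accepts the URL
          case e: SQLException => Left(e.getMessage)
        }
    }
}

// Usage (hypothetical URL):
//   DriverCheck.resolveDriverClass("jdbc:mysql://localhost:3306/test", new Properties())
// gives Left("No suitable driver") when no MySQL driver is registered on the classpath.
```

In the Spark job itself (the `writeMySql` helper in `gs_1_util.scala`, whose source is not shown here), the usual remedies are to put `driver` into the `Properties` passed to `DataFrameWriter.jdbc`, for example `props.setProperty("driver", "com.mysql.cj.jdbc.Driver")` for MySQL Connector/J 8.x (`com.mysql.jdbc.Driver` for 5.x); to check that the URL really starts with `jdbc:mysql://`; and to make sure the Connector/J jar is on the application's classpath.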


- Original article: https://blog.csdn.net/W2484980893/article/details/133351874