
Hadoop Distributed File System


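I. Hadoop

The father of Hadoop: Doug Cutting

Hadoop is pronounced [hædu:p]; the name comes from the nickname Cutting's son gave his toy elephant.

In the narrow sense, Hadoop is an open-source software project of the Apache Software Foundation, written in Java. It lets users process massive amounts of data with distributed computation across a cluster of machines, using a simple programming model.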

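Hadoop core components

Hadoop HDFS (distributed file storage system): stores massive amounts of data

Hadoop YARN (cluster resource management and job scheduling framework): schedules resources and jobs

Hadoop MapReduce (distributed computing framework): computes over massive amounts of data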
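The "simple programming model" can be illustrated with the classic word-count example. The following is a minimal single-process Python sketch of the map, shuffle (group by key) and reduce phases; it only imitates MapReduce's data flow on one machine, does not use Hadoop's Java API, and the function names are illustrative.

```python
from collections import defaultdict
from typing import Dict, Iterable, Iterator, List, Tuple


def map_phase(line: str) -> Iterator[Tuple[str, int]]:
    """Map: emit (word, 1) for every word in one input line."""
    for word in line.split():
        yield word, 1


def shuffle(pairs: Iterable[Tuple[str, int]]) -> Dict[str, List[int]]:
    """Shuffle: group all values emitted for the same key."""
    groups: Dict[str, List[int]] = defaultdict(list)
    for key, value in pairs:
        groups[key].append(value)
    return groups


def reduce_phase(key: str, values: List[int]) -> Tuple[str, int]:
    """Reduce: aggregate the grouped values of one key."""
    return key, sum(values)


def word_count(lines: Iterable[str]) -> Dict[str, int]:
    """Run map -> shuffle -> reduce over all input lines."""
    mapped = (pair for line in lines for pair in map_phase(line))
    return dict(reduce_phase(k, v) for k, v in shuffle(mapped).items())


if __name__ == "__main__":
    print(word_count(["hello hadoop", "hello hdfs"]))
```

In a real cluster the map and reduce functions run as tasks on many nodes and the framework performs the shuffle over the network; the sketch keeps only the shape of the computation.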

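In the broad sense, Hadoop refers to the big-data ecosystem built around Hadoop.

1. Hadoop overview

Hadoop originated from Nutch, a subproject of Apache Lucene.

Nutch was designed to build a large-scale, whole-web search engine. It hit a bottleneck: how to store and index billions of web pages.

Google's three papers

1. "The Google File System": Google's distributed file system, GFS

2. "MapReduce: Simplified Data Processing on Large Clusters": Google's distributed computing framework, MapReduce

3. "Bigtable: A Distributed Storage System for Structured Data": Google's structured data storage system

Current state of Hadoop

1. HDFS, the distributed file storage system, sits at the bottom and at the core of the ecosystem.

2. YARN, a general-purpose distributed cluster resource management and job scheduling platform, supports many different compute engines, which secures Hadoop's position.

3. MapReduce was the first-generation distributed compute engine of the big-data ecosystem. Because of drawbacks inherent in its model design, companies now rarely write MapReduce programs directly, but many tools still use the MapReduce engine underneath to process data.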

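1.1 A brief history of Hadoop

- October 2002: Doug Cutting and Mike Cafarella created Nutch, an open-source web crawler project.

- October 2003: Google published the Google File System paper.

- July 2004: Doug Cutting and Mike Cafarella implemented GFS-like functionality in Nutch, the predecessor of HDFS.

- October 2004: Google published the MapReduce paper.

- February 2005: Mike Cafarella implemented the first version of MapReduce in Nutch.

- December 2005: Nutch was ported to the new framework and ran stably on 20 nodes using MapReduce and HDFS.

- January 2006: Doug Cutting joined Yahoo!, which provided a dedicated team and resources to develop Hadoop into a system that could run at web scale.

- February 2006: The Apache Hadoop project was officially launched to support the independent development of MapReduce and HDFS.

- March 2006: Yahoo! built its first Hadoop cluster for development.

- April 2006: The first Apache Hadoop release was published.

- November 2006: Google published the Bigtable paper, which inspired the creation of HBase.

- October 2007: The first Hadoop user group meeting was held, and community contributions began to rise sharply.

- 2007: Baidu started using Hadoop for offline processing.

- 2007: China Mobile started using Hadoop in its "Big Cloud" research.

- 2008: Taobao began developing Yunti, a Hadoop-based system, and used it to process e-commerce data.

- January 2008: Hadoop became an Apache top-level project.

- February 2008: Yahoo! ran the world's largest Hadoop application, announcing that its search engine product was deployed on a Hadoop cluster with 10,000 cores.

- April 2008: Hadoop sorted a 1 TB benchmark dataset on 900 nodes in only 209 seconds, the fastest result in the world at the time.

- August 2008: Cloudera, the first commercial Hadoop company, was founded.

- October 2008: The research cluster was loading 10 TB of data per day.

- March 2009: Cloudera released CDH (Cloudera's Distribution including Apache Hadoop), the world's first Hadoop distribution, built entirely from open-source software.

- June 2009: The first edition of "Hadoop: The Definitive Guide" by Cloudera engineer Tom White was published; it later became known as the Hadoop bible.

- July 2009: The Hadoop Core project was renamed Hadoop Common.

- July 2009: MapReduce and the Hadoop Distributed File System (HDFS) became independent subprojects of Hadoop.

- August 2009: Hadoop creator Doug Cutting joined Cloudera as chief architect.

- October 2009: The first Hadoop World conference was held in New York.

- May 2010: IBM released InfoSphere BigInsights, Hadoop-based big-data analytics software, in basic and enterprise editions.

- March 2011: Apache Hadoop won the Media Guardian Innovation Award.

- March 2012: HDFS NameNode HA, a key feature for enterprise use, was added to the main Hadoop release.

- August 2012: YARN, another important enterprise feature, became a Hadoop subproject.

- February 2014: Spark became an Apache top-level project and gradually began replacing MapReduce as the compute engine of choice in the Hadoop ecosystem.

- December 2017: Hadoop 3.0.0 became generally available (GA).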

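1.2 Hadoop features and advantages

1. Scalability: Hadoop distributes data and computation across the available machines of a cluster, and the cluster can be scaled out easily and flexibly to thousands of nodes.

2. Low cost: a Hadoop cluster can be built from ordinary commodity machines to process big data, which keeps costs low. What matters is the capability of the cluster as a whole.

3. High efficiency: by processing data concurrently, Hadoop can move data between nodes dynamically and in parallel, which makes processing fast.

4. Reliability: Hadoop automatically maintains multiple replicas of the data and automatically redeploys computation tasks after a task fails, so its ability to store and process data can be trusted.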
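The reliability point rests on keeping several copies of each block on different machines. The following toy Python sketch assumes a replication factor of 3 (the HDFS default for `dfs.replication`) and uses a simple round-robin placement, which is NOT HDFS's real rack-aware placement policy; it only shows why losing one node does not lose data.

```python
from typing import Dict, List, Set


def place_replicas(blocks: List[str], nodes: List[str],
                   replication: int = 3) -> Dict[str, List[str]]:
    """Assign each block to `replication` distinct nodes (toy round-robin policy)."""
    if replication > len(nodes):
        raise ValueError("replication factor exceeds the number of nodes")
    placement: Dict[str, List[str]] = {}
    for i, block in enumerate(blocks):
        # consecutive nodes starting at a block-dependent offset, so replicas never collide
        placement[block] = [nodes[(i + r) % len(nodes)] for r in range(replication)]
    return placement


def surviving_blocks(placement: Dict[str, List[str]], failed: Set[str]) -> Set[str]:
    """Blocks that still have at least one replica on a live node."""
    return {block for block, holders in placement.items()
            if any(node not in failed for node in holders)}


if __name__ == "__main__":
    layout = place_replicas(["blk_1", "blk_2", "blk_3"], ["node1", "node2", "node3"])
    print(layout)
    print(surviving_blocks(layout, failed={"node2"}))
```

With three replicas on three nodes, any single node can fail and every block remains readable; re-replicating the lost copies is what HDFS then does automatically.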

Why Hadoop succeeded: versatility

Why Hadoop succeeded: simplicity

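1.3 Hadoop use cases

Yahoo: advertising systems, user behavior analysis, anti-spam systems

Facebook: storage and processing for data mining and log statistics; a Hadoop-based data warehouse platform (Hive originated at Facebook)

IBM: building the Blue Cloud infrastructure

Baidu: data analysis and mining, paid search ranking

Alibaba: transaction data, credit analysis

Tencent: user relationship data

Huawei: in-depth research on Hadoop HA solutions and on HBase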

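1.4 Hadoop distributions

Open-source community edition: released by the Apache open-source community; this is the official release.

Pros: fast iteration. Cons: compatibility and stability are not always ensured.

Commercial distributions: released by commercial companies, based on the Apache open-source license; some services are paid.

Pros: stable, good compatibility. Cons: paid, slower version updates.

Apache open-source community edition

Commercial distributions

Cloudera: Apache Hadoop open source ecosystem | Cloudera

Hortonworks: Hortonworks Data Platform | Cloudera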

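1.5 Evolution of the Hadoop architecture (1.0 to 3.0)

Hadoop 1.0

HDFS (distributed file storage)

MapReduce (resource management and distributed data processing)

Hadoop 2.0

HDFS (distributed file storage)

MapReduce (distributed data processing)

YARN (cluster resource management and job scheduling)

Hadoop 3.0

The architectural components of Hadoop 3.0 are similar to those of Hadoop 2.0; 3.0 focuses on performance optimization.

Common: streamlined kernel, classpath isolation, rewritten shell scripts

Hadoop HDFS: EC (erasure coding), support for multiple NameNodes

Hadoop MapReduce: task-level native optimization, automatic inference of memory parameters

Hadoop YARN: Timeline Service v2, queue configuration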
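The main gain from erasure coding in HDFS is storage efficiency. As a worked comparison, the following Python sketch assumes the built-in `RS-6-3-1024k` policy (6 data units plus 3 parity units) and compares its extra storage with the default 3-way replication.

```python
from fractions import Fraction


def replication_overhead(replicas: int) -> Fraction:
    """Extra storage relative to the raw data size for N-way replication."""
    return Fraction(replicas - 1)


def ec_overhead(data_units: int, parity_units: int) -> Fraction:
    """Extra storage relative to the raw data size for Reed-Solomon(data, parity)."""
    return Fraction(parity_units, data_units)


if __name__ == "__main__":
    # 3 replicas survive the loss of 2 copies; RS(6,3) survives the loss of any 3 of its 9 units.
    print(f"3x replication: {float(replication_overhead(3)):.0%} extra storage")
    print(f"RS-6-3: {float(ec_overhead(6, 3)):.0%} extra storage")
```

This prints 200% for replication versus 50% for RS-6-3, while RS-6-3 tolerates more simultaneous failures; the trade-off is extra CPU and network cost for encoding and reconstruction.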

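2. Hadoop installation and deployment

2.1 Hadoop cluster overview

A Hadoop cluster consists of two clusters: the HDFS cluster and the YARN cluster.

The two clusters are logically separate but usually physically co-located.

Both are standard master/worker clusters.

HDFS cluster (distributed storage)

Master role: NameNode

Worker role: DataNode

Auxiliary role for the master: SecondaryNameNode

YARN cluster (resource management and scheduling)

Master role: ResourceManager

Worker role: NodeManager

Logically separate: the two clusters do not depend on each other and do not affect each other.

Physically co-located: some of their role processes are usually deployed on the same physical servers.

What about a MapReduce cluster? MapReduce is a computing framework, a code-level component, so there is no such thing as a MapReduce cluster.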

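2.2 Hadoop cluster-mode installation

Download from the official website

hadoop-3.3.6-src.tar.gz: source package

hadoop-3.3.6.tar.gz: officially compiled binary package

Why recompile the Hadoop source?

To match the native-library environment of different operating systems: some Hadoop operations, such as compression and I/O, call native system libraries (*.so | *.dll). Recompiling is also needed after modifying or refactoring the source code.

How to compile Hadoop?

See BUILDING.txt in the root directory of the source package.

2.2.1 Cluster role planning:

Roles that compete for the same resources should preferably not be deployed together.

Roles that need to work together should preferably be deployed together.

The NameNode depends on memory, so it should be deployed on a machine with plenty of RAM.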

| Server | Roles |
| --- | --- |
| node1 | namenode, datanode, resourcemanager, nodemanager |
| node2 | secondarynamenode, datanode, nodemanager |
| node3 | datanode, nodemanager |

2.2.2 Basic server environment preparation

1. Hostname (all 3 machines)

vi /etc/hostname

- [root@node1 ~]# cat /etc/hostname

- node1.lwz.cn

2. Hosts mapping (all 3 machines)

vi /etc/hosts

- [root@node1 ~]# cat /etc/hosts

- 127.0.0.1 localhost localhost.localdomain localhost4 localhost4.localdomain4

- ::1 localhost localhost.localdomain localhost6 localhost6.localdomain6

- 192.168.18.81 node1 node1.lwz.cn

- 192.168.18.82 node2 node2.lwz.cn

- 192.168.18.83 node3 node3.lwz.cn
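Every node must resolve the same names to the same addresses. The following Python sketch parses text in /etc/hosts format and checks it against the mapping above; the `EXPECTED` table simply restates the three cluster entries listed here and is an assumption about your network.

```python
from pathlib import Path
from typing import Dict

# Restates the three cluster entries from the /etc/hosts listing above.
EXPECTED = {
    "node1": "192.168.18.81",
    "node2": "192.168.18.82",
    "node3": "192.168.18.83",
}


def parse_hosts(text: str) -> Dict[str, str]:
    """Map every hostname and alias in /etc/hosts-style text to its IP address."""
    mapping: Dict[str, str] = {}
    for raw in text.splitlines():
        line = raw.split("#", 1)[0].strip()  # drop comments and surrounding whitespace
        if not line:
            continue
        ip, *names = line.split()
        for name in names:
            mapping.setdefault(name, ip)  # keep the first entry seen for each name
    return mapping


def missing_or_wrong(mapping: Dict[str, str],
                     expected: Dict[str, str] = EXPECTED) -> Dict[str, str]:
    """Expected hosts that are absent or point to a different IP."""
    return {host: ip for host, ip in expected.items() if mapping.get(host) != ip}


if __name__ == "__main__":
    hosts_file = Path("/etc/hosts")
    if hosts_file.exists():
        problems = missing_or_wrong(parse_hosts(hosts_file.read_text(encoding="utf-8")))
        print("hosts OK" if not problems else f"fix these entries: {problems}")
```

Running it on each of the three machines is a quick way to catch a node whose hosts file was not updated.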

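3. Disable the firewall

- systemctl stop firewalld.service #stop the firewall

- systemctl disable firewalld.service #prevent the firewall from starting at boot

4. Configure passwordless SSH login (run on node1, targeting node1, node2 and node3)

- ssh-keygen #press Enter 4 times to generate the public/private key pair

- ssh-copy-id node1

- ssh-copy-id node2

- ssh-copy-id node3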
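Once the keys are copied, logging in from node1 to each node should no longer prompt for a password. The following Python sketch performs that check, assuming the OpenSSH client and its `BatchMode=yes` option (which makes ssh fail instead of prompting); the node names are the ones used in this guide.

```python
import subprocess
from typing import List

NODES = ["node1", "node2", "node3"]


def ssh_check_command(host: str) -> List[str]:
    """argv for a non-interactive login that just prints the remote hostname."""
    # BatchMode=yes makes ssh fail instead of asking for a password.
    return ["ssh", "-o", "BatchMode=yes", "-o", "ConnectTimeout=5", host, "hostname"]


def passwordless_ok(host: str) -> bool:
    """True if `ssh host hostname` succeeds without any interactive prompt."""
    try:
        result = subprocess.run(ssh_check_command(host), capture_output=True,
                                text=True, timeout=15)
    except (FileNotFoundError, subprocess.TimeoutExpired):
        return False  # ssh client missing or host unreachable
    return result.returncode == 0
```

On node1, `for n in NODES: print(n, "OK" if passwordless_ok(n) else "re-run ssh-copy-id")` lists any node that still needs its key copied.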

5. Cluster time synchronization (all 3 machines)

- yum -y install ntpdate

- ntpdate ntp4.aliyun.com #synchronize the cluster time

- [root@node1 ~]# ntpdate ntp4.aliyun.com

- 25 Dec 23:36:11 ntpdate[9578]: adjust time server 203.107.6.88 offset 0.164550 sec

- [root@node1 ~]# date

- Mon Dec 25 23:37:34 CST 2023
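The ntpdate output above reports the clock offset it corrected. The following Python sketch extracts that offset from an ntpdate log line and flags drift above a threshold; the 0.5 s threshold is an arbitrary assumption for illustration, not a Hadoop requirement.

```python
import re
from typing import Optional

# Matches e.g. "... offset 0.164550 sec" in ntpdate output.
OFFSET_RE = re.compile(r"offset\s+(-?\d+(?:\.\d+)?)\s+sec")


def parse_offset(line: str) -> Optional[float]:
    """Return the offset in seconds reported by an ntpdate line, or None."""
    match = OFFSET_RE.search(line)
    return float(match.group(1)) if match else None


def drift_too_large(line: str, threshold_s: float = 0.5) -> bool:
    """True if the reported offset exceeds the (assumed) threshold."""
    offset = parse_offset(line)
    return offset is not None and abs(offset) > threshold_s
```

Applied to the line shown above, `parse_offset` returns 0.16455 seconds, which is within the assumed threshold.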

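6. Create unified working directories (all 3 machines)

- mkdir -p /export/server/ #software installation path

- mkdir -p /export/data/ #data storage path

- mkdir -p /export/software/ #installation package path

7. Install JDK 1.8

8. Upload and extract the Hadoop installation package

[root@node1 hadoop-3.3.6]# tar zxvf hadoop-3.3.6.tar.gz

2.2.3 Hadoop installation notes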

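Hadoop installation package directory layout

| Directory | Description |
| --- | --- |
| bin | The most basic Hadoop management and usage scripts. The management scripts in sbin are built on top of them, and users can also call them directly to manage and use Hadoop. |
| etc | Hadoop configuration files. |
| include | Header files for the programming libraries Hadoop exposes (the actual dynamic and static libraries are in lib). These are C/C++ headers, typically used by C/C++ programs to access HDFS or to write MapReduce programs. |
| lib | The dynamic and static programming libraries Hadoop exposes, used together with the headers in include. |
| libexec | Shell configuration files for each service, used to configure basics such as log output and startup parameters (for example JVM parameters). |
| sbin | Hadoop management scripts, mainly the start/stop scripts for the HDFS and YARN services. |
| share | Compiled jar packages of each Hadoop module, including the bundled official examples. |

Configuration file overview

Official documentation: Hadoop – Apache Hadoop 3.3.6

Category 1 (1 file): hadoop-env.sh

Category 2 (4 files): xxxx-site.xml. "site" denotes user-defined configuration, which overrides the defaults in the corresponding xxxx-default.xml.

core-site.xml: core module configuration

hdfs-site.xml: HDFS file system module configuration

mapred-site.xml: MapReduce module configuration

yarn-site.xml: YARN module configuration

Category 3 (1 file): workers

All configuration files are located in: /export/server/hadoop-3.3.6/etc/hadoop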
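The "site overrides default" rule can be pictured as a layered lookup: a value set in *-site.xml wins over the same key in *-default.xml. The following simplified Python sketch illustrates that precedence only; it ignores Hadoop details such as `final` properties and variable expansion, and the dictionaries are an illustrative subset (defaults from core-default.xml, overrides from the core-site.xml used in this guide).

```python
from typing import Dict

# Illustrative subset of core-default.xml values.
CORE_DEFAULT = {
    "fs.defaultFS": "file:///",
    "hadoop.tmp.dir": "/tmp/hadoop-${user.name}",
    "fs.trash.interval": "0",
}

# Overrides set by the core-site.xml in this guide.
CORE_SITE = {
    "fs.defaultFS": "hdfs://node1:8020",
    "hadoop.tmp.dir": "/export/data/hadoop-3.3.6",
    "fs.trash.interval": "1440",
}


def effective_config(default: Dict[str, str], site: Dict[str, str]) -> Dict[str, str]:
    """Start from the defaults, then let every site value replace its default."""
    merged = dict(default)
    merged.update(site)
    return merged
```

Any key that the site file does not mention keeps its default value, which is why the site files only need to list what you want to change.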

Edit the configuration files

hadoop-env.sh

- Append at the end of the file:

- export JAVA_HOME=/export/server/jdk1.8.0_202

- export HDFS_NAMENODE_USER=root

- export HDFS_DATANODE_USER=root

- export HDFS_SECONDARYNAMENODE_USER=root

- export YARN_RESOURCEMANAGER_USER=root

- export YARN_NODEMANAGER_USER=root

core-site.xml: copy the following between <configuration> and </configuration>

- <property>

- <name>fs.defaultFS</name>

- <value>hdfs://node1:8020</value>

- </property>

- <property>

- <name>hadoop.tmp.dir</name>

- <value>/export/data/hadoop-3.3.6</value>

- </property>

- <property>

- <name>hadoop.http.staticuser.user</name>

- <value>root</value>

- </property>

- <property>

- <name>hadoop.proxyuser.root.hosts</name>

- <value>*</value>

- </property>

- <property>

- <name>hadoop.proxyuser.root.groups</name>

- <value>*</value>

- </property>

- <property>

- <name>fs.trash.interval</name>

- <value>1440</value>

- </property>
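Typing the property blocks by hand is error-prone. The following Python sketch renders a Hadoop-style configuration file from a dict with the standard `xml.etree.ElementTree` module; the helper name `render_site_xml` is hypothetical, and the values restate the core-site.xml properties above.

```python
import xml.etree.ElementTree as ET
from typing import Dict

CORE_SITE = {
    "fs.defaultFS": "hdfs://node1:8020",
    "hadoop.tmp.dir": "/export/data/hadoop-3.3.6",
    "hadoop.http.staticuser.user": "root",
    "hadoop.proxyuser.root.hosts": "*",
    "hadoop.proxyuser.root.groups": "*",
    "fs.trash.interval": "1440",  # minutes, i.e. 24 hours
}


def render_site_xml(props: Dict[str, str]) -> str:
    """Build <configuration><property><name/><value/></property>...</configuration>."""
    root = ET.Element("configuration")
    for name, value in props.items():
        prop = ET.SubElement(root, "property")
        ET.SubElement(prop, "name").text = name
        ET.SubElement(prop, "value").text = value
    if hasattr(ET, "indent"):  # pretty-printing is available on Python 3.9+
        ET.indent(root)
    body = ET.tostring(root, encoding="unicode")
    return '<?xml version="1.0" encoding="UTF-8"?>\n' + body


if __name__ == "__main__":
    print(render_site_xml(CORE_SITE))
```

Generating the file this way guarantees every `<name>` and `<value>` tag is properly closed.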

hdfs-site.xml: copy the following between <configuration> and </configuration>

- <property>

- <name>dfs.namenode.secondary.http-address</name>

- <value>node2:9868</value>

- </property>
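Conversely, reading a *-site.xml back into a dictionary is a quick way to double-check what a node actually has on disk, for example that hdfs-site.xml points the SecondaryNameNode at node2:9868. The following sketch uses only the standard library; `parse_site_xml` is a hypothetical helper name.

```python
import xml.etree.ElementTree as ET
from typing import Dict, Union


def parse_site_xml(xml_data: Union[str, bytes]) -> Dict[str, str]:
    """Return {name: value} for every <property> in a Hadoop *-site.xml document."""
    root = ET.fromstring(xml_data)
    props: Dict[str, str] = {}
    for prop in root.iter("property"):
        name = (prop.findtext("name") or "").strip()
        if name:  # skip malformed properties without a name
            props[name] = (prop.findtext("value") or "").strip()
    return props


# The hdfs-site.xml property configured above, inlined for illustration.
HDFS_SITE = """<configuration>
  <property>
    <name>dfs.namenode.secondary.http-address</name>
    <value>node2:9868</value>
  </property>
</configuration>"""

if __name__ == "__main__":
    print(parse_site_xml(HDFS_SITE))
```

On a node, pass `Path("/export/server/hadoop-3.3.6/etc/hadoop/hdfs-site.xml").read_bytes()` instead of the inline string; reading bytes lets the parser honor the file's XML declaration.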

mapred-site.xml: copy the following between <configuration> and </configuration>

- <property>

- <name>mapreduce.framework.name</name>

- <value>yarn</value>

- </property>

- <property>

- <name>mapreduce.jobhistory.address</name>

- <value>node1:10020</value>

- </property>

- <property>

- <name>mapreduce.jobhistory.webapp.address</name>

- <value>node1:19888</value>

- </property>

- <property>

- <name>yarn.app.mapreduce.am.env</name>

- <value>HADOOP_MAPRED_HOME=${HADOOP_HOME}</value>

- </property>

- <property>

- <name>mapreduce.map.env</name>

- <value>HADOOP_MAPRED_HOME=${HADOOP_HOME}</value>

- </property>

- <property>

- <name>mapreduce.reduce.env</name>

- <value>HADOOP_MAPRED_HOME=${HADOOP_HOME}</value>

- </property>

yarn-site.xml: copy the following between <configuration> and </configuration>

- <property>

- <name>yarn.resourcemanager.hostname</name>

- <value>node1</value>

- </property>

- <property>

- <name>yarn.nodemanager.aux-services</name>

- <value>mapreduce_shuffle</value>

- </property>

- <property>

- <name>yarn.nodemanager.pmem-check-enabled</name>

- <value>false</value>

- </property>

- <property>

- <name>yarn.nodemanager.vmem-check-enabled</name>

- <value>false</value>

- </property>

- <property>

- <name>yarn.log-aggregation-enable</name>

- <value>true</value>

- </property>

- <property>

- <name>yarn.log.server.url</name>

- <value>http://node1:19888/jobhistory/logs</value>

- </property>

- <property>

- <name>yarn.log-aggregation.retain-seconds</name>

- <value>604800</value>

- </property>
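Several values in these files are durations in different units: `fs.trash.interval` in core-site.xml is in minutes, while `yarn.log-aggregation.retain-seconds` is in seconds. A quick Python check of what the chosen numbers mean:

```python
from datetime import timedelta

TRASH_INTERVAL_MIN = 1440      # fs.trash.interval (minutes)
LOG_RETAIN_SECONDS = 604800    # yarn.log-aggregation.retain-seconds (seconds)

trash_keep = timedelta(minutes=TRASH_INTERVAL_MIN)
log_keep = timedelta(seconds=LOG_RETAIN_SECONDS)

if __name__ == "__main__":
    print(f"Deleted files stay in the HDFS trash for {trash_keep.days} day(s)")
    print(f"Aggregated YARN logs are kept for {log_keep.days} day(s)")
```

So deleted files can be recovered from the trash for one day, and aggregated application logs are kept for seven days.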

workers: delete the original localhost entry, then paste the following and save

- node1.lwz.cn

- node2.lwz.cn

- node3.lwz.cn
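The workers file is simply one hostname per line; in Hadoop 3.x the start scripts read it to decide where to start DataNodes and NodeManagers. The following Python sketch (re)writes it from a list, assuming the Hadoop home used in this guide; `render_workers` and `write_workers` are hypothetical helper names.

```python
from pathlib import Path
from typing import Iterable

WORKERS = ["node1.lwz.cn", "node2.lwz.cn", "node3.lwz.cn"]


def render_workers(hosts: Iterable[str]) -> str:
    """One host per line, blank entries dropped, trailing newline."""
    cleaned = [h.strip() for h in hosts if h.strip()]
    if len(set(cleaned)) != len(cleaned):
        raise ValueError("duplicate host in workers list")
    return "\n".join(cleaned) + "\n"


def write_workers(conf_dir: Path, hosts: Iterable[str]) -> Path:
    """Overwrite <conf_dir>/workers, replacing the default 'localhost' entry."""
    target = conf_dir / "workers"
    target.write_text(render_workers(hosts), encoding="utf-8")
    return target
```

On node1, `write_workers(Path("/export/server/hadoop-3.3.6/etc/hadoop"), WORKERS)` produces the same file as the manual edit, before the installation directory is copied to the other nodes.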

Distribute the Hadoop installation directory to the other nodes

- cd /export/server

- scp -r hadoop-3.3.6 root@node2:$PWD

- scp -r hadoop-3.3.6 root@node3:$PWD

Add Hadoop to the environment variables (on all 3 machines)

- vi /etc/profile

- export HADOOP_HOME=/export/server/hadoop-3.3.6

- export PATH=$PATH:$HADOOP_HOME/bin:$HADOOP_HOME/sbin

- source /etc/profile
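After `source /etc/profile`, the Hadoop bin and sbin directories should be on PATH. The following Python sketch checks this for a given environment mapping; it only inspects the variables and does not run any Hadoop command.

```python
import os
from typing import List, Mapping


def missing_hadoop_dirs(env: Mapping[str, str]) -> List[str]:
    """Return the $HADOOP_HOME/bin and $HADOOP_HOME/sbin entries absent from PATH."""
    home = env.get("HADOOP_HOME")
    if not home:
        return ["HADOOP_HOME is not set"]
    entries = {os.path.normpath(p) for p in env.get("PATH", "").split(os.pathsep) if p}
    wanted = [os.path.join(home, "bin"), os.path.join(home, "sbin")]
    return [d for d in wanted if os.path.normpath(d) not in entries]


if __name__ == "__main__":
    print(missing_hadoop_dirs(os.environ) or "Hadoop bin/sbin are on PATH")
```

An empty result means commands such as `hdfs` and the start/stop scripts in sbin can be called from any directory.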

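Starting the Hadoop cluster

(First start only) Initialize the NameNode

Before HDFS is started for the first time, it must be formatted.

Formatting is essentially initialization: it cleans up and prepares HDFS, and it must be run only once, before the cluster is started, on the master machine.

Formatting more than once not only loses data but also causes the HDFS master and worker roles to stop recognizing each other. To recover, delete the hadoop.tmp.dir directory on all machines and format again.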
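The "roles stop recognizing each other" symptom comes from a clusterID mismatch: each format writes a new clusterID into the NameNode's VERSION file, while DataNodes keep the old one in their own VERSION file. The following Python sketch compares the two, assuming the default storage layout under hadoop.tmp.dir (dfs/name/current/VERSION for the NameNode, dfs/data/current/VERSION for a DataNode) with hadoop.tmp.dir=/export/data/hadoop-3.3.6 as configured above. It is meant for node1, which hosts both roles in this plan.

```python
from pathlib import Path
from typing import Dict, Optional

TMP_DIR = Path("/export/data/hadoop-3.3.6")           # hadoop.tmp.dir from core-site.xml
NN_VERSION = TMP_DIR / "dfs/name/current/VERSION"     # assumed default NameNode layout
DN_VERSION = TMP_DIR / "dfs/data/current/VERSION"     # assumed default DataNode layout


def read_version(text: str) -> Dict[str, str]:
    """Parse the key=value lines of a VERSION file, skipping '#' comments."""
    props: Dict[str, str] = {}
    for line in text.splitlines():
        line = line.strip()
        if line and not line.startswith("#") and "=" in line:
            key, value = line.split("=", 1)
            props[key.strip()] = value.strip()
    return props


def cluster_ids_match(nn_text: str, dn_text: str) -> bool:
    """True if both VERSION files carry the same, non-empty clusterID."""
    nn_id: Optional[str] = read_version(nn_text).get("clusterID")
    dn_id: Optional[str] = read_version(dn_text).get("clusterID")
    return nn_id is not None and nn_id == dn_id


if __name__ == "__main__":
    if NN_VERSION.exists() and DN_VERSION.exists():
        same = cluster_ids_match(NN_VERSION.read_text(), DN_VERSION.read_text())
        print("clusterID OK" if same else "clusterID mismatch: NameNode was re-formatted")
```

A mismatch is the signal that the recovery described above (clearing hadoop.tmp.dir everywhere and formatting once) is needed.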

hdfs namenode -format

- [root@node1 hadoop]# hdfs namenode -format

- 2023-12-27 00:24:35,400 INFO namenode.NameNode: STARTUP_MSG:

- /************************************************************

- STARTUP_MSG: Starting NameNode

- STARTUP_MSG: host = node1/192.168.18.81

- STARTUP_MSG: args = [-format]

- STARTUP_MSG: version = 3.3.6

- STARTUP_MSG: classpath = /export/server/hadoop-3.3.6/etc/hadoop:/export/server/hadoop-3.3.6/share/hadoop/common/lib/netty-resolver-dns-native-macos-4.1.89.Final-osx-aarch_64.jar:/export/server/hadoop-3.3.6/share/hadoop/common/lib/netty-codec-socks-4.1.89.Final.jar:/export/server/hadoop-3.3.6/share/hadoop/common/lib/kerb-identity-1.0.1.jar:/export/server/hadoop-3.3.6/share/hadoop/common/lib/kerby-asn1-1.0.1.jar:/export/server/hadoop-3.3.6/share/hadoop/common/lib/commons-math3-3.1.1.jar:/export/server/hadoop-3.3.6/share/hadoop/common/lib/jetty-xml-9.4.51.v20230217.jar:/export/server/hadoop-3.3.6/share/hadoop/common/lib/kerb-crypto-1.0.1.jar:/export/server/hadoop-3.3.6/share/hadoop/common/lib/netty-codec-xml-4.1.89.Final.jar:/export/server/hadoop-3.3.6/share/hadoop/common/lib/netty-transport-sctp-4.1.89.Final.jar:/export/server/hadoop-3.3.6/share/hadoop/common/lib/commons-collections-3.2.2.jar:/export/server/hadoop-3.3.6/share/hadoop/common/lib/jsp-api-2.1.jar:/export/server/hadoop-3.3.6/share/hadoop/common/lib/commons-codec-1.15.jar:/export/server/hadoop-3.3.6/share/hadoop/common/lib/jackson-mapper-asl-1.9.13.jar:/export/server/hadoop-3.3.6/share/hadoop/common/lib/reload4j-1.2.22.jar:/export/server/hadoop-3.3.6/share/hadoop/common/lib/jersey-server-1.19.4.jar:/export/server/hadoop-3.3.6/share/hadoop/common/lib/jsch-0.1.55.jar:/export/server/hadoop-3.3.6/share/hadoop/common/lib/kerby-util-1.0.1.jar:/export/server/hadoop-3.3.6/share/hadoop/common/lib/commons-io-2.8.0.jar:/export/server/hadoop-3.3.6/share/hadoop/common/lib/token-provider-1.0.1.jar:/export/server/hadoop-3.3.6/share/hadoop/common/lib/stax2-api-4.2.1.jar:/export/server/hadoop-3.3.6/share/hadoop/common/lib/netty-codec-dns-4.1.89.Final.jar:/export/server/hadoop-3.3.6/share/hadoop/common/lib/avro-1.7.7.jar:/export/server/hadoop-3.3.6/share/hadoop/common/lib/commons-text-1.10.0.jar:/export/server/hadoop-3.3.6/share/hadoop/common/lib/netty-transport-native-kqueue-4.1.89.Final-osx-aarch_64.jar:/export/server/ha
doop-3.3.6/share/hadoop/common/lib/hadoop-auth-3.3.6.jar:/export/server/hadoop-3.3.6/share/hadoop/common/lib/kerby-config-1.0.1.jar:/export/server/hadoop-3.3.6/share/hadoop/common/lib/j2objc-annotations-1.1.jar:/export/server/hadoop-3.3.6/share/hadoop/common/lib/commons-lang3-3.12.0.jar:/export/server/hadoop-3.3.6/share/hadoop/common/lib/jetty-server-9.4.51.v20230217.jar:/export/server/hadoop-3.3.6/share/hadoop/common/lib/jersey-servlet-1.19.4.jar:/export/server/hadoop-3.3.6/share/hadoop/common/lib/slf4j-reload4j-1.7.36.jar:/export/server/hadoop-3.3.6/share/hadoop/common/lib/kerb-common-1.0.1.jar:/export/server/hadoop-3.3.6/share/hadoop/common/lib/hadoop-shaded-protobuf_3_7-1.1.1.jar:/export/server/hadoop-3.3.6/share/hadoop/common/lib/guava-27.0-jre.jar:/export/server/hadoop-3.3.6/share/hadoop/common/lib/netty-transport-rxtx-4.1.89.Final.jar:/export/server/hadoop-3.3.6/share/hadoop/common/lib/jersey-core-1.19.4.jar:/export/server/hadoop-3.3.6/share/hadoop/common/lib/commons-logging-1.1.3.jar:/export/server/hadoop-3.3.6/share/hadoop/common/lib/curator-recipes-5.2.0.jar:/export/server/hadoop-3.3.6/share/hadoop/common/lib/netty-resolver-dns-classes-macos-4.1.89.Final.jar:/export/server/hadoop-3.3.6/share/hadoop/common/lib/netty-handler-ssl-ocsp-4.1.89.Final.jar:/export/server/hadoop-3.3.6/share/hadoop/common/lib/netty-transport-native-epoll-4.1.89.Final-linux-aarch_64.jar:/export/server/hadoop-3.3.6/share/hadoop/common/lib/curator-framework-5.2.0.jar:/export/server/hadoop-3.3.6/share/hadoop/common/lib/jul-to-slf4j-1.7.36.jar:/export/server/hadoop-3.3.6/share/hadoop/common/lib/netty-codec-http-4.1.89.Final.jar:/export/server/hadoop-3.3.6/share/hadoop/common/lib/netty-codec-http2-4.1.89.Final.jar:/export/server/hadoop-3.3.6/share/hadoop/common/lib/netty-codec-haproxy-4.1.89.Final.jar:/export/server/hadoop-3.3.6/share/hadoop/common/lib/netty-transport-4.1.89.Final.jar:/export/server/hadoop-3.3.6/share/hadoop/common/lib/checker-qual-2.5.2.jar:/export/server/hadoop-3.3.6/sh
are/hadoop/common/lib/jsr305-3.0.2.jar:/export/server/hadoop-3.3.6/share/hadoop/common/lib/kerb-server-1.0.1.jar:/export/server/hadoop-3.3.6/share/hadoop/common/lib/jetty-util-ajax-9.4.51.v20230217.jar:/export/server/hadoop-3.3.6/share/hadoop/common/lib/javax.servlet-api-3.1.0.jar:/export/server/hadoop-3.3.6/share/hadoop/common/lib/jaxb-impl-2.2.3-1.jar:/export/server/hadoop-3.3.6/share/hadoop/common/lib/kerby-pkix-1.0.1.jar:/export/server/hadoop-3.3.6/share/hadoop/common/lib/kerb-client-1.0.1.jar:/export/server/hadoop-3.3.6/share/hadoop/common/lib/slf4j-api-1.7.36.jar:/export/server/hadoop-3.3.6/share/hadoop/common/lib/netty-handler-4.1.89.Final.jar:/export/server/hadoop-3.3.6/share/hadoop/common/lib/zookeeper-3.6.3.jar:/export/server/hadoop-3.3.6/share/hadoop/common/lib/hadoop-shaded-guava-1.1.1.jar:/export/server/hadoop-3.3.6/share/hadoop/common/lib/commons-beanutils-1.9.4.jar:/export/server/hadoop-3.3.6/share/hadoop/common/lib/jetty-util-9.4.51.v20230217.jar:/export/server/hadoop-3.3.6/share/hadoop/common/lib/commons-configuration2-2.8.0.jar:/export/server/hadoop-3.3.6/share/hadoop/common/lib/jersey-json-1.20.jar:/export/server/hadoop-3.3.6/share/hadoop/common/lib/listenablefuture-9999.0-empty-to-avoid-conflict-with-guava.jar:/export/server/hadoop-3.3.6/share/hadoop/common/lib/netty-transport-udt-4.1.89.Final.jar:/export/server/hadoop-3.3.6/share/hadoop/common/lib/audience-annotations-0.5.0.jar:/export/server/hadoop-3.3.6/share/hadoop/common/lib/commons-compress-1.21.jar:/export/server/hadoop-3.3.6/share/hadoop/common/lib/netty-codec-4.1.89.Final.jar:/export/server/hadoop-3.3.6/share/hadoop/common/lib/netty-codec-memcache-4.1.89.Final.jar:/export/server/hadoop-3.3.6/share/hadoop/common/lib/jaxb-api-2.2.11.jar:/export/server/hadoop-3.3.6/share/hadoop/common/lib/commons-cli-1.2.jar:/export/server/hadoop-3.3.6/share/hadoop/common/lib/jettison-1.5.4.jar:/export/server/hadoop-3.3.6/share/hadoop/common/lib/netty-codec-smtp-4.1.89.Final.jar:/export/server/hadoop-3.3.6/
share/hadoop/common/lib/netty-transport-native-epoll-4.1.89.Final-linux-x86_64.jar:/export/server/hadoop-3.3.6/share/hadoop/common/lib/jetty-http-9.4.51.v20230217.jar:/export/server/hadoop-3.3.6/share/hadoop/common/lib/netty-common-4.1.89.Final.jar:/export/server/hadoop-3.3.6/share/hadoop/common/lib/commons-daemon-1.0.13.jar:/export/server/hadoop-3.3.6/share/hadoop/common/lib/httpcore-4.4.13.jar:/export/server/hadoop-3.3.6/share/hadoop/common/lib/failureaccess-1.0.jar:/export/server/hadoop-3.3.6/share/hadoop/common/lib/jcip-annotations-1.0-1.jar:/export/server/hadoop-3.3.6/share/hadoop/common/lib/jakarta.activation-api-1.2.1.jar:/export/server/hadoop-3.3.6/share/hadoop/common/lib/netty-codec-stomp-4.1.89.Final.jar:/export/server/hadoop-3.3.6/share/hadoop/common/lib/netty-all-4.1.89.Final.jar:/export/server/hadoop-3.3.6/share/hadoop/common/lib/protobuf-java-2.5.0.jar:/export/server/hadoop-3.3.6/share/hadoop/common/lib/metrics-core-3.2.4.jar:/export/server/hadoop-3.3.6/share/hadoop/common/lib/netty-transport-native-unix-common-4.1.89.Final.jar:/export/server/hadoop-3.3.6/share/hadoop/common/lib/netty-resolver-dns-native-macos-4.1.89.Final-osx-x86_64.jar:/export/server/hadoop-3.3.6/share/hadoop/common/lib/jackson-core-2.12.7.jar:/export/server/hadoop-3.3.6/share/hadoop/common/lib/jsr311-api-1.1.1.jar:/export/server/hadoop-3.3.6/share/hadoop/common/lib/jetty-servlet-9.4.51.v20230217.jar:/export/server/hadoop-3.3.6/share/hadoop/common/lib/dnsjava-2.1.7.jar:/export/server/hadoop-3.3.6/share/hadoop/common/lib/zookeeper-jute-3.6.3.jar:/export/server/hadoop-3.3.6/share/hadoop/common/lib/netty-codec-redis-4.1.89.Final.jar:/export/server/hadoop-3.3.6/share/hadoop/common/lib/jetty-webapp-9.4.51.v20230217.jar:/export/server/hadoop-3.3.6/share/hadoop/common/lib/kerb-util-1.0.1.jar:/export/server/hadoop-3.3.6/share/hadoop/common/lib/jackson-annotations-2.12.7.jar:/export/server/hadoop-3.3.6/share/hadoop/common/lib/hadoop-annotations-3.3.6.jar:/export/server/hadoop-3.3.6/share/hado
op/common/lib/jackson-databind-2.12.7.1.jar:/export/server/hadoop-3.3.6/share/hadoop/common/lib/animal-sniffer-annotations-1.17.jar:/export/server/hadoop-3.3.6/share/hadoop/common/lib/netty-handler-proxy-4.1.89.Final.jar:/export/server/hadoop-3.3.6/share/hadoop/common/lib/paranamer-2.3.jar:/export/server/hadoop-3.3.6/share/hadoop/common/lib/kerb-core-1.0.1.jar:/export/server/hadoop-3.3.6/share/hadoop/common/lib/kerb-simplekdc-1.0.1.jar:/export/server/hadoop-3.3.6/share/hadoop/common/lib/gson-2.9.0.jar:/export/server/hadoop-3.3.6/share/hadoop/common/lib/kerb-admin-1.0.1.jar:/export/server/hadoop-3.3.6/share/hadoop/common/lib/jetty-security-9.4.51.v20230217.jar:/export/server/hadoop-3.3.6/share/hadoop/common/lib/netty-transport-native-kqueue-4.1.89.Final-osx-x86_64.jar:/export/server/hadoop-3.3.6/share/hadoop/common/lib/re2j-1.1.jar:/export/server/hadoop-3.3.6/share/hadoop/common/lib/httpclient-4.5.13.jar:/export/server/hadoop-3.3.6/share/hadoop/common/lib/curator-client-5.2.0.jar:/export/server/hadoop-3.3.6/share/hadoop/common/lib/netty-transport-classes-epoll-4.1.89.Final.jar:/export/server/hadoop-3.3.6/share/hadoop/common/lib/netty-resolver-dns-4.1.89.Final.jar:/export/server/hadoop-3.3.6/share/hadoop/common/lib/netty-resolver-4.1.89.Final.jar:/export/server/hadoop-3.3.6/share/hadoop/common/lib/jetty-io-9.4.51.v20230217.jar:/export/server/hadoop-3.3.6/share/hadoop/common/lib/commons-net-3.9.0.jar:/export/server/hadoop-3.3.6/share/hadoop/common/lib/snappy-java-1.1.8.2.jar:/export/server/hadoop-3.3.6/share/hadoop/common/lib/jackson-core-asl-1.9.13.jar:/export/server/hadoop-3.3.6/share/hadoop/common/lib/woodstox-core-5.4.0.jar:/export/server/hadoop-3.3.6/share/hadoop/common/lib/netty-transport-classes-kqueue-4.1.89.Final.jar:/export/server/hadoop-3.3.6/share/hadoop/common/lib/kerby-xdr-1.0.1.jar:/export/server/hadoop-3.3.6/share/hadoop/common/lib/netty-codec-mqtt-4.1.89.Final.jar:/export/server/hadoop-3.3.6/share/hadoop/common/lib/nimbus-jose-jwt-9.8.1.jar:/export/ser
ver/hadoop-3.3.6/share/hadoop/common/lib/netty-buffer-4.1.89.Final.jar:/export/server/hadoop-3.3.6/share/hadoop/common/hadoop-common-3.3.6.jar:/export/server/hadoop-3.3.6/share/hadoop/common/hadoop-nfs-3.3.6.jar:/export/server/hadoop-3.3.6/share/hadoop/common/hadoop-common-3.3.6-tests.jar:/export/server/hadoop-3.3.6/share/hadoop/common/hadoop-registry-3.3.6.jar:/export/server/hadoop-3.3.6/share/hadoop/common/hadoop-kms-3.3.6.jar:/export/server/hadoop-3.3.6/share/hadoop/hdfs:/export/server/hadoop-3.3.6/share/hadoop/hdfs/lib/netty-resolver-dns-native-macos-4.1.89.Final-osx-aarch_64.jar:/export/server/hadoop-3.3.6/share/hadoop/hdfs/lib/netty-codec-socks-4.1.89.Final.jar:/export/server/hadoop-3.3.6/share/hadoop/hdfs/lib/kerb-identity-1.0.1.jar:/export/server/hadoop-3.3.6/share/hadoop/hdfs/lib/kerby-asn1-1.0.1.jar:/export/server/hadoop-3.3.6/share/hadoop/hdfs/lib/HikariCP-java7-2.4.12.jar:/export/server/hadoop-3.3.6/share/hadoop/hdfs/lib/commons-math3-3.1.1.jar:/export/server/hadoop-3.3.6/share/hadoop/hdfs/lib/jetty-xml-9.4.51.v20230217.jar:/export/server/hadoop-3.3.6/share/hadoop/hdfs/lib/kerb-crypto-1.0.1.jar:/export/server/hadoop-3.3.6/share/hadoop/hdfs/lib/netty-codec-xml-4.1.89.Final.jar:/export/server/hadoop-3.3.6/share/hadoop/hdfs/lib/netty-transport-sctp-4.1.89.Final.jar:/export/server/hadoop-3.3.6/share/hadoop/hdfs/lib/commons-collections-3.2.2.jar:/export/server/hadoop-3.3.6/share/hadoop/hdfs/lib/commons-codec-1.15.jar:/export/server/hadoop-3.3.6/share/hadoop/hdfs/lib/jackson-mapper-asl-1.9.13.jar:/export/server/hadoop-3.3.6/share/hadoop/hdfs/lib/reload4j-1.2.22.jar:/export/server/hadoop-3.3.6/share/hadoop/hdfs/lib/jersey-server-1.19.4.jar:/export/server/hadoop-3.3.6/share/hadoop/hdfs/lib/jsch-0.1.55.jar:/export/server/hadoop-3.3.6/share/hadoop/hdfs/lib/kerby-util-1.0.1.jar:/export/server/hadoop-3.3.6/share/hadoop/hdfs/lib/commons-io-2.8.0.jar:/export/server/hadoop-3.3.6/share/hadoop/hdfs/lib/kotlin-stdlib-1.4.10.jar:/export/server/hadoop-3.3.6/share/hadoop/hdf
s/lib/token-provider-1.0.1.jar:/export/server/hadoop-3.3.6/share/hadoop/hdfs/lib/stax2-api-4.2.1.jar:/export/server/hadoop-3.3.6/share/hadoop/hdfs/lib/netty-codec-dns-4.1.89.Final.jar:/export/server/hadoop-3.3.6/share/hadoop/hdfs/lib/avro-1.7.7.jar:/export/server/hadoop-3.3.6/share/hadoop/hdfs/lib/commons-text-1.10.0.jar:/export/server/hadoop-3.3.6/share/hadoop/hdfs/lib/netty-transport-native-kqueue-4.1.89.Final-osx-aarch_64.jar:/export/server/hadoop-3.3.6/share/hadoop/hdfs/lib/hadoop-auth-3.3.6.jar:/export/server/hadoop-3.3.6/share/hadoop/hdfs/lib/kerby-config-1.0.1.jar:/export/server/hadoop-3.3.6/share/hadoop/hdfs/lib/j2objc-annotations-1.1.jar:/export/server/hadoop-3.3.6/share/hadoop/hdfs/lib/commons-lang3-3.12.0.jar:/export/server/hadoop-3.3.6/share/hadoop/hdfs/lib/jetty-server-9.4.51.v20230217.jar:/export/server/hadoop-3.3.6/share/hadoop/hdfs/lib/jersey-servlet-1.19.4.jar:/export/server/hadoop-3.3.6/share/hadoop/hdfs/lib/kerb-common-1.0.1.jar:/export/server/hadoop-3.3.6/share/hadoop/hdfs/lib/hadoop-shaded-protobuf_3_7-1.1.1.jar:/export/server/hadoop-3.3.6/share/hadoop/hdfs/lib/guava-27.0-jre.jar:/export/server/hadoop-3.3.6/share/hadoop/hdfs/lib/netty-transport-rxtx-4.1.89.Final.jar:/export/server/hadoop-3.3.6/share/hadoop/hdfs/lib/jersey-core-1.19.4.jar:/export/server/hadoop-3.3.6/share/hadoop/hdfs/lib/commons-logging-1.1.3.jar:/export/server/hadoop-3.3.6/share/hadoop/hdfs/lib/curator-recipes-5.2.0.jar:/export/server/hadoop-3.3.6/share/hadoop/hdfs/lib/netty-resolver-dns-classes-macos-4.1.89.Final.jar:/export/server/hadoop-3.3.6/share/hadoop/hdfs/lib/netty-handler-ssl-ocsp-4.1.89.Final.jar:/export/server/hadoop-3.3.6/share/hadoop/hdfs/lib/okio-2.8.0.jar:/export/server/hadoop-3.3.6/share/hadoop/hdfs/lib/netty-transport-native-epoll-4.1.89.Final-linux-aarch_64.jar:/export/server/hadoop-3.3.6/share/hadoop/hdfs/lib/curator-framework-5.2.0.jar:/export/server/hadoop-3.3.6/share/hadoop/hdfs/lib/netty-codec-http-4.1.89.Final.jar:/export/server/hadoop-3.3.6/share/hadoop/
hdfs/lib/netty-codec-http2-4.1.89.Final.jar:/export/server/hadoop-3.3.6/share/hadoop/hdfs/lib/netty-codec-haproxy-4.1.89.Final.jar:/export/server/hadoop-3.3.6/share/hadoop/hdfs/lib/netty-transport-4.1.89.Final.jar:/export/server/hadoop-3.3.6/share/hadoop/hdfs/lib/checker-qual-2.5.2.jar:/export/server/hadoop-3.3.6/share/hadoop/hdfs/lib/jsr305-3.0.2.jar:/export/server/hadoop-3.3.6/share/hadoop/hdfs/lib/kerb-server-1.0.1.jar:/export/server/hadoop-3.3.6/share/hadoop/hdfs/lib/jetty-util-ajax-9.4.51.v20230217.jar:/export/server/hadoop-3.3.6/share/hadoop/hdfs/lib/javax.servlet-api-3.1.0.jar:/export/server/hadoop-3.3.6/share/hadoop/hdfs/lib/json-simple-1.1.1.jar:/export/server/hadoop-3.3.6/share/hadoop/hdfs/lib/jaxb-impl-2.2.3-1.jar:/export/server/hadoop-3.3.6/share/hadoop/hdfs/lib/kerby-pkix-1.0.1.jar:/export/server/hadoop-3.3.6/share/hadoop/hdfs/lib/kerb-client-1.0.1.jar:/export/server/hadoop-3.3.6/share/hadoop/hdfs/lib/netty-handler-4.1.89.Final.jar:/export/server/hadoop-3.3.6/share/hadoop/hdfs/lib/zookeeper-3.6.3.jar:/export/server/hadoop-3.3.6/share/hadoop/hdfs/lib/hadoop-shaded-guava-1.1.1.jar:/export/server/hadoop-3.3.6/share/hadoop/hdfs/lib/commons-beanutils-1.9.4.jar:/export/server/hadoop-3.3.6/share/hadoop/hdfs/lib/jetty-util-9.4.51.v20230217.jar:/export/server/hadoop-3.3.6/share/hadoop/hdfs/lib/commons-configuration2-2.8.0.jar:/export/server/hadoop-3.3.6/share/hadoop/hdfs/lib/jersey-json-1.20.jar:/export/server/hadoop-3.3.6/share/hadoop/hdfs/lib/listenablefuture-9999.0-empty-to-avoid-conflict-with-guava.jar:/export/server/hadoop-3.3.6/share/hadoop/hdfs/lib/netty-transport-udt-4.1.89.Final.jar:/export/server/hadoop-3.3.6/share/hadoop/hdfs/lib/audience-annotations-0.5.0.jar:/export/server/hadoop-3.3.6/share/hadoop/hdfs/lib/commons-compress-1.21.jar:/export/server/hadoop-3.3.6/share/hadoop/hdfs/lib/netty-codec-4.1.89.Final.jar:/export/server/hadoop-3.3.6/share/hadoop/hdfs/lib/netty-codec-memcache-4.1.89.Final.jar:/export/server/hadoop-3.3.6/share/hadoop/hdfs/lib/jax
b-api-2.2.11.jar:/export/server/hadoop-3.3.6/share/hadoop/hdfs/lib/commons-cli-1.2.jar:/export/server/hadoop-3.3.6/share/hadoop/hdfs/lib/jettison-1.5.4.jar:/export/server/hadoop-3.3.6/share/hadoop/hdfs/lib/netty-codec-smtp-4.1.89.Final.jar:/export/server/hadoop-3.3.6/share/hadoop/hdfs/lib/netty-transport-native-epoll-4.1.89.Final-linux-x86_64.jar:/export/server/hadoop-3.3.6/share/hadoop/hdfs/lib/jetty-http-9.4.51.v20230217.jar:/export/server/hadoop-3.3.6/share/hadoop/hdfs/lib/netty-common-4.1.89.Final.jar:/export/server/hadoop-3.3.6/share/hadoop/hdfs/lib/commons-daemon-1.0.13.jar:/export/server/hadoop-3.3.6/share/hadoop/hdfs/lib/httpcore-4.4.13.jar:/export/server/hadoop-3.3.6/share/hadoop/hdfs/lib/failureaccess-1.0.jar:/export/server/hadoop-3.3.6/share/hadoop/hdfs/lib/jcip-annotations-1.0-1.jar:/export/server/hadoop-3.3.6/share/hadoop/hdfs/lib/jakarta.activation-api-1.2.1.jar:/export/server/hadoop-3.3.6/share/hadoop/hdfs/lib/netty-codec-stomp-4.1.89.Final.jar:/export/server/hadoop-3.3.6/share/hadoop/hdfs/lib/netty-all-4.1.89.Final.jar:/export/server/hadoop-3.3.6/share/hadoop/hdfs/lib/protobuf-java-2.5.0.jar:/export/server/hadoop-3.3.6/share/hadoop/hdfs/lib/metrics-core-3.2.4.jar:/export/server/hadoop-3.3.6/share/hadoop/hdfs/lib/netty-transport-native-unix-common-4.1.89.Final.jar:/export/server/hadoop-3.3.6/share/hadoop/hdfs/lib/netty-resolver-dns-native-macos-4.1.89.Final-osx-x86_64.jar:/export/server/hadoop-3.3.6/share/hadoop/hdfs/lib/jackson-core-2.12.7.jar:/export/server/hadoop-3.3.6/share/hadoop/hdfs/lib/jsr311-api-1.1.1.jar:/export/server/hadoop-3.3.6/share/hadoop/hdfs/lib/jetty-servlet-9.4.51.v20230217.jar:/export/server/hadoop-3.3.6/share/hadoop/hdfs/lib/re2j-1.1.jar:/export/server/hadoop-3.3.6/share/hadoop/hdfs/lib/dnsjava-2.1.7.jar:/export/server/hadoop-3.3.6/share/hadoop/hdfs/lib/zookeeper-jute-3.6.3.jar:/export/server/hadoop-3.3.6/share/hadoop/hdfs/lib/netty-codec-redis-4.1.89.Final.jar:/export/server/hadoop-3.3.6/share/hadoop/hdfs/lib/jetty-webapp-9.4.51
.v20230217.jar:/export/server/hadoop-3.3.6/share/hadoop/hdfs/lib/kerb-util-1.0.1.jar:/export/server/hadoop-3.3.6/share/hadoop/hdfs/lib/jackson-annotations-2.12.7.jar:/export/server/hadoop-3.3.6/share/hadoop/hdfs/lib/hadoop-annotations-3.3.6.jar:/export/server/hadoop-3.3.6/share/hadoop/hdfs/lib/jackson-databind-2.12.7.1.jar:/export/server/hadoop-3.3.6/share/hadoop/hdfs/lib/animal-sniffer-annotations-1.17.jar:/export/server/hadoop-3.3.6/share/hadoop/hdfs/lib/netty-handler-proxy-4.1.89.Final.jar:/export/server/hadoop-3.3.6/share/hadoop/hdfs/lib/paranamer-2.3.jar:/export/server/hadoop-3.3.6/share/hadoop/hdfs/lib/kerb-core-1.0.1.jar:/export/server/hadoop-3.3.6/share/hadoop/hdfs/lib/kerb-simplekdc-1.0.1.jar:/export/server/hadoop-3.3.6/share/hadoop/hdfs/lib/netty-3.10.6.Final.jar:/export/server/hadoop-3.3.6/share/hadoop/hdfs/lib/gson-2.9.0.jar:/export/server/hadoop-3.3.6/share/hadoop/hdfs/lib/leveldbjni-all-1.8.jar:/export/server/hadoop-3.3.6/share/hadoop/hdfs/lib/kerb-admin-1.0.1.jar:/export/server/hadoop-3.3.6/share/hadoop/hdfs/lib/jetty-security-9.4.51.v20230217.jar:/export/server/hadoop-3.3.6/share/hadoop/hdfs/lib/netty-transport-native-kqueue-4.1.89.Final-osx-x86_64.jar:/export/server/hadoop-3.3.6/share/hadoop/hdfs/lib/httpclient-4.5.13.jar:/export/server/hadoop-3.3.6/share/hadoop/hdfs/lib/curator-client-5.2.0.jar:/export/server/hadoop-3.3.6/share/hadoop/hdfs/lib/netty-transport-classes-epoll-4.1.89.Final.jar:/export/server/hadoop-3.3.6/share/hadoop/hdfs/lib/netty-resolver-dns-4.1.89.Final.jar:/export/server/hadoop-3.3.6/share/hadoop/hdfs/lib/netty-resolver-4.1.89.Final.jar:/export/server/hadoop-3.3.6/share/hadoop/hdfs/lib/jetty-io-9.4.51.v20230217.jar:/export/server/hadoop-3.3.6/share/hadoop/hdfs/lib/okhttp-4.9.3.jar:/export/server/hadoop-3.3.6/share/hadoop/hdfs/lib/commons-net-3.9.0.jar:/export/server/hadoop-3.3.6/share/hadoop/hdfs/lib/snappy-java-1.1.8.2.jar:/export/server/hadoop-3.3.6/share/hadoop/hdfs/lib/jackson-core-asl-1.9.13.jar:/export/server/hadoop-3.3.6/sh
are/hadoop/hdfs/lib/woodstox-core-5.4.0.jar:/export/server/hadoop-3.3.6/share/hadoop/hdfs/lib/netty-transport-classes-kqueue-4.1.89.Final.jar:/export/server/hadoop-3.3.6/share/hadoop/hdfs/lib/kerby-xdr-1.0.1.jar:/export/server/hadoop-3.3.6/share/hadoop/hdfs/lib/netty-codec-mqtt-4.1.89.Final.jar:/export/server/hadoop-3.3.6/share/hadoop/hdfs/lib/nimbus-jose-jwt-9.8.1.jar:/export/server/hadoop-3.3.6/share/hadoop/hdfs/lib/netty-buffer-4.1.89.Final.jar:/export/server/hadoop-3.3.6/share/hadoop/hdfs/lib/kotlin-stdlib-common-1.4.10.jar:/export/server/hadoop-3.3.6/share/hadoop/hdfs/hadoop-hdfs-rbf-3.3.6-tests.jar:/export/server/hadoop-3.3.6/share/hadoop/hdfs/hadoop-hdfs-native-client-3.3.6-tests.jar:/export/server/hadoop-3.3.6/share/hadoop/hdfs/hadoop-hdfs-3.3.6-tests.jar:/export/server/hadoop-3.3.6/share/hadoop/hdfs/hadoop-hdfs-native-client-3.3.6.jar:/export/server/hadoop-3.3.6/share/hadoop/hdfs/hadoop-hdfs-client-3.3.6.jar:/export/server/hadoop-3.3.6/share/hadoop/hdfs/hadoop-hdfs-nfs-3.3.6.jar:/export/server/hadoop-3.3.6/share/hadoop/hdfs/hadoop-hdfs-rbf-3.3.6.jar:/export/server/hadoop-3.3.6/share/hadoop/hdfs/hadoop-hdfs-3.3.6.jar:/export/server/hadoop-3.3.6/share/hadoop/hdfs/hadoop-hdfs-client-3.3.6-tests.jar:/export/server/hadoop-3.3.6/share/hadoop/hdfs/hadoop-hdfs-httpfs-3.3.6.jar:/export/server/hadoop-3.3.6/share/hadoop/mapreduce/hadoop-mapreduce-client-app-3.3.6.jar:/export/server/hadoop-3.3.6/share/hadoop/mapreduce/hadoop-mapreduce-client-uploader-3.3.6.jar:/export/server/hadoop-3.3.6/share/hadoop/mapreduce/hadoop-mapreduce-examples-3.3.6.jar:/export/server/hadoop-3.3.6/share/hadoop/mapreduce/hadoop-mapreduce-client-common-3.3.6.jar:/export/server/hadoop-3.3.6/share/hadoop/mapreduce/hadoop-mapreduce-client-nativetask-3.3.6.jar:/export/server/hadoop-3.3.6/share/hadoop/mapreduce/hadoop-mapreduce-client-core-3.3.6.jar:/export/server/hadoop-3.3.6/share/hadoop/mapreduce/hadoop-mapreduce-client-hs-3.3.6.jar:/export/server/hadoop-3.3.6/share/hadoop/mapreduce/hadoop-mapredu
ce-client-jobclient-3.3.6-tests.jar:/export/server/hadoop-3.3.6/share/hadoop/mapreduce/hadoop-mapreduce-client-hs-plugins-3.3.6.jar:/export/server/hadoop-3.3.6/share/hadoop/mapreduce/hadoop-mapreduce-client-jobclient-3.3.6.jar:/export/server/hadoop-3.3.6/share/hadoop/mapreduce/hadoop-mapreduce-client-shuffle-3.3.6.jar:/export/server/hadoop-3.3.6/share/hadoop/yarn:/export/server/hadoop-3.3.6/share/hadoop/yarn/lib/guice-servlet-4.0.jar:/export/server/hadoop-3.3.6/share/hadoop/yarn/lib/java-util-1.9.0.jar:/export/server/hadoop-3.3.6/share/hadoop/yarn/lib/objenesis-2.6.jar:/export/server/hadoop-3.3.6/share/hadoop/yarn/lib/jakarta.xml.bind-api-2.3.2.jar:/export/server/hadoop-3.3.6/share/hadoop/yarn/lib/fst-2.50.jar:/export/server/hadoop-3.3.6/share/hadoop/yarn/lib/websocket-client-9.4.51.v20230217.jar:/export/server/hadoop-3.3.6/share/hadoop/yarn/lib/jersey-client-1.19.4.jar:/export/server/hadoop-3.3.6/share/hadoop/yarn/lib/bcpkix-jdk15on-1.68.jar:/export/server/hadoop-3.3.6/share/hadoop/yarn/lib/jackson-jaxrs-json-provider-2.12.7.jar:/export/server/hadoop-3.3.6/share/hadoop/yarn/lib/asm-tree-9.4.jar:/export/server/hadoop-3.3.6/share/hadoop/yarn/lib/javax.inject-1.jar:/export/server/hadoop-3.3.6/share/hadoop/yarn/lib/mssql-jdbc-6.2.1.jre7.jar:/export/server/hadoop-3.3.6/share/hadoop/yarn/lib/snakeyaml-2.0.jar:/export/server/hadoop-3.3.6/share/hadoop/yarn/lib/javax-websocket-server-impl-9.4.51.v20230217.jar:/export/server/hadoop-3.3.6/share/hadoop/yarn/lib/websocket-api-9.4.51.v20230217.jar:/export/server/hadoop-3.3.6/share/hadoop/yarn/lib/asm-commons-9.4.jar:/export/server/hadoop-3.3.6/share/hadoop/yarn/lib/bcprov-jdk15on-1.68.jar:/export/server/hadoop-3.3.6/share/hadoop/yarn/lib/geronimo-jcache_1.0_spec-1.0-alpha-1.jar:/export/server/hadoop-3.3.6/share/hadoop/yarn/lib/jackson-jaxrs-base-2.12.7.jar:/export/server/hadoop-3.3.6/share/hadoop/yarn/lib/ehcache-3.3.1.jar:/export/server/hadoop-3.3.6/share/hadoop/yarn/lib/javax-websocket-client-impl-9.4.51.v20230217.jar:/export/
server/hadoop-3.3.6/share/hadoop/yarn/lib/jline-3.9.0.jar:/export/server/hadoop-3.3.6/share/hadoop/yarn/lib/websocket-server-9.4.51.v20230217.jar:/export/server/hadoop-3.3.6/share/hadoop/yarn/lib/javax.websocket-api-1.0.jar:/export/server/hadoop-3.3.6/share/hadoop/yarn/lib/javax.websocket-client-api-1.0.jar:/export/server/hadoop-3.3.6/share/hadoop/yarn/lib/jetty-plus-9.4.51.v20230217.jar:/export/server/hadoop-3.3.6/share/hadoop/yarn/lib/jetty-client-9.4.51.v20230217.jar:/export/server/hadoop-3.3.6/share/hadoop/yarn/lib/jetty-annotations-9.4.51.v20230217.jar:/export/server/hadoop-3.3.6/share/hadoop/yarn/lib/websocket-servlet-9.4.51.v20230217.jar:/export/server/hadoop-3.3.6/share/hadoop/yarn/lib/swagger-annotations-1.5.4.jar:/export/server/hadoop-3.3.6/share/hadoop/yarn/lib/json-io-2.5.1.jar:/export/server/hadoop-3.3.6/share/hadoop/yarn/lib/jetty-jndi-9.4.51.v20230217.jar:/export/server/hadoop-3.3.6/share/hadoop/yarn/lib/aopalliance-1.0.jar:/export/server/hadoop-3.3.6/share/hadoop/yarn/lib/websocket-common-9.4.51.v20230217.jar:/export/server/hadoop-3.3.6/share/hadoop/yarn/lib/jersey-guice-1.19.4.jar:/export/server/hadoop-3.3.6/share/hadoop/yarn/lib/jna-5.2.0.jar:/export/server/hadoop-3.3.6/share/hadoop/yarn/lib/jackson-module-jaxb-annotations-2.12.7.jar:/export/server/hadoop-3.3.6/share/hadoop/yarn/lib/guice-4.0.jar:/export/server/hadoop-3.3.6/share/hadoop/yarn/hadoop-yarn-server-sharedcachemanager-3.3.6.jar:/export/server/hadoop-3.3.6/share/hadoop/yarn/hadoop-yarn-server-applicationhistoryservice-3.3.6.jar:/export/server/hadoop-3.3.6/share/hadoop/yarn/hadoop-yarn-registry-3.3.6.jar:/export/server/hadoop-3.3.6/share/hadoop/yarn/hadoop-yarn-applications-mawo-core-3.3.6.jar:/export/server/hadoop-3.3.6/share/hadoop/yarn/hadoop-yarn-client-3.3.6.jar:/export/server/hadoop-3.3.6/share/hadoop/yarn/hadoop-yarn-server-nodemanager-3.3.6.jar:/export/server/hadoop-3.3.6/share/hadoop/yarn/hadoop-yarn-server-resourcemanager-3.3.6.jar:/export/server/hadoop-3.3.6/share/hadoop/yarn/ha
doop-yarn-server-timeline-pluginstorage-3.3.6.jar:/export/server/hadoop-3.3.6/share/hadoop/yarn/hadoop-yarn-server-router-3.3.6.jar:/export/server/hadoop-3.3.6/share/hadoop/yarn/hadoop-yarn-server-tests-3.3.6.jar:/export/server/hadoop-3.3.6/share/hadoop/yarn/hadoop-yarn-applications-distributedshell-3.3.6.jar:/export/server/hadoop-3.3.6/share/hadoop/yarn/hadoop-yarn-services-core-3.3.6.jar:/export/server/hadoop-3.3.6/share/hadoop/yarn/hadoop-yarn-server-web-proxy-3.3.6.jar:/export/server/hadoop-3.3.6/share/hadoop/yarn/hadoop-yarn-services-api-3.3.6.jar:/export/server/hadoop-3.3.6/share/hadoop/yarn/hadoop-yarn-common-3.3.6.jar:/export/server/hadoop-3.3.6/share/hadoop/yarn/hadoop-yarn-server-common-3.3.6.jar:/export/server/hadoop-3.3.6/share/hadoop/yarn/hadoop-yarn-applications-unmanaged-am-launcher-3.3.6.jar:/export/server/hadoop-3.3.6/share/hadoop/yarn/hadoop-yarn-api-3.3.6.jar

- STARTUP_MSG: build = https://github.com/apache/hadoop.git -r 1be78238728da9266a4f88195058f08fd012bf9c; compiled by 'ubuntu' on 2023-06-18T08:22Z

- STARTUP_MSG: java = 1.8.0_202

- ************************************************************/

- 2023-12-27 00:24:35,410 INFO namenode.NameNode: registered UNIX signal handlers for [TERM, HUP, INT]

- 2023-12-27 00:24:35,521 INFO namenode.NameNode: createNameNode [-format]

- 2023-12-27 00:24:36,139 INFO namenode.NameNode: Formatting using clusterid: CID-4dc541c7-8aa2-499a-bea5-69f139b26977

- 2023-12-27 00:24:36,182 INFO namenode.FSEditLog: Edit logging is async:true

- 2023-12-27 00:24:36,210 INFO namenode.FSNamesystem: KeyProvider: null

- 2023-12-27 00:24:36,211 INFO namenode.FSNamesystem: fsLock is fair: true

- 2023-12-27 00:24:36,212 INFO namenode.FSNamesystem: Detailed lock hold time metrics enabled: false

- 2023-12-27 00:24:36,234 INFO namenode.FSNamesystem: fsOwner = root (auth:SIMPLE)

- 2023-12-27 00:24:36,234 INFO namenode.FSNamesystem: supergroup = supergroup

- 2023-12-27 00:24:36,235 INFO namenode.FSNamesystem: isPermissionEnabled = true

- 2023-12-27 00:24:36,235 INFO namenode.FSNamesystem: isStoragePolicyEnabled = true

- 2023-12-27 00:24:36,235 INFO namenode.FSNamesystem: HA Enabled: false

- 2023-12-27 00:24:36,283 INFO common.Util: dfs.datanode.fileio.profiling.sampling.percentage set to 0. Disabling file IO profiling

- 2023-12-27 00:24:36,445 INFO blockmanagement.DatanodeManager: dfs.block.invalidate.limit : configured=1000, counted=60, effected=1000

- 2023-12-27 00:24:36,445 INFO blockmanagement.DatanodeManager: dfs.namenode.datanode.registration.ip-hostname-check=true

- 2023-12-27 00:24:36,449 INFO blockmanagement.BlockManager: dfs.namenode.startup.delay.block.deletion.sec is set to 000:00:00:00.000

- 2023-12-27 00:24:36,450 INFO blockmanagement.BlockManager: The block deletion will start around 2023 Dec 27 00:24:36

- 2023-12-27 00:24:36,451 INFO util.GSet: Computing capacity for map BlocksMap

- 2023-12-27 00:24:36,451 INFO util.GSet: VM type = 64-bit

- 2023-12-27 00:24:36,452 INFO util.GSet: 2.0% max memory 1.7 GB = 34.8 MB

- 2023-12-27 00:24:36,453 INFO util.GSet: capacity = 2^22 = 4194304 entries

- 2023-12-27 00:24:36,463 INFO blockmanagement.BlockManager: Storage policy satisfier is disabled

- 2023-12-27 00:24:36,463 INFO blockmanagement.BlockManager: dfs.block.access.token.enable = false

- 2023-12-27 00:24:36,470 INFO blockmanagement.BlockManagerSafeMode: dfs.namenode.safemode.threshold-pct = 0.999

- 2023-12-27 00:24:36,470 INFO blockmanagement.BlockManagerSafeMode: dfs.namenode.safemode.min.datanodes = 0

- 2023-12-27 00:24:36,470 INFO blockmanagement.BlockManagerSafeMode: dfs.namenode.safemode.extension = 30000

- 2023-12-27 00:24:36,471 INFO blockmanagement.BlockManager: defaultReplication = 3

- 2023-12-27 00:24:36,471 INFO blockmanagement.BlockManager: maxReplication = 512

- 2023-12-27 00:24:36,471 INFO blockmanagement.BlockManager: minReplication = 1

- 2023-12-27 00:24:36,471 INFO blockmanagement.BlockManager: maxReplicationStreams = 2

- 2023-12-27 00:24:36,471 INFO blockmanagement.BlockManager: redundancyRecheckInterval = 3000ms

- 2023-12-27 00:24:36,471 INFO blockmanagement.BlockManager: encryptDataTransfer = false

- 2023-12-27 00:24:36,471 INFO blockmanagement.BlockManager: maxNumBlocksToLog = 1000

- 2023-12-27 00:24:36,537 INFO namenode.FSDirectory: GLOBAL serial map: bits=29 maxEntries=536870911

- 2023-12-27 00:24:36,538 INFO namenode.FSDirectory: USER serial map: bits=24 maxEntries=16777215

- 2023-12-27 00:24:36,538 INFO namenode.FSDirectory: GROUP serial map: bits=24 maxEntries=16777215

- 2023-12-27 00:24:36,538 INFO namenode.FSDirectory: XATTR serial map: bits=24 maxEntries=16777215

- 2023-12-27 00:24:36,578 INFO util.GSet: Computing capacity for map INodeMap

- 2023-12-27 00:24:36,578 INFO util.GSet: VM type = 64-bit

- 2023-12-27 00:24:36,578 INFO util.GSet: 1.0% max memory 1.7 GB = 17.4 MB

- 2023-12-27 00:24:36,578 INFO util.GSet: capacity = 2^21 = 2097152 entries

- 2023-12-27 00:24:36,579 INFO namenode.FSDirectory: ACLs enabled? true

- 2023-12-27 00:24:36,579 INFO namenode.FSDirectory: POSIX ACL inheritance enabled? true

- 2023-12-27 00:24:36,579 INFO namenode.FSDirectory: XAttrs enabled? true

- 2023-12-27 00:24:36,580 INFO namenode.NameNode: Caching file names occurring more than 10 times

- 2023-12-27 00:24:36,585 INFO snapshot.SnapshotManager: Loaded config captureOpenFiles: false, skipCaptureAccessTimeOnlyChange: false, snapshotDiffAllowSnapRootDescendant: true, maxSnapshotLimit: 65536

- 2023-12-27 00:24:36,587 INFO snapshot.SnapshotManager: SkipList is disabled

- 2023-12-27 00:24:36,591 INFO util.GSet: Computing capacity for map cachedBlocks

- 2023-12-27 00:24:36,591 INFO util.GSet: VM type = 64-bit

- 2023-12-27 00:24:36,592 INFO util.GSet: 0.25% max memory 1.7 GB = 4.3 MB

- 2023-12-27 00:24:36,592 INFO util.GSet: capacity = 2^19 = 524288 entries

- 2023-12-27 00:24:36,600 INFO metrics.TopMetrics: NNTop conf: dfs.namenode.top.window.num.buckets = 10

- 2023-12-27 00:24:36,601 INFO metrics.TopMetrics: NNTop conf: dfs.namenode.top.num.users = 10

- 2023-12-27 00:24:36,601 INFO metrics.TopMetrics: NNTop conf: dfs.namenode.top.windows.minutes = 1,5,25

- 2023-12-27 00:24:36,605 INFO namenode.FSNamesystem: Retry cache on namenode is enabled

- 2023-12-27 00:24:36,605 INFO namenode.FSNamesystem: Retry cache will use 0.03 of total heap and retry cache entry expiry time is 600000 millis

- 2023-12-27 00:24:36,607 INFO util.GSet: Computing capacity for map NameNodeRetryCache

- 2023-12-27 00:24:36,607 INFO util.GSet: VM type = 64-bit

- 2023-12-27 00:24:36,607 INFO util.GSet: 0.029999999329447746% max memory 1.7 GB = 534.2 KB

- 2023-12-27 00:24:36,607 INFO util.GSet: capacity = 2^16 = 65536 entries

- 2023-12-27 00:24:36,632 INFO namenode.FSImage: Allocated new BlockPoolId: BP-626243755-192.168.18.81-1703607876623

- 2023-12-27 00:24:36,653 INFO common.Storage: Storage directory /export/data/hadoop-3.3.6/dfs/name has been successfully formatted.

- 2023-12-27 00:24:36,680 INFO namenode.FSImageFormatProtobuf: Saving image file /export/data/hadoop-3.3.6/dfs/name/current/fsimage.ckpt_0000000000000000000 using no compression

- 2023-12-27 00:24:36,837 INFO namenode.FSImageFormatProtobuf: Image file /export/data/hadoop-3.3.6/dfs/name/current/fsimage.ckpt_0000000000000000000 of size 399 bytes saved in 0 seconds .

- 2023-12-27 00:24:36,855 INFO namenode.NNStorageRetentionManager: Going to retain 1 images with txid >= 0

- 2023-12-27 00:24:36,882 INFO namenode.FSNamesystem: Stopping services started for active state

- 2023-12-27 00:24:36,882 INFO namenode.FSNamesystem: Stopping services started for standby state

- 2023-12-27 00:24:36,887 INFO namenode.FSImage: FSImageSaver clean checkpoint: txid=0 when meet shutdown.

- 2023-12-27 00:24:36,887 INFO namenode.NameNode: SHUTDOWN_MSG:

- /************************************************************

- SHUTDOWN_MSG: Shutting down NameNode at node1/192.168.18.81

- ************************************************************/

- [root@node1 hadoop]#

The line "2023-12-27 00:24:36,653 INFO common.Storage: Storage directory /export/data/hadoop-3.3.6/dfs/name has been successfully formatted." indicates that the format succeeded.
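You can also check for this marker with a script instead of reading the whole log. The sketch below assumes the format output has been saved to a file named format.log (a hypothetical name). The function name format_succeeded is also only illustrative.

```shell
#!/usr/bin/env bash
# Hypothetical helper: succeeds (exit status 0) if the NameNode format output
# read from stdin contains the success marker, fails (status 1) otherwise.
format_succeeded() {
  grep -q 'has been successfully formatted'
}
```

Usage on node1: `hdfs namenode -format 2>&1 | tee format.log`, then `format_succeeded < format.log && echo "format OK"`. The `2>&1` is included because the log lines may go to stderr rather than stdout.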

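2.2.4. Cluster Start/Stop & Web UI

Starting and stopping processes manually, one at a time

On each machine, start or stop one role process at a time by hand. This gives precise control over every process, instead of starting or stopping them all at once.

HDFS cluster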

- # Hadoop 2.x command

- hadoop-daemon.sh start|stop namenode|datanode|secondarynamenode

- # Hadoop 3.x command

- hdfs --daemon start|stop namenode|datanode|secondarynamenode

YARN cluster

- # Hadoop 2.x command

- yarn-daemon.sh start|stop resourcemanager|nodemanager

- # Hadoop 3.x command

- yarn --daemon start|stop resourcemanager|nodemanager
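In the 2.x and 3.x commands above, only the script that gets called changes. The sketch below uses a hypothetical helper named daemon_cmd that prints the correct command for a given major version, action and role. It only echoes the command, so calling it does not start anything.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print the per-daemon start/stop command.
# Usage: daemon_cmd <2|3> <start|stop> <role>
daemon_cmd() {
  local version=$1 action=$2 role=$3 tool
  # HDFS roles vs YARN roles, as listed above
  case "$role" in
    namenode|datanode|secondarynamenode) tool=hdfs ;;
    resourcemanager|nodemanager)         tool=yarn ;;
    *) echo "unknown role: $role" >&2; return 1 ;;
  esac
  case "$action" in
    start|stop) ;;
    *) echo "unknown action: $action" >&2; return 1 ;;
  esac
  if [ "$version" = 2 ]; then
    # Hadoop 2.x: hadoop-daemon.sh for HDFS roles, yarn-daemon.sh for YARN roles
    if [ "$tool" = hdfs ]; then
      echo "hadoop-daemon.sh $action $role"
    else
      echo "yarn-daemon.sh $action $role"
    fi
  else
    # Hadoop 3.x: hdfs --daemon / yarn --daemon
    echo "$tool --daemon $action $role"
  fi
}
```

For example, on node2, `daemon_cmd 3 start secondarynamenode` prints `hdfs --daemon start secondarynamenode`. To actually run it, pass the output to a shell: `bash -c "$(daemon_cmd 3 start secondarynamenode)"`.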

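One-command start/stop with shell scripts

On node1, use the shell scripts bundled with Hadoop to start the cluster with a single command. Prerequisites: passwordless SSH login between the machines, and a configured workers file.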

HDFS cluster

start-dfs.sh, stop-dfs.sh

- ####################################node1

- [root@node1 ~]# start-dfs.sh

- Starting namenodes on [node1]

- Last login: Tue Jan 2 22:33:42 CST 2024 from 192.168.18.69 on pts/0

- Starting datanodes

- Last login: Tue Jan 2 22:48:37 CST 2024 on pts/0

- node3.lwz.cn: Warning: Permanently added 'node3.lwz.cn' (ECDSA) to the list of known hosts.

- node1.lwz.cn: Warning: Permanently added 'node1.lwz.cn' (ECDSA) to the list of known hosts.

- node2.lwz.cn: Warning: Permanently added 'node2.lwz.cn' (ECDSA) to the list of known hosts.

- node2.lwz.cn: WARNING: /export/server/hadoop-3.3.6/logs does not exist. Creating.

- node3.lwz.cn: WARNING: /export/server/hadoop-3.3.6/logs does not exist. Creating.

- Starting secondary namenodes [node2]

- Last login: Tue Jan 2 22:48:40 CST 2024 on pts/0

- [root@node1 ~]# jps

- 1604 NameNode

- 1732 DataNode

- 2045 Jps

- [root@node1 ~]#

- ####################################node2

- [root@node2 ~]# jps

- 1543 SecondaryNameNode

- 1480 DataNode

- 1630 Jps

- [root@node2 ~]#

- ####################################node3

- [root@node3 ~]# jps

- 1476 DataNode

- 1547 Jps

- [root@node3 ~]#

YARN cluster

start-yarn.sh, stop-yarn.sh

- ####################################node1

- [root@node1 ~]# start-yarn.sh

- Starting resourcemanager

- Last login: Tue Jan 2 22:48:44 CST 2024 on pts/0

- Starting nodemanagers

- Last login: Tue Jan 2 22:51:50 CST 2024 on pts/0

- [root@node1 ~]# jps

- 2673 Jps

- 2322 NodeManager

- 1604 NameNode

- 1732 DataNode

- 2187 ResourceManager

- [root@node1 ~]#

- ####################################node2

- [root@node2 ~]# jps

- 1696 NodeManager

- 1543 SecondaryNameNode

- 1480 DataNode

- 1802 Jps

- ####################################node3

- [root@node3 ~]# jps

- 1476 DataNode

- 1607 NodeManager

- 1710 Jps

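Whole Hadoop cluster (HDFS and YARN together)

start-all.sh, stop-all.sh

Checking process status and logs

1. After startup, run the jps command on each machine to check whether the processes started successfully.

2. Hadoop log directory: /export/server/hadoop-3.3.6/logs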

- [root@node1 ~]# cd /export/server/hadoop-3.3.6/logs

- [root@node1 logs]# ll

- total 212

- -rw-r--r--. 1 root root 41996 Jan 2 22:48 hadoop-root-datanode-node1.lwz.cn.log

- -rw-r--r--. 1 root root 692 Jan 2 22:48 hadoop-root-datanode-node1.lwz.cn.out

- -rw-r--r--. 1 root root 51244 Jan 2 22:49 hadoop-root-namenode-node1.lwz.cn.log

- -rw-r--r--. 1 root root 692 Jan 2 22:48 hadoop-root-namenode-node1.lwz.cn.out

- -rw-r--r--. 1 root root 46338 Jan 2 22:52 hadoop-root-nodemanager-node1.lwz.cn.log

- -rw-r--r--. 1 root root 2210 Jan 2 22:52 hadoop-root-nodemanager-node1.lwz.cn.out

- -rw-r--r--. 1 root root 52832 Jan 2 22:52 hadoop-root-resourcemanager-node1.lwz.cn.log

- -rw-r--r--. 1 root root 2226 Jan 2 22:51 hadoop-root-resourcemanager-node1.lwz.cn.out

- -rw-r--r--. 1 root root 0 Dec 27 00:20 SecurityAuth-root.audit

- drwxr-xr-x. 2 root root 6 Jan 2 22:51 userlogs

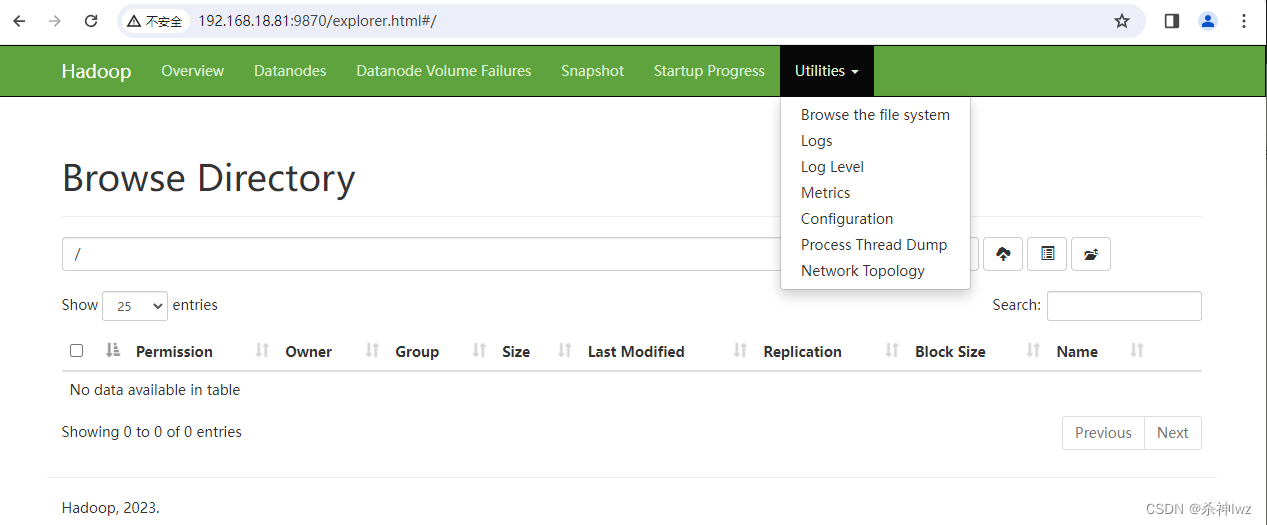
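Checking jps output by eye on three machines gets tedious. The sketch below uses a hypothetical function, missing_daemons, to compare jps output with the daemons each node should be running. The expected daemons come from the jps listings above:

- node1 runs NameNode, DataNode, ResourceManager and NodeManager.
- node2 runs SecondaryNameNode, DataNode and NodeManager.
- node3 runs DataNode and NodeManager.

```shell
#!/usr/bin/env bash
# Hypothetical helper: report expected daemons that are missing from jps output.
# Reads jps output on stdin, takes the expected daemon names as arguments,
# prints each missing name on its own line, and returns 1 if any is missing.
missing_daemons() {
  local running name missing=0
  running=$(awk '{print $2}')   # jps prints "<pid> <MainClass>" per line
  for name in "$@"; do
    if ! printf '%s\n' "$running" | grep -qxF "$name"; then
      echo "$name"
      missing=1
    fi
  done
  return "$missing"
}
```

On node1, `jps | missing_daemons NameNode DataNode ResourceManager NodeManager` prints nothing when everything is up. For another node, use `ssh node2 jps | missing_daemons SecondaryNameNode DataNode NodeManager`; this assumes jps is on the PATH of non-interactive SSH sessions. If a daemon is missing, check its log in the directory above first, e.g. `tail -n 100 /export/server/hadoop-3.3.6/logs/hadoop-root-namenode-node1.lwz.cn.log`.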

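Web UI

HDFS cluster

Address: http://namenode_host:9870

where namenode_host is the hostname or IP address of the machine running the NameNode.

If you access it by hostname, remember to add the hostname mappings to the hosts file on Windows (C:\Windows\System32\drivers\etc\hosts).

The most commonly used page is Utilities > Browse the file system.

YARN cluster

Address: http://resourcemanager_host:8088

where resourcemanager_host is the hostname or IP address of the machine running the ResourceManager.

If you access it by hostname, remember to add the hostname mappings to the hosts file on Windows (C:\Windows\System32\drivers\etc\hosts).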
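The same HTTP ports also serve REST APIs, which are useful for scripted checks. This sketch assumes the default ports shown above. It also assumes WebHDFS is enabled (dfs.webhdfs.enabled, which defaults to true). The helper names webhdfs_url and rm_info_url are hypothetical, and the helpers only build the URLs.

```shell
#!/usr/bin/env bash
# Hypothetical helpers that build REST URLs on the Web UI ports.

# webhdfs_url <namenode_host> <absolute_hdfs_path> <op>
# WebHDFS is served on the NameNode HTTP port (9870 in Hadoop 3.x).
webhdfs_url() {
  local host=$1 path=$2 op=$3
  echo "http://${host}:9870/webhdfs/v1${path}?op=${op}"
}

# rm_info_url <resourcemanager_host>
# ResourceManager REST API on port 8088: basic cluster information.
rm_info_url() {
  echo "http://$1:8088/ws/v1/cluster/info"
}
```

Two example calls:

- `curl -s "$(webhdfs_url node1 /lwztest LISTSTATUS)"` returns a JSON listing of /lwztest, roughly what Utilities > Browse the file system shows.
- `curl -s "$(rm_info_url node1)"` returns basic ResourceManager information as JSON.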

3. HDFS Operations

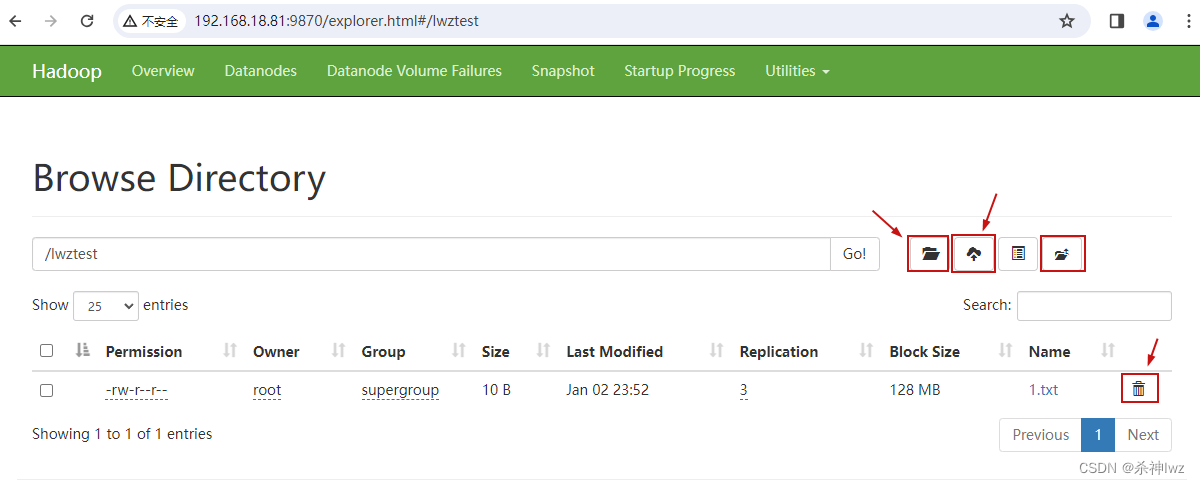

3.1. Shell Commands

- [root@node1 ~]# hadoop fs -mkdir /lwztest

- [root@node1 ~]# echo 123456adf > 1.txt

- [root@node1 ~]# cat 1.txt

- 123456adf

- [root@node1 ~]# hadoop fs -put 1.txt /lwztest

- [root@node1 ~]# hadoop fs -ls /

- Found 1 items

- drwxr-xr-x - root supergroup 0 2024-01-02 23:52 /lwztest

- [root@node1 ~]#

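3.2. Web UI Operations

Questions to consider:

1. HDFS is, at its core, a file system.

2. Like Linux, it has a directory tree made of files and folders.

3. Why does uploading even a small file take so long?

4. MapReduce + YARN Operations

Example 1: Run the MapReduce example bundled with Hadoop to estimate the value of π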

- [root@node1 ~]# cd /export/server/hadoop-3.3.6/share/hadoop/mapreduce/

- [root@node1 mapreduce]# ls

- hadoop-mapreduce-client-app-3.3.6.jar hadoop-mapreduce-client-jobclient-3.3.6.jar hadoop-mapreduce-examples-3.3.6.jar

- hadoop-mapreduce-client-common-3.3.6.jar hadoop-mapreduce-client-jobclient-3.3.6-tests.jar jdiff

- hadoop-mapreduce-client-core-3.3.6.jar hadoop-mapreduce-client-nativetask-3.3.6.jar lib-examples

- hadoop-mapreduce-client-hs-3.3.6.jar hadoop-mapreduce-client-shuffle-3.3.6.jar sources

- hadoop-mapreduce-client-hs-plugins-3.3.6.jar hadoop-mapreduce-client-uploader-3.3.6.jar

- [root@node1 mapreduce]# hadoop jar hadoop-mapreduce-examples-3.3.6.jar pi 2 4

- Number of Maps = 2

- Samples per Map = 4

- Wrote input for Map #0

- Wrote input for Map #1

- Starting Job

- 2024-01-03 00:05:35,990 INFO client.DefaultNoHARMFailoverProxyProvider: Connecting to ResourceManager at node1/192.168.18.81:8032

- 2024-01-03 00:05:36,427 INFO mapreduce.JobResourceUploader: Disabling Erasure Coding for path: /tmp/hadoop-yarn/staging/root/.staging/job_1704207115702_0001

- 2024-01-03 00:05:36,633 INFO input.FileInputFormat: Total input files to process : 2

- 2024-01-03 00:05:36,739 INFO mapreduce.JobSubmitter: number of splits:2

- 2024-01-03 00:05:36,889 INFO mapreduce.JobSubmitter: Submitting tokens for job: job_1704207115702_0001

- 2024-01-03 00:05:36,889 INFO mapreduce.JobSubmitter: Executing with tokens: []

- 2024-01-03 00:05:37,048 INFO conf.Configuration: resource-types.xml not found

- 2024-01-03 00:05:37,048 INFO resource.ResourceUtils: Unable to find 'resource-types.xml'.

- 2024-01-03 00:05:37,469 INFO impl.YarnClientImpl: Submitted application application_1704207115702_0001

- 2024-01-03 00:05:37,517 INFO mapreduce.Job: The url to track the job: http://node1:8088/proxy/application_1704207115702_0001/

- 2024-01-03 00:05:37,518 INFO mapreduce.Job: Running job: job_1704207115702_0001

- 2024-01-03 00:05:44,645 INFO mapreduce.Job: Job job_1704207115702_0001 running in uber mode : false

- 2024-01-03 00:05:44,647 INFO mapreduce.Job: map 0% reduce 0%

- 2024-01-03 00:05:49,790 INFO mapreduce.Job: map 100% reduce 0%

- 2024-01-03 00:05:56,882 INFO mapreduce.Job: map 100% reduce 100%

- 2024-01-03 00:05:57,937 INFO mapreduce.Job: Job job_1704207115702_0001 completed successfully

- 2024-01-03 00:05:58,074 INFO mapreduce.Job: Counters: 54

- File System Counters

- FILE: Number of bytes read=50

- FILE: Number of bytes written=831342

- FILE: Number of read operations=0

- FILE: Number of large read operations=0

- FILE: Number of write operations=0

- HDFS: Number of bytes read=520

- HDFS: Number of bytes written=215

- HDFS: Number of read operations=13

- HDFS: Number of large read operations=0

- HDFS: Number of write operations=3

- HDFS: Number of bytes read erasure-coded=0

- Job Counters

- Launched map tasks=2

- Launched reduce tasks=1

- Data-local map tasks=2

- Total time spent by all maps in occupied slots (ms)=5594

- Total time spent by all reduces in occupied slots (ms)=3894

- Total time spent by all map tasks (ms)=5594

- Total time spent by all reduce tasks (ms)=3894

- Total vcore-milliseconds taken by all map tasks=5594

- Total vcore-milliseconds taken by all reduce tasks=3894

- Total megabyte-milliseconds taken by all map tasks=5728256

- Total megabyte-milliseconds taken by all reduce tasks=3987456

- Map-Reduce Framework

- Map input records=2

- Map output records=4

- Map output bytes=36

- Map output materialized bytes=56

- Input split bytes=284

- Combine input records=0

- Combine output records=0

- Reduce input groups=2

- Reduce shuffle bytes=56

- Reduce input records=4

- Reduce output records=0

- Spilled Records=8

- Shuffled Maps =2

- Failed Shuffles=0

- Merged Map outputs=2

- GC time elapsed (ms)=226

- CPU time spent (ms)=1710

- Physical memory (bytes) snapshot=887963648

- Virtual memory (bytes) snapshot=8386064384

- Total committed heap usage (bytes)=736624640

- Peak Map Physical memory (bytes)=314757120

- Peak Map Virtual memory (bytes)=2793766912

- Peak Reduce Physical memory (bytes)=260431872

- Peak Reduce Virtual memory (bytes)=2799820800

- Shuffle Errors

- BAD_ID=0

- CONNECTION=0

- IO_ERROR=0

- WRONG_LENGTH=0

- WRONG_MAP=0

- WRONG_REDUCE=0

- File Input Format Counters

- Bytes Read=236

- File Output Format Counters

- Bytes Written=97

- Job Finished in 22.166 seconds

- Estimated value of Pi is 3.50000000000000000000

- [root@node1 mapreduce]#
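The estimate is π ≈ 4 × (points inside the circle) / (total points). `pi 2 4` uses 2 maps × 4 samples = 8 points, so the printed 3.5 means 7 of the 8 points fell inside. Hadoop's example generates its points with a quasi-Monte Carlo (Halton) sequence. The sketch below uses awk's pseudo-random rand() instead, so its digits will not match Hadoop's. The function names are hypothetical.

```shell
#!/usr/bin/env bash
# Hypothetical helpers illustrating the arithmetic behind the pi example.

# pi_from_counts <inside> <total>: pi ~= 4 * inside / total
pi_from_counts() {
  awk -v inside="$1" -v total="$2" 'BEGIN { printf "%.4f\n", 4 * inside / total }'
}

# mc_pi <samples> <seed>: plain pseudo-random Monte Carlo estimate in one process.
# Points (x, y) are drawn uniformly from the unit square; a point counts as
# "inside" when it lies in the quarter circle x^2 + y^2 <= 1 (area pi/4).
mc_pi() {
  awk -v n="$1" -v seed="$2" 'BEGIN {
    n = n + 0
    srand(seed)
    inside = 0
    for (i = 0; i < n; i++) {
      x = rand(); y = rand()
      if (x * x + y * y <= 1) inside++
    }
    printf "%.4f\n", 4 * inside / n
  }'
}
```

`pi_from_counts 7 8` prints 3.5000, matching the job result above. With only 8 points the estimate is coarse. `mc_pi 200000 42` should land within a few hundredths of 3.1416, which is why larger arguments to the pi example generally give better estimates.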

Example 2: Word count over a file

- [root@node1 mapreduce]# hadoop fs -mkdir -p /wordcount/input

- [root@node1 mapreduce]# echo hi lwz i m ok > hi.txt

- [root@node1 mapreduce]# hadoop fs -put hi.txt /wordcount/input

- [root@node1 mapreduce]# hadoop jar hadoop-mapreduce-examples-3.3.6.jar wordcount /wordcount/input /wordcount/output

- 2024-01-03 00:19:26,657 INFO client.DefaultNoHARMFailoverProxyProvider: Connecting to ResourceManager at node1/192.168.18.81:8032

- 2024-01-03 00:19:27,210 INFO mapreduce.JobResourceUploader: Disabling Erasure Coding for path: /tmp/hadoop-yarn/staging/root/.staging/job_1704207115702_0002

- 2024-01-03 00:19:27,547 INFO input.FileInputFormat: Total input files to process : 1

- 2024-01-03 00:19:27,680 INFO mapreduce.JobSubmitter: number of splits:1

- 2024-01-03 00:19:27,852 INFO mapreduce.JobSubmitter: Submitting tokens for job: job_1704207115702_0002

- 2024-01-03 00:19:27,852 INFO mapreduce.JobSubmitter: Executing with tokens: []

- 2024-01-03 00:19:28,010 INFO conf.Configuration: resource-types.xml not found

- 2024-01-03 00:19:28,010 INFO resource.ResourceUtils: Unable to find 'resource-types.xml'.

- 2024-01-03 00:19:28,072 INFO impl.YarnClientImpl: Submitted application application_1704207115702_0002

- 2024-01-03 00:19:28,106 INFO mapreduce.Job: The url to track the job: http://node1:8088/proxy/application_1704207115702_0002/

- 2024-01-03 00:19:28,107 INFO mapreduce.Job: Running job: job_1704207115702_0002

- 2024-01-03 00:19:35,223 INFO mapreduce.Job: Job job_1704207115702_0002 running in uber mode : false

- 2024-01-03 00:19:35,225 INFO mapreduce.Job: map 0% reduce 0%

- 2024-01-03 00:19:40,342 INFO mapreduce.Job: map 100% reduce 0%

- 2024-01-03 00:19:45,397 INFO mapreduce.Job: map 100% reduce 100%

- 2024-01-03 00:19:45,415 INFO mapreduce.Job: Job job_1704207115702_0002 completed successfully

- 2024-01-03 00:19:45,557 INFO mapreduce.Job: Counters: 54

- File System Counters

- FILE: Number of bytes read=50

- FILE: Number of bytes written=553581

- FILE: Number of read operations=0

- FILE: Number of large read operations=0

- FILE: Number of write operations=0

- HDFS: Number of bytes read=119

- HDFS: Number of bytes written=24

- HDFS: Number of read operations=8

- HDFS: Number of large read operations=0

- HDFS: Number of write operations=2

- HDFS: Number of bytes read erasure-coded=0

- Job Counters

- Launched map tasks=1

- Launched reduce tasks=1

- Data-local map tasks=1

- Total time spent by all maps in occupied slots (ms)=2563

- Total time spent by all reduces in occupied slots (ms)=2582

- Total time spent by all map tasks (ms)=2563

- Total time spent by all reduce tasks (ms)=2582

- Total vcore-milliseconds taken by all map tasks=2563

- Total vcore-milliseconds taken by all reduce tasks=2582

- Total megabyte-milliseconds taken by all map tasks=2624512

- Total megabyte-milliseconds taken by all reduce tasks=2643968

- Map-Reduce Framework

- Map input records=1

- Map output records=5

- Map output bytes=34

- Map output materialized bytes=50

- Input split bytes=105

- Combine input records=5

- Combine output records=5

- Reduce input groups=5

- Reduce shuffle bytes=50

- Reduce input records=5

- Reduce output records=5

- Spilled Records=10

- Shuffled Maps =1

- Failed Shuffles=0

- Merged Map outputs=1

- GC time elapsed (ms)=118

- CPU time spent (ms)=1350

- Physical memory (bytes) snapshot=532602880

- Virtual memory (bytes) snapshot=5595779072

- Total committed heap usage (bytes)=392167424

- Peak Map Physical memory (bytes)=266100736

- Peak Map Virtual memory (bytes)=2794917888

- Peak Reduce Physical memory (bytes)=266502144

- Peak Reduce Virtual memory (bytes)=2800861184

- Shuffle Errors

- BAD_ID=0

- CONNECTION=0

- IO_ERROR=0

- WRONG_LENGTH=0

- WRONG_MAP=0

- WRONG_REDUCE=0

- File Input Format Counters

- Bytes Read=14

- File Output Format Counters

- Bytes Written=24

- [root@node1 mapreduce]#
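For small inputs, a local pipeline is an easy way to check what the job should produce. The sketch below uses a hypothetical function, local_wordcount, that mirrors the three stages:

1. Split the text into words (map).
2. Sort so identical words are adjacent (shuffle/sort).
3. Count each group (reduce).

For hi.txt it produces five words, each with a count of 1, for 24 bytes in total. This matches Reduce output records=5 and Bytes Written=24 in the counters above.

```shell
#!/usr/bin/env bash
# Hypothetical single-machine equivalent of the wordcount example.
local_wordcount() {
  # map: one whitespace-separated word per line (empty lines dropped)
  # shuffle/sort: LC_ALL=C sorts by raw bytes, like Hadoop's Text keys
  # reduce: uniq -c counts each group; awk prints "word<TAB>count"
  tr -s '[:space:]' '\n' \
    | grep -v '^$' \
    | LC_ALL=C sort \
    | uniq -c \
    | awk '{ printf "%s\t%s\n", $2, $1 }'
}
```

Compare this with the job output: `hadoop fs -cat /wordcount/output/part-r-00000` should print the same lines as `local_wordcount < hi.txt`. The job will not run if /wordcount/output already exists, so remove it with `hadoop fs -rm -r /wordcount/output` before re-running.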

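Questions to consider:

1. Why does running a MapReduce job first make a request to YARN?

2. MapReduce appears to run in two stages: first Map, then Reduce?

3. Is MapReduce fast when processing small amounts of data?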



Original article: https://blog.csdn.net/weixin_42472027/article/details/132957921