
I. Using Flume

I. Deployment

1. Download the installation package

http://archive.apache.org/dist/flume/

2. Create the directory

mkdir -p /home/sxvbd/bigdata

3. Upload the Flume package to that directory

apache-flume-1.9.0-bin.tar.gz

4. Extract the archive and rename the directory

tar -zxvf apache-flume-1.9.0-bin.tar.gz
mv apache-flume-1.9.0-bin flume-1.9.0

5. Configure the Flume path in /etc/profile

export FLUME_HOME="/home/sxvbd/bigdata/flume-1.9.0"
export PATH=$PATH:$FLUME_HOME/bin
export CLASSPATH=.:$FLUME_HOME/lib

6. Apply the configuration

source /etc/profile
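Before moving on, it can help to confirm that the variables from step 5 took effect. The following is a minimal sketch. `check_flume_env` is a hypothetical helper name, not part of Flume. It only checks that `FLUME_HOME` is set and contains an executable `bin/flume-ng` launcher.

```shell
# Hypothetical helper (not shipped with Flume): checks that FLUME_HOME is set
# and points at a directory containing an executable bin/flume-ng launcher.
check_flume_env() {
    if [ -z "${FLUME_HOME:-}" ]; then
        echo "FLUME_HOME is not set" >&2
        return 1
    fi
    if [ ! -x "$FLUME_HOME/bin/flume-ng" ]; then
        echo "no executable flume-ng under $FLUME_HOME/bin" >&2
        return 1
    fi
    echo "FLUME_HOME looks valid: $FLUME_HOME"
}
```

A typical use is `check_flume_env && flume-ng version`, which prints the installed Flume version once the launcher is on the PATH.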

7. Change into the Flume configuration directory

cd /home/sxvbd/bigdata/flume-1.9.0/conf

8. Copy the configuration templates to their working file names

cp flume-conf.properties.template flume-conf.properties
cp flume-env.sh.template flume-env.sh

9. Edit flume-env.sh

export JAVA_HOME=/usr/local/jdk1.8.0_333

10. Create the test directory that Flume will collect files from

mkdir -p /home/sxvbd/bigdata/flumeTestDir

11. Edit the configuration file flume-conf.properties

agent.sources = source1
agent.channels = channel1
agent.sinks = sink1

# For each one of the sources, the type is defined.
# spooldir watches a directory; when a new file appears there, its contents are collected.
agent.sources.source1.type = spooldir
# bind and port belong to network sources such as netcat; spooldir does not use them.
# agent.sources.source1.bind = 0.0.0.0
# agent.sources.source1.port = 44444
agent.sources.source1.spoolDir = /home/sxvbd/bigdata/flumeTestDir/

# Each channel's type is defined.
agent.channels.channel1.type = memory
agent.channels.channel1.capacity = 1000000
agent.channels.channel1.transactionCapacity = 10000
agent.channels.channel1.keep-alive = 60

# Kafka cluster and topic.
agent.sinks.sink1.type = org.apache.flume.sink.kafka.KafkaSink
agent.sinks.sink1.kafka.bootstrap.servers = node24:9092,node25:9092,node26:9092
agent.sinks.sink1.kafka.topic = data-ncm-hljk-topic
# Note: the Flume user guide lists parseAsFlumeEvent under the Kafka channel;
# the Kafka sink's counterpart is useFlumeEventFormat (default false).
agent.sinks.sink1.parseAsFlumeEvent = false

# Bind the source and the sink to the channel.
agent.sources.source1.channels = channel1
agent.sinks.sink1.channel = channel1
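A frequent slip in a file like flume-conf.properties is binding a source or sink to a channel name that was never declared. The sketch below does a rough consistency check. `check_channel_wiring` is a hypothetical helper, not a Flume tool. It assumes one property per line and an agent named `agent` unless a second argument says otherwise.

```shell
# Hypothetical helper: verifies that every channel referenced by
# <agent>.sources.<name>.channels or <agent>.sinks.<name>.channel
# is declared in <agent>.channels. Assumes one property per line.
check_channel_wiring() {
    local conf="$1" agent="${2:-agent}" declared ref status=0
    # Value of "<agent>.channels = a b c" (space-separated channel names).
    declared=$(sed -n -E "s/^[[:space:]]*${agent}\.channels[[:space:]]*=[[:space:]]*//p" "$conf")
    # Values of the per-source and per-sink channel bindings.
    for ref in $(sed -n -E "s/^[[:space:]]*${agent}\.(sources\.[^.]+\.channels|sinks\.[^.]+\.channel)[[:space:]]*=[[:space:]]*//p" "$conf"); do
        case " $declared " in
            *" $ref "*) ;;  # binding refers to a declared channel
            *) echo "undeclared channel: $ref" >&2; status=1 ;;
        esac
    done
    return "$status"
}
```

For example, `check_channel_wiring /home/sxvbd/bigdata/flume-1.9.0/conf/flume-conf.properties` returns 0 when all bindings resolve, and otherwise prints each unresolved name and returns 1.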

12. Start Flume (run from the conf directory entered in step 7)

nohup ../bin/flume-ng agent --conf /home/sxvbd/bigdata/flume-1.9.0/conf -f /home/sxvbd/bigdata/flume-1.9.0/conf/flume-conf.properties -n agent -Dflume.root.logger=INFO,console > flume.log 2>&1 &

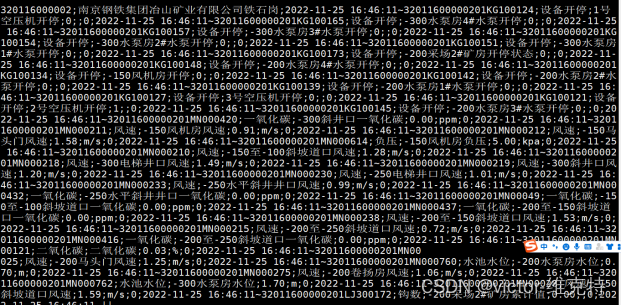

13. Upload a file into /home/sxvbd/bigdata/flumeTestDir; its contents can then be consumed from Kafka.
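The spooling directory source expects files to be complete and unchanged once they appear, and file names must not be reused. The following is a minimal sketch of one way to drop files in safely. `spool_drop` is a hypothetical helper, and it assumes the parent of the spool directory is writable and on the same filesystem, so that `mv` is a plain rename.

```shell
# Hypothetical helper: copies a file into a staging directory next to the
# spool directory, then renames it into place so Flume never sees a
# half-written file. Prints the final path on success.
spool_drop() {
    local src="$1" spool="${2%/}" staging name
    # Timestamp plus PID in the name, since file names must not be reused.
    name="$(basename "$src").$(date +%Y%m%d%H%M%S).$$"
    staging=$(mktemp -d "$(dirname "$spool")/.flume-staging.XXXXXX") || return 1
    if cp "$src" "$staging/$name" && mv "$staging/$name" "$spool/$name"; then
        rm -rf "$staging"
        echo "$spool/$name"
    else
        rm -rf "$staging"
        return 1
    fi
}
```

For example, `spool_drop ./test.log /home/sxvbd/bigdata/flumeTestDir` (where `test.log` is a placeholder file name). With the default settings, Flume renames a fully ingested file by appending `.COMPLETED`, so `ls /home/sxvbd/bigdata/flumeTestDir` shows which files have been processed. On the Kafka side, `kafka-console-consumer.sh --bootstrap-server node24:9092 --topic data-ncm-hljk-topic --from-beginning` should print the collected lines.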


- Original article: https://blog.csdn.net/qinqinde123/article/details/128130131