VMware Environment Setup

I. Environment Configuration

1. Change the hostname, then switch to the root user

sudo hostnamectl set-hostname Master001

su -l root

2. Confirm the gateway

a. Confirm the gateway of the Windows host

b. Confirm the gateway of the virtual machine

3. Modify the network configuration

vi /etc/sysconfig/network-scripts/ifcfg-ens33

ONBOOT=yes
IPADDR=192.168.241.101
NETMASK=255.255.255.0
PREFIX=24
GATEWAY=192.168.241.2
BOOTPROTO=static
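
NETMASK and PREFIX in the interface file describe the same thing in two notations, so the two values need to agree. A minimal sketch for checking that, assuming a POSIX-style shell; `netmask_to_prefix` is a hypothetical helper name (not part of NetworkManager), and it does not verify that the mask bits are contiguous.

```shell
# Convert a dotted-quad netmask (e.g. 255.255.255.0) to a CIDR prefix length.
netmask_to_prefix() {
  local mask=$1 prefix=0 octet
  local IFS=.                      # split the mask on dots
  for octet in $mask; do
    case $octet in
      255) prefix=$((prefix + 8)) ;;
      254) prefix=$((prefix + 7)) ;;
      252) prefix=$((prefix + 6)) ;;
      248) prefix=$((prefix + 5)) ;;
      240) prefix=$((prefix + 4)) ;;
      224) prefix=$((prefix + 3)) ;;
      192) prefix=$((prefix + 2)) ;;
      128) prefix=$((prefix + 1)) ;;
      0) ;;
      *) echo "invalid netmask octet: $octet" >&2; return 1 ;;
    esac
  done
  echo "$prefix"
}

netmask_to_prefix 255.255.255.0    # prints 24, matching PREFIX=24
```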

4. Edit resolv.conf, the configuration file for DNS name resolution

vi /etc/resolv.conf

5. Edit the hosts file

vi /etc/hosts
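
The source does not show what is added to /etc/hosts. A common choice, assumed here, is one line that maps the static IP from step 3 to the hostname from step 1. The sketch below appends that line only when the name is not mapped yet; `add_host_entry` is a hypothetical helper, and it is demonstrated on a scratch file rather than on /etc/hosts itself.

```shell
# Append "IP NAME" to a hosts-style file unless NAME is already mapped.
# NAME is used inside a regular expression, so keep it to plain host names.
add_host_entry() {
  local file=$1 ip=$2 name=$3
  if grep -Eq "[[:space:]]${name}([[:space:]]|\$)" "$file" 2>/dev/null; then
    return 0                       # already present, nothing to do
  fi
  printf '%s %s\n' "$ip" "$name" >> "$file"
}

hosts_demo=$(mktemp)
add_host_entry "$hosts_demo" 192.168.241.101 Master001
add_host_entry "$hosts_demo" 192.168.241.101 Master001   # second call is a no-op
cat "$hosts_demo"
```

On the VM the first argument would be /etc/hosts, run as root.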

6. Restart the network service

nmcli connection reload
nmcli connection up ens33
nmcli d connect ens33

7. Verify the network

a. Ping Baidu from the virtual machine

b. Ping the virtual machine from the host

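II. Hadoop Pseudo-Distributed Installation

1. Create the hadoop user

a. Create the user

adduser hadoop
passwd hadoop

b. Add the user to the group

usermod -a -G hadoop hadoop

c. Grant root privileges

vi /etc/sudoers

Add the following line:

hadoop ALL=(ALL) ALL

2. Switch to the hadoop user and create the directories for uploaded archives and for installed software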
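
The later steps extract everything into /home/hadoop/module, so that directory has to exist first. The name of the upload directory is not given in the source; `software` below is an assumption, and `make_layout` is a hypothetical helper that takes the base directory as its argument.

```shell
# Create the upload directory and the install directory under a base directory.
make_layout() {
  local base=$1
  mkdir -p "$base/software" "$base/module"
}

# As the hadoop user this would be:  make_layout /home/hadoop
# Demonstrated here on a scratch directory.
layout_demo=$(mktemp -d)
make_layout "$layout_demo"
ls "$layout_demo"
```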

3. Upload the archives

4. Extract the JDK and Hadoop

tar -zxf jdk-8u221-linux-x64.tar.gz -C /home/hadoop/module/
tar -zxf hadoop-3.3.1.tar.gz -C /home/hadoop/module/

5. Configure the JDK and Hadoop environment variables

vi /etc/profile

#JAVA
export JAVA_HOME=/home/hadoop/module/jdk1.8.0_221
export PATH=$PATH:$JAVA_HOME/bin
#HADOOP
export HADOOP_HOME=/home/hadoop/module/hadoop-3.3.1
export PATH=$PATH:$HADOOP_HOME/bin
export PATH=$PATH:$HADOOP_HOME/sbin
export HADOOP_MAPRED_HOME=$HADOOP_HOME
export HADOOP_COMMON_HOME=$HADOOP_HOME
export HADOOP_HDFS_HOME=$HADOOP_HOME
export YARN_HOME=$HADOOP_HOME
export HADOOP_COMMON_LIB_NATIVE_DIR=$HADOOP_HOME/lib/native
export HADOOP_OPTS="-Djava.library.path=$HADOOP_HOME/lib/native"
export JAVA_LIBRARY_PATH=$HADOOP_HOME/lib/native:$JAVA_LIBRARY_PATH

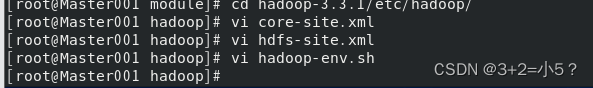
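
Each `export PATH=$PATH:...` line appends again whenever /etc/profile is re-sourced, so PATH can accumulate duplicate entries over a session. One way to avoid that, sketched here with a hypothetical `path_append` helper (not part of Hadoop or the JDK), is to append a directory only when it is missing.

```shell
# Append a directory to PATH only if it is not already present.
path_append() {
  case ":$PATH:" in
    *":$1:"*) ;;                   # already on PATH, nothing to do
    *) PATH="$PATH:$1" ;;
  esac
}

JAVA_HOME=/home/hadoop/module/jdk1.8.0_221
HADOOP_HOME=/home/hadoop/module/hadoop-3.3.1
path_append "$JAVA_HOME/bin"
path_append "$HADOOP_HOME/bin"
path_append "$HADOOP_HOME/sbin"
path_append "$HADOOP_HOME/bin"     # repeated call leaves PATH unchanged
export JAVA_HOME HADOOP_HOME PATH
```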

6. Modify the Hadoop configuration files

core-site.xml

<property>
    <name>hadoop.tmp.dir</name>
    <value>/home/hadoop/module/hadoop-3.3.1/tmp</value>
</property>
<property>
    <name>fs.defaultFS</name>
    <value>hdfs://localhost:9000</value>
</property>
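
The two core-site.xml properties only take effect inside the file's root `<configuration>` element. A minimal sketch of the complete file, written from the shell with a here-document; the values are the ones from this section, and `conf_dir` defaults to a scratch directory here (an assumption for safe experimentation) rather than $HADOOP_HOME/etc/hadoop.

```shell
# Target directory; point this at $HADOOP_HOME/etc/hadoop on a real install.
conf_dir=${conf_dir:-$(mktemp -d)}

cat > "$conf_dir/core-site.xml" <<'EOF'
<?xml version="1.0" encoding="UTF-8"?>
<configuration>
  <property>
    <name>hadoop.tmp.dir</name>
    <value>/home/hadoop/module/hadoop-3.3.1/tmp</value>
  </property>
  <property>
    <name>fs.defaultFS</name>
    <value>hdfs://localhost:9000</value>
  </property>
</configuration>
EOF

echo "wrote $conf_dir/core-site.xml"
```

The hdfs-site.xml properties are wrapped in `<configuration>` the same way.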

hdfs-site.xml

<property>
    <name>dfs.replication</name>
    <value>1</value>
</property>
<property>
    <name>dfs.namenode.name.dir</name>
    <value>/home/hadoop/module/hadoop-3.3.1/tmp/dfs/name</value>
</property>
<property>
    <name>dfs.datanode.data.dir</name>
    <value>/home/hadoop/module/hadoop-3.3.1/tmp/dfs/data</value>
</property>

hadoop-env.sh
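
The contents of Hadoop's environment script (hadoop-env.sh under etc/hadoop) are not shown in the source. The setting most commonly needed there is JAVA_HOME, because the start scripts launch the daemons over SSH and may not see the value exported in /etc/profile. Assuming the JDK path from step 5, the line would look like this:

```shell
# In $HADOOP_HOME/etc/hadoop/hadoop-env.sh (assumed content; JDK path from step 5)
export JAVA_HOME=/home/hadoop/module/jdk1.8.0_221
```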

7. Format the NameNode

If you need to format more than once, delete the data directory first; otherwise the DataNode process will fail to start

hdfs namenode -format
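
The reason for deleting the data directory before a re-format is that formatting gives the NameNode a new cluster ID, while the old DataNode directory still records the previous one. A sketch of the cleanup, assuming the hadoop.tmp.dir layout from core-site.xml; `clear_datanode_dir` is a hypothetical helper, and running it on a real install erases the blocks stored in HDFS.

```shell
# Remove the DataNode storage directory so it picks up the new cluster ID.
clear_datanode_dir() {
  local tmp_dir=$1                 # value of hadoop.tmp.dir
  rm -rf "$tmp_dir/dfs/data"
}

# On a real install (destroys HDFS data):
#   stop-dfs.sh
#   clear_datanode_dir /home/hadoop/module/hadoop-3.3.1/tmp
#   hdfs namenode -format
# Demonstrated here on a scratch directory.
scratch=$(mktemp -d)
mkdir -p "$scratch/dfs/data/current" "$scratch/dfs/name/current"
clear_datanode_dir "$scratch"
ls "$scratch/dfs"
```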

After a successful format, the name directory contains a new current folder.

8. Configure passwordless login

ssh-keygen -t rsa -P ''

Copy the key to Master001:

ssh-copy-id Master001

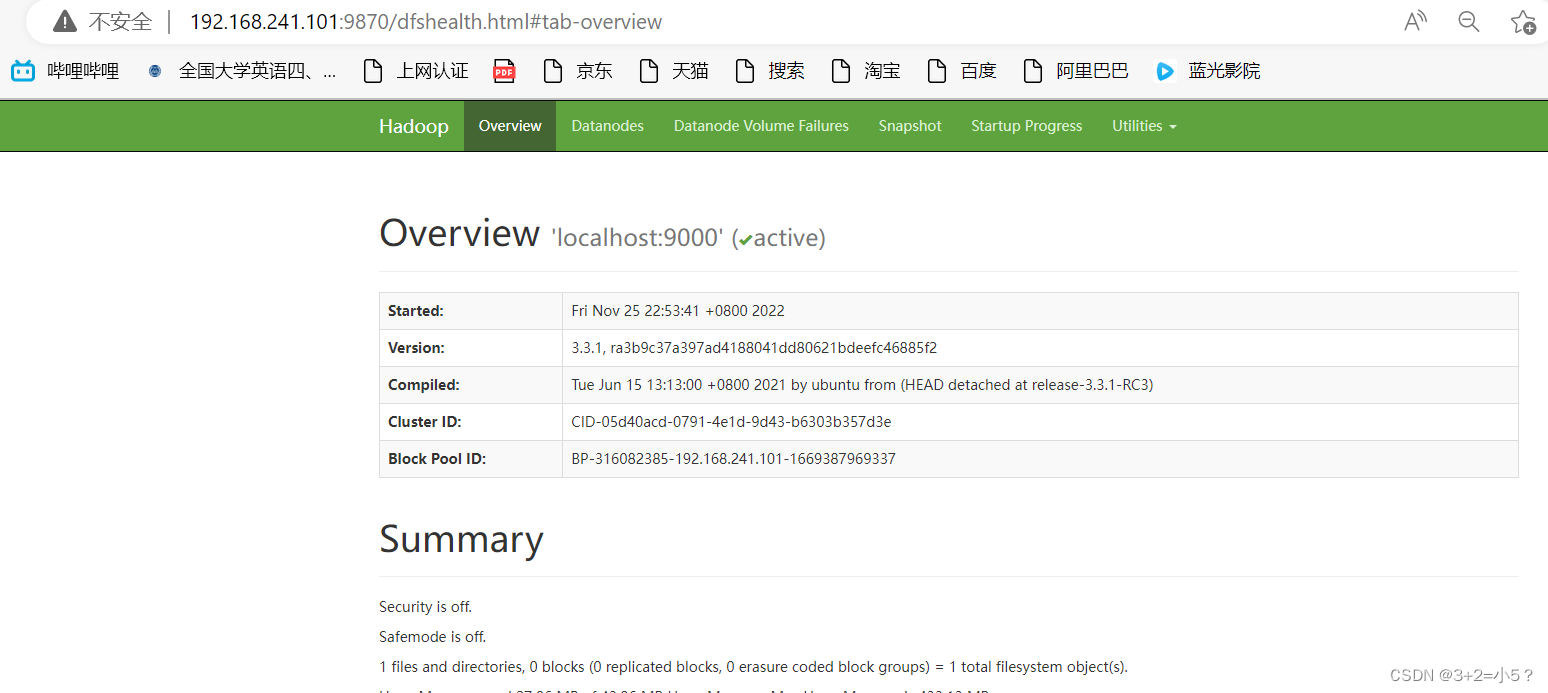
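
`ssh-keygen -t rsa -P ''` still prompts for the key file location. A non-interactive variant, sketched under the assumption that the default key path ~/.ssh/id_rsa is wanted, passes the path with -f and skips generation when a key already exists. `ensure_ssh_key` is a hypothetical helper name.

```shell
# Generate an RSA key pair with an empty passphrase unless one already exists.
ensure_ssh_key() {
  local key=$1
  if [ -f "$key" ]; then
    echo "key already exists: $key"
  else
    mkdir -p "$(dirname "$key")"
    ssh-keygen -q -t rsa -P '' -f "$key"
  fi
}

# Typical use as the hadoop user:
#   ensure_ssh_key "$HOME/.ssh/id_rsa"
#   ssh-copy-id Master001
#   ssh Master001 true    # should return without asking for a password
```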

9. Start the Hadoop cluster

Hadoop is now configured and installed.
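
The source does not list the commands for the start step. With the environment from step 5, a pseudo-distributed cluster is usually started with start-dfs.sh and start-yarn.sh, after which `jps` should list five Hadoop daemons. The sketch below checks a jps listing for them; `missing_daemons` is a hypothetical helper that reads the listing from standard input so it can be tried without a running cluster, and the PIDs in the sample are made up.

```shell
# Print the names of expected Hadoop daemons that do not appear in jps output.
missing_daemons() {
  local listing d
  listing=$(cat)
  for d in NameNode DataNode SecondaryNameNode ResourceManager NodeManager; do
    # jps prints "<pid> <name>"; match the name as the last field of a line.
    printf '%s\n' "$listing" | grep -Eq "[[:space:]]$d\$" || echo "$d"
  done
}

# On the VM:  start-dfs.sh && start-yarn.sh && jps | missing_daemons
# Sample listing in which the DataNode failed to start:
sample='2101 NameNode
2405 SecondaryNameNode
2650 ResourceManager
2788 NodeManager
3010 Jps'
printf '%s\n' "$sample" | missing_daemons    # prints DataNode
```

An empty result means all five daemons are running.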

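III. Hive Installation

1. Download the installation package

Download page: https://hive.apache.org/downloads.html

2. Upload the installation package and extract it

3. Add Hive to the environment variables

export HIVE_HOME=/home/hadoop/module/hive-3.1.2
export PATH=$PATH:$HIVE_HOME/bin

4. Modify the Hive configuration files

hive-env.sh

hive-site.xml

hive-log4j2.properties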
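
The contents of these Hive files are not shown in the source. For a MySQL-backed metastore, hive-site.xml normally needs at least the four JDBC connection properties below. This is a sketch under assumptions: the database name `hive`, the user `root`, and the placeholder password are illustrative, and the file is written to a scratch directory instead of $HIVE_HOME/conf.

```shell
# Target directory; point this at $HIVE_HOME/conf on a real install.
hive_conf_dir=${hive_conf_dir:-$(mktemp -d)}

cat > "$hive_conf_dir/hive-site.xml" <<'EOF'
<?xml version="1.0" encoding="UTF-8"?>
<configuration>
  <property>
    <name>javax.jdo.option.ConnectionURL</name>
    <!-- "hive" is an assumed database name; & must be written as &amp; in XML -->
    <value>jdbc:mysql://Master001:3306/hive?createDatabaseIfNotExist=true&amp;useSSL=false</value>
  </property>
  <property>
    <name>javax.jdo.option.ConnectionDriverName</name>
    <!-- driver class shipped in mysql-connector-java 8.x -->
    <value>com.mysql.cj.jdbc.Driver</value>
  </property>
  <property>
    <name>javax.jdo.option.ConnectionUserName</name>
    <value>root</value>
  </property>
  <property>
    <name>javax.jdo.option.ConnectionPassword</name>
    <!-- placeholder; use the MySQL password chosen during setup -->
    <value>CHANGE_ME</value>
  </property>
</configuration>
EOF

echo "wrote $hive_conf_dir/hive-site.xml"
```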

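5. Install MySQL

Check whether the Linux system already ships with a database

rpm -qa | grep mysql

Install the MySQL database

yum install -y mysql-server mysql mysql-devel

Enable MySQL at boot

systemctl enable mysqld.service

Start the MySQL service and check its status

service mysqld start
service mysqld status

Initialize MySQL

Set the administrator password

Log in to the MySQL database

mysql -u root -p

Create the database that stores the metadata

6. Configure the Hive-related files

Download mysql-connector-java-8.0.26.jar and upload it to the lib directory under the Hive installation directory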

cp mysql-connector-java-8.0.26.jar /home/hadoop/module/hive-3.1.2/lib/

7. Initialize Hive

schematool -dbType mysql -initSchema

8. Start Hive

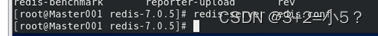

IV. Redis Installation

1. Download

2. Extract Redis

3. Install gcc

yum install gcc

4. Install the make command

yum -y install gcc automake autoconf libtool make

5. Run make

Enter the redis/src directory inside the extracted directory

6. Run make install

7. Check the installation directory

/usr/local/bin

8. Start the server

redis-server

9. Edit redis.conf

a. Back up redis.conf first, for example cp redis.conf redis.conf1

b. Then edit redis.conf and change daemonize no to daemonize yes
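
The backup and the daemonize change can also be done non-interactively. A sketch using cp and GNU sed, shown on a scratch copy so it can be tried safely; on the VM the file would be the redis.conf in the extracted Redis directory. `enable_daemonize` is a hypothetical helper name.

```shell
# Back up a redis.conf, then switch "daemonize no" to "daemonize yes".
enable_daemonize() {
  local conf=$1
  cp "$conf" "${conf}1"            # backup named redis.conf1, as in step a
  sed -i 's/^daemonize[[:space:]]\{1,\}no[[:space:]]*$/daemonize yes/' "$conf"
}

redis_demo=$(mktemp -d)
printf 'port 6379\ndaemonize no\n' > "$redis_demo/redis.conf"
enable_daemonize "$redis_demo/redis.conf"
grep '^daemonize' "$redis_demo/redis.conf"   # prints: daemonize yes
```

With that change in place, passing the edited file to redis-server as its argument starts the server in the background.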

10. Start in the background

11. Start the client

redis-cli

V. Scala Installation

The Spark version used here is 3.1.2, which corresponds to Scala 2.12.x

1. Download

https://www.scala-lang.org/download/2.12.15.html

2. Upload to the virtual machine

3. Install

tar -zxf scala-2.12.15.tgz -C /home/hadoop/module/

4. Add the environment variables

export SCALA_HOME=/home/hadoop/module/scala-2.12.15
export PATH=$PATH:$SCALA_HOME/bin

5. Start Scala

scala

Use :quit to exit.

VI. Spark Installation

1. Download Spark

https://archive.apache.org/dist/spark/spark-3.1.2/

2. Upload the downloaded package to the virtual machine

3. Extract and install

tar -zxf spark-3.1.2-bin-without-hadoop.tgz -C /home/hadoop/module/

4. Add the environment variables

export SPARK_HOME=/home/hadoop/module/spark-3.1.2
export PATH=$PATH:$SPARK_HOME/bin:$SPARK_HOME/sbin

5. Edit spark-env.sh and workers

cp spark-env.sh.template spark-env.sh

cp workers.template workers

spark-env.sh

export JAVA_HOME=/home/hadoop/module/jdk1.8.0_221
export HADOOP_HOME=/home/hadoop/module/hadoop-3.3.1
export HADOOP_CONF_DIR=/home/hadoop/module/hadoop-3.3.1/etc/hadoop
export SPARK_MASTER_IP=192.168.241.101
export SPARK_DIST_CLASSPATH=$(/home/hadoop/module/hadoop-3.3.1/bin/hadoop classpath)

workers
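
The contents of the workers file are not shown in the source. The file lists one worker host per line; for this single-node setup, a reasonable assumption is a single line with the VM's hostname. A sketch, writing to a scratch directory instead of $SPARK_HOME/conf:

```shell
# Target directory; point this at $SPARK_HOME/conf on a real install.
spark_conf_dir=${spark_conf_dir:-$(mktemp -d)}

# One worker host per line; Master001 is the hostname set in section I.
printf '%s\n' Master001 > "$spark_conf_dir/workers"
cat "$spark_conf_dir/workers"
```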

6. Start Spark

start-dfs.sh

start-yarn.sh

start-master.sh

After starting the master, open the web page http://192.168.241.101:8080/

Then start the worker. The worker needs the master URL as its argument, which here is spark://Master001:7077

./sbin/start-slave.sh spark://Master001:7077

Check the processes

7. Start spark-shell

spark-shell

Run the following command to start Spark Shell connected to the YARN cluster manager

./bin/spark-shell --master yarn

## 8. Spark programming exercise

In spark-shell, read the local file "/home/hadoop/test.txt" and count the number of lines in it
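
One way to approach this exercise, sketched under assumptions: the two Scala lines use `sc.textFile` with a `file://` URI so that the path is read from the local filesystem rather than HDFS, and they are saved to a scratch script that can be pasted into spark-shell or preloaded with its -i option. `wc -l` gives an independent count to compare against; `count_lines` is a hypothetical helper name.

```shell
# Write the Scala snippet for spark-shell to a scratch file.
snippet=$(mktemp)
cat > "$snippet" <<'EOF'
val lines = sc.textFile("file:///home/hadoop/test.txt")
println(lines.count())
EOF

# On the VM:  spark-shell -i "$snippet"

# Cross-check with plain shell tools (counts newline-terminated lines).
count_lines() { wc -l < "$1" | tr -d ' '; }

sample=$(mktemp)
printf 'a\nb\nc\n' > "$sample"
count_lines "$sample"              # prints 3
```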

## 9. Install sbt or Maven

Programs written in Scala need to be compiled and packaged with sbt or Maven.

sbt download

https://www.scala-sbt.org/download.html

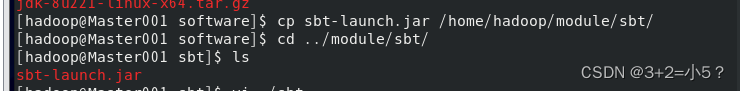

Extract sbt and copy sbt-launch.jar from its bin directory into /home/hadoop/module/sbt/

Edit ./sbt

SBT_OPTS="-Xms512M -Xmx1536M -Xss1M -XX:+CMSClassUnloadingEnabled -XX:MaxPermSize=256M"
java $SBT_OPTS -jar `dirname $0`/sbt-launch.jar "$@"

Make the ./sbt script executable

chmod u+x ./sbt

Run the following command to check that sbt works

./sbt sbtVersion

Maven download

https://dlcdn.apache.org/maven/maven-3/3.6.3/binaries/

Maven installation

unzip apache-maven-3.8.3-bin.zip -d /home/hadoop/module/

!!!Problems encountered!!!

1. Master001: ERROR: Unable to write in /home/hadoop/module/hadoop-3.3.1/logs. Aborting.

2. Warning: Permanently added 'localhost' (ECDSA) to the list of known hosts

3. Starting Hadoop as root fails with: Attempting to operate on hdfs namenode as root

4. Running yum install -y mysql-server mysql mysql-devel fails with:

Failed to download metadata for repo 'appstream': Cannot prepare internal mirrorlist: No URLs in mirrorlist

5. Underlying cause: java.sql.SQLException : null, message from server: "Host 'Master001' is not allowed to connect to this MySQL server"

6. hadoop is not in the sudoers file. This incident will be reported.

!!!Solutions!!!

1. The permissions are insufficient; grant them

sudo chmod 777 /home/hadoop/module/hadoop-3.3.1/logs/

2. Set up passwordless login

3. Add the following settings to the environment variables:

vi /etc/profile

export HDFS_NAMENODE_USER=root
export HDFS_DATANODE_USER=root
export HDFS_SECONDARYNAMENODE_USER=root
export YARN_RESOURCEMANAGER_USER=root
export YARN_NODEMANAGER_USER=root

Apply the environment variables

source /etc/profile

4. Update the repository definitions in /etc/yum.repos.d so that they use vault.centos.org instead of mirror.centos.org.

Run the following two commands to make the change:

sudo sed -i -e "s|mirrorlist=|#mirrorlist=|g" /etc/yum.repos.d/CentOS-*
sudo sed -i -e "s|#baseurl=http://mirror.centos.org|baseurl=http://vault.centos.org|g" /etc/yum.repos.d/CentOS-*
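
To see what the two sed commands change before running them on the real files, they can be tried on a copy. The sketch below applies them to a made-up repo stanza in a scratch directory; the stanza's contents are illustrative and not copied from an actual CentOS file.

```shell
repo_demo=$(mktemp -d)
cat > "$repo_demo/CentOS-Demo.repo" <<'EOF'
[appstream]
name=Demo AppStream
mirrorlist=http://mirrorlist.centos.org/?release=$releasever&arch=$basearch&repo=AppStream
#baseurl=http://mirror.centos.org/$contentdir/$releasever/AppStream/$basearch/os/
EOF

# Same substitutions as in the fix, pointed at the scratch copy (no sudo needed).
sed -i -e "s|mirrorlist=|#mirrorlist=|g" "$repo_demo"/CentOS-*
sed -i -e "s|#baseurl=http://mirror.centos.org|baseurl=http://vault.centos.org|g" "$repo_demo"/CentOS-*

cat "$repo_demo/CentOS-Demo.repo"
```

The first command comments out the mirrorlist line and the second activates a baseurl that points at the vault. Re-running the first command only prepends another #, which is harmless.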


5. Log in to MySQL and update the privileges

use mysql;
update user set host ='%' where user ='root';

Restart MySQL:

service mysqld stop;
service mysqld start;

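6. Log in as root, then run the following command:

visudo

In the file that opens, find this line:

root ALL=(ALL) ALL

Directly below it, add your own user name, for example hadoop:

hadoop ALL=(ALL) ALL

If you stop here and save, sudo will work, but it will still prompt for a password each time. To run sudo without a password, also find this line:

#%wheel ALL=(ALL) NOPASSWD: ALL

Remove the # to enable it.

Then run the following command to add the user "hadoop" to the "wheel" group:

gpasswd -a hadoop wheel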

- Original article: https://blog.csdn.net/lnxcw/article/details/128042999