
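Cloud Native: Kubernetes (Part 2)

| Hostname | IP address | CPU / memory |
| --- | --- | --- |
| k8s-master1 | 172.17.8.101 | 4C8G |
| k8s-master2 | 172.17.8.102 | 2C2G |
| k8s-master3 | 172.17.8.103 | 2C2G |
| k8s-node1 | 172.17.8.107 | 2C4G |
| k8s-node2 | 172.17.8.108 | 2C4G |
| k8s-node3 | 172.17.8.109 | 2C4G |
| k8s-etcd-1 | 172.17.8.110 | 2C2G |
| k8s-etcd-2 | 172.17.8.111 | 2C2G |
| k8s-etcd-3 | 172.17.8.112 | 2C2G |
| ha1 | 172.17.8.105 | 1C1G |
| ha2 | 172.17.8.106 | 1C1G |
| harbor | 172.17.8.104 | 1C1G |

Environment preparation

1. Disable the firewall and SELinux
2. Configure the yum repository
3. Synchronize time
4. Configure the /etc/hosts file
5. Set up passwordless SSH login
6. Configure the registry mirror (image accelerator)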


1. Configure the yum repository

    [root@k8s-master1 ~]# cat /etc/yum.repos.d/kubernetes.repo
    [kubernetes]
    name=Kubernetes
    baseurl=https://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64/
            https://mirrors.tuna.tsinghua.edu.cn/kubernetes/yum/repos/kubernetes-el7-x86_64/
    enabled=1
    gpgcheck=0
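If the same repo file has to be created on several machines, it can be written from a script instead of by hand. The following is only a sketch: `write_k8s_repo` is a hypothetical helper name, and the target path is a parameter so the function can be tried against a temporary file first.

```shell
#!/usr/bin/env bash
# Sketch: write the Kubernetes yum repo definition shown above to a given path.
# write_k8s_repo is a hypothetical helper name, not part of yum.
write_k8s_repo() {
    local target="${1:-/etc/yum.repos.d/kubernetes.repo}"
    # Quoted 'EOF' keeps the content literal (no variable expansion)
    cat > "$target" <<'EOF'
[kubernetes]
name=Kubernetes
baseurl=https://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64/
        https://mirrors.tuna.tsinghua.edu.cn/kubernetes/yum/repos/kubernetes-el7-x86_64/
enabled=1
gpgcheck=0
EOF
}
```

Calling `write_k8s_repo` with no argument writes to `/etc/yum.repos.d/kubernetes.repo`; running `yum makecache` afterwards refreshes the metadata.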


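2. Set up passwordless SSH login

    # Generate a key pair
    root@k8s-master1:~# ssh-keygen
    # Install sshpass, used to push the public key to every k8s server
    [root@k8s-master1 ~]# yum -y install sshpass
    # Public-key distribution script:
    [root@k8s-master1 ~]# cat scp-key.sh
    #!/bin/bash
    # Target host list (aligned with the host table above)
    IP="
    172.17.8.101
    172.17.8.102
    172.17.8.103
    172.17.8.104
    172.17.8.105
    172.17.8.106
    172.17.8.107
    172.17.8.108
    172.17.8.109
    172.17.8.110
    172.17.8.111
    172.17.8.112
    "
    for node in ${IP}; do
        # Options go before the host name
        sshpass -p 123456 ssh-copy-id -o StrictHostKeyChecking=no "${node}"
        if [ $? -eq 0 ]; then
            echo "${node}: key copied successfully"
        else
            echo "${node}: key copy failed"
        fi
    done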
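A single mistyped address in the host list (for example an extra leading digit) only shows up as a failed copy at run time. As a sketch, the list can be validated first; `is_ipv4` is a hypothetical helper and is not part of sshpass or OpenSSH.

```shell
#!/usr/bin/env bash
# Sketch: reject malformed IPv4 addresses before running ssh-copy-id.
# is_ipv4 is a hypothetical helper name.
is_ipv4() {
    local ip="$1" octet
    # Four dot-separated groups of 1 to 3 digits
    [[ "$ip" =~ ^([0-9]{1,3})\.([0-9]{1,3})\.([0-9]{1,3})\.([0-9]{1,3})$ ]] || return 1
    for octet in "${BASH_REMATCH[@]:1}"; do
        # 10# forces base 10 so a leading zero is not read as octal
        (( 10#$octet <= 255 )) || return 1
    done
    return 0
}
```

Inside the distribution loop this could be used as `is_ipv4 "${node}" || { echo "${node}: invalid address, skipped"; continue; }`.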


3. Time synchronization

    [root@k8s-master1 ~]# ntpdate time1.aliyun.com && hwclock -w

4. Configure /etc/hosts (optional if you access the hosts by IP address)

    [root@k8s-master1 ~]# cat /etc/hosts
    127.0.0.1   localhost localhost.localdomain localhost4 localhost4.localdomain4
    ::1         localhost localhost.localdomain localhost6 localhost6.localdomain6
    172.17.8.104 harbor.magedu.com
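The file above only maps the Harbor domain. If more names need to resolve (for instance the hostnames from the table at the top, which is an assumption and not something the original setup requires), entries can be appended idempotently. `add_hosts_entries` below is a hypothetical helper that reads "IP hostname" pairs on stdin.

```shell
#!/usr/bin/env bash
# Sketch: append "IP hostname" pairs to a hosts file, skipping names that
# are already present. add_hosts_entries is a hypothetical helper name.
add_hosts_entries() {
    local hosts_file="$1" ip name
    while read -r ip name; do
        # Ignore blank lines
        [ -z "$ip" ] && continue
        # -F: fixed string, -w: match the name only as a whole word
        if ! grep -qwF -- "$name" "$hosts_file"; then
            printf '%s %s\n' "$ip" "$name" >> "$hosts_file"
        fi
    done
}
```

For example, `printf '172.17.8.101 k8s-master1\n' | add_hosts_entries /etc/hosts` adds the line once; running it again changes nothing.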


5. Configure the registry mirror

In registry-mirrors, the first entry is the Harbor registry's domain name and the second is the Alibaba Cloud mirror address. JSON does not allow comments, so these notes must not be written inside daemon.json itself.

    [root@k8s-master1 ~]# cat /etc/docker/daemon.json
    {
      "exec-opts": ["native.cgroupdriver=systemd"],
      "registry-mirrors": [
        "http://harbor.magedu.com",
        "https://uxlgig3a.mirror.aliyuncs.com"
      ],
      "insecure-registries": ["https://harbor.intra.com"],
      "max-concurrent-downloads": 10,
      "log-driver": "json-file",
      "log-level": "warn",
      "log-opts": {
        "max-size": "10m",
        "max-file": "3"
      },
      "data-root": "/var/lib/docker"
    }


Installation steps

1. Install keepalived + haproxy

    [root@haproxy1 ~]# yum -y install keepalived haproxy
    [root@haproxy1 ~]# vim /etc/keepalived/keepalived.conf
    ! Configuration File for keepalived
    global_defs {
       notification_email {
         acassen
       }
       notification_email_from Alexandre.Cassen@firewall.loc
       smtp_server 192.168.200.1
       smtp_connect_timeout 30
       router_id LVS_DEVEL
    }
    vrrp_instance VI_1 {
        state MASTER
        interface eth0
        garp_master_delay 10
        smtp_alert
        virtual_router_id 51
        priority 80
        advert_int 1
        authentication {
            auth_type PASS
            auth_pass 1111
        }
        virtual_ipaddress {
            172.17.8.88 dev eth0 label eth0:0
        }
    }
    [root@haproxy1 ~]# systemctl enable --now keepalived.service
    [root@haproxy1 ~]# vim /etc/haproxy/haproxy.cfg
    listen k8s_api_nodes_6443
        bind 172.17.8.88:6443
        mode tcp
        server 172.17.8.101 172.17.8.101:6443 check inter 2000 fall 3 rise 5
        server 172.17.8.102 172.17.8.102:6443 check inter 2000 fall 3 rise 5
        server 172.17.8.103 172.17.8.103:6443 check inter 2000 fall 3 rise 5
    [root@haproxy1 ~]# systemctl enable --now haproxy.service
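The `listen` section repeats one `server` line per master, so it can be generated from the list of master IPs. This is a sketch under assumptions: `gen_k8s_listen` is a hypothetical helper, and the health-check parameters are simply copied from the configuration above.

```shell
#!/usr/bin/env bash
# Sketch: print a haproxy "listen" section for the kube-apiserver VIP.
# gen_k8s_listen is a hypothetical helper name.
# Usage: gen_k8s_listen <vip> <port> <master-ip>...
gen_k8s_listen() {
    local vip="$1" port="$2" ip
    shift 2
    printf 'listen k8s_api_nodes_%s\n' "$port"
    printf '    bind %s:%s\n' "$vip" "$port"
    printf '    mode tcp\n'
    # One backend line per master, named after its IP
    for ip in "$@"; do
        printf '    server %s %s:%s check inter 2000 fall 3 rise 5\n' "$ip" "$ip" "$port"
    done
}
```

For example, `gen_k8s_listen 172.17.8.88 6443 172.17.8.101 172.17.8.102 172.17.8.103` prints the same section as above. After editing the file, `haproxy -c -f /etc/haproxy/haproxy.cfg` checks the syntax before the service is restarted.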


2. Download the kubeasz Ansible-based one-step installer from github.com

    [root@k8s-master1 ~]# export release=3.3.1
    [root@k8s-master1 ~]# wget https://github.com/easzlab/kubeasz/releases/download/${release}/ezdown
    [root@k8s-master1 ~]# chmod +x ./ezdown
    [root@k8s-master1 ~]# ll
    total 140
    -rw-------. 1 root root  1259 Apr 30 21:03 anaconda-ks.cfg
    -rwxr-xr-x  1 root root 15075 Oct 11 17:40 ezdown
    # The ezdown script itself shows which kubeasz code, binaries and default container images it fetches
    # Download the kubeasz code, binaries and default container images
    [root@k8s-master1 ~]# ./ezdown -D
    # After ./ezdown -D succeeds, everything it downloaded (kubeasz code, binaries, offline images) is organized under /etc/kubeasz
    [root@k8s-master1 ~]# cd /etc/kubeasz/
    [root@k8s-master1 kubeasz]# ll
    total 84
    -rw-rw-r--  1 root root 20304 Apr 26  2021 ansible.cfg     # Ansible configuration file
    drwxr-xr-x  3 root root  4096 Oct  5 13:00 bin
    drwxr-xr-x  3 root root    20 Oct  5 13:20 clusters
    drwxrwxr-x  8 root root    92 Apr 26  2021 docs
    drwxr-xr-x  3 root root  4096 Oct  5 13:10 down            # archives of the components to install
    drwxrwxr-x  2 root root    70 Apr 26  2021 example
    -rwxrwxr-x  1 root root 24629 Apr 26  2021 ezctl
    -rwxrwxr-x  1 root root 15075 Apr 26  2021 ezdown
    drwxrwxr-x 10 root root   145 Apr 26  2021 manifests
    drwxrwxr-x  2 root root   322 Apr 26  2021 pics
    drwxrwxr-x  2 root root  4096 Oct  5 13:58 playbooks       # playbooks (yml) that install each component
    -rw-rw-r--  1 root root  5953 Apr 26  2021 README.md
    drwxrwxr-x 22 root root   323 Apr 26  2021 roles
    drwxrwxr-x  2 root root    48 Apr 26  2021 tools
    # Create the k8s cluster; this must be done from /etc/kubeasz
    [root@k8s-master1 kubeasz]# pwd
    /etc/kubeasz
    # k8s-01 is the name of the cluster
    [root@k8s-master1 kubeasz]# ./ezctl new k8s-01
    # Creating the cluster generates an Ansible hosts file that defines the etcd, master and node hosts,
    # the VIP, the container runtime, the network plugin type, and the service and pod IP ranges
    [root@k8s-master1 clusters]# pwd
    /etc/kubeasz/clusters
    [root@k8s-master1 clusters]# ll
    total 0
    drwxr-xr-x 5 root root 203 Oct  5 14:16 k8s-01
    [root@k8s-master1 ~]# vim /etc/kubeasz/clusters/k8s-01/hosts
    # 'etcd' cluster should have odd member(s) (1,3,5,...)
    # etcd hosts
    [etcd]
    172.17.8.110
    172.17.8.111
    172.17.8.112
    # master node(s)
    [kube_master]
    172.17.8.101
    172.17.8.102
    # work node(s)
    [kube_node]
    172.17.8.107
    172.17.8.108
    # [optional] harbor server, a private docker registry
    # 'NEW_INSTALL': 'true' to install a harbor server; 'false' to integrate with existed one
    [harbor]
    #172.17.8.8 NEW_INSTALL=false
    # [optional] loadbalance for accessing k8s from outside
    [ex_lb]
    172.17.8.105 LB_ROLE=master EX_APISERVER_VIP=172.17.8.88 EX_APISERVER_PORT=6443
    172.17.8.106 LB_ROLE=backup EX_APISERVER_VIP=172.17.8.88 EX_APISERVER_PORT=6443
    # time synchronization hosts
    [chrony]
    #172.17.8.1
    [all:vars]
    # --------- Main Variables ---------------
    # Secure port for apiservers (port of the master nodes)
    SECURE_PORT="6443"
    # Cluster container-runtime supported: docker, containerd
    CONTAINER_RUNTIME="docker"
    # Network plugins supported: calico, flannel, kube-router, cilium, kube-ovn
    CLUSTER_NETWORK="calico"
    # Service proxy mode of kube-proxy: 'iptables' or 'ipvs'
    PROXY_MODE="ipvs"
    # K8S Service CIDR, not overlap with node(host) networking (service network)
    SERVICE_CIDR="10.100.0.0/16"
    # Cluster CIDR (Pod CIDR), not overlap with node(host) networking (pod network)
    CLUSTER_CIDR="10.200.0.0/16"
    # Note: a client outside the cluster reaches a pod on a node via
    # node network -> service network -> pod network, so the service CIDR
    # must never overlap the pod CIDR
    # NodePort Range (ports the nodes expose to clients)
    NODE_PORT_RANGE="30000-40000"
    # Cluster DNS Domain (must match the domain used later when deploying the DNS add-on)
    CLUSTER_DNS_DOMAIN="magedu.local"
    # -------- Additional Variables (don't change the default value right now) ---
    # Binaries Directory
    bin_dir="/usr/local/bin"
    # Deploy Directory (kubeasz workspace)
    base_dir="/etc/kubeasz"
    # Directory for a specific cluster
    cluster_dir="{{ base_dir }}/clusters/k8s-01"
    # CA and other components cert/key Directory
    ca_dir="/etc/kubernetes/ssl"

In config.yml you can specify which add-ons are not installed automatically, as well as the network type to install, the number of nodes, and so on.

    [root@k8s-master1 k8s-01]# vim config.yml
    ############################
    # prepare
    ############################
    # Optional: install system packages offline (offline|online)
    INSTALL_SOURCE: "online"
    # Optional: apply OS security hardening, see github.com/dev-sec/ansible-collection-hardening
    OS_HARDEN: false
    # Time source servers [important: clocks on all cluster machines must be in sync]
    ntp_servers:
      - "ntp1.aliyun.com"
      - "time1.cloud.tencent.com"
      - "0.cn.pool.ntp.org"
    # Network segment allowed to sync time internally, e.g. "10.0.0.0/8"; all allowed by default
    local_network: "0.0.0.0/0"
    ############################
    # role:deploy
    ############################
    # default: ca will expire in 100 years
    # default: certs issued by the ca will expire in 50 years
    CA_EXPIRY: "876000h"
    CERT_EXPIRY: "438000h"
    # kubeconfig parameters
    CLUSTER_NAME: "cluster1"
    CONTEXT_NAME: "context-{{ CLUSTER_NAME }}"
    ############################
    # role:etcd
    ############################
    # A separate WAL directory avoids disk I/O contention and improves performance
    ETCD_DATA_DIR: "/var/lib/etcd"
    ETCD_WAL_DIR: ""
    ############################
    # role:runtime [containerd,docker]
    ############################
    # ------------------------------------------- containerd
    # [.] enable registry mirrors
    ENABLE_MIRROR_REGISTRY: true
    # [containerd] base (sandbox) container image
    SANDBOX_IMAGE: "easzlab/pause-amd64:3.4.1"
    # [containerd] persistent storage directory
    CONTAINERD_STORAGE_DIR: "/var/lib/containerd"
    # ------------------------------------------- docker
    # [docker] container storage directory
    DOCKER_STORAGE_DIR: "/var/lib/docker"
    # [docker] enable the RESTful API
    ENABLE_REMOTE_API: false
    # [docker] trusted HTTP registries
    INSECURE_REG: '["127.0.0.1/8"]'
    ############################
    # role:kube-master
    ############################
    # Certificate hosts for the master nodes; more IPs and domains can be added (e.g. a public IP and domain)
    MASTER_CERT_HOSTS:
      - "10.1.1.1"
      - "k8s.test.io"
      #- "www.test.com"
    # Pod subnet mask length per node (determines how many pod IPs each node can allocate)
    # If flannel uses the --kube-subnet-mgr flag, it reads this setting to assign each node's pod subnet
    # https://github.com/coreos/flannel/issues/847
    NODE_CIDR_LEN: 24
    ############################
    # role:kube-node
    ############################
    # kubelet root directory
    KUBELET_ROOT_DIR: "/var/lib/kubelet"
    # Maximum number of pods per node
    MAX_PODS: 400
    # Resources reserved for kube components (kubelet, kube-proxy, dockerd, etc.)
    # See templates/kubelet-config.yaml.j2 for the values
    KUBE_RESERVED_ENABLED: "yes"
    # Upstream k8s advises against enabling system-reserved casually unless long-term monitoring
    # has shown you the system's resource usage; the reservation should also grow with uptime.
    # See templates/kubelet-config.yaml.j2 for the values.
    # The system reservation assumes a 4c/8g VM with a minimal OS install; increase it on powerful physical machines.
    # apiserver and other components briefly use more resources during installation; reserve at least 1 GB of memory.
    SYS_RESERVED_ENABLED: "no"
    # haproxy balance mode
    BALANCE_ALG: "roundrobin"
    ############################
    # role:network [flannel,calico,cilium,kube-ovn,kube-router]
    ############################
    # ------------------------------------------- flannel
    # [flannel] flannel backend: "host-gw", "vxlan", etc.
    FLANNEL_BACKEND: "vxlan"
    DIRECT_ROUTING: false
    # [flannel] flanneld_image: "quay.io/coreos/flannel:v0.10.0-amd64"
    flannelVer: "v0.13.0-amd64"
    flanneld_image: "easzlab/flannel:{{ flannelVer }}"
    # [flannel] offline image tarball
    flannel_offline: "flannel_{{ flannelVer }}.tar"
    # ------------------------------------------- calico
    # [calico] setting CALICO_IPV4POOL_IPIP="off" can improve network performance; see docs/setup/calico.md for the constraints
    CALICO_IPV4POOL_IPIP: "Always"
    # [calico] host IP used by calico-node; BGP peers connect through it. Set it manually or let it be auto-detected
    IP_AUTODETECTION_METHOD: "can-reach={{ groups['kube_master'][0] }}"
    # [calico] calico network backend: brid, vxlan, none
    CALICO_NETWORKING_BACKEND: "brid"
    # [calico] supported calico versions: [v3.3.x] [v3.4.x] [v3.8.x] [v3.15.x]
    calico_ver: "v3.15.3"
    # [calico] calico major version
    calico_ver_main: "{{ calico_ver.split('.')[0] }}.{{ calico_ver.split('.')[1] }}"
    # [calico] offline image tarball
    calico_offline: "calico_{{ calico_ver }}.tar"
    # ------------------------------------------- cilium
    # [cilium] number of etcd nodes created by CILIUM_ETCD_OPERATOR: 1,3,5,7...
    ETCD_CLUSTER_SIZE: 1
    # [cilium] image version
    cilium_ver: "v1.4.1"
    # [cilium] offline image tarball
    cilium_offline: "cilium_{{ cilium_ver }}.tar"
    # ------------------------------------------- kube-ovn
    # [kube-ovn] node for the OVN DB and OVN Control Plane; defaults to the first master
    OVN_DB_NODE: "{{ groups['kube_master'][0] }}"
    # [kube-ovn] offline image tarball
    kube_ovn_ver: "v1.5.3"
    kube_ovn_offline: "kube_ovn_{{ kube_ovn_ver }}.tar"
    # ------------------------------------------- kube-router
    # [kube-router] public clouds impose restrictions and usually need ipinip always on; on your own infrastructure "subnet" can be used
    OVERLAY_TYPE: "full"
    # [kube-router] NetworkPolicy support switch
    FIREWALL_ENABLE: "true"
    # [kube-router] kube-router image version
    kube_router_ver: "v0.3.1"
    busybox_ver: "1.28.4"
    # [kube-router] kube-router offline image tarballs
    kuberouter_offline: "kube-router_{{ kube_router_ver }}.tar"
    busybox_offline: "busybox_{{ busybox_ver }}.tar"
    ############################
    # role:cluster-addon
    ############################
    # install coredns automatically
    dns_install: "yes"
    corednsVer: "1.8.0"
    ENABLE_LOCAL_DNS_CACHE: no
    dnsNodeCacheVer: "1.17.0"
    # local dns cache address
    LOCAL_DNS_CACHE: "169.254.20.10"
    # install metrics server automatically
    metricsserver_install: "no"
    metricsVer: "v0.3.6"
    # install dashboard automatically
    dashboard_install: "no"
    dashboardVer: "v2.2.0"
    dashboardMetricsScraperVer: "v1.0.6"
    # install ingress automatically
    ingress_install: "no"
    ingress_backend: "traefik"
    traefik_chart_ver: "9.12.3"

Because no certificates are installed for the load balancers, comment out or delete the load-balancer role in this playbook so that the installation does not report an error (the error does not actually break the installation).

    [root@k8s-master1 ~]# vim /etc/kubeasz/playbooks/01.prepare.yml
    - hosts:
      - kube_master
      - kube_node
      - etcd
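The hosts file requires that `SERVICE_CIDR` and `CLUSTER_CIDR` do not overlap each other or the node network. A quick check before running the playbooks can catch a bad choice early. The sketch below is an illustration only: `ip_to_int` and `cidrs_overlap` are hypothetical helper names, and it assumes plain IPv4 addresses without leading zeros.

```shell
#!/usr/bin/env bash
# Sketch: check whether two IPv4 CIDR ranges overlap.
# ip_to_int and cidrs_overlap are hypothetical helper names.

# Convert a dotted-quad address to a 32-bit integer
ip_to_int() {
    local a b c d
    IFS=. read -r a b c d <<< "$1"
    echo $(( (a << 24) | (b << 16) | (c << 8) | d ))
}

# Return 0 (true) when the two CIDRs share at least one address
cidrs_overlap() {
    local net1="${1%/*}" len1="${1#*/}" net2="${2%/*}" len2="${2#*/}"
    # Two ranges overlap exactly when they agree on the shorter prefix
    local len=$(( len1 < len2 ? len1 : len2 ))
    local mask=$(( len == 0 ? 0 : (0xFFFFFFFF << (32 - len)) & 0xFFFFFFFF ))
    (( ( $(ip_to_int "$net1") & mask ) == ( $(ip_to_int "$net2") & mask ) ))
}
```

With the values used here, `cidrs_overlap 10.100.0.0/16 10.200.0.0/16` returns non-zero (no overlap), which is the desired result.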


3. Install the core components on the master and node hosts

    # Run ezctl from /etc/kubeasz to install each component (ezctl --help lists the commands)
    # The steps can be run one at a time or all at once; here I run them one by one
    [root@k8s-master1 kubeasz]# ezctl setup --help
    Usage: ezctl setup <cluster> <step>
    available steps:
        01  prepare            to prepare CA/certs & kubeconfig & other system settings
        02  etcd               to setup the etcd cluster
        03  container-runtime  to setup the container runtime(docker or containerd)
        04  kube-master        to setup the master nodes
        05  kube-node          to setup the worker nodes
        06  network            to setup the network plugin
        07  cluster-addon      to setup other useful plugins
        90  all                to run 01~07 all at once
        10  ex-lb              to install external loadbalance for accessing k8s from outside
        11  harbor             to install a new harbor server or to integrate with an existed one
    examples: ./ezctl setup test-k8s 01  (or ./ezctl setup test-k8s prepare)
              ./ezctl setup test-k8s 02  (or ./ezctl setup test-k8s etcd)
              ./ezctl setup test-k8s all
              ./ezctl setup test-k8s 04 -t restart_master
    # Preparation: certificates, configuration files, and so on
    [root@k8s-master1 kubeasz]# ezctl setup k8s-01 01
    # Install the etcd cluster
    [root@k8s-master1 kubeasz]# ezctl setup k8s-01 02
    # Install the container runtime (docker)
    [root@k8s-master1 kubeasz]# ezctl setup k8s-01 03
    # Install the master nodes
    [root@k8s-master1 kubeasz]# ezctl setup k8s-01 04
    # Verify the installation
    [root@k8s-master1 kubeasz]# kubectl get node
    NAME           STATUS                     ROLES    AGE   VERSION
    172.17.8.101   Ready,SchedulingDisabled   master   14d   v1.21.0
    172.17.8.102   Ready,SchedulingDisabled   master   14d   v1.21.0
    # Install the worker nodes
    [root@k8s-master1 kubeasz]# ezctl setup k8s-01 05
    # Verify the installation
    [root@k8s-master1 kubeasz]# kubectl get node
    NAME           STATUS                     ROLES    AGE   VERSION
    172.17.8.101   Ready,SchedulingDisabled   master   14d   v1.21.0
    172.17.8.102   Ready,SchedulingDisabled   master   14d   v1.21.0
    172.17.8.107   Ready                      node     14d   v1.21.0
    172.17.8.108   Ready                      node     14d   v1.21.0
    # Install the k8s network (I use calico)
    [root@k8s-master1 kubeasz]# ezctl setup k8s-01 06
    # Verify that calico is healthy
    [root@k8s-master1 kubeasz]# calicoctl node status
    Calico process is running.
    IPv4 BGP status
    +--------------+-------------------+-------+----------+-------------+
    | PEER ADDRESS |     PEER TYPE     | STATE |  SINCE   |    INFO     |
    +--------------+-------------------+-------+----------+-------------+
    | 172.17.8.102 | node-to-node mesh | up    | 01:11:27 | Established |
    | 172.17.8.107 | node-to-node mesh | up    | 01:11:27 | Established |
    | 172.17.8.108 | node-to-node mesh | up    | 01:11:28 | Established |
    +--------------+-------------------+-------+----------+-------------+
    IPv6 BGP status
    No IPv6 peers found.
    # Test network connectivity (in the output below, IP is the pod IP and NODE is the node IP)
    [root@k8s-master1 ~]# kubectl get pod -o wide
    NAME        READY   STATUS    RESTARTS   AGE   IP             NODE           NOMINATED NODE   READINESS GATES
    net-test1   1/1     Running   3          14d   10.200.36.72   172.17.8.107   <none>           <none>
    net-test2   1/1     Running   3          14d   10.200.36.71   172.17.8.107   <none>           <none>
    [root@k8s-master1 ~]# kubectl exec -it net-test1 sh
    kubectl exec [POD] [COMMAND] is DEPRECATED and will be removed in a future version. Use kubectl exec [POD] -- [COMMAND] instead.
    / # ping 10.200.36.71
    PING 10.200.36.71 (10.200.36.71): 56 data bytes
    64 bytes from 10.200.36.71: seq=0 ttl=63 time=0.185 ms
    64 bytes from 10.200.36.71: seq=1 ttl=63 time=0.070 ms
    ^C
    --- 10.200.36.71 ping statistics ---
    2 packets transmitted, 2 packets received, 0% packet loss
    round-trip min/avg/max = 0.070/0.127/0.185 ms
    / #
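When the steps are run one by one, a failure in an early step should stop the sequence before later steps are attempted. A minimal sketch of such a wrapper follows; `run_setup_steps` and the `EZCTL` variable are hypothetical names, and the wrapper only relies on the `ezctl setup <cluster> <step>` usage shown above.

```shell
#!/usr/bin/env bash
# Sketch: run kubeasz setup steps in order and stop at the first failure.
# run_setup_steps and EZCTL are hypothetical names; EZCTL can point at a
# stub for a dry run.
EZCTL="${EZCTL:-./ezctl}"

# Usage: run_setup_steps <cluster> <step>...
run_setup_steps() {
    local cluster="$1" step
    shift
    for step in "$@"; do
        echo ">>> ${EZCTL} setup ${cluster} ${step}"
        if ! "$EZCTL" setup "$cluster" "$step"; then
            echo "step ${step} failed; stopping" >&2
            return 1
        fi
    done
}
```

From `/etc/kubeasz`, `run_setup_steps k8s-01 01 02 03 04 05 06` would run the same six steps as above, leaving room to check `kubectl get node` afterwards.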


Upgrade steps (in production, upgrades are usually done one minor version at a time)

1. Scale out

    # Add a master
    [root@k8s-master1 ~]# ezctl add-master k8s-01 172.17.8.103
    # Verify
    [root@k8s-master1 ~]# kubectl get node -A
    NAME           STATUS                     ROLES    AGE   VERSION
    172.17.8.101   Ready,SchedulingDisabled   master   14d   v1.21.0
    172.17.8.102   Ready,SchedulingDisabled   master   14d   v1.21.0
    172.17.8.103   Ready,SchedulingDisabled   master   25m   v1.21.0
    172.17.8.107   Ready                      node     14d   v1.21.0
    172.17.8.108   Ready                      node     14d   v1.21.0
    # Add a worker node
    [root@k8s-master1 ~]# ezctl add-node k8s-01 172.17.8.109
    # Verify
    [root@k8s-master1 ~]# kubectl get node -A
    NAME           STATUS                     ROLES    AGE   VERSION
    172.17.8.101   Ready,SchedulingDisabled   master   14d   v1.21.0
    172.17.8.102   Ready,SchedulingDisabled   master   14d   v1.21.0
    172.17.8.103   Ready,SchedulingDisabled   master   25m   v1.21.0
    172.17.8.107   Ready                      node     14d   v1.21.0
    172.17.8.108   Ready                      node     14d   v1.21.0
    172.17.8.109   Ready
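Reading the STATUS column by eye works for six nodes, but the same check can be scripted. The sketch below filters `kubectl get node` output for nodes that are not Ready; `not_ready_nodes` is a hypothetical helper that reads the table on stdin, so it can be tried on saved output without a cluster.

```shell
#!/usr/bin/env bash
# Sketch: print the names of nodes whose STATUS does not start with "Ready".
# not_ready_nodes is a hypothetical helper name; it reads the output of
# `kubectl get node` on stdin.
not_ready_nodes() {
    # Skip the header row; STATUS may be e.g. "Ready,SchedulingDisabled"
    awk 'NR > 1 && $2 !~ /^Ready/ { print $1 }'
}
```

Used as `kubectl get node | not_ready_nodes`, an empty result means every listed node reports Ready.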


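Upgrade the version (v1.21.0 to v1.21.12)

Upgrade approach

- Download the upgrade package and unpack it to a chosen directory

- Stop the services on the master being upgraded

- Stop the load balancer from forwarding to the master being upgraded

- Copy the new kube-apiserver, kube-controller-manager, kubectl, kubelet, kube-proxy and kube-scheduler binaries into the directory the master starts them from (/usr/local/bin in my case)

- Start the master's services

- Re-enable load balancer forwarding to the upgraded master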
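The steps listed above can be written down as a dry run that only prints the commands for one master, which makes the order easy to review before anything is executed. This is a sketch under assumptions: `print_master_upgrade_plan` is a hypothetical helper, the source directory passed in is assumed to be the `server/bin` directory of the unpacked tarball, and the load balancer steps stay as comments because the source does not say how forwarding is toggled.

```shell
#!/usr/bin/env bash
# Sketch: print (dry run) the per-master upgrade commands in the order
# listed above. print_master_upgrade_plan is a hypothetical helper name.
COMPONENTS="kube-apiserver kube-controller-manager kube-scheduler kube-proxy kubelet"

# Usage: print_master_upgrade_plan <master-ip> <dir-with-new-binaries> [bin-dir]
print_master_upgrade_plan() {
    local master="$1" src_dir="$2" bin_dir="${3:-/usr/local/bin}" c
    echo "ssh ${master} systemctl stop ${COMPONENTS}"
    echo "# take ${master} out of the load balancer backend"
    # kubectl has no service but is replaced together with the others
    for c in ${COMPONENTS} kubectl; do
        echo "scp ${src_dir}/${c} ${master}:${bin_dir}/"
    done
    echo "ssh ${master} systemctl start ${COMPONENTS}"
    echo "# put ${master} back into the load balancer backend"
}
```

For example, `print_master_upgrade_plan 172.17.8.103 /app/kubernetes/kubernetes/server/bin` prints ten lines: one stop command, six copy commands, one start command and the two load balancer reminders.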

升级master版本

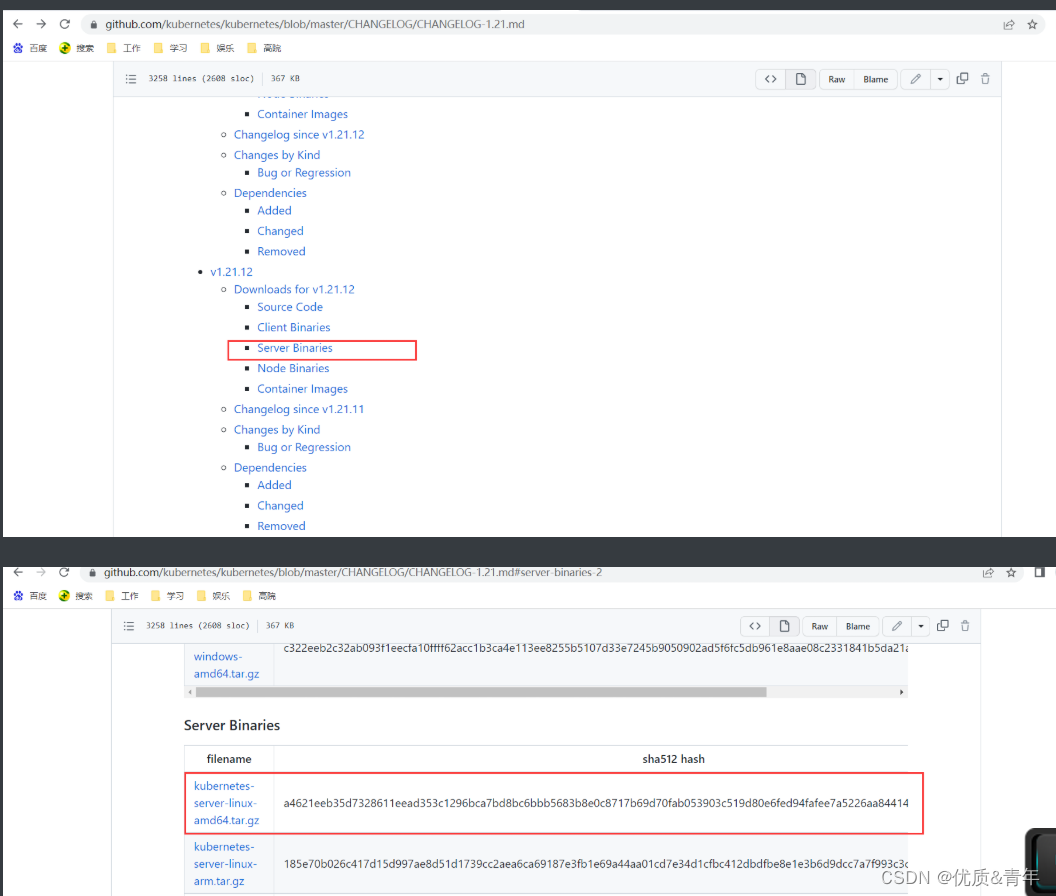

1、下载升级安装包

[root@k8s-master1]#mkdir /app/kubernetes -p [root@k8s-master1]#cd /app/kubernetes -p [root@k8s-master1]#wget https://dl.k8s.io/v1.21.12/kubernetes-server-linux-amd64.tar.gz [root@k8s-master1]#tar xvf kubernetes-server-linux-amd64.tar.gz [root@k8s-master1 server]#pwd /app/kubernetes/kubernetes/server- 1
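Since upgrades are normally done within one minor version or one minor version at a time, the target version can be sanity-checked against the running one before anything is downloaded. The following is a sketch only: `upgrade_allowed` is a hypothetical helper, and it encodes just the rule of thumb stated in this article, not an official Kubernetes policy check.

```shell
#!/usr/bin/env bash
# Sketch: refuse an upgrade that changes the major version, goes backwards,
# or skips a minor version. upgrade_allowed is a hypothetical helper name.
# Usage: upgrade_allowed <current-version> <target-version>
upgrade_allowed() {
    local cur="${1#v}" new="${2#v}"
    local cmaj cmin cpat nmaj nmin npat
    IFS=. read -r cmaj cmin cpat <<< "$cur"
    IFS=. read -r nmaj nmin npat <<< "$new"
    # Same major version only
    (( cmaj == nmaj )) || return 1
    if (( nmin == cmin )); then
        # Patch upgrade within one minor version
        (( npat > cpat ))
    else
        # Otherwise allow exactly one minor version forward
        (( nmin == cmin + 1 ))
    fi
}
```

Here `upgrade_allowed v1.21.0 v1.21.12` succeeds, while `upgrade_allowed v1.21.0 v1.23.0` fails because it skips v1.22.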


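On a master node the following binaries need to be upgraded: kube-apiserver, kube-controller-manager, kubectl, kubelet, kube-proxy and kube-scheduler.

Of these, kube-apiserver, kube-controller-manager, kubelet, kube-proxy and kube-scheduler run as services and must be stopped first.

    # Stop the services (on the master being upgraded)
    [root@k8s-master1 server]# systemctl stop kube-apiserver kube-proxy kube-controller-manager kube-scheduler kubelet
    # Stop the load balancer from forwarding to the master being upgraded
    # Copy the upgraded binaries into the target directory
    [root@k8s-master1 bin]# scp kube-apiserver kube-proxy kube-scheduler kube-controller-manager kubelet kubectl 172.17.8.103:/usr/local/bin/
    # Start the services
    [root@k8s-master1 ~]# systemctl start kube-apiserver kube-proxy kube-scheduler kube-controller-manager kubelet
    # Re-enable load balancer forwarding to the upgraded master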


Upgrading the worker nodes (omitted): the procedure is the same as for the masters.


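There may be a compatibility problem between CentOS 7 and the kubelet from Kubernetes v1.21.12: the result was not what I expected, so I did not take a screenshot. For the same reason I have not included a screenshot of the node upgrade either; the principle is the same. This is my second time studying k8s, and it clearly went much better than the first. Reviewing what you have learned teaches you something new, so I will keep at it.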


- Original article: https://blog.csdn.net/weixin_58519482/article/details/127427610